背景

- 虚拟机安装 Hadoop 3.1.3,并运行了 HDFS

- 将网上查询到的资料的所有解决方法都试了一遍,下面这个解决方案成功解决了我的问题。

报错情况

- 启动HDFS后,执行统计词频实例wordcount时,显示Block受损。

异常信息

Error: java.io.IOException: org.apache.hadoop.hdfs.CannotObtainBlockLengthException: Cannot obtain block length for LocatedBlock{BP-1982579562-192.168.xxx.32-1629880080614:blk_1083851475_10110700; getBlockSize()=29733; corrupt=false; offset=0; locs=[DatanodeInfoWithStorage[192.168.114.33:50010,DS-c7e1e9b5-cea8-43cb-87a4-f429602b0e03,DISK], DatanodeInfoWithStorage[192.168.114.35:50010,DS-79ec8e0d-bb51-4779-aee8-53d8a98809d6,DISK], DatanodeInfoWithStorage[192.168.114.32:50010,DS-cf7e207c-0e1d-4b65-87f7-608450271039,DISK]]} of /log_collection/ods/ods_xxxx_log/dt=2021-11-24/log.1637744364144.lzo at org.apache.hadoop.hive.io.HiveIOExceptionHandlerChain.handleRecordReaderCreationException(HiveIOExceptionHandlerChain.java:97) at org.apache.hadoop.hive.io.HiveIOExceptionHandlerUtil.handleRecordReaderCreationException(HiveIOExceptionHandlerUtil.java:57) at org.apache.hadoop.hive.ql.io.HiveInputFormat.getRecordReader(HiveInputFormat.java:420) at org.apache.hadoop.mapred.MapTask$TrackedRecordReader.<init>(MapTask.java:175) at org.apache.hadoop.mapred.MapTask.runOldMapper(MapTask.java:444) at org.apache.hadoop.mapred.MapTask.run(MapTask.java:349) at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:174) at java.security.AccessController.doPrivileged(Native Method) at javax.security.auth.Subject.doAs(Subject.java:422) at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1729) at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:168) Caused by: org.apache.hadoop.hdfs.CannotObtainBlockLengthException: Cannot obtain block length for LocatedBlock{BP-1982579562-192.168.114.32-1629880080614:blk_1083851475_10110700; getBlockSize()=29733; corrupt=false; offset=0; locs=[DatanodeInfoWithStorage[192.168.114.33:50010,DS-c7e1e9b5-cea8-43cb-87a4-f429602b0e03,DISK], DatanodeInfoWithStorage[192.168.114.35:50010,DS-79ec8e0d-bb51-4779-aee8-53d8a98809d6,DISK], DatanodeInfoWithStorage[192.168.114.32:50010,DS-cf7e207c-0e1d-4b65-87f7-608450271039,DISK]]} of /log_collection/ods/ods_xxxx_log/dt=2021-11-24/log.1637744364144.lzo at org.apache.hadoop.hdfs.DFSInputStream.readBlockLength(DFSInputStream.java:440) at org.apache.hadoop.hdfs.DFSInputStream.getLastBlockLength(DFSInputStream.java:349) at org.apache.hadoop.hdfs.DFSInputStream.fetchLocatedBlocksAndGetLastBlockLength(DFSInputStream.java:330) at org.apache.hadoop.hdfs.DFSInputStream.openInfo(DFSInputStream.java:230) at org.apache.hadoop.hdfs.DFSInputStream.<init>(DFSInputStream.java:196) at org.apache.hadoop.hdfs.DFSClient.openInternal(DFSClient.java:1048) at org.apache.hadoop.hdfs.DFSClient.open(DFSClient.java:1011) at org.apache.hadoop.hdfs.DistributedFileSystem$4.doCall(DistributedFileSystem.java:321) at org.apache.hadoop.hdfs.DistributedFileSystem$4.doCall(DistributedFileSystem.java:317) at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81) at org.apache.hadoop.hdfs.DistributedFileSystem.open(DistributedFileSystem.java:329) at org.apache.hadoop.fs.FileSystem.open(FileSystem.java:899) at

解决

初步检查

- 查看受损模块

运行代码:hadoop fsck /你的path 将报错中file后面的路径粘贴过来即可 - 运行后会显示相关文件的受损信息

/你的path: MISSING 1 blocks of total size 69 B.Status: CORRUPT

Total size: 69 B

Total dirs: 0

Total files: 1

Total symlinks: 0

Total blocks (validated): 1 (avg. block size 69 B)

********************************

CORRUPT FILES: 1

MISSING BLOCKS: 1

MISSING SIZE: 69 B

CORRUPT BLOCKS: 1

********************************

Minimally replicated blocks: 0 (0.0 %)

Over-replicated blocks: 0 (0.0 %)

Under-replicated blocks: 0 (0.0 %)

Mis-replicated blocks: 1 (100.0 %)

Default replication factor: 3

Average block replication: 0.0

Corrupt blocks: 1

Missing replicas: 0

Number of data-nodes: 31

Number of racks: 2

FSCK ended at Thu Apr 14 13:37:15 CST 2022 in 25 milliseconds

The filesystem under path '/你的path' is CORRUPT

- CORRUPT说明文件受损

CORRUPT BLOCKS : 1 #说明有1个受损文件块

MISSING BLOCKS : 1 #说明有一个丢失文件块

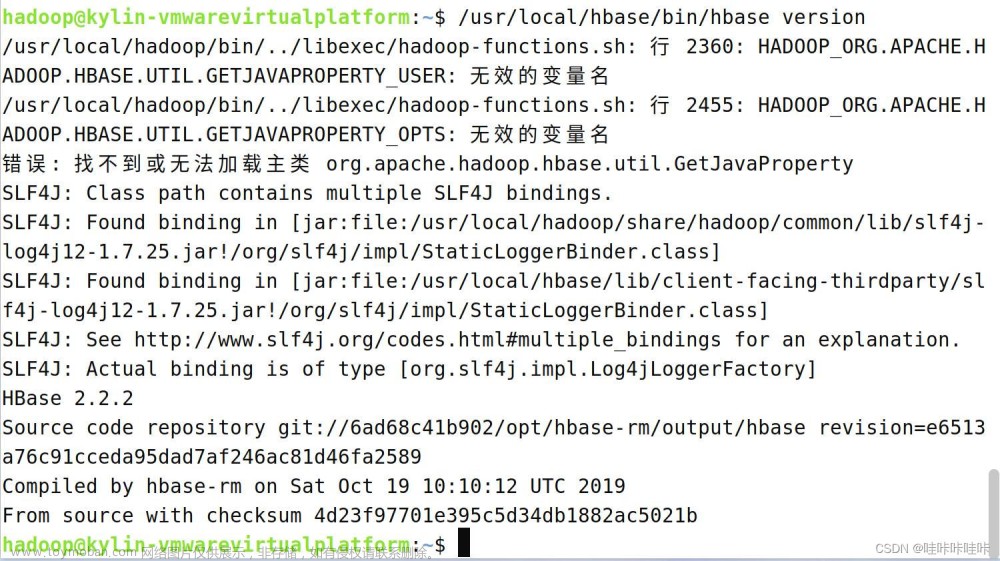

如下图:

进一步检查

由于有可能当前路径下的受损block远不止一个,而第一次查询只查出了一个block,可再次运行下方代码找到当前路径下所有的受损文件块。

hadoop fsck /path -list-corruptfileblocks

运行后可以输出当前路径下所有的受损文件块

删除损坏的block

运行下方代码可一次性将所有损坏文件块删除。文章来源:https://www.toymoban.com/news/detail-716220.html

hadoop fsck /path -delete

删除后再次运行实例就不会再报错啦。文章来源地址https://www.toymoban.com/news/detail-716220.html

到了这里,关于关于unbuntu启动hadoop时报错org.apache.hadoop.hdfs.BlockMissingException: Could not obtain block的解决方案的文章就介绍完了。如果您还想了解更多内容,请在右上角搜索TOY模板网以前的文章或继续浏览下面的相关文章,希望大家以后多多支持TOY模板网!