Deploying Kubernetes 1.27.4 with kubeadm

Environment

| IP | Hostname | Resources | OS version |
|---|---|---|---|
| 192.168.117.170 | k8s-master | 2 CPU, 2 GB RAM, 200 GB disk | CentOS 7.9 |
| 192.168.117.171 | k8s-node1 | 2 CPU, 2 GB RAM, 200 GB disk | CentOS 7.9 |
| 192.168.117.172 | k8s-node2 | 2 CPU, 2 GB RAM, 200 GB disk | CentOS 7.9 |

Configure local name resolution and set the hostnames

Do this on all three hosts.

vim /etc/hosts
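
The entries to add follow from the environment table. A minimal sketch, assuming the three addresses and hostnames listed there; the helper name `add_cluster_hosts` is hypothetical, and it takes the target file as an argument so it can be tried on a scratch file first:

```shell
# Hypothetical helper: append the cluster's name-resolution entries to a hosts file.
# Defaults to /etc/hosts; skips any entry whose hostname is already present.
add_cluster_hosts() {
  target="${1:-/etc/hosts}"
  while read -r ip name; do
    grep -qw "$name" "$target" 2>/dev/null || echo "$ip $name" >> "$target"
  done <<'EOF'
192.168.117.170 k8s-master
192.168.117.171 k8s-node1
192.168.117.172 k8s-node2
EOF
}
```

Running `add_cluster_hosts` as root on each of the three hosts gives the same result as editing the file by hand, and running it twice does not duplicate entries.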

Set the hostname

master

[root@k8s-master ~]# hostnamectl set-hostname k8s-master

node1

[root@k8s-node1 ~]# hostnamectl set-hostname k8s-node1

node2

[root@k8s-node2 ~]# hostnamectl set-hostname k8s-node2

Generate an SSH key on the master and copy it to the worker nodes for passwordless login

[root@k8s-master ~]# ssh-keygen

[root@k8s-master ~]# ssh-copy-id k8s-node1

[root@k8s-master ~]# ssh-copy-id k8s-node2

Enable IP forwarding

All nodes

## Add the configuration file

[root@k8s-master ~]# cat > /etc/sysctl.d/k8s.conf <<EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

vm.swappiness = 0

EOF

## Load the br_netfilter module and check that it is loaded

[root@k8s-master ~]# modprobe br_netfilter && lsmod | grep br_netfilter

br_netfilter 22256 0

bridge 151336 1 br_netfilter

## Apply the bridge-filter and kernel-forwarding configuration file

[root@k8s-master ~]# sysctl -p /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

vm.swappiness = 0
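
The `modprobe` above does not survive a reboot, and the `net.bridge.*` keys only exist while `br_netfilter` is loaded. A sketch of making the module load at boot through systemd's `modules-load.d` directory; the helper name `persist_br_netfilter` and the file name `k8s.conf` are assumptions chosen for illustration:

```shell
# Hypothetical helper: write a modules-load.d file listing br_netfilter so
# systemd-modules-load loads it on every boot.
# $1 = target directory (defaults to /etc/modules-load.d).
persist_br_netfilter() {
  conf_dir="${1:-/etc/modules-load.d}"
  mkdir -p "$conf_dir"
  echo br_netfilter > "$conf_dir/k8s.conf"
}
```

Call `persist_br_netfilter` as root on every node; the directory argument is only there so the helper can be tried somewhere harmless first.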

Configure the Aliyun yum repo

All nodes

[root@k8s-master ~]# cd /etc/yum.repos.d/

[root@k8s-master yum.repos.d]# mv CentOS-Base.repo CentOS-Base.repo.bak

[root@k8s-master yum.repos.d]# curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

[root@k8s-master yum.repos.d]# yum clean all && yum makecache

Set up IPVS

All nodes

[root@k8s-master ~]# yum -y install ipset ipvsadm

## Write the script that loads the required modules

[root@k8s-master ~]# cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack

EOF

## Make it executable

[root@k8s-master ~]# chmod 755 /etc/sysconfig/modules/ipvs.modules

## Run the module script

[root@k8s-master ~]# bash /etc/sysconfig/modules/ipvs.modules

## Verify that the modules are loaded

[root@k8s-master ~]# lsmod | grep -e ip_vs -e nf_conntrack

ip_vs_sh 12688 0

ip_vs_wrr 12697 0

ip_vs_rr 12600 0

ip_vs 145458 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack_netlink 36396 0

nfnetlink 14519 2 nf_conntrack_netlink

nf_conntrack_ipv4 15053 2

nf_defrag_ipv4 12729 1 nf_conntrack_ipv4

nf_conntrack 139264 7 ip_vs,nf_nat,nf_nat_ipv4,xt_conntrack,nf_nat_masquerade_ipv4,nf_conntrack_netlink,nf_conntrack_ipv4

libcrc32c 12644 4 xfs,ip_vs,nf_nat,nf_conntrack

Disable the firewall, SELinux, and swap

[root@k8s-master ~]# systemctl stop firewalld

[root@k8s-master ~]# setenforce 0

setenforce: SELinux is disabled

[root@k8s-master ~]# swapoff -a  ## temporary, until the next reboot

## To disable swap permanently, comment out the swap entry in /etc/fstab

#/dev/mapper/centos-swap swap swap defaults 0 0
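
The commands above only last until the next reboot. A sketch of making the changes persistent, assuming a `sed` that accepts `-i.bak -E` (GNU and BSD both do); the helper name `comment_out_swap` is hypothetical and takes the fstab path as an argument so it can be tried on a copy first:

```shell
# Hypothetical helper: comment out every active swap entry in an fstab-style file.
# A .bak copy of the original is kept next to it.
comment_out_swap() {
  fstab="${1:-/etc/fstab}"
  sed -i.bak -E 's/^([^#].*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab"
}

# On a real node, as root (left commented out here on purpose):
# comment_out_swap /etc/fstab
# systemctl disable firewalld      # keep the firewall off after a reboot
# sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config   # applies after a reboot
```

Lines that are already commented out are left alone, so the helper is safe to run more than once.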

Install Docker

All nodes

[root@k8s-master ~]# curl https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -o /etc/yum.repos.d/docker.repo

[root@k8s-master ~]# yum -y install docker-ce

Configure Docker registry mirrors and the cgroup driver

[root@k8s-master ~]# cat > /etc/docker/daemon.json <<EOF

{

"registry-mirrors": ["https://docker.mirrors.ustc.edu.cn",

"https://docker.m.daocloud.io",

"http://hub-mirrors.c.163.com"],

"max-concurrent-downloads": 10,

"log-driver": "json-file",

"log-level": "warn",

"data-root": "/var/lib/docker",

"exec-opts": ["native.cgroupdriver=systemd"]

}

EOF

Start Docker and enable it at boot

[root@k8s-master ~]# systemctl daemon-reload

[root@k8s-master ~]# systemctl enable --now docker
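
kubelet and the container runtime need to agree on the cgroup driver, so it is worth confirming that the `native.cgroupdriver=systemd` setting from `daemon.json` took effect. A small sketch using `docker info --format`; the helper name `docker_uses_systemd_cgroups` is hypothetical:

```shell
# Hypothetical helper: print the cgroup driver Docker reports and
# succeed only if it is "systemd".
docker_uses_systemd_cgroups() {
  driver="$(docker info --format '{{.CgroupDriver}}')"
  echo "cgroup driver: $driver"
  [ "$driver" = "systemd" ]
}
```

If the helper fails, recheck `/etc/docker/daemon.json` for syntax errors and restart Docker before continuing.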

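Install cri-dockerd

All nodes

Containerd, the successor to the Docker runtime, could be integrated directly with kubelet as early as Kubernetes 1.7, but most of us stuck with the default dockershim when deploying clusters because Docker was familiar. Starting with v1.24, kubelet removed dockershim entirely and talks only to CRI runtimes such as containerd; Docker Engine can still be integrated with Kubernetes through the cri-dockerd adapter. See the official documentation: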

https://kubernetes.io/zh-cn/docs/setup/production-environment/container-runtimes/#docker

What cri-dockerd is:

https://blog.51cto.com/u_16099281/6469310

## Download the release archive

[root@k8s-master ~]# wget https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.4/cri-dockerd-0.3.4.amd64.tgz

## Extract it

[root@k8s-master ~]# tar zxvf cri-dockerd-0.3.4.amd64.tgz

## Copy the binary into place

[root@k8s-master ~]# cp cri-dockerd/* /usr/bin/

## Send it to node1

[root@k8s-master ~]# scp cri-dockerd/* root@k8s-node1:/usr/bin/

## Send it to node2

[root@k8s-master ~]# scp cri-dockerd/* root@k8s-node2:/usr/bin/

## Set up systemd units to manage it

[root@k8s-master ~]# vim /usr/lib/systemd/system/cri-docker.service

[Unit]

Description=CRI Interface for Docker Application Container Engine

Documentation=https://docs.mirantis.com

After=network-online.target firewalld.service docker.service

Wants=network-online.target

Requires=cri-docker.socket

[Service]

Type=notify

ExecStart=/usr/bin/cri-dockerd --network-plugin=cni --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.7

ExecReload=/bin/kill -s HUP $MAINPID

TimeoutSec=0

RestartSec=2

Restart=always

StartLimitBurst=3

StartLimitInterval=60s

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TasksMax=infinity

Delegate=yes

KillMode=process

[Install]

WantedBy=multi-user.target

[root@k8s-master ~]# vim /usr/lib/systemd/system/cri-docker.socket

[Unit]

Description=CRI Docker Socket for the API

PartOf=cri-docker.service

[Socket]

ListenStream=%t/cri-dockerd.sock

SocketMode=0660

SocketUser=root

SocketGroup=docker

[Install]

WantedBy=sockets.target

[root@k8s-master ~]# systemctl daemon-reload

[root@k8s-master ~]# systemctl enable --now cri-docker

Created symlink from /etc/systemd/system/multi-user.target.wants/cri-docker.service to /usr/lib/systemd/system/cri-docker.service.

[root@k8s-master ~]# systemctl status cri-docker

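Install kubelet, kubeadm, and kubectl on all nodes (one gotcha: the Aliyun base repo configured earlier does not carry these packages, so one more repo has to be added)

## Add the repo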

[root@k8s-master ~]# cd /etc/yum.repos.d/

[root@k8s-master yum.repos.d]# vim kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

exclude=kubelet kubeadm kubectl

## Install the packages

[root@k8s-master ~]# yum -y install kubelet-1.27.4-0 kubeadm-1.27.4-0 kubectl-1.27.4-0 --disableexcludes=kubernetes

## Enable kubelet at boot

[root@k8s-master ~]# systemctl enable --now kubelet

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

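## kubelet cannot stay up before the cluster is initialized; check its state. The output below means it is waiting for kubeadm to configure it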

[root@k8s-master ~]# systemctl is-active kubelet

activating

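Initialize with kubeadm

## Parameter explanation

--image-repository registry.aliyuncs.com/google_containers: pull the control-plane images from the Aliyun registry

--kubernetes-version=v1.27.4: the Kubernetes version to deploy

--pod-network-cidr=10.10.20.0/24: the pod network CIDR

--cri-socket unix:///var/run/cri-dockerd.sock: path to the container runtime socket; this used to default to dockershim.sock and is now cri-dockerd.sock

Master node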

[root@k8s-master ~]# kubeadm init \

--image-repository registry.aliyuncs.com/google_containers \

--kubernetes-version v1.27.4 \

--pod-network-cidr=10.10.20.0/24 \

--cri-socket unix:///var/run/cri-dockerd.sock
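
One thing worth checking before running the command is how many nodes the pod CIDR can serve. The controller manager carves one per-node subnet out of `--pod-network-cidr`; assuming its default IPv4 node mask of /24 (an assumption about cluster defaults, not something stated in this article), the count is 2^(24 - prefix). A small sketch of that arithmetic, with the hypothetical helper name `node_subnets`:

```shell
# Hypothetical helper: number of per-node pod subnets a cluster CIDR can hold.
# $1 = prefix length of --pod-network-cidr, $2 = per-node mask size (default 24).
node_subnets() {
  cluster_prefix="$1"
  node_mask="${2:-24}"
  if [ "$cluster_prefix" -gt "$node_mask" ]; then
    echo 0
  else
    echo $(( 1 << (node_mask - cluster_prefix) ))
  fi
}

node_subnets 24   # the /24 used in this article
node_subnets 16   # a wider range such as a /16
```

With a /24 the result is 1, so a network plugin that relies on the per-node pod CIDR (flannel does) may leave additional nodes without a subnet. A wider range avoids that. Plugins with their own IPAM, such as Calico, are less affected.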

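## After a successful initialization, create the kubeconfig as instructed in the output, and record the join command for the worker nodes (the token and hash are specific to each run)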

kubeadm join 192.168.117.171:6443 --token jvb30p.4a9rnaqyls3ag0y5 \

--discovery-token-ca-cert-hash sha256:1a7cb15fbb63034879b8d1139de2d5e72f9a7c14027fe8daa16512825a48c920

Create the kubeconfig on the master

[root@k8s-master ~]# mkdir -p $HOME/.kube

[root@k8s-master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

If something goes wrong, run kubeadm reset (with the same --cri-socket flag) to wipe all configuration and data, then initialize again

Join the cluster

Run on every worker node

kubeadm join 192.168.117.171:6443 --token jvb30p.4a9rnaqyls3ag0y5 --discovery-token-ca-cert-hash sha256:1a7cb15fbb63034879b8d1139de2d5e72f9a7c14027fe8daa16512825a48c920 --cri-socket unix:///var/run/cri-dockerd.sock

Note the added --cri-socket unix:///var/run/cri-dockerd.sock parameter.
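
Bootstrap tokens expire (24 hours by default), so a join command recorded at initialization may no longer work when a node is added later. A sketch of regenerating it on the master with `kubeadm token create --print-join-command` and appending the cri-dockerd socket; the helper name `print_join_command` is hypothetical:

```shell
# Hypothetical helper: print a fresh join command with the cri-dockerd socket appended.
# Run on the master; kubeadm creates a new bootstrap token each time.
print_join_command() {
  join_cmd="$(kubeadm token create --print-join-command)" || return 1
  echo "$join_cmd --cri-socket unix:///var/run/cri-dockerd.sock"
}
```

The printed line can be pasted on a worker node as is.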

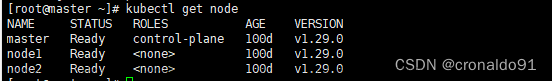

Check from the master node

[root@k8s-master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master NotReady control-plane 144m v1.27.4

k8s-node1 NotReady <none> 50s v1.27.4

k8s-node2 NotReady <none> 4s v1.27.4

Configure the network plugin

Pick one of the two network plugins below.

1. Download kube-flannel.yml on the master

curl -O https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Change the network CIDR in the file to the pod CIDR set at initialization
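
The CIDR change can also be made non-interactively. A sketch, assuming the downloaded manifest contains a `"Network": "<cidr>"` entry in its `net-conf.json` section (the stock value is `10.244.0.0/16`); check the file before relying on it. The helper name `set_flannel_network` is hypothetical:

```shell
# Hypothetical helper: point flannel's net-conf.json at the cluster's pod CIDR.
# $1 = path to kube-flannel.yml, $2 = pod CIDR passed to kubeadm init.
# A .bak copy of the original manifest is kept next to it.
set_flannel_network() {
  manifest="$1"
  pod_cidr="$2"
  sed -i.bak -E "s#(\"Network\":[[:space:]]*)\"[^\"]+\"#\1\"${pod_cidr}\"#" "$manifest"
  grep '"Network"' "$manifest"   # show the resulting line for a quick check
}
```

For the cluster in this article the call would be `set_flannel_network kube-flannel.yml 10.10.20.0/24`.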

Pull the required images in advance

## Pull on every node

docker pull docker.io/flannel/flannel-cni-plugin:v1.2.0

docker pull docker.io/flannel/flannel:v0.22.3

Add the following three lines to the tolerations list in kube-flannel.yml

- key: node.kubernetes.io/not-ready

  operator: Exists

  effect: NoSchedule

[root@k8s-master ~]# kubectl apply -f kube-flannel.yml

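2. Configure the Calico network plugin

Calico website: https://docs.tigera.io/calico/latest/getting-started/kubernetes/quickstart#install-calico

Download the two files (tigera-operator.yaml and custom-resources.yaml) and upload them to the master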

[root@k8s-master ~]# kubectl create -f tigera-operator.yaml

[root@k8s-master ~]# vim custom-resources.yaml

## The cidr value in this file must match the pod CIDR used when initializing the master

[root@k8s-master ~]# kubectl apply -f custom-resources.yaml

[root@k8s-master ~]# kubectl get pod -n calico-system

Verify the cluster

[root@k8s-master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane 92m v1.27.4

k8s-node1 Ready <none> 90m v1.27.4

k8s-node2 Ready <none> 89m v1.27.4

Check cluster health; the ideal state looks like this

[root@k8s-master ~]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy
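
Since `ComponentStatus` is deprecated, as the warning says, the API server's health endpoints are an alternative. A sketch using `kubectl get --raw`; the helper name `apiserver_ready` is hypothetical:

```shell
# Hypothetical helper: query the API server's /readyz endpoint, print the
# per-check report, and succeed only if the last line says the check passed.
apiserver_ready() {
  report="$(kubectl get --raw='/readyz?verbose')" || return 1
  echo "$report"
  echo "$report" | tail -n 1 | grep -q 'readyz check passed'
}
```

Each line of the report names one internal check (etcd, informer sync, and so on), which makes it easier to see what is unhealthy than the deprecated summary table.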

Check that the pods in kube-system are running

[root@k8s-master ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-7bdc4cb885-bx4cq 1/1 Running 0 93m

coredns-7bdc4cb885-th6ft 1/1 Running 0 93m

etcd-k8s-master 1/1 Running 0 93m

kube-apiserver-k8s-master 1/1 Running 0 93m

kube-controller-manager-k8s-master 1/1 Running 0 93m

kube-proxy-btqbd 1/1 Running 0 91m

kube-proxy-j4qxl 1/1 Running 0 90m

kube-proxy-qhx4j 1/1 Running 0 93m

kube-scheduler-k8s-master 1/1 Running 0 93m
