Overview

The environment is Spark 3.2.4 with Hadoop 3.2.4, so the package to download from the official website is spark-3.2.4-bin-hadoop3.2.tgz.

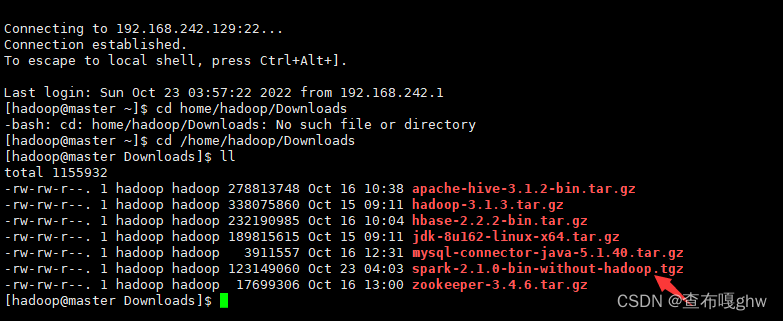

Before installing and deploying, download the Spark package: go to the Spark official website and click the Download button.

Spark usually has to interact with Hadoop, so download a package that bundles the Hadoop dependencies and pick the build that matches your Hadoop version.
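The package name follows a fixed pattern built from the Spark version and the Hadoop build profile. The sketch below shows how to compose it in a script. The helper names are hypothetical. The URL assumes the Apache release archive layout (archive.apache.org/dist/spark/spark-<version>/), which is an assumption and not a path taken from this article.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of Spark) for composing the package name
# and a download URL. The archive.apache.org layout is an assumption.

# $1 = Spark version, $2 = Hadoop build profile in the file name (e.g. 3.2)
spark_package() {
  echo "spark-$1-bin-hadoop$2.tgz"
}

# Full URL of the tarball in the (assumed) Apache release archive.
spark_archive_url() {
  echo "https://archive.apache.org/dist/spark/spark-$1/$(spark_package "$1" "$2")"
}
```

For example, `cd /data/soft && wget "$(spark_archive_url 3.2.4 3.2)"` would fetch spark-3.2.4-bin-hadoop3.2.tgz into the directory used later in this article.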

Machines

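Note: make sure the basic environment on these machines is ready: the firewall, passwordless SSH login, and the JDK.

These machines have been used before and their basic environment is already configured, so they are used as-is here.

For Linux machine configuration, refer to this link.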
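You can spot-check those prerequisites from hadoop01 with a small script. This is a hypothetical sketch, not part of Spark. It assumes OpenSSH and checks for a JDK at /data/soft/jdk1.8, the JAVA_HOME used later in spark-env.sh. The firewall line assumes a systemd distribution running firewalld, which is guessed from the CentOS-style shell prompts. It only reports that status and does not fail on it.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check (not part of Spark); run it on hadoop01.
# JDK_HOME defaults to the JAVA_HOME later written into spark-env.sh.
JDK_HOME="${JDK_HOME:-/data/soft/jdk1.8}"

check_node() {
  local host="$1" fw
  # BatchMode=yes makes ssh fail instead of prompting for a password,
  # so success means key-based (passwordless) login works.
  if ! ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true </dev/null >/dev/null 2>&1; then
    echo "$host: passwordless SSH FAILED"
    return 1
  fi
  # The JDK must exist at the same path on every node.
  if ! ssh -o BatchMode=yes "$host" "test -x '$JDK_HOME/bin/java'" </dev/null >/dev/null 2>&1; then
    echo "$host: no java at $JDK_HOME/bin/java"
    return 1
  fi
  # Informational only; assumes a systemd distribution using firewalld.
  # "|| true" because systemctl exits non-zero when the unit is inactive.
  fw=$(ssh -o BatchMode=yes "$host" 'systemctl is-active firewalld' </dev/null 2>/dev/null) || true
  echo "$host: OK (firewalld: ${fw:-unknown})"
}
```

Typical use: `for h in hadoop01 hadoop02 hadoop03 hadoop04; do check_node "$h"; done`.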

| Machine IP | Hostname |
|---|---|
| 10.32.xx.142 | hadoop01 |
| 10.32.xx.143 | hadoop02 |
| 10.32.xx.144 | hadoop03 |
| 10.32.xx.145 | hadoop04 |

Standalone configuration

Master node

First, configure hadoop01.

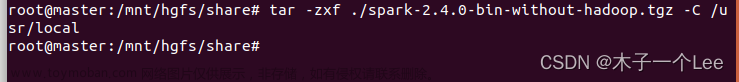

Extract the package

```shell
# Extract the package
[root@hadoop01 soft]# tar -zxvf spark-3.2.4-bin-hadoop3.2.tgz
[root@hadoop01 soft]# cd spark-3.2.4-bin-hadoop3.2
[root@hadoop01 spark-3.2.4-bin-hadoop3.2]# cd conf/
[root@hadoop01 conf]# ls
fairscheduler.xml.template log4j.properties.template metrics.properties.template spark-defaults.conf.template spark-env.sh.template workers.template
```

Configure spark-env.sh and workers

Note that the worker list template is named slaves.template in Spark 2.x, while newer Spark 3.x releases, including the 3.2.4 used here, name it workers.template.
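Scripts that must work with both naming schemes can check which template a release ships, instead of hard-coding the version. The function below is a hypothetical sketch of that idea and is not a Spark tool.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Spark): print the worker-list file name
# used by the Spark release whose conf directory is given, based on which
# template (or already-renamed file) is present.
worker_list_name() {
  local conf_dir="$1"
  if [[ -e "$conf_dir/workers.template" || -e "$conf_dir/workers" ]]; then
    echo workers
  elif [[ -e "$conf_dir/slaves.template" || -e "$conf_dir/slaves" ]]; then
    echo slaves
  else
    echo "no worker list template found in $conf_dir" >&2
    return 1
  fi
}
```

For this article's package, `worker_list_name conf` run from the Spark directory prints `workers`.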

```shell
# Rename spark-env.sh.template
[root@hadoop01 conf]# mv spark-env.sh.template spark-env.sh
[root@hadoop01 conf]# vi spark-env.sh
# Append these two lines to the end of spark-env.sh
export JAVA_HOME=/data/soft/jdk1.8
export SPARK_MASTER_HOST=hadoop01
# Rename workers.template
[root@hadoop01 conf]# mv workers.template workers
# Edit workers: replace the default localhost entry with one worker hostname per line
[root@hadoop01 conf]# vi workers
hadoop02
hadoop03
hadoop04
```
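The same two edits can also be done without vi, for example when provisioning by script. The sketch below is hypothetical and is not how the author did it. It copies the template instead of renaming it, appends the two exports only once so a rerun does not duplicate them, and overwrites workers so the default localhost entry disappears.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Spark): scripted equivalent of the vi
# edits above. Pass the conf directory of the unpacked Spark package.
write_spark_conf() {
  local conf_dir="$1"
  # Start spark-env.sh from the template if it does not exist yet.
  if [[ ! -f "$conf_dir/spark-env.sh" && -f "$conf_dir/spark-env.sh.template" ]]; then
    cp "$conf_dir/spark-env.sh.template" "$conf_dir/spark-env.sh"
  fi
  # Append the settings once; a rerun does not duplicate them.
  if ! grep -q '^export SPARK_MASTER_HOST=' "$conf_dir/spark-env.sh" 2>/dev/null; then
    cat >> "$conf_dir/spark-env.sh" <<'EOF'
export JAVA_HOME=/data/soft/jdk1.8
export SPARK_MASTER_HOST=hadoop01
EOF
  fi
  # Overwrite workers so the template's default localhost entry is gone.
  printf '%s\n' hadoop02 hadoop03 hadoop04 > "$conf_dir/workers"
}
```

On hadoop01 this would be called as `write_spark_conf /data/soft/spark-3.2.4-bin-hadoop3.2/conf`.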

Distribute

Copy the configured Spark directory to the other worker nodes, i.e. the three machines hadoop02, hadoop03 and hadoop04 mentioned above.

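```shell
# Copy the configured Spark directory to the other worker nodes.
# /data/soft must already exist on each worker, and the JAVA_HOME set in
# spark-env.sh (/data/soft/jdk1.8) must be valid there as well.
[root@hadoop01 soft]# scp -rq spark-3.2.4-bin-hadoop3.2 hadoop02:/data/soft/
[root@hadoop01 soft]# scp -rq spark-3.2.4-bin-hadoop3.2 hadoop03:/data/soft/
[root@hadoop01 soft]# scp -rq spark-3.2.4-bin-hadoop3.2 hadoop04:/data/soft/
```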
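The three scp commands can be derived from the workers file, so adding a node later means editing only that file. The loop below is a hypothetical sketch. The DRY_RUN switch is an invented convenience that prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Spark): copy the configured Spark
# directory to every host listed in its conf/workers file.
# DRY_RUN=1 only prints the scp commands.
distribute_spark() {
  local spark_dir="$1" dest="$2" host
  # "|| [[ -n $host ]]" also handles a last line without a trailing newline.
  while read -r host || [[ -n "$host" ]]; do
    # Skip blank lines and comments (such as the template's license header).
    [[ -z "$host" || "$host" == \#* ]] && continue
    if [[ "${DRY_RUN:-0}" == 1 ]]; then
      echo scp -rq "$spark_dir" "$host:$dest"
    else
      # </dev/null keeps scp/ssh from consuming the loop's input.
      scp -rq "$spark_dir" "$host:$dest" </dev/null || echo "copy to $host failed" >&2
    fi
  done < "$spark_dir/conf/workers"
}
```

Run from /data/soft, `distribute_spark spark-3.2.4-bin-hadoop3.2 /data/soft/` issues the same three copies as the manual commands.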

Start the cluster

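Start the Spark cluster from the Spark directory on hadoop01. Call the script by its relative path, sbin/start-all.sh, because Hadoop ships a script with the same name.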

```shell
[root@hadoop01 spark-3.2.4-bin-hadoop3.2]# ls
bin conf data examples jars kubernetes LICENSE licenses NOTICE python R README.md RELEASE sbin yarn
[root@hadoop01 spark-3.2.4-bin-hadoop3.2]# sbin/start-all.sh
starting org.apache.spark.deploy.master.Master, logging to /data/soft/spark-3.2.4-bin-hadoop3.2/logs/spark-root-org.apache.spark.deploy.master.Master-1-hadoop01.out
hadoop04: starting org.apache.spark.deploy.worker.Worker, logging to /data/soft/spark-3.2.4-bin-hadoop3.2/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-hadoop04.out
hadoop03: starting org.apache.spark.deploy.worker.Worker, logging to /data/soft/spark-3.2.4-bin-hadoop3.2/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-hadoop03.out
hadoop02: starting org.apache.spark.deploy.worker.Worker, logging to /data/soft/spark-3.2.4-bin-hadoop3.2/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-hadoop02.out
```

Verify

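```shell
# On the master (hadoop01): a Master process should be running
[root@hadoop01 spark-3.2.4-bin-hadoop3.2]# jps
10520 Master
12254 Jps
# On a worker (hadoop02; hadoop03 and hadoop04 should look the same)
[root@hadoop02 soft]# jps
4224 Worker
7132 Jps
```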
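Instead of logging in to each machine, you can run the jps check for all four nodes from hadoop01. The sketch below is hypothetical. It assumes passwordless SSH and that jps is on the PATH of non-interactive SSH sessions. It matches the process name exactly, because jps prints "<pid> <main class>" per line.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of Spark) for the jps check above.
# has_jvm NAME: succeed if jps output on stdin lists a JVM named exactly NAME.
has_jvm() {
  # Compare the second field exactly so "Worker" does not match "WorkerX".
  awk -v want="$1" '$2 == want { found = 1 } END { exit (found ? 0 : 1) }'
}

# Run on hadoop01: Master locally, Worker on each host via passwordless SSH.
check_cluster() {
  jps | has_jvm Master || echo "hadoop01: Master not running"
  local h
  for h in hadoop02 hadoop03 hadoop04; do
    ssh -o BatchMode=yes "$h" jps </dev/null 2>/dev/null | has_jvm Worker \
      || echo "$h: Worker not running"
  done
}
```

`check_cluster` prints nothing when all four nodes are up.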

You can also open port 8080 on the master node (http://hadoop01:8080) in a browser to view the cluster status.

Run a job

With verification done, submit a Spark job to test the cluster:

```shell
[root@hadoop01 spark-3.2.4-bin-hadoop3.2]# bin/spark-submit --class org.apache.spark.examples.SparkPi --master spark://hadoop01:7077 examples/jars/spark-examples_2.12-3.2.4.jar 2
```
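SparkPi estimates π. The trailing 2 is the number of slices (partitions) it splits the work into. In the default client deploy mode, the driver prints the result as a line beginning "Pi is roughly" on stdout, and Spark's log messages go to stderr. The wrapper below is a hypothetical sketch that keeps only the estimate, which is handy in a smoke-test script. The function names are invented for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of Spark) around the SparkPi example.
# pi_from_output: print the number from the first "Pi is roughly <x>" line
# on stdin; fail if no such line appears.
pi_from_output() {
  awk '/^Pi is roughly / { print $4; found = 1; exit } END { exit (found ? 0 : 1) }'
}

# run_sparkpi [SLICES]: submit SparkPi to the standalone master and print
# only the estimate. Run from the Spark directory on hadoop01; stderr
# (Spark's log output) is discarded.
run_sparkpi() {
  bin/spark-submit \
    --class org.apache.spark.examples.SparkPi \
    --master spark://hadoop01:7077 \
    examples/jars/spark-examples_2.12-3.2.4.jar "${1:-2}" 2>/dev/null \
    | pi_from_output
}
```

A non-zero exit status from `run_sparkpi` then signals that the job did not produce a result.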

Source: https://www.toymoban.com/news/detail-728134.html


Conclusion

That completes the setup of the Spark standalone cluster.
