When reposting, please credit the source: 小锋学长生活大爆炸 [xfxuezhang.cn]

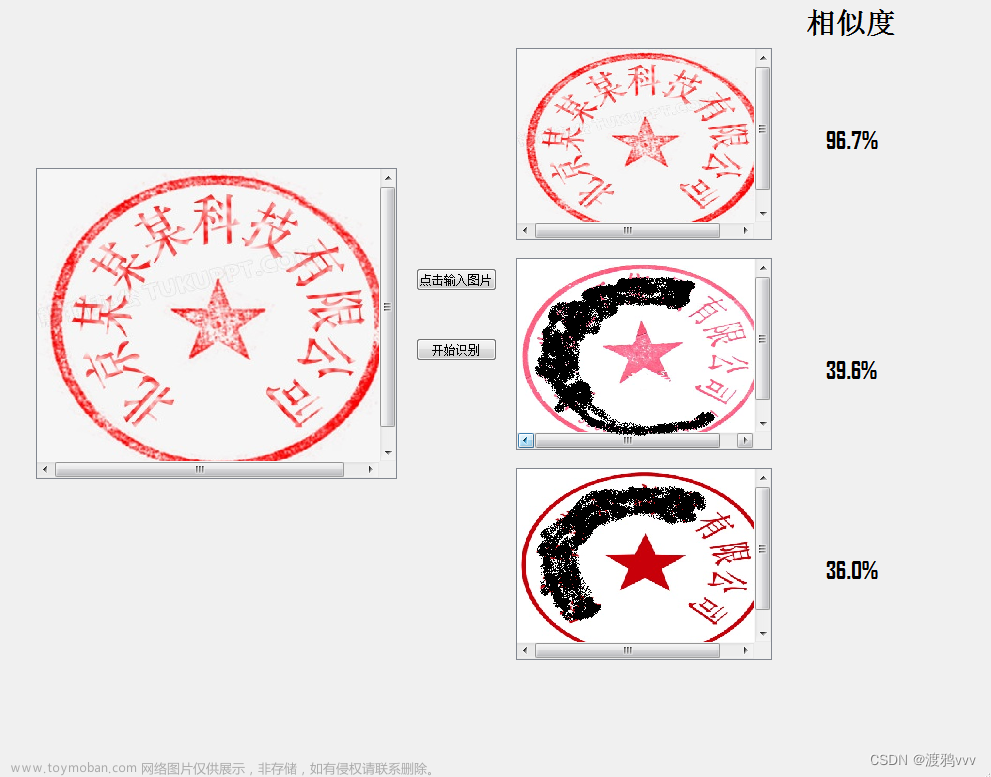

This code can be used in place of the built-in images.findImage function, but it may produce false matches, so use it with caution if you need highly reliable results.
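Because feature matching can produce false positives, one cautious pattern is to try exact template matching first and fall back to the feature-based match function listed below only when the exact search finds nothing. The following is a minimal sketch of that idea, not the author's method: locate is a hypothetical helper, and the two finder functions are passed in as parameters so the snippet runs without Auto.js.

```javascript
// Sketch: prefer the exact matcher, fall back to the feature matcher.
// exactFind and featureFind are injected (hypothetical parameters); both are
// expected to return an object with x and y, or null when nothing is found.
function locate(screen, template, exactFind, featureFind) {
    var exact = exactFind(screen, template);
    if (exact) {
        return { x: exact.x, y: exact.y, source: "exact" };
    }
    var fuzzy = featureFind(screen, template);
    if (fuzzy) {
        return { x: fuzzy.x, y: fuzzy.y, source: "feature" };
    }
    return null;
}

// Stand-in finders so the sketch runs anywhere. In Auto.js you would pass
// wrappers around images.findImage and match instead, for example:
//   function (s, t) { return images.findImage(s, t); }
//   function (s, t) { return match(s, t, 'sift'); }
var neverFinds = function () { return null; };
var alwaysFinds = function () { return { x: 12, y: 34 }; };

var result = locate("screen", "template", neverFinds, alwaysFinds);
console.log(result.source); // "feature"
```

The source field records which matcher produced the hit, so a script can treat feature-based results with more suspicion than exact ones.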

runtime.images.initOpenCvIfNeeded();
importClass(java.util.ArrayList);
importClass(java.util.List);
importClass(java.util.LinkedList);
importClass(org.opencv.imgproc.Imgproc);
importClass(org.opencv.imgcodecs.Imgcodecs);
importClass(org.opencv.core.Core);
importClass(org.opencv.core.Mat);
importClass(org.opencv.core.MatOfDMatch);
importClass(org.opencv.core.MatOfKeyPoint);
importClass(org.opencv.core.MatOfRect);
importClass(org.opencv.core.Size);
importClass(org.opencv.features2d.DescriptorMatcher);
importClass(org.opencv.features2d.Features2d);
importClass(org.opencv.features2d.SIFT);
importClass(org.opencv.features2d.ORB);
importClass(org.opencv.features2d.BRISK);
importClass(org.opencv.features2d.AKAZE);
importClass(org.opencv.features2d.BFMatcher);
importClass(org.opencv.core.MatOfPoint2f);
importClass(org.opencv.calib3d.Calib3d);
importClass(org.opencv.core.CvType);
importClass(org.opencv.core.Point);
importClass(org.opencv.core.Scalar);
importClass(org.opencv.core.MatOfByte);

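/*
 * Usage example:
 *   var image1 = captureScreen();      // large (scene) image
 *   var image2 = images.read('xxxx');  // small template image
 *   match(image1, image2);
 * The optional third argument selects the algorithm: 'sift', 'orb' or 'brisk';
 * any other value (or none) falls back to AKAZE.
 */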

function match(img1, img2, method) {
    console.time("匹配耗时"); // timer label, "match time"
    // Choose the feature detection algorithm
    var match_alg = null;
    if (method == 'sift') {
        match_alg = SIFT.create();
    } else if (method == 'orb') {
        match_alg = ORB.create();
    } else if (method == 'brisk') {
        match_alg = BRISK.create();
    } else {
        match_alg = AKAZE.create();
    }

    // IMREAD_COLOR always decodes to 3-channel BGR, so the BGR2GRAY conversion
    // below also works for grayscale inputs or inputs with an alpha channel
    var bigTrainImage = Imgcodecs.imdecode(new MatOfByte(images.toBytes(img1)), Imgcodecs.IMREAD_COLOR);
    var smallTrainImage = Imgcodecs.imdecode(new MatOfByte(images.toBytes(img2)), Imgcodecs.IMREAD_COLOR);
    // Convert to grayscale
    // console.log("converting to grayscale");
    var big_trainImage_gray = new Mat(bigTrainImage.rows(), bigTrainImage.cols(), CvType.CV_8UC1);
    var small_trainImage_gray = new Mat(smallTrainImage.rows(), smallTrainImage.cols(), CvType.CV_8UC1);
    Imgproc.cvtColor(bigTrainImage, big_trainImage_gray, Imgproc.COLOR_BGR2GRAY);
    Imgproc.cvtColor(smallTrainImage, small_trainImage_gray, Imgproc.COLOR_BGR2GRAY);

    // Detect keypoints on the grayscale images, the same images used by compute() below
    // console.log("detect");
    var big_keyPoints = new MatOfKeyPoint();
    var small_keyPoints = new MatOfKeyPoint();
    match_alg.detect(big_trainImage_gray, big_keyPoints);
    match_alg.detect(small_trainImage_gray, small_keyPoints);
    // Compute a descriptor for each keypoint
    // console.log("compute");
    // compute() allocates the output itself; descriptor width and type depend on
    // the algorithm (128 floats per keypoint applies to SIFT only)
    var big_trainDescription = new Mat();
    var small_trainDescription = new Mat();
    match_alg.compute(big_trainImage_gray, big_keyPoints, big_trainDescription);
    match_alg.compute(small_trainImage_gray, small_keyPoints, small_trainDescription);

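    // Without descriptors on either side there is nothing to match
    if (big_trainDescription.empty() || small_trainDescription.empty()) {
        console.timeEnd("匹配耗时");
        return null;
    }
    // console.log("matcher.train");
    // SIFT descriptors are float vectors (L2 distance); ORB, BRISK and AKAZE
    // produce binary descriptors by default, which call for Hamming distance
    var matcher = new BFMatcher(method == 'sift' ? Core.NORM_L2 : Core.NORM_HAMMING, false);
    matcher.clear();
    var train_desc_collection = new ArrayList();
    train_desc_collection.add(big_trainDescription);
    // C++ equivalent: vector<Mat> train_desc_collection(1, trainDescription);
    matcher.add(train_desc_collection);
    matcher.train();
    // console.log("knnMatch");
    // For each template descriptor, find its 2 nearest neighbours in the large image
    var matches = new ArrayList();
    matcher.knnMatch(small_trainDescription, matches, 2);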

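    // Filter the matches by distance (nearest-neighbour distance ratio test)
    // console.log("filtering matches");
    var goodMatches = new ArrayList();
    var nndrRatio = 0.8;
    var len = matches.size();
    for (var i = 0; i < len; i++) {
        var matchObj = matches.get(i);
        var dmatcharray = matchObj.toArray();
        // knnMatch can return fewer than 2 neighbours for a query; skip those
        if (dmatcharray.length < 2) {
            continue;
        }
        var m1 = dmatcharray[0];
        var m2 = dmatcharray[1];
        // Keep the best match only if it is clearly closer than the second best
        if (m1.distance <= m2.distance * nndrRatio) {
            goodMatches.add(m1);
        }
    }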

    var matchesPointCount = goodMatches.size();
    // With at least 4 good matches, treat the template as present in the source image.
    // The threshold can be tuned, but findHomography needs at least 4 point pairs.
    if (matchesPointCount >= 4) {
        log("Template matched in the source image!");
        var templateKeyPoints = small_keyPoints;
        var originalKeyPoints = big_keyPoints;
        var templateKeyPointList = templateKeyPoints.toList();
        var originalKeyPointList = originalKeyPoints.toList();
        var objectPoints = new LinkedList();
        var scenePoints = new LinkedList();
        var len = goodMatches.size();
        // Collect the matched point pairs: queryIdx indexes the template, trainIdx the large image
        for (var i = 0; i < len; i++) {
            var goodMatch = goodMatches.get(i);
            objectPoints.addLast(templateKeyPointList.get(goodMatch.queryIdx).pt);
            scenePoints.addLast(originalKeyPointList.get(goodMatch.trainIdx).pt);
        }
        var objMatOfPoint2f = new MatOfPoint2f();
        objMatOfPoint2f.fromList(objectPoints);
        var scnMatOfPoint2f = new MatOfPoint2f();
        scnMatOfPoint2f.fromList(scenePoints);

        // Use findHomography to estimate the transform between the matched keypoints
        var homography = Calib3d.findHomography(objMatOfPoint2f, scnMatOfPoint2f, Calib3d.RANSAC, 3);
        // findHomography returns an empty matrix when no transform can be estimated
        if (homography.empty()) {
            console.timeEnd("匹配耗时");
            return null;
        }
        /**
         * A perspective transformation projects an image onto a new viewing plane;
         * it is also called projective mapping.
         */
        var templateCorners = new Mat(4, 1, CvType.CV_32FC2);
        var templateTransformResult = new Mat(4, 1, CvType.CV_32FC2);
        var templateImage = smallTrainImage;
        var doubleArr = util.java.array("double", 2);
        // Top-left corner of the template
        doubleArr[0] = 0;
        doubleArr[1] = 0;
        templateCorners.put(0, 0, doubleArr);
        // Top-right corner
        doubleArr[0] = templateImage.cols();
        doubleArr[1] = 0;
        templateCorners.put(1, 0, doubleArr);
        // Bottom-right corner
        doubleArr[0] = templateImage.cols();
        doubleArr[1] = templateImage.rows();
        templateCorners.put(2, 0, doubleArr);
        // Bottom-left corner
        doubleArr[0] = 0;
        doubleArr[1] = templateImage.rows();
        templateCorners.put(3, 0, doubleArr);
        // Use perspectiveTransform to project the template's four corners into the large image
        Core.perspectiveTransform(templateCorners, templateTransformResult, homography);

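        // The four projected corners: A = top-left, B = top-right, C = bottom-right, D = bottom-left
        var pointA = templateTransformResult.get(0, 0);
        var pointB = templateTransformResult.get(1, 0);
        var pointC = templateTransformResult.get(2, 0);
        var pointD = templateTransformResult.get(3, 0);
        // Round to whole pixels and clamp negative coordinates to 0
        var y0 = Math.round(pointA[1]) > 0 ? Math.round(pointA[1]) : 0;
        var y1 = Math.round(pointC[1]) > 0 ? Math.round(pointC[1]) : 0;
        var x0 = Math.round(pointD[0]) > 0 ? Math.round(pointD[0]) : 0;
        var x1 = Math.round(pointB[0]) > 0 ? Math.round(pointB[0]) : 0;
        console.timeEnd("匹配耗时");
        // Return the top-left corner of the matched region; (x1, y1) is its bottom-right corner
        return {x: x0, y: y0};
    } else {
        console.timeEnd("匹配耗时");
        log("Template not found in the source image!");
        return null;
    }
}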
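The filtering loop in match is a nearest-neighbour distance ratio test: a match is kept only when its best candidate is clearly closer than the second-best one. The following is a minimal plain-JavaScript sketch of that rule on hand-written data, with no OpenCV involved. The helper name filterByRatio and the sample distances are invented for illustration.

```javascript
// Each entry of knnMatches is an array of candidates sorted by ascending
// distance, mirroring what knnMatch(..., 2) yields for one query descriptor.
function filterByRatio(knnMatches, ratio) {
    var good = [];
    for (var i = 0; i < knnMatches.length; i++) {
        var candidates = knnMatches[i];
        // A query with fewer than two neighbours cannot be ratio-tested.
        if (candidates.length < 2) {
            continue;
        }
        var best = candidates[0];
        var second = candidates[1];
        if (best.distance <= second.distance * ratio) {
            good.push(best);
        }
    }
    return good;
}

// Hypothetical distances, for illustration only.
var sample = [
    [{ queryIdx: 0, trainIdx: 7, distance: 40 }, { queryIdx: 0, trainIdx: 3, distance: 100 }],
    [{ queryIdx: 1, trainIdx: 2, distance: 90 }, { queryIdx: 1, trainIdx: 9, distance: 100 }],
    [{ queryIdx: 2, trainIdx: 5, distance: 10 }]
];
var kept = filterByRatio(sample, 0.8);
console.log(kept.length); // 1
```

Only the first pair survives: 40 is within 0.8 × 100, while 90 is not, and the third query has no second neighbour to compare against. Lowering the ratio makes the filter stricter and usually trades fewer false matches for fewer matches overall.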

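The match function returns only the top-left corner of the matched region. When the goal is to tap the matched control, a centre point is often more useful. The sketch below shows one way to derive a clamped bounding box and its centre from the four projected corners. quadToRegion is a hypothetical helper, not part of Auto.js or OpenCV, and the corner values are invented.

```javascript
// corners: four [x, y] pairs; maxWidth and maxHeight: size of the large image.
function quadToRegion(corners, maxWidth, maxHeight) {
    function clamp(value, low, high) {
        return Math.max(low, Math.min(high, value));
    }
    var xs = corners.map(function (p) { return p[0]; });
    var ys = corners.map(function (p) { return p[1]; });
    // Round to whole pixels, then keep the box inside the image.
    var left = clamp(Math.round(Math.min.apply(null, xs)), 0, maxWidth);
    var right = clamp(Math.round(Math.max.apply(null, xs)), 0, maxWidth);
    var top = clamp(Math.round(Math.min.apply(null, ys)), 0, maxHeight);
    var bottom = clamp(Math.round(Math.max.apply(null, ys)), 0, maxHeight);
    return {
        x: left,
        y: top,
        width: right - left,
        height: bottom - top,
        centerX: Math.floor((left + right) / 2),
        centerY: Math.floor((top + bottom) / 2)
    };
}

// Hypothetical projected corners on a 1080 x 1920 screenshot.
var region = quadToRegion(
    [[100.4, 200.2], [300.6, 198.9], [302.1, 401.3], [99.2, 399.8]],
    1080, 1920
);
console.log(region.centerX, region.centerY); // 200 300
```

Inside match, the corner list would be the four points read from templateTransformResult, and the limits would be bigTrainImage.cols() and bigTrainImage.rows(). Taking the minimum and maximum over all four corners also covers the case where the projected quadrilateral is slightly rotated or skewed.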