Purpose

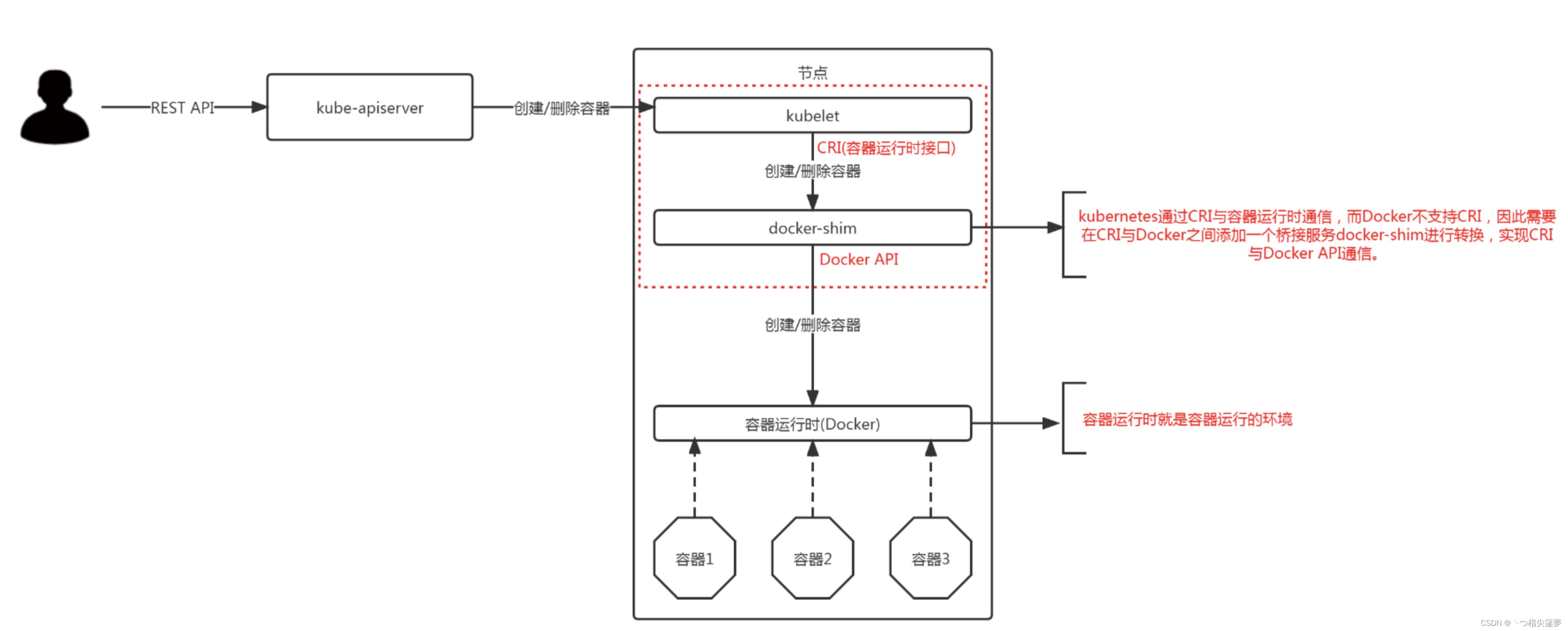

Test using cri-dockerd as the shim layer between the kubelet and Docker.

Plan

| IP | OS | Hostname |
|---|---|---|
| 10.0.6.5 | Ubuntu 22.04.3 (jammy) | master01.kktb.org |
| 10.0.6.6 | Ubuntu 22.04.3 (jammy) | master02.kktb.org |
| 10.0.6.7 | Ubuntu 22.04.3 (jammy) | master03.kktb.org |

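Configuration

Steps:

- System tuning: disable swap, enable ip_forward, add the /etc/hosts entries, and so on
- Configure the Docker repository
- Configure the Kubernetes repository
- Initialize the cluster with kubeadm
- Remove the taints from the master nodes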

A proxy is configured on the LAN, so the mirrors inside China are not used.
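The post does not spell out the commands for the system-tuning step. A minimal sketch of what that step usually involves, assuming root access and a standard /etc/fstab; the function names and the file name 99-kubernetes.conf are illustrative choices, not taken from the original:

```shell
# Comment out swap entries in fstab-formatted text (stdin -> stdout).
comment_out_swap() {
  awk '$1 !~ /^#/ && $3 == "swap" { print "#" $0; next } { print }'
}

# Kernel settings commonly applied before running kubeadm init.
sysctl_settings() {
  printf '%s\n' \
    'net.bridge.bridge-nf-call-iptables = 1' \
    'net.bridge.bridge-nf-call-ip6tables = 1' \
    'net.ipv4.ip_forward = 1'
}

# Apply everything on the current node (must run as root).
prepare_node() {
  swapoff -a                                        # turn swap off now
  cp /etc/fstab /etc/fstab.bak                      # keep a backup
  comment_out_swap < /etc/fstab.bak > /etc/fstab    # keep swap off after reboot
  modprobe br_netfilter                             # provides the bridge sysctls
  sysctl_settings > /etc/sysctl.d/99-kubernetes.conf
  sysctl --system                                   # reload all sysctl files
}
```

Nothing runs until `prepare_node` is called, so the file can be sourced and the two helper functions inspected first.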

Configure the hosts entries

[root@master02 ~]#grep master /etc/hosts

10.0.6.5 master01.kktb.org

10.0.6.6 master02.kktb.org

10.0.6.7 master03.kktb.org

# With these entries, the node names shown by kubectl get node are the hostnames

[root@master01 ~]#kubectl get node

NAME STATUS ROLES AGE VERSION

master01.kktb.org Ready control-plane 35m v1.24.3

master02.kktb.org Ready control-plane 28m v1.24.3

master03.kktb.org Ready control-plane 22m v1.24.3
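The hosts entries have to exist on all three machines. One way to add them without creating duplicates is sketched below; `ensure_host_entry` is a hypothetical helper written for this example, not part of any tool used in the post:

```shell
# Append "IP NAME" to a hosts file unless NAME is already listed there.
ensure_host_entry() {
  file=$1 ip=$2 name=$3
  if ! awk -v n="$name" '
      $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }' "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Usage on each node (as root):
#   ensure_host_entry /etc/hosts 10.0.6.5 master01.kktb.org
#   ensure_host_entry /etc/hosts 10.0.6.6 master02.kktb.org
#   ensure_host_entry /etc/hosts 10.0.6.7 master03.kktb.org
```

Because the check compares whole fields, running it a second time leaves the file unchanged.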

docker

for pkg in docker.io docker-doc docker-compose podman-docker containerd runc; do sudo apt-get remove $pkg; done

sudo install -m 0755 -d /etc/apt/keyrings

apt-get install -y apt-transport-https ca-certificates curl

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /etc/apt/keyrings/docker.gpg

sudo chmod a+r /etc/apt/keyrings/docker.gpg

echo "deb [arch="$(dpkg --print-architecture)" signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/ubuntu \
"$(. /etc/os-release && echo "$VERSION_CODENAME")" stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

apt-cache madison docker-ce | awk '{ print $3 }'

VERSION_STRING=5:20.10.24~3-0~ubuntu-jammy

apt-get install docker-ce=$VERSION_STRING docker-ce-cli=$VERSION_STRING containerd.io docker-buildx-plugin docker-compose-plugin

docker run hello-world
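The version string above is copied by hand from the `apt-cache madison` listing. A small sketch of selecting it by prefix instead; `pick_version` is a hypothetical helper, and the prefix `5:20.10.` is only an example matching the version used here:

```shell
# Print the first version in `apt-cache madison` output (stdin)
# whose version column starts with the given prefix.
pick_version() {
  awk -v p="$1" 'index($3, p) == 1 { print $3; exit }'
}

# Usage:
#   VERSION_STRING=$(apt-cache madison docker-ce | pick_version 5:20.10.)
#   echo "$VERSION_STRING"
```

The helper returns the first matching row, so the result depends on the order in which `apt-cache madison` lists the versions.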

Check whether the current cgroup driver is systemd; if it is not, change it.

[root@master03 ~]#docker info |grep -i driver

Storage Driver: overlay2

Logging Driver: json-file

Cgroup Driver: systemd
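The post does not show how to change the driver when the check reports something other than systemd. A sketch under the assumption that Docker reads /etc/docker/daemon.json and that no other settings need to be preserved in that file; the helper names are illustrative:

```shell
# Extract the cgroup driver from `docker info` output read on stdin.
cgroup_driver() {
  sed -n 's/^[[:space:]]*Cgroup Driver:[[:space:]]*//p'
}

# daemon.json content that switches Docker to the systemd cgroup driver.
daemon_json() {
  printf '%s\n' '{' '  "exec-opts": ["native.cgroupdriver=systemd"]' '}'
}

# Usage (as root); this overwrites any existing /etc/docker/daemon.json:
#   if [ "$(docker info 2>/dev/null | cgroup_driver)" != systemd ]; then
#     daemon_json > /etc/docker/daemon.json
#     systemctl restart docker
#   fi
```

If daemon.json already holds other options, merge the `exec-opts` key into it by hand rather than overwriting the file.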

Kubernetes repository

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.24/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.24/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list

apt update

Install the components

apt install -y kubeadm=1.24.3-1.1 kubelet=1.24.3-1.1 kubectl=1.24.3-1.1
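Each pkgs.k8s.io repository serves a single minor release, so the repository path has to match the package versions being installed. A sketch that derives the repository line from the package version to keep the two in sync; the function names are made up for this example:

```shell
# "1.24.3-1.1" or "v1.24.0" -> "v1.24"
k8s_minor() {
  v=${1#v}
  printf 'v%s\n' "$(printf '%s\n' "$v" | cut -d. -f1,2)"
}

# Repository line for /etc/apt/sources.list.d/kubernetes.list
k8s_repo_line() {
  printf 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/%s/deb/ /\n' "$(k8s_minor "$1")"
}

# Usage (as root):
#   k8s_repo_line 1.24.3-1.1 > /etc/apt/sources.list.d/kubernetes.list
#   apt update
```

After installation, `apt-mark hold kubelet kubeadm kubectl` can be used to stop a routine `apt upgrade` from moving the three packages to a different version.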

Configure the cri-dockerd shim layer

curl -LO https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.0/cri-dockerd_0.3.0.3-0.ubuntu-jammy_amd64.deb

dpkg -i cri-dockerd_0.3.0.3-0.ubuntu-jammy_amd64.deb

systemctl status cri-docker.service
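kubeadm talks to cri-dockerd through the socket passed with `--cri-socket`, so it is worth confirming the socket exists before going on. A sketch, assuming the package ships a `cri-docker.socket` unit alongside `cri-docker.service` and listens on /run/cri-dockerd.sock (the path used in the kubeadm commands in this post); `wait_for_socket` is a hypothetical helper:

```shell
# Wait up to TIMEOUT seconds (default 30) for a Unix socket to appear.
wait_for_socket() {
  sock=$1 timeout=${2:-30} waited=0
  while [ ! -S "$sock" ]; do
    [ "$waited" -ge "$timeout" ] && return 1
    sleep 1
    waited=$((waited + 1))
  done
}

# Usage (as root):
#   systemctl enable --now cri-docker.socket cri-docker.service
#   wait_for_socket /run/cri-dockerd.sock 30 ||
#     journalctl -u cri-docker.service --no-pager | tail
```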

Initialize the cluster with kubeadm

List the required images

[root@master01 ~]#kubeadm config images list

I0917 08:39:15.852977 207252 version.go:255] remote version is much newer: v1.28.2; falling back to: stable-1.24

k8s.gcr.io/kube-apiserver:v1.24.17

k8s.gcr.io/kube-controller-manager:v1.24.17

k8s.gcr.io/kube-scheduler:v1.24.17

k8s.gcr.io/kube-proxy:v1.24.17

k8s.gcr.io/pause:3.7

k8s.gcr.io/etcd:3.5.3-0

k8s.gcr.io/coredns/coredns:v1.8.6

Pull the images

[root@master01 ~]#kubeadm config images pull --kubernetes-version=v1.24.0 --cri-socket unix:///run/cri-dockerd.sock

[config/images] Pulled k8s.gcr.io/kube-apiserver:v1.24.0

[config/images] Pulled k8s.gcr.io/kube-controller-manager:v1.24.0

[config/images] Pulled k8s.gcr.io/kube-scheduler:v1.24.0

[config/images] Pulled k8s.gcr.io/kube-proxy:v1.24.0

[config/images] Pulled k8s.gcr.io/pause:3.7

[config/images] Pulled k8s.gcr.io/etcd:3.5.3-0

[config/images] Pulled k8s.gcr.io/coredns/coredns:v1.8.6

Initialize the cluster

[root@master01 ~]#kubeadm init --control-plane-endpoint="master01.kktb.org" --kubernetes-version=v1.24.0 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12 --token-ttl=0 --cri-socket unix:///run/cri-dockerd.sock --upload-certs

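Explanation of each parameter

init: initializes the cluster

reset: resets the node; run it when cluster creation fails and you need to start over

--control-plane-endpoint: the shared endpoint of the control plane; either an IP address or a hostname. A hostname must be resolvable (here through /etc/hosts), otherwise name resolution fails

--kubernetes-version: the Kubernetes version to install; keep it consistent with the configured apt repository and the pulled images

--pod-network-cidr: the address range used by pods in the cluster

--service-cidr: the address range used by services in the cluster

--token-ttl: lifetime of the token used to join the cluster; 0 means it never expires, which is insecure

--cri-socket: path of the CRI socket the cluster talks to

--upload-certs: uploads the control-plane certificates to the kubeadm-certs Secret so that other control-plane nodes can fetch them when they join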
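The pod range and the service range should not overlap each other or the network the nodes live on. A sketch of that check in plain shell arithmetic; the node network 10.0.6.0/24 is an assumption inferred from the host IPs in the plan, and all function names are illustrative:

```shell
# Convert a dotted IPv4 address to an integer.
ip_to_int() {
  old_ifs=$IFS; IFS=.
  set -- $1
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Print "first last" (as integers) for a CIDR such as 10.244.0.0/16.
cidr_range() {
  base=$(ip_to_int "${1%/*}")
  bits=${1#*/}
  size=$(( 1 << (32 - bits) ))
  first=$(( base - base % size ))
  echo "$first $(( first + size - 1 ))"
}

# Succeed when the two CIDRs share at least one address.
cidrs_overlap() {
  set -- $(cidr_range "$1") $(cidr_range "$2")
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

# Usage:
#   cidrs_overlap 10.244.0.0/16 10.96.0.0/12 && echo "pod and service ranges overlap"
#   cidrs_overlap 10.0.6.0/24 10.244.0.0/16 && echo "node and pod ranges overlap"
```

For the values used in this post neither check reports an overlap.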

Output

[init] Using Kubernetes version: v1.24.0

# Run the pre-flight checks

[preflight] Running pre-flight checks

[WARNING SystemVerification]: missing optional cgroups: blkio

# Pull the images needed to start the cluster; this takes a while, depending on your network connection

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

# The images can also be pulled ahead of time with kubeadm config images pull

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

# Certificate directory in use

[certs] Using certificateDir folder "/etc/kubernetes/pki"

# Generate the cluster CA certificate and key

[certs] Generating "ca" certificate and key

# Generate the apiserver certificate

[certs] Generating "apiserver" certificate and key

# The apiserver serving certificate is signed for the DNS names and IPs listed here

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master01.kktb.org] and IPs [10.96.0.1 10.0.6.5]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

# DNS names and IPs that the etcd server certificate is signed for

[certs] etcd/server serving cert is signed for DNS names [localhost master01.kktb.org] and IPs [10.0.6.5 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost master01.kktb.org] and IPs [10.0.6.5 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

# Generate the ServiceAccount private and public keys

[certs] Generating "sa" key and public key

# Directory that holds the kubeconfig files

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

# kubelet configuration file

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

# Manifest directory in use

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

# Create the static Pod manifests for the core control-plane components

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

# Create the static Pod manifest for etcd and show where it is written

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

# Wait for the kubelet to start the control-plane static Pods from /etc/kubernetes/manifests

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

# Time it took until the API client saw all control-plane components healthy

[apiclient] All control plane components are healthy after 24.002949 seconds

# Store the configuration in the kubeadm-config ConfigMap in the kube-system namespace

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

# Store the certificates

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

1df01c66c2f92b14360bcaf893f6b3f6a5921fa65a6b080d9423a8362243f487

# Mark master01 as a control-plane node by adding labels

[mark-control-plane] Marking the node master01.kktb.org as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

# Add the NoSchedule taints to the control-plane node

[mark-control-plane] Marking the node master01.kktb.org as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule node-role.kubernetes.io/control-plane:NoSchedule]

[bootstrap-token] Using token: zcg7j7.zti8f568uzi9k6hn

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

# Configure RBAC (role-based access control) rules that let node bootstrap tokens get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

# Apply the essential addons: CoreDNS and kube-proxy

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

# Control-plane initialization is complete

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

# Copy the kubeconfig file into the current user's home directory

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

# The root user can run this instead

export KUBECONFIG=/etc/kubernetes/admin.conf

# Deploy a network add-on

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

# Run the following command on the other control-plane nodes to add them to the cluster

kubeadm join master01.kktb.org:6443 --token zcg7j7.zti8f568uzi9k6hn \
--discovery-token-ca-cert-hash sha256:23d1070adacf75c3f79b577d966afe0286c9a06bc77dc25a8fd766487935fb94 \
--control-plane --certificate-key 1df01c66c2f92b14360bcaf893f6b3f6a5921fa65a6b080d9423a8362243f487

# The uploaded certificates are deleted after two hours by default; the command below uploads them again

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

# Run the following on worker nodes to add them to the cluster

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join master01.kktb.org:6443 --token zcg7j7.zti8f568uzi9k6hn \
--discovery-token-ca-cert-hash sha256:23d1070adacf75c3f79b577d966afe0286c9a06bc77dc25a8fd766487935fb94
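The uploaded certificates expire after two hours, and a token that never expires is a risk, so the join command often has to be rebuilt later. A sketch of doing that with a short-lived token; `control_plane_join` is a hypothetical helper, and the assumption is that the certificate key is the last line printed by the upload-certs phase, as in the init output above:

```shell
# Turn a worker join command into a control-plane join command.
control_plane_join() {
  printf '%s --control-plane --certificate-key %s --cri-socket %s\n' "$1" "$2" "$3"
}

# Usage on an existing control-plane node (as root):
#   join=$(kubeadm token create --ttl 2h --print-join-command)
#   key=$(kubeadm init phase upload-certs --upload-certs | tail -n 1)
#   control_plane_join "$join" "$key" unix:///run/cri-dockerd.sock
```

The printed line can then be run on the node that is joining. For a worker node, the output of `kubeadm token create --print-join-command` plus the `--cri-socket` flag is enough.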

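Configure the flannel network add-on. The cluster was initialized with flannel's default pod CIDR (10.244.0.0/16) and the default service CIDR, so the manifest can be applied as-is without any changes.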

[root@master01 ~]#kubectl apply -f https://raw.githubusercontent.com/flannel-io/flannel/master/Documentation/kube-flannel.yml

namespace/kube-flannel created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created
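Applying the manifest unchanged only works when its network matches the value given to `--pod-network-cidr`. A sketch for checking that before applying, assuming the manifest keeps the range in a `"Network"` key inside its net-conf.json block; `flannel_network` is an illustrative helper:

```shell
# Print the "Network" value from a kube-flannel.yml manifest read on stdin.
flannel_network() {
  sed -n 's/.*"Network"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' | head -n 1
}

# Usage:
#   url=https://raw.githubusercontent.com/flannel-io/flannel/master/Documentation/kube-flannel.yml
#   curl -fsSL "$url" | flannel_network
```

If the printed range differs from the cluster's pod CIDR, download the manifest, edit the `Network` value, and apply the local copy instead of the URL.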

Join the other master nodes to the cluster

kubeadm join master01.kktb.org:6443 --token zcg7j7.zti8f568uzi9k6hn --discovery-token-ca-cert-hash sha256:23d1070adacf75c3f79b577d966afe0286c9a06bc77dc25a8fd766487935fb94 --control-plane --certificate-key 1df01c66c2f92b14360bcaf893f6b3f6a5921fa65a6b080d9423a8362243f487 --cri-socket unix:///run/cri-dockerd.sock

[root@master01 ~]#kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

demoapp-78b49597cf-5f5dc 1/1 Running 0 9m44s 10.244.0.2 master01.kktb.org <none> <none>

demoapp-78b49597cf-fmqn8 1/1 Running 0 9m44s 10.244.0.3 master01.kktb.org <none> <none>

Allow the master nodes to take part in scheduling

[root@master01 ~]#kubectl taint node master01.kktb.org node-role.kubernetes.io/master-

node/master01.kktb.org untainted

[root@master01 ~]#kubectl taint node master01.kktb.org node-role.kubernetes.io/control-plane-

node/master01.kktb.org untainted

# Allow scheduling on all master nodes at once

[root@master01 ~]#kubectl taint nodes --all node-role.kubernetes.io/control-plane-

[root@master01 ~]#kubectl taint nodes --all node-role.kubernetes.io/master-
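`kubectl taint` exits with an error when a node does not carry the taint being removed, which matters in scripts. A sketch that removes both taints shown in the init output and tolerates a missing one; the function name is made up for this example:

```shell
# Remove both control-plane taints from every node; a missing taint is
# reported on stderr instead of aborting the loop.
untaint_control_plane() {
  for key in node-role.kubernetes.io/control-plane node-role.kubernetes.io/master; do
    kubectl taint nodes --all "${key}-" ||
      echo "taint ${key} not removed from every node, continuing" >&2
  done
}

# Usage:
#   untaint_control_plane
```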

Create pods for testing (article source: https://www.toymoban.com/news/detail-731650.html)

[root@master01 ~]#kubectl create deployment demoapp --image=ikubernetes/demoapp:v1.0 --replicas=2

Verify that the other nodes can be scheduled as well

[root@master01 ~]#kubectl scale deployment demoapp --replicas 3

deployment.apps/demoapp scaled

[root@master01 ~]#kubectl scale deployment demoapp --replicas 6

[root@master01 ~]#kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

demoapp-78b49597cf-5f5dc 1/1 Running 0 10m 10.244.0.2 master01.kktb.org <none> <none>

demoapp-78b49597cf-dgcdz 1/1 Running 0 16s 10.244.2.3 master03.kktb.org <none> <none>

demoapp-78b49597cf-fmqn8 1/1 Running 0 10m 10.244.0.3 master01.kktb.org <none> <none>

demoapp-78b49597cf-j94ts 1/1 Running 0 16s 10.244.1.4 master02.kktb.org <none> <none>

demoapp-78b49597cf-k8mkw 1/1 Running 0 16s 10.244.1.5 master02.kktb.org <none> <none>

demoapp-78b49597cf-sq6vz 1/1 Running 0 24s 10.244.2.2 master03.kktb.org <none> <none>
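Reading the distribution off the table works for six pods; for larger counts a per-node tally is easier. A sketch, assuming the pods carry the `app=demoapp` label that `kubectl create deployment demoapp` sets; `pods_per_node` is an illustrative helper:

```shell
# Count pods per node from a list of node names (one per line on stdin).
pods_per_node() {
  sort | uniq -c | awk '{ print $2, $1 }'
}

# Usage:
#   kubectl get pod -l app=demoapp \
#     -o custom-columns=NODE:.spec.nodeName --no-headers | pods_per_node
```

For the listing above this would report two pods on each of the three masters.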
