A quick write-up of how to scrape images from a website and save them to a specified local directory. I hope it helps fellow beginners!

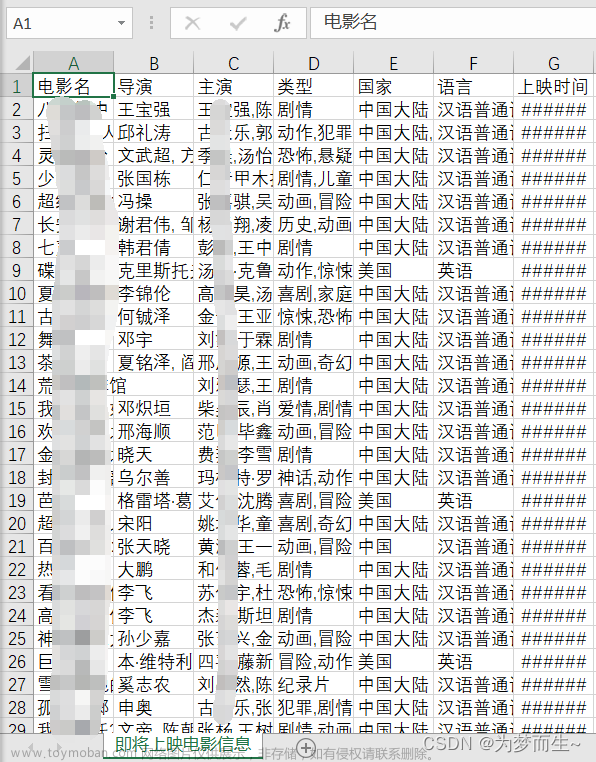

The target website is the page shown below:

Implementation steps:

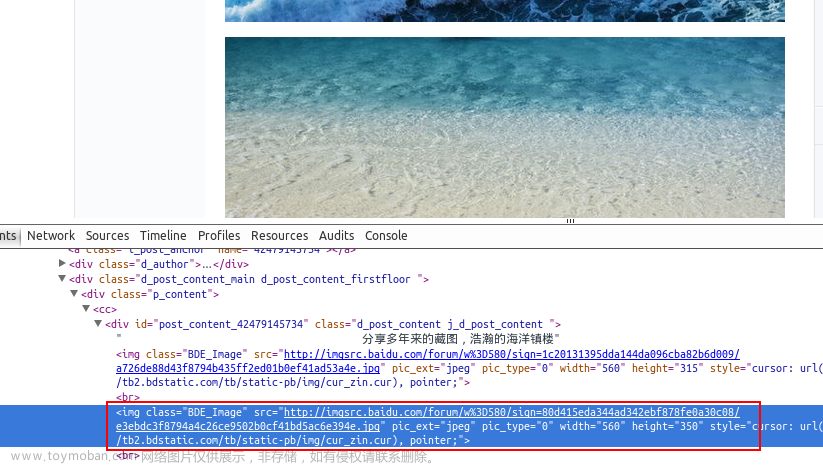

1. Scrape the list of image URLs on each page

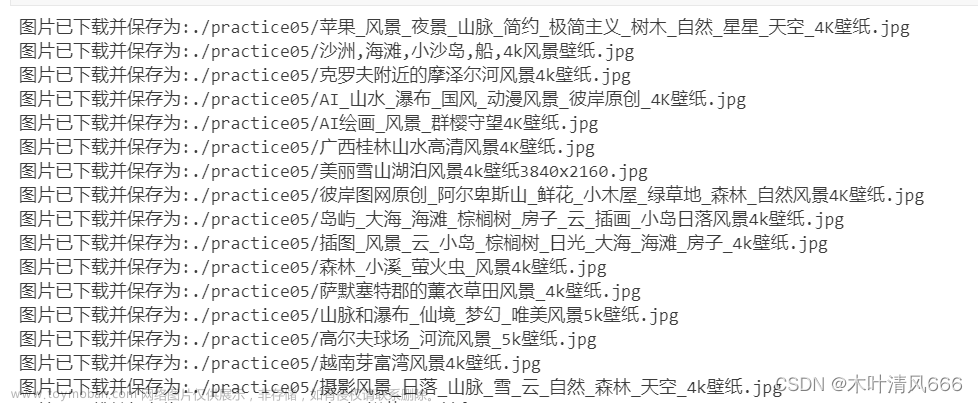

2. Download the images to the local disk

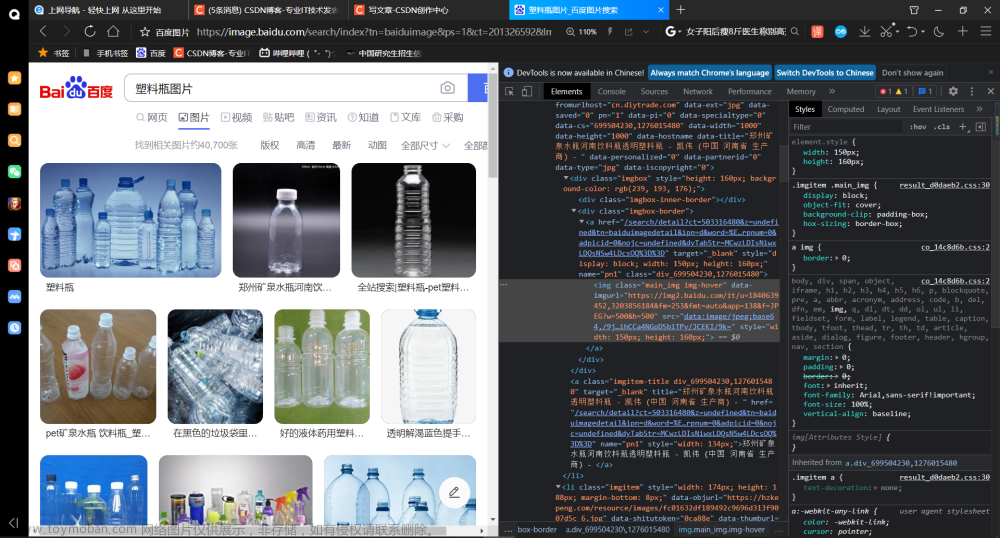

3. Build the page URLs for the specified number of pages
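The string handling behind steps 1 and 3 can be sketched with the standard library alone. This is a minimal sketch, assuming (as the `get_img_url` code does) that each image URL sits in an inline `style="background-image: url(...)"` attribute. It swaps the `find("(")`/`find(")")` slicing for a regular expression so that quoted and relative URLs are handled too. The helper names `page_urls` and `url_from_style` are hypothetical; BeautifulSoup still does the actual HTML parsing in the real script.

```python
import re
from urllib.parse import urljoin

# Page template taken from the original post
PAGE_TEMPLATE = "https://pic.netbian.top/4kmeinv/index_{}.html"

# Matches url(...) inside an inline style, with or without quotes
STYLE_URL_RE = re.compile(r"""url\(\s*['"]?([^'")]+?)['"]?\s*\)""")


def page_urls(page_num, template=PAGE_TEMPLATE):
    """Step 3 (hypothetical helper): listing-page URLs for pages 1..page_num."""
    return [template.format(i) for i in range(1, page_num + 1)]


def url_from_style(style, base_url):
    """Step 1 (hypothetical helper): extract the image URL from a style
    attribute such as 'background-image: url(/uploads/a.jpg)'.
    Returns None when there is no url(...); relative paths are resolved
    against the page URL, absolute URLs are returned unchanged."""
    match = STYLE_URL_RE.search(style or "")
    if match is None:
        return None
    return urljoin(base_url, match.group(1).strip())
```

For example, `url_from_style("background-image: url(/uploads/a.jpg)", "https://pic.netbian.top/4kmeinv/index_1.html")` resolves the relative path to `https://pic.netbian.top/uploads/a.jpg`.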

Here is the implementation (article source: https://www.toymoban.com/news/detail-733704.html):

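```python
import os
from urllib.parse import urljoin

import bs4
import requests


# Download one image into local_path
def down_load_img(local_path, img_url):
    # File name = last path segment of the image URL
    img_name = img_url[img_url.rfind("/") + 1:]
    # Request first, so a failed request does not leave an empty file behind
    res = requests.get(img_url, timeout=10)
    if res.status_code != 200:
        print(f"{img_name}: request failed, download failed!")
        return
    # os.path.join replaces f"{local_path}\{img_name}", whose "\{" is an invalid escape
    with open(os.path.join(local_path, img_name), "wb") as img_file:
        img_file.write(res.content)
    print(f"{img_name} downloaded successfully!")


# Collect the image URLs on one listing page
def get_img_url(website_url):
    return_img_url_list = []
    res = requests.get(website_url, timeout=10)
    if res.status_code != 200:
        print("Request failed!")
        return return_img_url_list  # stop instead of parsing an error page
    soup = bs4.BeautifulSoup(res.content, "html.parser")
    # Each image URL sits in the style attribute of a <div class="pic">
    for pic_div in soup.find_all("div", class_="pic"):
        style_info = pic_div.get("style", "")
        start, end = style_info.find("("), style_info.find(")")
        if start == -1 or end == -1:
            continue  # no url(...) in this div's style
        img = style_info[start + 1:end].strip("'\" ")
        # Resolve relative paths against the page URL (absolute URLs stay unchanged)
        return_img_url_list.append(urljoin(website_url, img))
    return return_img_url_list


# Build the listing-page URLs for pages 1..page_num
def get_website_url(page_num):
    website_format = "https://pic.netbian.top/4kmeinv/index_{}.html"
    return [website_format.format(i) for i in range(1, page_num + 1)]


if __name__ == '__main__':
    local_path = r"D:\mvImg"  # raw string so the backslash is kept literally
    os.makedirs(local_path, exist_ok=True)  # create the folder if it is missing
    page_num = 2
    for website_url in get_website_url(page_num):
        for img_url in get_img_url(website_url):
            down_load_img(local_path, img_url)
```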
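Step 2 is where most of the fragile details live: the file name, the path separator, and the target folder. Below is a minimal standard-library sketch, assuming the last URL path segment is a usable file name. `file_name_from_url`, `target_path`, and `save_image` are hypothetical helpers, and fetching the bytes (for example with `requests.get(img_url).content`) is left to the caller.

```python
import os
from urllib.parse import unquote, urlparse


def file_name_from_url(img_url):
    """Last path segment of the URL, ignoring any ?query or #fragment,
    with percent-escapes such as %20 decoded."""
    path = urlparse(img_url).path
    return unquote(path.rsplit("/", 1)[-1])


def target_path(local_path, img_url):
    """Join folder and file name portably instead of hard-coding a backslash."""
    return os.path.join(local_path, file_name_from_url(img_url))


def save_image(local_path, img_url, content):
    """Write image bytes to disk, creating the folder if needed.
    Skips empty payloads and files that already exist.
    Returns the written path, or None if nothing was written."""
    if not content:
        return None
    os.makedirs(local_path, exist_ok=True)
    path = target_path(local_path, img_url)
    if os.path.exists(path):
        return None
    with open(path, "wb") as f:
        f.write(content)
    return path
```

Skipping existing files keeps a second run from overwriting images saved by an earlier run; drop that check if you want fresh copies every time.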

