GitHub source for this example:

https://github.com/vilyLei/voxwebgpu/blob/main/src/voxgpu/sample/SimplePBRTest.ts

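Features implemented by the rendering system behind this example:

1. Isolation of user state from system state.

For details, see the CSDN blog post "Engine System Design Ideas: Isolating User State from System State" (引擎系统设计思路 - 用户态与系统态隔离).

2. Isolation of high-frequency calls from low-frequency calls.

3. User-facing encapsulation for ease of use.

4. Separation of render data (internal and external resources) from the rendering mechanism.

5. A mechanism that lets user operations and render-system scheduling run in parallel.

6. Data-driven and semantics-driven design.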

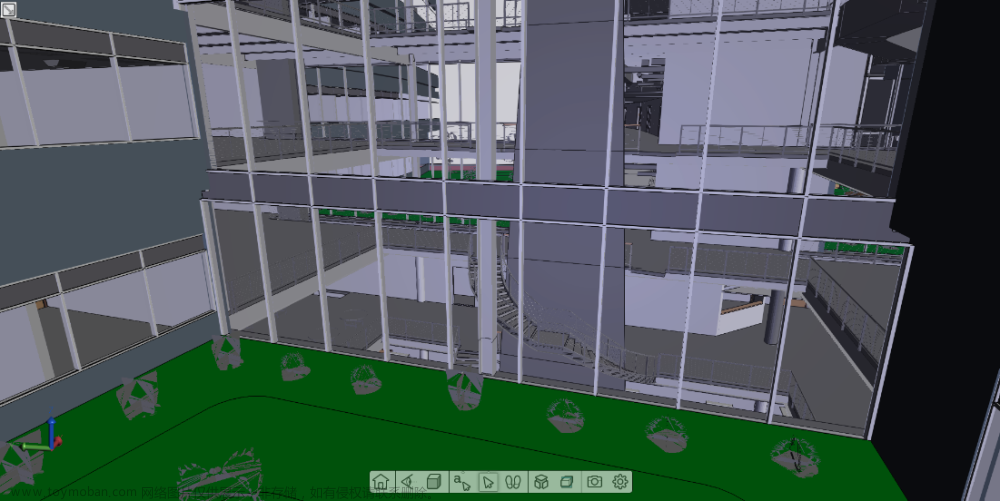

当前示例运行效果:

WGSL vertex shader:

@group(0) @binding(0) var<uniform> objMat : mat4x4<f32>;

@group(0) @binding(1) var<uniform> viewMat : mat4x4<f32>;

@group(0) @binding(2) var<uniform> projMat : mat4x4<f32>;

struct VertexOutput {

@builtin(position) Position : vec4<f32>,

@location(0) pos: vec4<f32>,

@location(1) uv : vec2<f32>,

@location(2) normal : vec3<f32>,

@location(3) camPos : vec3<f32>

}

fn inverseM33(m: mat3x3<f32>)-> mat3x3<f32> {

let a00 = m[0][0]; let a01 = m[0][1]; let a02 = m[0][2];

let a10 = m[1][0]; let a11 = m[1][1]; let a12 = m[1][2];

let a20 = m[2][0]; let a21 = m[2][1]; let a22 = m[2][2];

let b01 = a22 * a11 - a12 * a21;

let b11 = -a22 * a10 + a12 * a20;

let b21 = a21 * a10 - a11 * a20;

let det = a00 * b01 + a01 * b11 + a02 * b21;

return mat3x3<f32>(

vec3<f32>(b01, (-a22 * a01 + a02 * a21), (a12 * a01 - a02 * a11)) / det,

vec3<f32>(b11, (a22 * a00 - a02 * a20), (-a12 * a00 + a02 * a10)) / det,

vec3<f32>(b21, (-a21 * a00 + a01 * a20), (a11 * a00 - a01 * a10)) / det);

}

fn m44ToM33(m: mat4x4<f32>) -> mat3x3<f32> {

return mat3x3(m[0].xyz, m[1].xyz, m[2].xyz);

}

fn inverseM44(m: mat4x4<f32>)-> mat4x4<f32> {

let a00 = m[0][0]; let a01 = m[0][1]; let a02 = m[0][2]; let a03 = m[0][3];

let a10 = m[1][0]; let a11 = m[1][1]; let a12 = m[1][2]; let a13 = m[1][3];

let a20 = m[2][0]; let a21 = m[2][1]; let a22 = m[2][2]; let a23 = m[2][3];

let a30 = m[3][0]; let a31 = m[3][1]; let a32 = m[3][2]; let a33 = m[3][3];

let b00 = a00 * a11 - a01 * a10;

let b01 = a00 * a12 - a02 * a10;

let b02 = a00 * a13 - a03 * a10;

let b03 = a01 * a12 - a02 * a11;

let b04 = a01 * a13 - a03 * a11;

let b05 = a02 * a13 - a03 * a12;

let b06 = a20 * a31 - a21 * a30;

let b07 = a20 * a32 - a22 * a30;

let b08 = a20 * a33 - a23 * a30;

let b09 = a21 * a32 - a22 * a31;

let b10 = a21 * a33 - a23 * a31;

let b11 = a22 * a33 - a23 * a32;

let det = b00 * b11 - b01 * b10 + b02 * b09 + b03 * b08 - b04 * b07 + b05 * b06;

return mat4x4<f32>(

vec4<f32>(a11 * b11 - a12 * b10 + a13 * b09,

a02 * b10 - a01 * b11 - a03 * b09,

a31 * b05 - a32 * b04 + a33 * b03,

a22 * b04 - a21 * b05 - a23 * b03) / det,

vec4<f32>(a12 * b08 - a10 * b11 - a13 * b07,

a00 * b11 - a02 * b08 + a03 * b07,

a32 * b02 - a30 * b05 - a33 * b01,

a20 * b05 - a22 * b02 + a23 * b01) / det,

vec4<f32>(a10 * b10 - a11 * b08 + a13 * b06,

a01 * b08 - a00 * b10 - a03 * b06,

a30 * b04 - a31 * b02 + a33 * b00,

a21 * b02 - a20 * b04 - a23 * b00) / det,

vec4<f32>(a11 * b07 - a10 * b09 - a12 * b06,

a00 * b09 - a01 * b07 + a02 * b06,

a31 * b01 - a30 * b03 - a32 * b00,

a20 * b03 - a21 * b01 + a22 * b00) / det);

}

@vertex

fn main(

@location(0) position : vec3<f32>,

@location(1) uv : vec2<f32>,

@location(2) normal : vec3<f32>

) -> VertexOutput {

let wpos = objMat * vec4(position.xyz, 1.0);

var output : VertexOutput;

output.Position = projMat * viewMat * wpos;

output.uv = uv;

let invMat33 = inverseM33( m44ToM33( objMat ) );

output.normal = normalize( normal * invMat33 );

output.camPos = (inverseM44(viewMat) * vec4<f32>(0.0,0.0,0.0, 1.0)).xyz;

output.pos = wpos;

return output;

}

WGSL fragment shader:

@group(0) @binding(3) var<storage> albedo: vec4f;

@group(0) @binding(4) var<storage> param: vec4f;

const PI = 3.141592653589793;

const PI2 = 6.283185307179586;

const PI_HALF = 1.5707963267948966;

const RECIPROCAL_PI = 0.3183098861837907;

const RECIPROCAL_PI2 = 0.15915494309189535;

const EPSILON = 1e-6;

fn approximationSRGBToLinear(srgbColor: vec3<f32>) -> vec3<f32> {

return pow(srgbColor, vec3<f32>(2.2));

}

fn approximationLinearToSRGB(linearColor: vec3<f32>) -> vec3<f32> {

return pow(linearColor, vec3(1.0/2.2));

}

fn accurateSRGBToLinear(srgbColor: vec3<f32>) -> vec3<f32> {

let linearRGBLo = srgbColor / 12.92;

let linearRGBHi = pow((srgbColor + vec3(0.055)) / vec3(1.055), vec3(2.4));

// pick the low or high branch per channel instead of once for the whole vector

return select(linearRGBHi, linearRGBLo, srgbColor <= vec3(0.04045));

}

fn accurateLinearToSRGB(linearColor: vec3<f32>) -> vec3<f32> {

let srgbLo = linearColor * 12.92;

let srgbHi = (pow(abs(linearColor), vec3(1.0 / 2.4)) * 1.055) - 0.055;

// pick the low or high branch per channel instead of once for the whole vector

return select(srgbHi, srgbLo, linearColor <= vec3(0.0031308));

}

// Trowbridge-Reitz(Generalized-Trowbridge-Reitz,GTR)

fn DistributionGTR1(NdotH: f32, roughness: f32) -> f32 {

if (roughness >= 1.0) {

return 1.0/PI;

}

let a2 = roughness * roughness;

let t = 1.0 + (a2 - 1.0)*NdotH*NdotH;

return (a2 - 1.0) / (PI * log(a2) *t);

}

fn DistributionGTR2(NdotH: f32, roughness: f32) -> f32 {

let a2 = roughness * roughness;

let t = 1.0 + (a2 - 1.0) * NdotH * NdotH;

return a2 / (PI * t * t);

}

fn DistributionGGX(N: vec3<f32>, H: vec3<f32>, roughness: f32) -> f32 {

let a = roughness*roughness;

let a2 = a*a;

let NdotH = max(dot(N, H), 0.0);

let NdotH2 = NdotH*NdotH;

let nom = a2;

var denom = (NdotH2 * (a2 - 1.0) + 1.0);

denom = PI * denom * denom;

return nom / max(denom, 0.0000001); // prevent divide by zero for roughness=0.0 and NdotH=1.0

}

fn GeometryImplicit(NdotV: f32, NdotL: f32) -> f32 {

return NdotL * NdotV;

}

// ----------------------------------------------------------------------------

fn GeometrySchlickGGX(NdotV: f32, roughness: f32) -> f32 {

let r = (roughness + 1.0);

let k = (r*r) / 8.0;

let nom = NdotV;

let denom = NdotV * (1.0 - k) + k;

return nom / denom;

}

// ----------------------------------------------------------------------------

fn GeometrySmith(N: vec3<f32>, V: vec3<f32>, L: vec3<f32>, roughness: f32) -> f32 {

let NdotV = max(dot(N, V), 0.0);

let NdotL = max(dot(N, L), 0.0);

let ggx2 = GeometrySchlickGGX(NdotV, roughness);

let ggx1 = GeometrySchlickGGX(NdotL, roughness);

return ggx1 * ggx2;

}

// @param cosTheta is clamp(dot(H, V), 0.0, 1.0)

fn fresnelSchlick(cosTheta: f32, F0: vec3<f32>) -> vec3<f32> {

return F0 + (1.0 - F0) * pow(max(1.0 - cosTheta, 0.0), 5.0);

}

fn fresnelSchlick2(specularColor: vec3<f32>, L: vec3<f32>, H: vec3<f32>) -> vec3<f32> {

return specularColor + (1.0 - specularColor) * pow(1.0 - saturate(dot(L, H)), 5.0);

}

//fresnelSchlick2(specularColor, L, H) * ((SpecularPower + 2) / 8 ) * pow(saturate(dot(N, H)), SpecularPower) * dotNL;

const OneOnLN2_x6 = 8.656171;// == 1/ln(2) * 6 (6 is SpecularPower of 5 + 1)

// dot -> dot(N,V) or

fn fresnelSchlick3(specularColor: vec3<f32>, dot: f32, glossiness: f32) -> vec3<f32> {

return specularColor + (max(vec3(glossiness), specularColor) - specularColor) * exp2(-OneOnLN2_x6 * dot);

}

fn fresnelSchlickWithRoughness(specularColor: vec3<f32>, L: vec3<f32>, N: vec3<f32>, gloss: f32) -> vec3<f32> {

return specularColor + (max(vec3(gloss), specularColor) - specularColor) * pow(1.0 - saturate(dot(L, N)), 5.0);

}

const A = 2.51f;

const B = 0.03f;

const C = 2.43f;

const D = 0.59f;

const E = 0.14f;

fn ACESToneMapping(color: vec3<f32>, adapted_lum: f32) -> vec3<f32> {

let c = color * adapted_lum;

return (c * (A * c + B)) / (c * (C * c + D) + E);

}

//color = color / (color + vec3(1.0));

fn reinhard(v: vec3<f32>) -> vec3<f32> {

return v / (vec3<f32>(1.0) + v);

}

fn reinhard_extended(v: vec3<f32>, max_white: f32) -> vec3<f32> {

let numerator = v * (1.0f + (v / vec3(max_white * max_white)));

return numerator / (1.0f + v);

}

fn luminance(v: vec3<f32>) -> f32 {

return dot(v, vec3<f32>(0.2126f, 0.7152f, 0.0722f));

}

fn change_luminance(c_in: vec3<f32>, l_out: f32) -> vec3<f32> {

let l_in = luminance(c_in);

return c_in * (l_out / l_in);

}

fn reinhard_extended_luminance(v: vec3<f32>, max_white_l: f32) -> vec3<f32> {

let l_old = luminance(v);

let numerator = l_old * (1.0f + (l_old / (max_white_l * max_white_l)));

let l_new = numerator / (1.0f + l_old);

return change_luminance(v, l_new);

}

fn ReinhardToneMapping( color: vec3<f32>, toneMappingExposure: f32 ) -> vec3<f32> {

let c = color * toneMappingExposure;

return saturate( c / ( vec3( 1.0 ) + c ) );

}

// expects values in the range of [0,1]x[0,1], returns values in the [0,1] range.

// do not collapse into a single function per: http://byteblacksmith.com/improvements-to-the-canonical-one-liner-glsl-rand-for-opengl-es-2-0/

const highp_a = 12.9898;

const highp_b = 78.233;

const highp_c = 43758.5453;

fn rand( uv: vec2<f32> ) -> f32 {

let dt = dot( uv.xy, vec2<f32>( highp_a, highp_b ) );

let sn = dt - PI * floor( dt / PI ); // same result as GLSL mod( dt, PI )

return fract(sin(sn) * highp_c);

}

// based on https://www.shadertoy.com/view/MslGR8

fn dithering( color: vec3<f32>, fragCoord: vec2<f32> ) -> vec3<f32> {

//Calculate grid position

let grid_position = rand( fragCoord );

//Shift the individual colors differently, thus making it even harder to see the dithering pattern

var dither_shift_RGB = vec3<f32>( 0.25 / 255.0, -0.25 / 255.0, 0.25 / 255.0 );

//modify shift according to grid position.

dither_shift_RGB = mix( 2.0 * dither_shift_RGB, -2.0 * dither_shift_RGB, grid_position );

//shift the color by dither_shift

return color + dither_shift_RGB;

}

const dis = 700.0;

const disZ = 400.0;

const u_lightPositions = array<vec3<f32>, 4>(

vec3<f32>(-dis, dis, disZ),

vec3<f32>(dis, dis, disZ),

vec3<f32>(-dis, -dis, disZ),

vec3<f32>(dis, -dis, disZ)

);

const colorValue = 300.0;

const u_lightColors = array<vec3<f32>, 4>(

vec3<f32>(colorValue, colorValue, colorValue),

vec3<f32>(colorValue, colorValue, colorValue),

vec3<f32>(colorValue, colorValue, colorValue),

vec3<f32>(colorValue, colorValue, colorValue),

);

fn calcPBRColor3(Normal: vec3<f32>, WorldPos: vec3<f32>, camPos: vec3<f32>) -> vec3<f32> {

var color = vec3<f32>(0.0);

var ao = param.x;

var roughness = param.y;

var metallic = param.z;

var N = normalize(Normal);

var V = normalize(camPos.xyz - WorldPos);

var dotNV = clamp(dot(N, V), 0.0, 1.0);

// calculate reflectance at normal incidence; if dielectric (like plastic) use F0

// of 0.04 and if it's a metal, use the albedo color as F0 (metallic workflow)

var F0 = vec3(0.04);

F0 = mix(F0, albedo.xyz, metallic);

// reflectance equation

var Lo = vec3(0.0);

for (var i: i32 = 0; i < 4; i++) {

// calculate per-light radiance

let L = normalize(u_lightPositions[i].xyz - WorldPos);

let H = normalize(V + L);

let distance = length(u_lightPositions[i].xyz - WorldPos);

let attenuation = 1.0 / (1.0 + 0.001 * distance + 0.0003 * distance * distance);

let radiance = u_lightColors[i].xyz * attenuation;

// Cook-Torrance BRDF

let NDF = DistributionGGX(N, H, roughness);

let G = GeometrySmith(N, V, L, roughness);

//vec3 F = fresnelSchlick(clamp(dot(H, V), 0.0, 1.0), F0);

let F = fresnelSchlick3(F0,clamp(dot(H, V), 0.0, 1.0), 0.9);

//vec3 F = fresnelSchlick3(F0,dotNV, 0.9);

let nominator = NDF * G * F;

let denominator = 4.0 * max(dot(N, V), 0.0) * max(dot(N, L), 0.0);

let specular = nominator / max(denominator, 0.001); // prevent divide by zero for NdotV=0.0 or NdotL=0.0

// kS is equal to Fresnel

let kS = F;

// for energy conservation, the diffuse and specular light can't

// be above 1.0 (unless the surface emits light); to preserve this

// relationship the diffuse component (kD) should equal 1.0 - kS.

var kD = vec3<f32>(1.0) - kS;

// multiply kD by the inverse metalness such that only non-metals

// have diffuse lighting, or a linear blend if partly metal (pure metals

// have no diffuse light).

kD *= 1.0 - metallic;

// scale light by NdotL

let NdotL = max(dot(N, L), 0.0);

// add to outgoing radiance Lo

// note that we already multiplied the BRDF by the Fresnel (kS) so we won't multiply by kS again

Lo += (kD * albedo.xyz / PI + specular) * radiance * NdotL;

}

// ambient lighting (note that the next IBL tutorial will replace

// this ambient lighting with environment lighting).

let ambient = vec3<f32>(0.03) * albedo.xyz * ao;

color = ambient + Lo;

// HDR tonemapping

color = reinhard( color );

// gamma correct

color = pow(color, vec3<f32>(1.0/2.2));

return color;

}

@fragment

fn main(

@location(0) pos: vec4<f32>,

@location(1) uv: vec2<f32>,

@location(2) normal: vec3<f32>,

@location(3) camPos: vec3<f32>

) -> @location(0) vec4<f32> {

var color4 = vec4(calcPBRColor3(normal, pos.xyz, camPos), 1.0);

return color4;

}
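To make the Cook-Torrance terms in `calcPBRColor3` easier to check by hand, here is a minimal CPU-side sketch in TypeScript. It mirrors `DistributionGGX`, `GeometrySchlickGGX`, `GeometrySmith` and `fresnelSchlick` from the shader, but takes the already-clamped dot products as plain numbers and works on one colour channel. The name `specularTerm` and the scalar signatures are assumptions made for illustration. The shader's loop actually calls `fresnelSchlick3`, while this sketch uses the classic `fresnelSchlick` form that the shader defines but leaves commented out in the loop.

```typescript
// GGX / Trowbridge-Reitz normal distribution (mirrors DistributionGGX).
function distributionGGX(nDotH: number, roughness: number): number {
  const a = roughness * roughness;
  const a2 = a * a;
  const d = nDotH * nDotH * (a2 - 1.0) + 1.0;
  // Same guard as the shader: avoid dividing by zero.
  return a2 / Math.max(Math.PI * d * d, 1e-7);
}

// Schlick-GGX geometry term for one direction (mirrors GeometrySchlickGGX).
function geometrySchlickGGX(nDotX: number, roughness: number): number {
  const r = roughness + 1.0;
  const k = (r * r) / 8.0;
  return nDotX / (nDotX * (1.0 - k) + k);
}

// Smith's method: product of the view and light geometry terms.
function geometrySmith(nDotV: number, nDotL: number, roughness: number): number {
  return geometrySchlickGGX(nDotV, roughness) * geometrySchlickGGX(nDotL, roughness);
}

// Schlick Fresnel approximation for a single colour channel.
function fresnelSchlick(cosTheta: number, f0: number): number {
  return f0 + (1.0 - f0) * Math.pow(Math.max(1.0 - cosTheta, 0.0), 5.0);
}

// Hypothetical helper: specular part of the BRDF for one channel, D * G * F / (4 * NdotV * NdotL).
function specularTerm(
  nDotH: number, nDotV: number, nDotL: number, hDotV: number,
  roughness: number, f0: number
): number {
  const numerator =
    distributionGGX(nDotH, roughness) *
    geometrySmith(nDotV, nDotL, roughness) *
    fresnelSchlick(hDotV, f0);
  const denominator = 4.0 * Math.max(nDotV, 0.0) * Math.max(nDotL, 0.0);
  return numerator / Math.max(denominator, 0.001);
}
```

With every dot product equal to 1 and `roughness = 1`, the distribution term reduces to `1 / PI` and the geometry term to 1, so `specularTerm` returns `f0 / (4 * PI)`. That gives a quick sanity check when porting the shader.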

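The last two steps of `calcPBRColor3` are Reinhard tone mapping followed by gamma encoding with exponent `1.0 / 2.2`. The TypeScript sketch below reproduces those two steps on the CPU so their combined effect on HDR values can be inspected. The `Vec3` tuple type and the names `gammaEncode` and `toDisplay` are assumptions introduced for this illustration; they do not come from the engine.

```typescript
// Illustrative tuple type standing in for vec3<f32>.
type Vec3 = [number, number, number];

// Reinhard operator: maps [0, +inf) into [0, 1) per channel (mirrors reinhard()).
function reinhard(v: Vec3): Vec3 {
  return [v[0] / (1 + v[0]), v[1] / (1 + v[1]), v[2] / (1 + v[2])];
}

// Approximate linear-to-sRGB encoding using a plain power curve.
function gammaEncode(v: Vec3, gamma: number = 2.2): Vec3 {
  const e = 1 / gamma;
  return [Math.pow(v[0], e), Math.pow(v[1], e), Math.pow(v[2], e)];
}

// Hypothetical helper: tone map first, then gamma encode, in the shader's order.
function toDisplay(hdr: Vec3): Vec3 {
  return gammaEncode(reinhard(hdr));
}

// Example: a linear HDR colour with one channel well above 1.0.
console.log(toDisplay([1.0, 3.0, 0.0]));
```

Applying tone mapping before gamma encoding matters: Reinhard compresses linear radiance, and the power curve then prepares the compressed value for display.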
This example is built on the rendering system described above. Its TypeScript source follows (import statements are omitted from the listing):

export class SimplePBRTest {

private mRscene = new RendererScene();

geomData = new GeomDataBuilder();

initialize(): void {

console.log("SimplePBRTest::initialize() ...");

this.initEvent();

this.initScene();

}

private initEvent(): void {

const rc = this.mRscene;

rc.addEventListener(MouseEvent.MOUSE_DOWN, this.mouseDown);

new MouseInteraction().initialize(rc, 0, false).setAutoRunning(true);

}

private mouseDown = (evt: MouseEvent): void => {};

private createMaterial(shdSrc: WGRShderSrcType, color?: Color4, arm?: number[]): WGMaterial {

color = color ? color : new Color4();

let pipelineDefParam = {

depthWriteEnabled: true,

blendModes: [] as string[]

};

const material = new WGMaterial({

shadinguuid: "simple-pbr-materialx",

shaderCodeSrc: shdSrc,

pipelineDefParam

});

let albedoV = new WGRStorageValue({ data: new Float32Array([color.r, color.g, color.b, 1]) });

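// arm: ao, roughness, metallic

// arm is optional, so fall back to defaults when it is omitted (these default values are an assumption)

arm = arm ? arm : [1.0, 0.5, 0.0];

let armV = new WGRStorageValue({ data: new Float32Array([arm[0], arm[1], arm[2], 1]) });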

material.uniformValues = [albedoV, armV];

return material;

}

private createGeom(rgd: GeomRDataType, normalEnabled = false): WGGeometry {

const geometry = new WGGeometry()

.addAttribute({ position: rgd.vs })

.addAttribute({ uv: rgd.uvs })

.setIndices(rgd.ivs);

if (normalEnabled) {

geometry.addAttribute({ normal: rgd.nvs });

}

return geometry;

}

private initScene(): void {

const rc = this.mRscene;

const geometry = this.createGeom(this.geomData.createSphere(50), true);

const shdSrc = {

vertShaderSrc: { code: vertWGSL, uuid: "vertShdCode" },

fragShaderSrc: { code: fragWGSL, uuid: "fragShdCode" }

};

let tot = 7;

const size = new Vector3(150, 150, 150);

const pos = new Vector3().copyFrom(size).scaleBy(-0.5 * (tot - 1));

for (let i = 0; i < tot; ++i) {

for (let j = 0; j < tot; ++j) {

// arm: ao, roughness, metallic

let arm = [1.5, (i / tot) * 0.95 + 0.05, (j / tot) * 0.95 + 0.05];

let material = this.createMaterial(shdSrc, new Color4(0.5, 0.0, 0.0), arm);

let entity = new Entity3D({ materials: [material], geometry });

entity.transform.setPosition(new Vector3().setXYZ(i * size.x, j * size.y, size.z).addBy(pos));

rc.addEntity(entity);

}

}

}

run(): void {

this.mRscene.run();

}

}
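The nested loop in `initScene` assigns each sphere a roughness based on its column index `i` and a metallic value based on its row index `j`, and centres the grid around the origin in x and y. The standalone sketch below reproduces that arithmetic without the engine. `armForCell` and `gridPosition` are hypothetical helper names, and the position formula assumes that `Vector3.scaleBy` scales every component and `addBy` adds component-wise, which the listing does not show.

```typescript
// [ao, roughness, metallic] for cell (i, j) of a tot x tot grid, as computed in initScene.
function armForCell(i: number, j: number, tot: number): [number, number, number] {
  return [1.5, (i / tot) * 0.95 + 0.05, (j / tot) * 0.95 + 0.05];
}

// Position of cell (i, j), assuming component-wise scaleBy/addBy semantics.
function gridPosition(i: number, j: number, tot: number, size: number): [number, number, number] {
  const offset = -0.5 * (tot - 1) * size; // shifts the grid so it is centred in x and y
  return [i * size + offset, j * size + offset, size + offset];
}

// Print the roughness of each column for the 7 x 7 grid used in the example.
const tot = 7;
for (let i = 0; i < tot; ++i) {
  console.log(i, armForCell(i, 0, tot)[1].toFixed(4));
}
```

Because the index is divided by `tot` rather than `tot - 1`, roughness and metallic run from 0.05 up to about 0.864 in this grid and never reach 1.0.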