简介

记录Flume采集kafka数据到Hdfs。

配置文件

# vim job/kafka_to_hdfs_db.conf

a1.sources = r1

a1.channels = c1

a1.sinks = k1

a1.sources.r1.type = org.apache.flume.source.kafka.KafkaSource

#每一批有5000条的时候写入channel

a1.sources.r1.batchSize = 5000

#2秒钟写入channel(也就是如果没有达到5000条那么时间过了2秒拉去一次)

a1.sources.r1.batchDurationMillis = 2000

a1.sources.r1.kafka.bootstrap.servers = ip地址:9092,ip地址:9092,ip地址:9092

#指定对应的主题

# a1.sources.r1.kafka.topics.regex = ^topic[0-9]$ ---这里如果主题都是 数据库_表的情况那么就可是使用正则 ^数据库名称_,然后写拦截器的时候在header添加获取database的信息和表的信息

#写到头部,用%{database}_%{tablename}_inc 的形式进行写入到hdfs,达到好的扩展性。

a1.sources.r1.kafka.topics = cart_info,comment_info

#消费者主相同的的多个flume能够提高消费的吞吐量

a1.sources.r1.kafka.consumer.group.id = abs_flume

#在event头部添加一个topic的变量,/origin_data/gmall/db/%{topic}_inc/%Y-%m-%d,%{topic},key 为topic,value为消费的主题信息。

a1.sources.r1.setTopicHeader = true

a1.sources.r1.topicHeader = topic

a1.sources.r1.interceptors = i1

#这个拦截器里面可以在头部设置变量用来读取。%{头部的key}

a1.sources.r1.interceptors.i1.type = com.atguigu.flume.interceptor.db.TimestampInterceptor$Builder

#在最早的地方进行消费,如果有对应的消费者组了,那么就从最新的地方进行消费。

a1.sources.r1.kafka.consumer.auto.offset.reset=earliest

a1.channels.c1.type = file

a1.channels.c1.checkpointDir = /opt/module/flume/checkpoint/behavior2

a1.channels.c1.dataDirs = /opt/module/flume/data/behavior2/

a1.channels.c1.maxFileSize = 2146435071

a1.channels.c1.capacity = 1123456

a1.channels.c1.keep-alive = 6

## sink1

a1.sinks.k1.type = hdfs

a1.sinks.k1.hdfs.path = /origin_data/gmall/db/%{topic}_inc/%Y-%m-%d

a1.sinks.k1.hdfs.filePrefix = db

a1.sinks.k1.hdfs.round = false

#滚动的时间,这里是5分钟,如果不设置那么文件大小没有达到128m就不会回滚文件,不回滚文件就读取不到值。

a1.sinks.k1.hdfs.rollInterval = 300

a1.sinks.k1.hdfs.rollSize = 134217728

a1.sinks.k1.hdfs.rollCount = 0

a1.sinks.k1.hdfs.fileType = CompressedStream

a1.sinks.k1.hdfs.codeC = gzip

## 拼装

a1.sources.r1.channels = c1

a1.sinks.k1.channel= c1

拦截器(零点漂移问题)

主要是自定义下Flume读取event头部的时间。

TimestampInterceptor

import com.alibaba.fastjson.JSONObject;

import org.apache.flume.Context;

import org.apache.flume.Event;

import org.apache.flume.interceptor.Interceptor;

import java.nio.charset.StandardCharsets;

import java.util.List;

import java.util.Map;

public class TimestampInterceptor implements Interceptor {

@Override

public void initialize() {

}

@Override

public Event intercept(Event event) {

Map<String, String> headers = event.getHeaders();

String log = new String(event.getBody(), StandardCharsets.UTF_8);

JSONObject jsonObject = JSONObject.parseObject(log);

Long ts = jsonObject.getLong("ts");

//Maxwell输出的数据中的ts字段时间戳单位为秒,Flume HDFSSink要求单位为毫秒

String timeMills = String.valueOf(ts * 1000);

headers.put("timestamp", timeMills);

return event;

}

@Override

public List<Event> intercept(List<Event> events) {

for (Event event : events) {

intercept(event);

}

return events;

}

@Override

public void close() {

}

public static class Builder implements Interceptor.Builder {

@Override

public Interceptor build() {

return new TimestampInterceptor();

}

@Override

public void configure(Context context) {

}

}

}将打好的包放入/opt/module/flume/lib文件夹下

[root@ lib]$ ls | grep interceptor

flume-interceptor-1.0-SNAPSHOT-jar-with-dependencies.jar

Flume启停文件

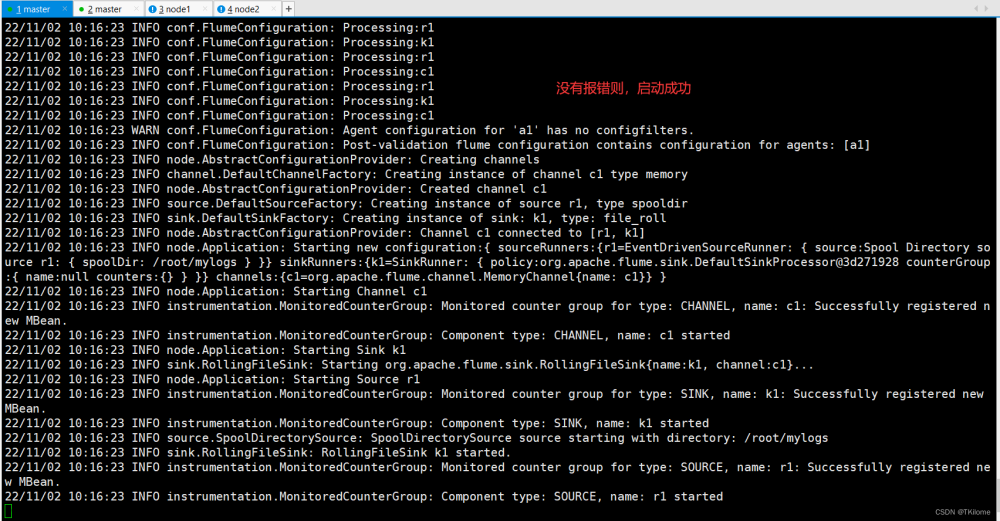

bin/flume-ng agent -n a1 -c conf/ -f job/kafka_to_hdfs_db.conf -Dflume.root.logger=info,console

#!/bin/bash

case $1 in

"start")

echo " --------启动 hadoop104 业务数据flume-------"

ssh hadoop104 "nohup /opt/module/flume/bin/flume-ng agent -n a1 -c /opt/module/flume/conf -f /opt/module/flume/job/kafka_to_hdfs_db.conf >/dev/null 2>&1 &"

;;

"stop")

echo " --------停止 hadoop104 业务数据flume-------"

ssh hadoop104 "ps -ef | grep kafka_to_hdfs_db.conf | grep -v grep |awk '{print \$2}' | xargs -n1 kill"

;;

esac生产实践

配置下flume的jvm

vi flume-env.shexport JAVA_OPTS="-Xms2048m -Xmx2048m"a1.sources = r1

a1.channels = c1

a1.sinks = k1

a1.sources.r1.type = org.apache.flume.source.kafka.KafkaSource

a1.sources.r1.batchSize = 3000

a1.sources.r1.batchDurationMillis = 2000

a1.sources.r1.kafka.bootstrap.servers = ip:9092,ip:9092

a1.sources.r1.kafka.topics = 表名,表名

a1.sources.r1.kafka.consumer.group.id = flume

a1.sources.r1.setTopicHeader = true

a1.sources.r1.topicHeader = topic

a1.sources.r1.interceptors = i1

a1.sources.r1.interceptors.i1.type = com.bigdata.bigdatautil.TimestampInterceptor$Builder

#如果是相同的消费者组那么首次是最开始的时候,后面在启动是末尾消费

a1.sources.r1.kafka.consumer.auto.offset.reset=earliest

a1.channels.c1.type = file

a1.channels.c1.checkpointDir = /home/bigdata/module/apache-flume-1.9.0-bin/checkpoint

a1.channels.c1.dataDirs = /home/bigdata/module/apache-flume-1.9.0-bin/data

a1.channels.c1.maxFileSize = 2146435071

a1.channels.c1.capacity = 1123456

a1.channels.c1.keep-alive = 6

## sink1

a1.sinks.k1.type = hdfs

a1.sinks.k1.hdfs.path = /user/hive/warehouse/abs/ods/ods_%{table_name}_inc/%Y-%m-%d

a1.sinks.k1.hdfs.round = false

#这里设置5分钟滚动一次文件,如果数据量不太大,建议增大数据,减少小文件的生成

a1.sinks.k1.hdfs.rollInterval = 300

a1.sinks.k1.hdfs.rollSize = 134217728

a1.sinks.k1.hdfs.rollCount = 0

a1.sinks.k1.hdfs.fileType = CompressedStream

a1.sinks.k1.hdfs.codeC = gzip

## 拼装

a1.sources.r1.channels = c1

a1.sinks.k1.channel= c1

拦截器

import com.alibaba.fastjson.JSONObject;

import org.apache.flume.Context;

import org.apache.flume.Event;

import org.apache.flume.interceptor.Interceptor;

import java.nio.charset.StandardCharsets;

import java.util.List;

import java.util.Map;

/**

* "database": "数据库",

* "table": "表明",

* "type": "bootstrap-start",

* "ts": 时间戳秒,

* "data": {}

*/

public class TimestampInterceptor implements Interceptor {

@Override

public void initialize() {

}

@Override

public Event intercept(Event event) {

Map<String, String> headers = event.getHeaders();

String log = new String(event.getBody(), StandardCharsets.UTF_8);

JSONObject jsonObject = JSONObject.parseObject(log);

Long ts = jsonObject.getLong("ts");

String database_name = jsonObject.getString("database");

String table_name = jsonObject.getString("table");

//Maxwell输出的数据中的ts字段时间戳单位为秒,Flume HDFSSink要求单位为毫秒

String timeMills = String.valueOf(ts * 1000);

headers.put("timestamp", timeMills);

headers.put("database_name",database_name);

headers.put("table_name",table_name);

return event;

}

@Override

public List<Event> intercept(List<Event> events) {

for (Event event : events) {

intercept(event);

}

return events;

}

@Override

public void close() {

}

public static class Builder implements Interceptor.Builder {

@Override

public Interceptor build() {

return new TimestampInterceptor();

}

@Override

public void configure(Context context) {

}

}

}

集群启停文件

#!/bin/bash

case $1 in

"start")

echo " --------启动 abs3 flume-------"

ssh master2 "nohup /home/bigdata/module/apache-flume-1.9.0-bin/bin/flume-ng agent -n a1 -c /home/bigdata/module/apache-flume-1.9.0-bin/conf -f /home/bigdata/module/apache-flume-1.9.0-bin/conf/kafka_to_hdfs.conf >/dev/null 2>&1 &"

;;

"stop")

echo " --------启动 关闭 flume-------"

ssh master2 "ps -ef | grep kafka_to_hdfs.conf | grep -v grep |awk '{print \$2}' | xargs -n1 kill"

;;

esac

上面配置消费的形式是earliest,如果重新启动以后,他会从最新的位置开始消费,如果消费者组的名称有改变,那么就会从最开始的地方开始消费。

上面的集群脚本可以在要修改配置前,停止flume服务,停止以后,因为使用的是file的channel并且flume消费kafka,到hdfs,本身有事物保证,所以不会有数据丢失的问题,可能会有重复信息的问题。

处理异常情况问题

#查看所有的消费者组

bin/kafka-consumer-groups.sh --bootstrap-server ip:9092,ip:9092,ip:9092 --list

#查看对应消费者组的情况

bin/kafka-consumer-groups.sh --bootstrap-server ip:9092,ip:9092,ip:9092 --describe --group abs3_flume

#删除对应的消费者组

bin/kafka-consumer-groups.sh --bootstrap-server ip:9092,ip:9092,ip:9092 --delete --group abs_flume

#然后删除flume的checkoutpoint,和data数据重新消费。

#由于商城信息数据量不是特别大,这里每20分钟滚动一次文件。避免过多的小文件。(测试消费kafka数据1000多万秒消费)

a1.sinks.k1.hdfs.rollInterval = 1200

a1.sinks.k1.hdfs.rollSize = 134217728

a1.sinks.k1.hdfs.rollCount = 0相关参考文章文章来源:https://www.toymoban.com/news/detail-739557.html

Flume之 各种 Channel 的介绍及参数解析_flume的channel类型有哪些_阿浩_的博客-CSDN博客文章来源地址https://www.toymoban.com/news/detail-739557.html

到了这里,关于Flume实战篇-采集Kafka到hdfs的文章就介绍完了。如果您还想了解更多内容,请在右上角搜索TOY模板网以前的文章或继续浏览下面的相关文章,希望大家以后多多支持TOY模板网!