java通过kerberos权限认证集成hive,并操作hive实现hive库和表、分区表的增删查等功能

1、pom文件中引入hive包

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>2.7.3</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>2.7.3</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>2.7.3</version>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-jdbc</artifactId>

<version>2.1.0</version>

</dependency>

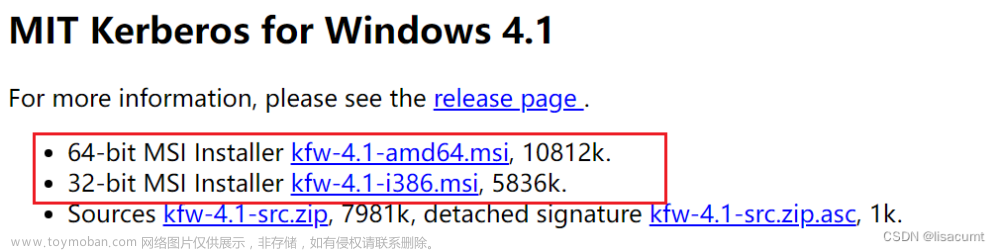

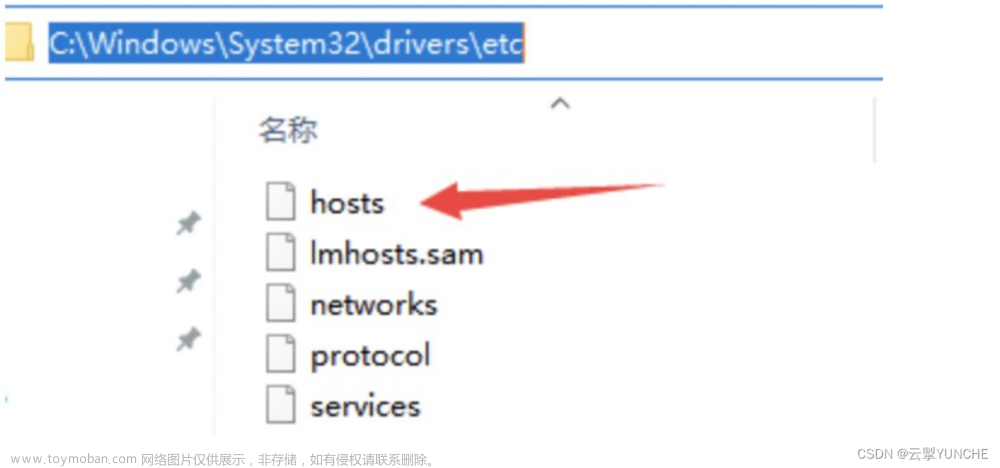

2、从集群中下载认证过可以登录的keytab文件,以及krb5.conf文件还有core-site.xml、hdfs-site.xml、hive-site.xml、yarn-site.xml放到项目的resources目录下

(xml文件按照自己项目需要下载) 文章来源:https://www.toymoban.com/news/detail-744965.html

文章来源:https://www.toymoban.com/news/detail-744965.html

3、代码实现文章来源地址https://www.toymoban.com/news/detail-744965.html

package com.example.demo.hive;

import com.beust.jcommander.internal.Lists;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.security.UserGroupInformation;

import org.apache.logging.log4j.LogManager;

import org.apache.logging.log4j.Logger;

import java.io.IOException;

import java.sql.*;

import java.text.SimpleDateFormat;

import java.util.ArrayList;

import java.util.Date;

import java.util.LinkedHashMap;

import java.util.List;

public class HiveUtil {

private final static Logger logger = LogManager.getLogger(HiveUtil.class);

private static SimpleDateFormat sdf = new SimpleDateFormat("yyyy-MM");

private static Connection connection = null;

public static void main(String[] args) {

connection = getConnection();

//createDatabase();//创建数据库

//dropDatabase();//删除数据库

//createTable();//创建表

//dropTable();//删除表

//insertTableData();//插入数据

descTable();//查看表结构

//getTableData();//查询表数据

//loadData();//导入数据

/* List<LinkedHashMap<String, Object>> tables = querySql("show databases");

for (LinkedHashMap<String, Object> table : tables) {

String s = JSONObject.toJSONString(table);

System.out.println(s);

}*/

}

public static Connection getConnection() {

System.setProperty("java.security.krb5.conf", "src/main/resources/krb5.conf");

Configuration config = new Configuration();

UserGroupInformation.setConfiguration(config);

try {

UserGroupInformation.loginUserFromKeytab("hive", "src/main/resources/hive.keytab");

String driver = "org.apache.hive.jdbc.HiveDriver";

String jdbc = "jdbc:hive2://192.168.1.110:10000/default;principal=hive/hive@HADOOP.COM";

String user = "hive";

String pass = "123456";

try {

Class.forName(driver);

} catch (ClassNotFoundException e) {

e.printStackTrace();

}

try {

connection = DriverManager.getConnection(jdbc, user, pass);

System.out.println("连接成功");

} catch (SQLException e) {

e.printStackTrace();

}

} catch (IOException e) {

e.printStackTrace();

}

return connection;

}

public static List<LinkedHashMap<String, Object>> querySql(String sql) {

// 执行sql

Statement statement = null;

ResultSet resultSet = null;

try {

statement = connection.createStatement();

resultSet = statement.executeQuery(sql);

return buildListMap(resultSet);

} catch (SQLException e) {

e.printStackTrace();

} finally {

// 关闭

close(resultSet, statement);

}

return null;

}

/**

* @Description 功能描述:将resultSet构造为List<Map>

**/

public static List<LinkedHashMap<String, Object>> buildListMap(ResultSet resultSet) throws SQLException {

if (resultSet == null) {

return Lists.newArrayList();

}

List<LinkedHashMap<String, Object>> resultList = new ArrayList<>();

// 获取元数据

ResultSetMetaData metaData = resultSet.getMetaData();

while (resultSet.next()) {

// 获取列数

int columnCount = metaData.getColumnCount();

LinkedHashMap<String, Object> map = new LinkedHashMap<>();

for (int i = 0; i < columnCount; i++) {

String columnName = metaData.getColumnName(i + 1);

// 过滤掉查询的结果包含序号的

if ("mm.row_num_01".equalsIgnoreCase(columnName)

|| "row_num_01".equalsIgnoreCase(columnName)) {

continue;

}

// 去除hive查询结果的mm.别名前缀

if (columnName.startsWith("mm.")) {

columnName = columnName.substring(columnName.indexOf(".") + 1);

}

Object object = resultSet.getObject(columnName);

map.put(columnName, object);

}

resultList.add(map);

}

return resultList;

}

/**

* 创建hive数据库

*/

public static void createDatabase() {

//创建hive库时指定location

/* create database if not exists hive_user_location

location "/user/hive/hive_location_db"

with dbproperties ('createby'='linda');*/

String sql = "create database if not exists test_db";

System.out.println("创建数据库,脚本:"+sql);

try (Statement statement = connection.createStatement()) {

statement.execute(sql);

System.out.println("创建数据库成功");

} catch (SQLException e) {

e.printStackTrace();

}

}

/**

* 创建表

*/

public static void createTable() {

//创建分区表

//String sql = "create table user_partition(deptno int, dname string) partitioned by (month string) row format delimited fields terminated by '\t'";

String sql = "create table user_tb(id int, name string) row format delimited fields terminated by ','";

logger.info("创建表,脚本:{}", sql);

try (Statement statement = connection.createStatement()) {

statement.execute(sql);

logger.info("创建表成功");

} catch (SQLException e) {

logger.error("创建表出错", e);

}

}

/**

* 查看表结构

*/

public static void descTable() {

/***

* 查看表已有分区信息

* show partitions 表名;

*/

String sql = "desc formatted user_partition";// 查看分区表结构:desc formatted user_tb;

System.out.println("查看表结构,脚本:"+sql);

try {

Statement statement = connection.createStatement();

ResultSet rs = statement.executeQuery(sql);

while (rs.next()) {

logger.info("字段名:{},类型:{}",rs.getString(1),rs.getString(2));

}

} catch (SQLException e) {

logger.error("查看表结构出错", e);

}

}

/**

* 查询表数据

*/

public static void getTableData() {

Date date = new Date();

String month = sdf.format(date);

//查看分区表数据

//String sql = "select * from user_partition where month='"+month+"'";

String sql = "select * from user_tb where id=1";

System.out.println("查看表数据,脚本:"+sql);

try {

Statement statement = connection.createStatement();

ResultSet rs = statement.executeQuery(sql);

while (rs.next()) {

logger.info("字段名:{},数据:{}",rs.getString(1),rs.getString(2));

}

} catch (SQLException e) {

logger.error("查看表数据出错", e);

}

}

/**

* 删除表

*/

public static void dropTable() {

String sql = "drop table if exists user_tb";

logger.info("删除表,脚本:{}", sql);

try (Statement statement = connection.createStatement()) {

statement.execute(sql);

logger.info("删除表成功");

} catch (SQLException e) {

logger.error("删除表出错", e);

}

}

/**

* 删除数据库

*/

public static void dropDatabase() {

String sql = "drop database if exists test_db";

logger.info("删除数据库,脚本:{}", sql);

try (Statement statement = connection.createStatement()) {

statement.execute(sql);

logger.info("删除数据库成功");

} catch (SQLException e) {

logger.error("删除数据库出错", e);

}

}

/**

* 插入数据

*/

public static void insertTableData() {

Date date = new Date();

String month = sdf.format(date);

//插入分区表数据

//String sql = "insert into table user_partition partition(month='"+month+"') values (1,'分区数据')";

String sql = "insert into table user_tb values (1,'张三')";

logger.info("插入数据,脚本:{}", sql);

try (Statement statement = connection.createStatement()) {

statement.execute(sql);

logger.info("插入数据成功");

} catch (SQLException e) {

logger.error("插入数据出错", e);

}

}

/**

* 导入数据,data.txt中的数据为格式为:<br>

* 1,张三<br>

* 2,李四

*/

public static void loadData() {

String sql = "load data local inpath '/home/data.txt' overwrite into table user_tb";

logger.info("导入数据,脚本:{}", sql);

try (Statement statement = connection.createStatement()) {

statement.execute(sql);

logger.info("导入数据成功");

} catch (SQLException e) {

logger.error("导入数据出错", e);

}

}

/**

* 关闭对象 传入多个时注意顺序, 需要先关闭哪个就传在参数前面

* @param objs 对象动态数组

*/

public static void close(Object... objs) {

if (objs == null || objs.length == 0) {

return;

}

for (Object obj : objs) {

if (obj instanceof Statement) {

try {

((Statement) obj).close();

} catch (Exception e) {

e.printStackTrace();

}

}

if (obj instanceof ResultSet) {

try {

((ResultSet) obj).close();

} catch (Exception e) {

e.printStackTrace();

}

}

if (obj instanceof Connection) {

try {

((Connection) obj).close();

} catch (Exception e) {

e.printStackTrace();

}

}

}

}

}

到了这里,关于Java通过kerberos权限认证集成hive的文章就介绍完了。如果您还想了解更多内容,请在右上角搜索TOY模板网以前的文章或继续浏览下面的相关文章,希望大家以后多多支持TOY模板网!