Matlab实现Transformer 模型

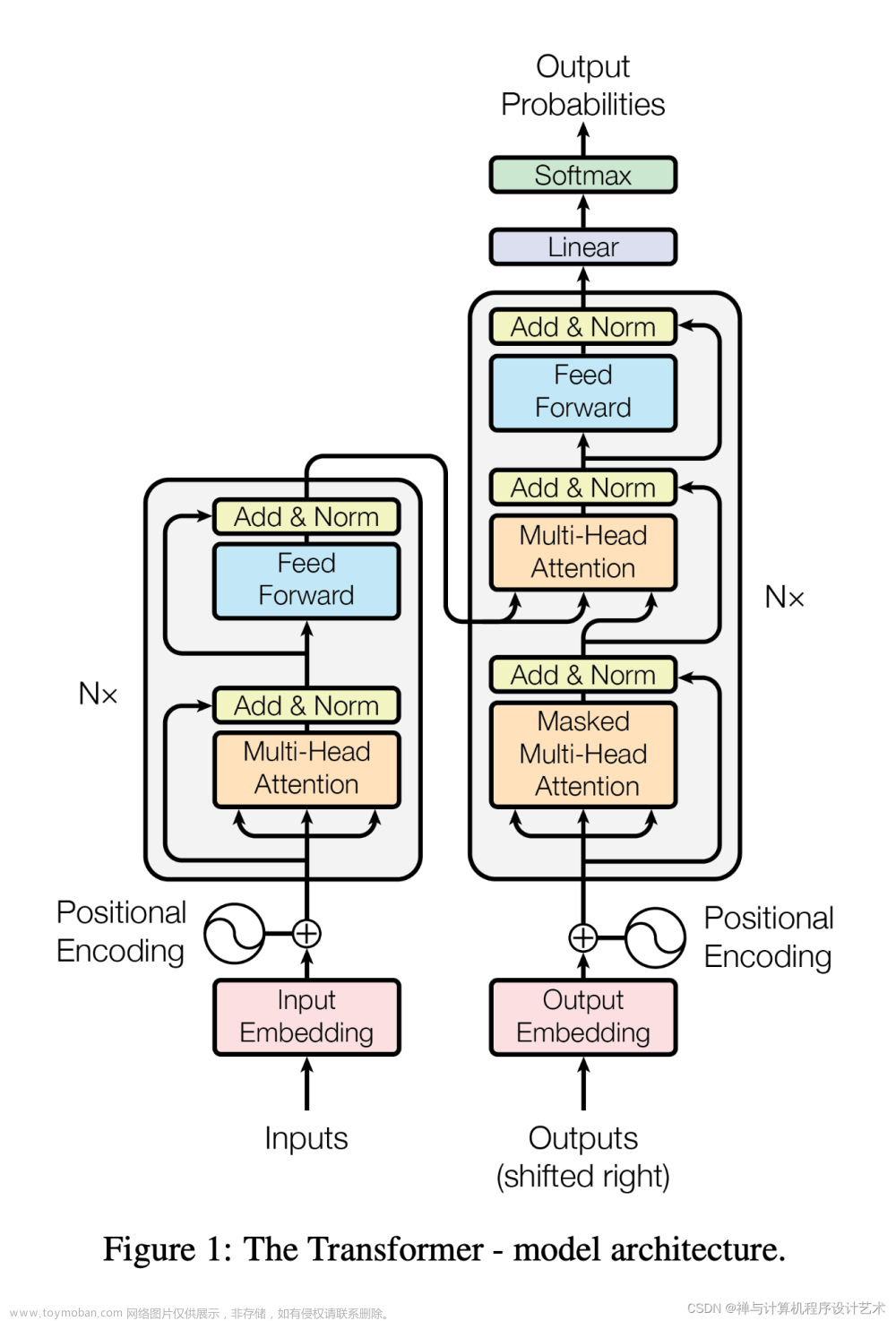

Transformer由论文《Attention is All You Need》提出,现在是谷歌云TPU推荐的参考模型。论文相关的Tensorflow的代码可以从GitHub获取,其作为Tensor2Tensor包的一部分。哈佛的NLP团队也实现了一个基于PyTorch的版本,并注释该论文。对原理感兴趣的可以去查找相关论文和博客学习一下,本博客旨在基于Matlab实现Transformer 模型文章来源:https://www.toymoban.com/news/detail-751973.html

实现代码如下:

MATLAB 实现 Transformer 模型,包括用于多头注意力和前馈层的模块,可实现高级序列建模和特征提取。该代码可用于各种任务,例如自然语言处理和时间序列分析。文章来源地址https://www.toymoban.com/news/detail-751973.html

classdef Transformer < matlab.mixin.Copyable

properties

embedding

encoderLayers

end

methods

function obj = Transformer(inputDim, hiddenDim, numLayers, numHeads)

obj.embedding = embeddingLayer(hiddenDim, inputDim);

obj.encoderLayers = repmat(EncoderLayer(hiddenDim, numHeads), 1, numLayers);

end

function encoded = forward(obj, x)

embedded = obj.embedding(x);

encoded = embedded;

for i = 1:numel(obj.encoderLayers)

encoded = obj.encoderLayers(i).forward(encoded);

end

end

end

end

classdef EncoderLayer < matlab.mixin.Copyable

properties

multiheadAttention

feedForward

end

methods

function obj = EncoderLayer(hiddenDim, numHeads)

obj.multiheadAttention = MultiheadAttention(hiddenDim, numHeads);

obj.feedForward = FeedForward(hiddenDim);

end

function encoded = forward(obj, x)

attended = obj.multiheadAttention.forward(x, x, x);

encoded = obj.feedForward.forward(attended);

end

end

end

classdef MultiheadAttention < matlab.mixin.Copyable

properties

numHeads

headDim

qLinear

kLinear

vLinear

outLinear

end

methods

function obj = MultiheadAttention(hiddenDim, numHeads)

obj.numHeads = numHeads;

obj.headDim = hiddenDim / numHeads;

obj.qLinear = fullyConnectedLayer(hiddenDim);

obj.kLinear = fullyConnectedLayer(hiddenDim);

obj.vLinear = fullyConnectedLayer(hiddenDim);

obj.outLinear = fullyConnectedLayer(hiddenDim);

end

function attended = forward(obj, query, key, value)

batchSize = size(query, 1);

q = obj.qLinear.forward(query);

k = obj.kLinear.forward(key);

v = obj.vLinear.forward(value);

q = reshape(q, [batchSize, obj.numHeads, obj.headDim]);

k = reshape(k, [batchSize, obj.numHeads, obj.headDim]);

v = reshape(v, [batchSize, obj.numHeads, obj.headDim]);

scores = (q * k') / sqrt(obj.headDim);

attention = softmax(scores, 'dim', -1);

attended = attention * v';

attended = reshape(attended, [batchSize, obj.headDim * obj.numHeads]);

attended = obj.outLinear.forward(attended);

end

end

end

classdef FeedForward < matlab.mixin.Copyable

properties

linear1

linear2

end

methods

function obj = FeedForward(hiddenDim)

obj.linear1 = fullyConnectedLayer(hiddenDim * 4);

obj.linear2 = fullyConnectedLayer(hiddenDim);

end

function x = forward(obj, x)

x = obj.linear1.forward(x);

x = relu(x);

x = obj.linear2.forward(x);

end

end

end

% Usage example

inputDim = 1000;

hiddenDim = 256;

numLayers = 6;

numHeads = 8;

model = Transformer(inputDim, hiddenDim, numLayers, numHeads);

inputData = [1, 2, 3, 4, 5; 6, 7, 8, 9, 10]; % Example input data

output = model.forward(inputData);

disp(size(output));

到了这里,关于Matlab实现Transformer 模型的文章就介绍完了。如果您还想了解更多内容,请在右上角搜索TOY模板网以前的文章或继续浏览下面的相关文章,希望大家以后多多支持TOY模板网!