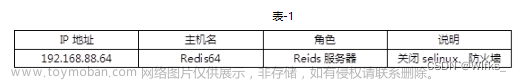

1. Cluster Architecture

Before deploying, read the article "03 Temporal in Detail" carefully.

2. Temporal Server Deployment Process

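How it works: before startup, dockerize first renders the configuration file that will actually be used, and only then is the Server itself started.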

Self-hosted Temporal Cluster guide | Temporal Documentation

Create the application

App: temporal-eco (created as a Yunxiao application)

Databases: temporal_eco (main store) and temporal_eco_vis (visibility store)

Database schema creation and data initialization

Environment variables involved:

TEMPORAL_STORE_DB_NAME

TEMPORAL_STORE_DB_HOST

TEMPORAL_STORE_DB_PORT

TEMPORAL_STORE_DB_USER

TEMPORAL_STORE_DB_PWD

TEMPORAL_VISIBILITY_STORE_DB_NAME

TEMPORAL_VISIBILITY_STORE_DB_HOST

TEMPORAL_VISIBILITY_STORE_DB_PORT

TEMPORAL_VISIBILITY_STORE_DB_USER

TEMPORAL_VISIBILITY_STORE_DB_PWD

References: link and the v1.12.0 Makefile


Cluster deployment

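When creating the services, choose the gRPC service type for frontend, history, and matching. Choose "other" for worker, because after a certain version it no longer exposes a gRPC port. Client services then connect to frontend over gRPC through the company's service discovery.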

Number of shards

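Per the reference link, the shard count is set to 4k = 4096. Once chosen, the number of shards cannot be changed later; it is the only configuration that cannot be changed. From the Temporal team: "Shards are very lightweight. There are no real implications on the cost of clusters. We (Temporal) have tested the system up to 16k shards."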
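A short Go sketch shows why the shard count is fixed. Every workflow is mapped to a shard by hashing its namespace ID and workflow ID modulo the shard count, so changing the count moves existing workflows to different shards.

To our understanding, Temporal uses FarmHash `Fingerprint32` over `namespaceID + "_" + workflowID`, with 1-based shard IDs. This sketch swaps in the standard library's FNV-1a hash instead. The namespace and workflow IDs are made up.

```go
package main

import (
	"fmt"
	"hash/fnv"
)

// numHistoryShards is the value chosen above; it cannot change later.
const numHistoryShards = 4096

// shardFor maps a (namespaceID, workflowID) pair to a shard in [1, numShards].
// Temporal hashes namespaceID + "_" + workflowID with FarmHash Fingerprint32;
// FNV-1a stands in here only because it ships with the standard library.
func shardFor(namespaceID, workflowID string, numShards uint32) uint32 {
	h := fnv.New32a()
	h.Write([]byte(namespaceID + "_" + workflowID))
	return h.Sum32()%numShards + 1
}

// movedFraction estimates the share of workflows whose shard would change
// if the shard count went from oldN to newN.
func movedFraction(oldN, newN uint32, samples int) float64 {
	moved := 0
	for i := 0; i < samples; i++ {
		wf := fmt.Sprintf("order-%d", i)
		if shardFor("default", wf, oldN) != shardFor("default", wf, newN) {
			moved++
		}
	}
	return float64(moved) / float64(samples)
}

func main() {
	fmt.Println("shard for order-42:", shardFor("default", "order-42", numHistoryShards))
	fmt.Printf("moved if 4096 -> 8192: %.1f%%\n",
		100*movedFraction(numHistoryShards, 2*numHistoryShards, 100000))
}
```

When the count doubles, `h mod 2N` differs from `h mod N` whenever `h / N` is odd. Roughly half of all workflows would therefore land on a different shard, which is why the value has to be sized for the maximum load upfront.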

Component counts and resources

Recommended values (v), per the reference link:

| Component | Count | Recommended CPU (cores) | Recommended memory |
|---|---|---|---|
| frontend | 3 | 4 | 4Gi |
| history | 5 | 8 | 8Gi |
| matching | 3 | 4 | 4Gi |
| worker | 2 | 4 | 4Gi |

Test environment counts and resources

| Component | Count = floor(v/2) | CPU (cores) = floor(v/4) | Memory = floor(v/4) |
|---|---|---|---|
| frontend | 1 | 1 | 1Gi |
| history | 2 | 2 | 2Gi |
| matching | 1 | 1 | 1Gi |
| worker | 1 | 1 | 1Gi |

Production environment counts and resources

| Component | Count = floor(v/2) | CPU (cores) = floor(v/2) | Memory = floor(v/2) |
|---|---|---|---|
| frontend | 1 | 2 | 2Gi |
| history | 2 | 4 | 4Gi |
| matching | 1 | 2 | 2Gi |
| worker | 1 | 2 | 2Gi |
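The test and production tables are derived mechanically from the recommended values (v). The small Go sketch below performs that derivation, with the divisors taken from the table headers. The type and function names are illustrative.

```go
package main

import "fmt"

// sizing is one row of a sizing table.
type sizing struct {
	Role      string
	Count     int
	CPU       int // cores
	MemoryGiB int
}

// recommended is the table of recommended values (v).
var recommended = []sizing{
	{"frontend", 3, 4, 4},
	{"history", 5, 8, 8},
	{"matching", 3, 4, 4},
	{"worker", 2, 4, 4},
}

// scale derives an environment table: Count = floor(v/countDiv) and
// CPU = Memory = floor(v/resDiv). Go's integer division truncates, which
// equals floor for these non-negative values.
func scale(rows []sizing, countDiv, resDiv int) []sizing {
	out := make([]sizing, 0, len(rows))
	for _, r := range rows {
		out = append(out, sizing{r.Role, r.Count / countDiv, r.CPU / resDiv, r.MemoryGiB / resDiv})
	}
	return out
}

func main() {
	testEnv := scale(recommended, 2, 4) // test environment
	prodEnv := scale(recommended, 2, 2) // production environment
	for i, r := range recommended {
		t, p := testEnv[i], prodEnv[i]
		fmt.Printf("%-8s test: %d x %dC/%dGi  prod: %d x %dC/%dGi\n",
			r.Role, t.Count, t.CPU, t.MemoryGiB, p.Count, p.CPU, p.MemoryGiB)
	}
}
```

A recommended count of 1 would give `floor(1/2) = 0` instances. None of the current values hit that case, but a real sizing script would probably clamp every result to at least 1.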

Mesh addresses for external applications

| Updated at | Address (pattern `${unit}--master.app.svc`) |
|---|---|
| 2023.05.04 | `temporal-frontend--master.temporal-eco.svc.cluster.local:80` |
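The mesh address follows the `${unit}--master.app.svc` pattern, plus the `.cluster.local:80` suffix seen in the recorded entry. The tiny Go helper below builds it. `meshAddress` is a hypothetical name, and the helper assumes every unit uses the same suffix and port as the frontend entry.

```go
package main

import "fmt"

// meshAddress builds "<unit>--master.<app>.svc.cluster.local:80".
// The suffix and port are copied from the recorded frontend entry and are
// an assumption for any other unit.
func meshAddress(unit, app string) string {
	return fmt.Sprintf("%s--master.%s.svc.cluster.local:80", unit, app)
}

func main() {
	fmt.Println(meshAddress("temporal-frontend", "temporal-eco"))
}
```

A Go SDK client would pass the result as `HostPort` in `client.Options` when connecting to the frontend.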

3. Deployment Details

How to deploy to production: Temporal Platform production deployments | Temporal Documentation

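Supported persistence stores are Cassandra, MySQL, and PostgreSQL (see the link for supported versions). Compatibility with TiDB still needs to be verified.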

Database schema upgrades

Use metrics to monitor and tune system performance

The services in the Server topology have different characteristics, so it is best to deploy them independently:

The Frontend service is more CPU-bound.

The History and Matching services require more memory.

Data size limits of the Server

Reference: what-is-the-recommended-setup-for-running-cadence-temporal-with-cassandra-on-production (excerpt below)

Temporal server consists of 4 roles. Although you can run all roles within the same process, we highly recommend running them separately, as they have completely different concerns and scale characteristics. It also makes it operationally much simpler to isolate problems in production. All of the roles are completely stateless, and the system scales horizontally as you spin up more instances of a role once you identify any bottleneck. Here are some recommendations to use as a starting point:

Frontend: Responsible for hosting all service APIs. All client interaction goes through frontend, and it mostly scales with RPS for the cluster. Start with 3 instances of 4 cores and 4GB memory.

History: This hosts the workflow state transition logic. Each history host runs a shard controller, which is responsible for activating and passivating shards on that host. If you provision a cluster with 4k shards, they are distributed across all available history hosts within the cluster through the shard controller. If history hosts are the scalability bottleneck, you just add more history hosts to the cluster. All history hosts form their own membership ring, and shards are distributed among the available nodes in the hash ring. They are quite memory intensive, as they host mutable state and event caches. Start with 5 history instances with 8 cores and 8 GB memory.

Matching: They are responsible for hosting TaskQueues within the system. Each TaskQueue partition is placed separately on all available matching hosts. They usually scale with the number of workers connecting for workflow or activity tasks, the throughput of workflow/activity/query tasks, and the number of total active TaskQueues in the system. Start with 3 matching instances, each with 4 cores and 4 GB memory.

Worker: This is needed for various background logic for the ElasticSearch Kafka processor, CrossDC consumers, and some system workflows (archival, batch processing, etc.). You can just start with 2 instances, each with 4 cores and 4 GB memory.

Number of history shards is a setting which cannot be updated after the cluster is provisioned. For all other parameters you could start small and scale your cluster based on need over time, but for this one you have to think upfront about your maximum load.
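The History description says shards are distributed among the history hosts in a hash ring. A generic consistent-hash ring with virtual nodes illustrates the idea in Go: 4096 shards are spread over five hosts, and adding a sixth host moves only the shards that the new host takes over.

This is not Temporal's actual membership implementation (ringpop). The following are assumptions made for the sketch:

- FNV-1a as the hash function
- 100 virtual nodes per host
- the `shard-N` keys
- the host names

```go
package main

import (
	"fmt"
	"hash/fnv"
	"sort"
	"strconv"
)

// ring is a minimal consistent-hash ring with virtual nodes.
type ring struct {
	points []uint32          // sorted positions on the ring
	owner  map[uint32]string // position -> host
}

func hash32(s string) uint32 {
	h := fnv.New32a()
	h.Write([]byte(s))
	return h.Sum32()
}

// newRing places every host at vnodes pseudo-random positions.
// It assumes at least one host; lookup on an empty ring would panic.
func newRing(hosts []string, vnodes int) *ring {
	r := &ring{owner: make(map[uint32]string)}
	for _, host := range hosts {
		for i := 0; i < vnodes; i++ {
			p := hash32(host + "#" + strconv.Itoa(i))
			if _, taken := r.owner[p]; taken {
				continue // keep the first owner on a (rare) collision
			}
			r.owner[p] = host
			r.points = append(r.points, p)
		}
	}
	sort.Slice(r.points, func(i, j int) bool { return r.points[i] < r.points[j] })
	return r
}

// lookup returns the owner of key: the first position clockwise from its hash.
func (r *ring) lookup(key string) string {
	h := hash32(key)
	i := sort.Search(len(r.points), func(i int) bool { return r.points[i] >= h })
	if i == len(r.points) {
		i = 0 // wrap around the ring
	}
	return r.owner[r.points[i]]
}

// assign maps shard IDs 1..numShards to hosts.
func assign(r *ring, numShards int) map[int]string {
	m := make(map[int]string, numShards)
	for s := 1; s <= numShards; s++ {
		m[s] = r.lookup("shard-" + strconv.Itoa(s))
	}
	return m
}

func main() {
	hosts := []string{"history-0", "history-1", "history-2", "history-3", "history-4"}
	before := assign(newRing(hosts, 100), 4096)
	after := assign(newRing(append(hosts, "history-5"), 100), 4096)

	perHost := map[string]int{}
	moved := 0
	for shard, host := range before {
		perHost[host]++
		if after[shard] != host {
			moved++ // with consistent hashing, these all move to history-5
		}
	}
	fmt.Println("shards per host:", perHost)
	fmt.Printf("moved after adding history-5: %d of 4096\n", moved)
}
```

Existing hosts keep their ring positions, so every moved shard goes to the new host. That property is what makes adding history hosts a cheap way to relieve a bottleneck.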

Integrating SSO

Exporting metrics to Prometheus

Configuring the Grafana dashboard

Discussion: whether to deploy on K8s

4. Temporal UI Deployment Process

https://temporal-eco-ui.pek01.in.zhihu.com/namespaces/default/workflows

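| Step | Item | Details | Issues encountered |
|---|---|---|---|
| Local deployment | Base environment | Install dependencies (this produces the frontend environment and code that temporal web needs); install the latest temporal server; build ui-server | Frontend toolchain issues: nodejs, npm, pnpm. Run `pnpm build:server` to generate the assets files; otherwise the build fails because `all:assets` under `ui/assets` cannot be found. Temporal UI requires Temporal v1.16.0 or later. |
| | Third-party dependencies (produce the proto files needed for communication) | gRPC depends on the protobuf definitions (the dependency code path is specified) | |
| | Trial start | Then switch to the self-hosted temporal server and start again | |
| | Files to watch | The temporal server address is hard-coded in the temporal ui codebase (though it generally should not change); dynamic config generation has since been added | |
| Production deployment | | `git clone git@git.in.zhihu.com:xujialong01/temporal-eco-server.git`, then configure the ui-server service address | Mirrored external packages into a personal repository to speed up downloads; aligned the server version with the UI (temporal server upgrade) |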
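Temporal UI requires Temporal v1.16.0 or later, while the schema reference used here is the v1.12.0 Makefile. A pre-flight version check is therefore useful before aligning the UI and server versions.

The following is a minimal Go sketch. It only understands plain `MAJOR.MINOR.PATCH` (optionally with a leading `v`), so pre-release suffixes such as `-rc1` are rejected. `atLeast` and `parseVersion` are hypothetical helpers.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// parseVersion turns "v1.16.0" or "1.20.3" into [major, minor, patch].
func parseVersion(s string) ([3]int, error) {
	var v [3]int
	parts := strings.SplitN(strings.TrimPrefix(s, "v"), ".", 3)
	if len(parts) != 3 {
		return v, fmt.Errorf("version %q: want MAJOR.MINOR.PATCH", s)
	}
	for i, p := range parts {
		n, err := strconv.Atoi(p)
		if err != nil {
			return v, fmt.Errorf("version %q: %w", s, err)
		}
		v[i] = n
	}
	return v, nil
}

// atLeast reports whether version s is >= minVersion.
func atLeast(s, minVersion string) (bool, error) {
	a, err := parseVersion(s)
	if err != nil {
		return false, err
	}
	b, err := parseVersion(minVersion)
	if err != nil {
		return false, err
	}
	for i := 0; i < 3; i++ {
		if a[i] != b[i] {
			return a[i] > b[i], nil
		}
	}
	return true, nil // equal versions satisfy the minimum
}

func main() {
	for _, server := range []string{"1.12.0", "v1.16.0", "1.20.3"} {
		ok, err := atLeast(server, "1.16.0")
		if err != nil {
			fmt.Println("error:", err)
			continue
		}
		fmt.Printf("server %s works with Temporal UI: %v\n", server, ok)
	}
}
```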

References

Temporal Platform production deployments | Temporal Documentation
