1. Preface

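The previous post covered how to use Scrapy together with Selenium. With that groundwork in place, we can apply the technique to a real requirement. The quotes on many stock websites are loaded dynamically by JavaScript, so they are not in the raw HTML that Scrapy downloads. With Scrapy + Selenium we can collect the quotes in near real time, persist them, and then use them for data analysis, email notifications, and so on. (Article source: https://www.toymoban.com/news/detail-761497.html)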

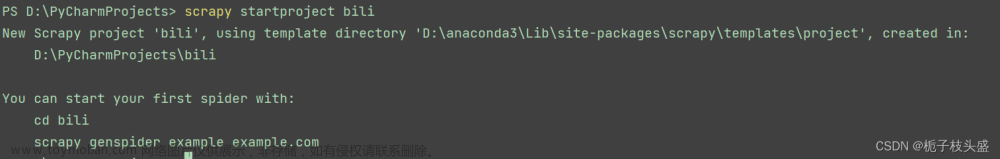

2. Environment Setup

See the previous post for details.

3. Code Implementation

- items

```python
import scrapy


class StockSpiderItem(scrapy.Item):
    # Stock code
    stock_code = scrapy.Field()
    # Stock name
    stock_name = scrapy.Field()
    # Latest price
    last_price = scrapy.Field()
    # Change in percent
    rise_fall_rate = scrapy.Field()
    # Change in price
    rise_fall_price = scrapy.Field()
```

- middlewares

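```python
from scrapy import signals
from scrapy.http import HtmlResponse
from selenium import webdriver
from selenium.webdriver.firefox.options import Options as firefox_options


class StockSpiderDownloaderMiddleware:
    @classmethod
    def from_crawler(cls, crawler):
        # Same as the Scrapy template: call spider_opened when the spider starts
        s = cls()
        crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
        return s

    def __init__(self):
        # ---------------- Firefox settings ---------------- #
        self.options = firefox_options()

    def spider_opened(self, spider):
        spider.logger.info('Spider opened: %s' % spider.name)
        # Start Firefox and attach the driver to the spider so it can be reused
        spider.driver = webdriver.Firefox(options=self.options)
```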

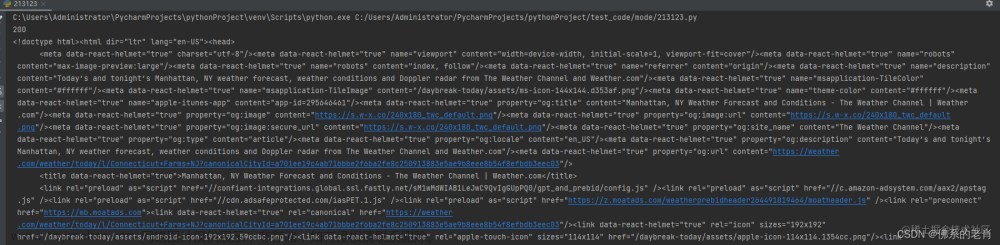

```python
    # (continued) methods of StockSpiderDownloaderMiddleware

    def process_request(self, request, spider):
        # Called for each request that goes through the downloader middleware.
        # Must either return None (continue processing this request), return a
        # Response or Request object, or raise IgnoreRequest.
        # Load the Shanghai/Shenzhen A-share list page in Firefox so that its
        # JavaScript renders the quote table.
        spider.driver.get("https://quote.eastmoney.com/center/gridlist.html#hs_a_board")
        # Returning None lets Scrapy continue and download the URL normally as well
        return None

    def process_response(self, request, response, spider):
        # Called with the response returned from the downloader.
        # Must either return a Response object, return a Request object,
        # or raise IgnoreRequest.
        # Replace the static HTML Scrapy downloaded with the page source rendered
        # by Firefox, which contains the dynamically loaded quotes.
        response_body = spider.driver.page_source
        return HtmlResponse(url=request.url, body=response_body, encoding='utf-8', request=request)
```
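One caveat with `process_response`: it reads `page_source` right after `driver.get()`. The quote table is filled in by JavaScript, so it may still be empty at that moment. Selenium's own tool for this is an explicit wait, `WebDriverWait(spider.driver, 10).until(...)` with a condition from `selenium.webdriver.support.expected_conditions`. The polling idea behind such a wait is sketched below using only the standard library; `wait_until` is a hypothetical helper, and its 10-second timeout and 0.5-second interval are arbitrary assumptions.

```python
import time


def wait_until(condition, timeout=10.0, poll=0.5):
    """Call condition() every `poll` seconds until it returns a truthy value.

    Returns that value, or raises TimeoutError once `timeout` seconds have
    passed. This mirrors the idea of Selenium's WebDriverWait(...).until(...).
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(poll)
```

In the middleware it could be called before reading `page_source`, for example as `wait_until(lambda: spider.driver.find_elements(By.CSS_SELECTOR, "table.table_wrapper-table tbody tr"))` with `By` imported from `selenium.webdriver.common.by`; `find_elements` returns an empty (falsy) list until matching rows exist.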

- settings

```python
# See https://doc.scrapy.org/en/latest/topics/spider-middleware.html
SPIDER_MIDDLEWARES = {
    'stock_spider.middlewares.StockSpiderSpiderMiddleware': 543,
}

# Enable or disable downloader middlewares
# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
DOWNLOADER_MIDDLEWARES = {
    'stock_spider.middlewares.StockSpiderDownloaderMiddleware': 543,
}
```
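Scrapy only calls a pipeline's `process_item` if the pipeline is enabled in `ITEM_PIPELINES`, and the excerpt above enables only middlewares. If your settings don't already contain it, an entry like the following would be needed. It assumes the pipeline class is named `StockSpiderPipeline`, the name that `scrapy startproject stock_spider` generates; the article does not show the class name, so adjust it if yours differs.

```python
# settings.py -- enable the CSV pipeline (class name assumed from the Scrapy template)
ITEM_PIPELINES = {
    'stock_spider.pipelines.StockSpiderPipeline': 300,
}
```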

- spider file

```python
from stock_spider.items import StockSpiderItem


# parse() and closed() below are methods of the spider class
def parse(self, response):
    # Stock code
    stock_code = response.css("table.table_wrapper-table tbody tr td:nth-child(2) a::text").getall()
    # Stock name
    stock_name = response.css("table.table_wrapper-table tbody tr td:nth-child(3) a::text").getall()
    # Latest price
    last_price = response.css("table.table_wrapper-table tbody tr td:nth-child(5) span::text").getall()
    # Change in percent
    rise_fall_rate = response.css("table.table_wrapper-table tbody tr td:nth-child(6) span::text").getall()
    # Change in price
    rise_fall_price = response.css("table.table_wrapper-table tbody tr td:nth-child(7) span::text").getall()

    # Pair the columns row by row; zip stops at the shortest list instead of
    # raising IndexError when one column has fewer matches than the others
    for code, name, price, rate, change in zip(
            stock_code, stock_name, last_price, rise_fall_rate, rise_fall_price):
        item = StockSpiderItem()
        item["stock_code"] = code
        item["stock_name"] = name
        item["last_price"] = price
        item["rise_fall_rate"] = rate
        item["rise_fall_price"] = change
        yield item


def closed(self, reason):
    # Scrapy calls closed() when the spider finishes: shut down Firefox
    self.driver.quit()
```

- pipelines (persistence)

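```python
import csv


class StockSpiderPipeline:  # class name assumed (Scrapy template default)
    def process_item(self, item, spider):
        """
        Append each received item to a CSV file.
        """
        filename = 'stock_info.csv'
        # newline='' is what the csv module expects; csv.writer also quotes
        # fields containing commas, which plain string concatenation does not
        with open(filename, 'a', encoding='utf-8', newline='') as f:
            csv.writer(f).writerow([
                item["stock_code"], item["stock_name"], item["last_price"],
                item["rise_fall_rate"], item["rise_fall_price"],
            ])
        return item
```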
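The preface mentions analysing the data and sending email notifications. As a hedged sketch of that follow-up step, the code below reads `stock_info.csv` with the standard library, picks the stocks whose absolute change is at or above a threshold, and builds (but does not send) an alert email. It assumes the column order written by the pipeline above and that the change-percentage column holds text such as `-1.23%`; non-numeric values such as `-` are skipped. `read_rows`, `big_movers`, `build_alert`, and the 5 % default threshold are hypothetical. Because the pipeline appends, the file accumulates rows from every run.

```python
import csv
from email.message import EmailMessage

# Column order used by the pipeline (the CSV file has no header row)
FIELDNAMES = ["stock_code", "stock_name", "last_price", "rise_fall_rate", "rise_fall_price"]


def read_rows(lines):
    """Parse CSV lines (any iterable of strings, e.g. an open file) into dicts."""
    return list(csv.DictReader(lines, fieldnames=FIELDNAMES))


def to_percent(text):
    """Convert text such as '-1.23%' to -1.23; return None for missing or non-numeric values."""
    if text is None:
        return None
    try:
        return float(text.strip().rstrip("%"))
    except ValueError:
        return None


def big_movers(rows, threshold=5.0):
    """Return (code, name, rate) for rows with |change %| >= threshold, largest move first."""
    movers = []
    for row in rows:
        rate = to_percent(row["rise_fall_rate"])
        if rate is not None and abs(rate) >= threshold:
            movers.append((row["stock_code"], row["stock_name"], rate))
    return sorted(movers, key=lambda m: abs(m[2]), reverse=True)


def build_alert(movers, sender, recipient):
    """Build a plain-text alert email listing the movers (it is not sent here)."""
    msg = EmailMessage()
    msg["Subject"] = f"{len(movers)} stocks moved beyond the threshold"
    msg["From"] = sender
    msg["To"] = recipient
    msg.set_content("\n".join(f"{code} {name}: {rate:+.2f}%" for code, name, rate in movers))
    return msg
```

Typical use would be `with open("stock_info.csv", newline="", encoding="utf-8") as f: movers = big_movers(read_rows(f))`. To actually send the message, `smtplib.SMTP_SSL(host, 465)` followed by `login()` and `send_message(msg)` is one option; the SMTP host and credentials are placeholders you would supply.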

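- README

1. Install the dependencies:
   - Python 3 (current Scrapy and Selenium releases require a recent 3.x version, not 3.0)
   - pip install -r requirements.txt
2. Mark the inner (second-level) stock_spider folder as the project root.
3. Put the Firefox driver (geckodriver) in the Scripts folder of your Python environment, which is usually on PATH, so Selenium can find it.
4. Firefox itself must be installed; otherwise Selenium has no browser to launch.
5. Run spider_main.py to start the spider.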

That concludes this advanced Python crawler tutorial on using Selenium + Firefox in Scrapy to scrape Shanghai and Shenzhen A-share stock quotes.