1. Introduction

Organization: Meta (Facebook)

Code repository: https://github.com/facebookresearch/llama

Model: llama-2-7b

Download: via download.sh

Hardware: HP 暗影精灵7Plus (OMEN) laptop

Windows version: Windows 11 Home (Chinese edition) Insider Preview 22H2

Memory: 32 GB

GPU: NVIDIA RTX 3080 Laptop (16 GB)

2. Downloading the code and model

Clone the llama.cpp repository:

git clone https://github.com/ggerganov/llama.cpp

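You need to obtain the original LLaMA model files and put them in the models directory. The models directory currently looks like this:

Reference: https://blog.csdn.net/snmper/article/details/133578456

Copy the 7B model files that ran successfully on the Jetson AGX Orin last time into the models directory: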

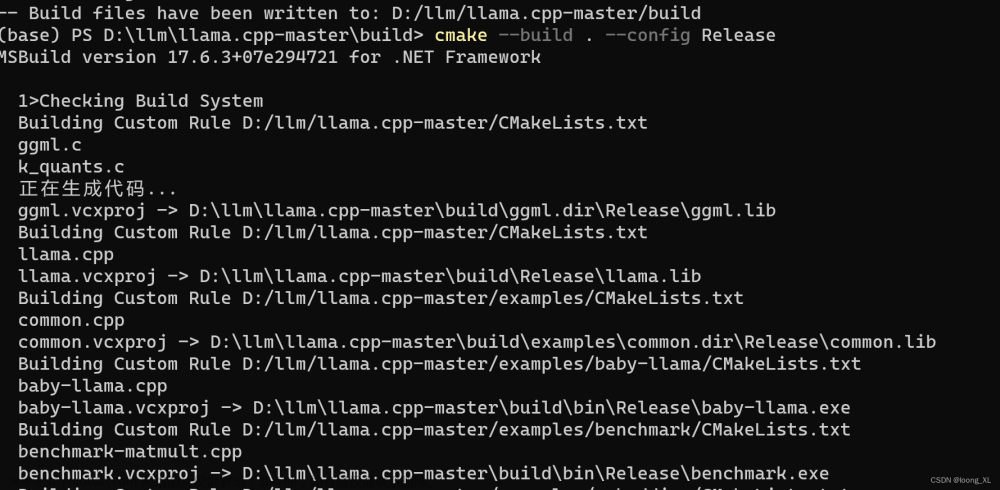
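Before converting, it helps to confirm that the checkpoint sits where the conversion script will look for it. The Python sketch below assumes the usual layout of a llama-2-7b download (consolidated.00.pth and params.json in models/7B, with tokenizer.model either there or one level up in models/). These file names are assumptions about the download, not something listed in this article, so adjust them if your copy differs.

```python
from __future__ import annotations

from pathlib import Path

# Assumed contents of the original llama-2-7b checkpoint folder (as fetched by download.sh).
REQUIRED_IN_MODEL_DIR = ["consolidated.00.pth", "params.json"]


def check_model_dir(model_dir: str) -> list[str]:
    """Return a list of problems; an empty list means the layout looks usable."""
    root = Path(model_dir)
    if not root.is_dir():
        return [f"{root} is not a directory"]
    problems = [f"missing {root / name}"
                for name in REQUIRED_IN_MODEL_DIR
                if not (root / name).is_file()]
    # tokenizer.model may sit next to the weights or one level up (in models/).
    if not any((p / "tokenizer.model").is_file() for p in (root, root.parent)):
        problems.append("missing tokenizer.model (looked in the model dir and its parent)")
    return problems


if __name__ == "__main__":
    issues = check_model_dir("models/7B")
    print("layout looks OK" if not issues else "\n".join(issues))
```

Run it from the llama.cpp directory; an empty problem list only means the files exist, not that they are intact.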

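3. Setting up the llama.cpp build environment

Check the README to find how to build llama.cpp on Windows.

Open https://github.com/skeeto/w64devkit/releases

Find the latest Fortran build of w64devkit:

After the download finished, the system showed a pop-up:

Go back one version and try v1.19.0: https://github.com/skeeto/w64devkit/releases/tag/v1.19.0

Extract it to D:\w64devkit

Run w64devkit.exe

Switch to drive D: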

cd llama.cpp

python -V

Here Python is version 3.7.5.

Check the versions of make, cmake, gcc, and g++:
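The same version check can be scripted. This Python sketch assumes it is run from inside the w64devkit shell, so that the make, cmake, gcc, and g++ on PATH are the ones the build will use. It sticks to features available in Python 3.7, since that is the interpreter this shell picked up.

```python
import shutil
import subprocess
import sys

TOOLS = ["make", "cmake", "gcc", "g++"]


def tool_version(tool: str) -> str:
    """Return the first line of `<tool> --version`, or a note if the tool is not on PATH."""
    exe = shutil.which(tool)
    if exe is None:
        return "not found on PATH"
    result = subprocess.run([exe, "--version"], capture_output=True, text=True)
    output = (result.stdout or result.stderr).strip()
    return output.splitlines()[0] if output else "(no version output)"


if __name__ == "__main__":
    print(f"python: {sys.version.split()[0]}")
    for t in TOOLS:
        print(f"{t}: {tool_version(t)}")
```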

Try building:

make

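Wait patiently for the build to finish (or fail).

So is this actually malware?

Zhang Xiaobai didn't think so, so he opened an issue on the llama.cpp repository to confirm: https://github.com/ggerganov/llama.cpp/issues/3463

The official answer: https://github.com/ggerganov/llama.cpp/discussions/3464

Zhang Xiaobai still decided to use w64devkit, and the latest version at that. Turn off 360 Antivirus during the build!!! (In fact, 360 Total Security has to be closed as well.)

Open https://github.com/skeeto/w64devkit/releases again

Download w64devkit-fortran-1.20.0.zip

Extract it to drive D:

Double-click w64devkit.exe to run it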

cd d:/

cd llama.cpp

make

Wait patiently for the build to finish:

The build succeeded.

The .exe files are the generated Windows executables.
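To confirm the build produced what the later steps need, here is a hedged Python sketch that lists the .exe files in the repository root and reports whether main.exe and quantize.exe exist. The expected names are assumptions matching the ./main and ./quantize commands used in section 5; newer llama.cpp releases have renamed their binaries, so the list may need updating.

```python
from pathlib import Path

# Assumption: these are the binaries section 5 calls (./main and ./quantize).
EXPECTED = ["main.exe", "quantize.exe"]


def missing_binaries(repo_dir: str, expected=EXPECTED) -> list:
    """Return the expected executables that are not present in repo_dir."""
    root = Path(repo_dir)
    return [name for name in expected if not (root / name).is_file()]


if __name__ == "__main__":
    repo = "."  # run from the llama.cpp directory
    built = sorted(p.name for p in Path(repo).glob("*.exe"))
    print("built:", ", ".join(built) or "(none)")
    missing = missing_binaries(repo)
    print("missing:", ", ".join(missing) or "none")
```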

Exit the w64devkit build environment.

4. Installing dependencies

Create a conda environment:

conda create -n llama python=3.10

conda activate llama

cd llama.cpp

pip install -r requirements.txt
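With the llama environment active, one way to confirm that pip installed everything is to compare requirements.txt against the installed distributions. This Python sketch uses importlib.metadata (Python 3.8+, fine for the 3.10 environment created above). It only checks that each named package is present and deliberately ignores version pins.

```python
import re
from importlib.metadata import PackageNotFoundError, version
from pathlib import Path


def requirement_names(text: str) -> list:
    """Extract bare distribution names from requirements.txt-style text."""
    names = []
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()
        if not line or line.startswith("-"):  # skip blanks and options such as -r / -e
            continue
        match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", line)
        if match:
            names.append(match.group(0))
    return names


def check_installed(names) -> dict:
    """Map each distribution name to its installed version, or None if absent."""
    result = {}
    for name in names:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result


if __name__ == "__main__":
    req = Path("requirements.txt")
    if req.is_file():
        for name, ver in check_installed(requirement_names(req.read_text())).items():
            print(f"{name}: {ver or 'NOT INSTALLED'}")
    else:
        print("requirements.txt not found; run this from the llama.cpp directory")
```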

5. Deployment and verification

Read the following passage:

Convert the 7B model (about 14 GB) to a ggml FP16 model:

python convert.py models/7B/

The model is written to models\7B\ggml-model-f16.gguf, which is also about 14 GB.
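The FP16 file comes out about the same size as the original checkpoint because both store 16 bits per weight. A back-of-the-envelope check in Python, assuming roughly 6.74 billion parameters for Llama 2 7B (a commonly quoted figure, not taken from this article) and ignoring GGUF metadata:

```python
# Back-of-the-envelope file size for Llama 2 7B at 16 bits per weight.
# Assumption: about 6.74e9 parameters; GGUF metadata and the vocabulary are ignored.
N_PARAMS = 6.74e9


def size_gb(n_params: float, bits_per_weight: float) -> float:
    """Decimal gigabytes (1e9 bytes) needed to store n_params at bits_per_weight."""
    return n_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    print(f"16-bit weights: {size_gb(N_PARAMS, 16):.1f} GB")  # prints 13.5 GB
```

That lands on the "about 14 GB" observed for both files.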

Quantize the FP16 model just produced to 4 bits:

./quantize ./models/7B/ggml-model-f16.gguf ./models/7B/ggml-model-q4_0.gguf q4_0

The quantized file is ./models/7B/ggml-model-q4_0.gguf.

It is only 3.8 GB.
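The 3.8 GB figure follows from how q4_0 stores weights: each block of 32 weights shares one FP16 scale, and each weight becomes a 4-bit code, so a block takes 2 + 16 = 18 bytes, or 4.5 bits per weight. The NumPy sketch below is modeled on the round-to-nearest scheme of ggml's reference q4_0 quantizer (scale = signed max / -8, codes offset by 8 and clamped to 0..15). It is an illustration, not the code quantize actually runs, and the 6.74e9 parameter count is an assumption.

```python
import numpy as np

QK4_0 = 32  # weights per q4_0 block


def quantize_block_q4_0(x: np.ndarray):
    """Quantize 32 floats into an FP16 scale plus 32 unsigned 4-bit codes (0..15)."""
    assert x.shape == (QK4_0,)
    max_val = x[np.argmax(np.abs(x))]      # largest-magnitude value, sign kept
    d = max_val / -8.0                      # so that max_val maps to code 0
    inv_d = 1.0 / d if d != 0 else 0.0
    # Shift by 8.5 and truncate (round to nearest), then clamp the top end to 15.
    q = np.minimum(15, (x * inv_d + 8.5).astype(np.int8)).astype(np.uint8)
    return np.float16(d), q


def dequantize_block_q4_0(d, q: np.ndarray) -> np.ndarray:
    """Recover approximate floats: (code - 8) * scale."""
    return (q.astype(np.float32) - 8.0) * np.float32(d)


def pack_nibbles(q: np.ndarray) -> np.ndarray:
    """Store code j in the low nibble and code j+16 in the high nibble (16 bytes)."""
    return (q[:16] | (q[16:] << 4)).astype(np.uint8)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    block = rng.standard_normal(QK4_0).astype(np.float32)
    d, q = quantize_block_q4_0(block)
    err = np.abs(dequantize_block_q4_0(d, q) - block).max()
    print(f"max abs error in one block: {err:.4f}")
    bytes_per_block = 2 + len(pack_nibbles(q))      # FP16 scale + packed codes = 18
    bits_per_weight = bytes_per_block * 8 / QK4_0   # 4.5
    print(f"q4_0 estimate: {6.74e9 * bits_per_weight / 8 / 1e9:.1f} GB")  # prints 3.8 GB
```

The per-block scale is what keeps the error bounded: every weight in a block is off by at most about one scale step.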

Run inference:

./main -m ./models/7B/ggml-model-q4_0.gguf -n 128
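To script these runs, here is a hedged Python sketch that assembles the same command line and calls it with subprocess. It uses only the flags that appear in this article (-m for the model file, -n for the number of tokens to generate, --prompt for the prompt text). The binary location is an assumption (main.exe in the current llama.cpp directory on Windows), and newer llama.cpp releases ship this program under a different name.

```python
import os
import subprocess
from pathlib import Path
from typing import List, Optional

# Assumption: main was built in the current llama.cpp directory (main.exe on Windows).
DEFAULT_BINARY = str(Path.cwd() / ("main.exe" if os.name == "nt" else "main"))


def build_main_command(model: str, n_predict: int = 128,
                       prompt: Optional[str] = None,
                       binary: str = DEFAULT_BINARY) -> List[str]:
    """Assemble a llama.cpp main invocation from the flags used in this article."""
    cmd = [binary, "-m", model, "-n", str(n_predict)]
    if prompt is not None:
        cmd += ["--prompt", prompt]
    return cmd


def run_main(cmd: List[str]) -> str:
    """Run the command and return what it printed to stdout (raises on a non-zero exit)."""
    result = subprocess.run(cmd, capture_output=True, encoding="utf-8",
                            errors="replace", check=True)
    return result.stdout


if __name__ == "__main__":
    model = "./models/7B/ggml-model-q4_0.gguf"
    cmd = build_main_command(model, prompt="Once upon a time")
    print("command:", " ".join(cmd))
    if Path(cmd[0]).is_file() and Path(model).is_file():
        print(run_main(cmd))
    else:
        print("binary or model not found; finish the build and quantization steps first")
```

Passing the arguments as a list avoids shell quoting problems with prompts that contain spaces or quotes.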

The output is as follows:

Refresh your summer look with our stylish new range of women's swimwear. Shop the latest styles in bikinis, tankinis and one pieces online at Simply Beach today! Our collection offers a wide selection of flattering designs from classic cuts to eye-catching prints that will turn heads on your next day by the pool. [end of text]

This appears to be a randomly generated passage.
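The text looks random because, with no prompt, the model continues from an essentially empty context, and each next token is sampled at random unless a fixed seed is given (main accepts -s/--seed). The Python sketch below illustrates the idea with top-k filtering followed by temperature sampling on made-up logits. The values temperature=0.8 and top_k=40 are assumptions chosen for illustration, and llama.cpp's real sampler applies further stages (such as top-p and a repetition penalty).

```python
import numpy as np


def sample_next_token(logits, temperature=0.8, top_k=40, rng=None):
    """Pick one token id: keep the top_k highest logits, then sample with temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    if rng is None:
        rng = np.random.default_rng()  # unseeded, so results differ from run to run
    logits = np.asarray(logits, dtype=np.float64)
    k = min(top_k, logits.size)
    top_ids = np.argsort(logits)[-k:]            # ids of the k largest logits
    scaled = logits[top_ids] / temperature
    probs = np.exp(scaled - scaled.max())         # numerically stable softmax
    probs /= probs.sum()
    return int(rng.choice(top_ids, p=probs))


if __name__ == "__main__":
    toy_logits = [2.0, 1.5, 0.3, -1.0, 0.9]      # hypothetical 5-token vocabulary
    for seed in (1, 1, 2):
        rng = np.random.default_rng(seed)
        print(seed, [sample_next_token(toy_logits, rng=rng) for _ in range(8)])
    # The two seed-1 lines match; seed 2 generally differs.
```

Lower temperatures or a smaller top_k concentrate probability on the highest-scoring tokens, so the output becomes more repetitive but less surprising.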

Now try it with a prompt:

./main -m ./models/7B/ggml-model-q4_0.gguf --prompt "Once upon a time"

The completed text is as follows:

Once upon a time, there was no such thing as a "social network". The idea of connecting with someone else on the Internet simply by clicking on their name and seeing who they were connected to and what we might have in common is a relatively new concept. But this kind of connection has become so much a part of our lives that we don't even think twice about it, right?

But once upon a time there was only one way to connect with someone: you either knew them or you didn't. And if you met somebody and became friends, the way you maintained your relationship was to stay in touch by phone, letter, or in person. It wasn't that easy before e-mail, cell phones, Facebook, Twitter, texting, and all the other ways we keep in touch today.

So I say once upon a time because social networking is not quite as new as it seems to be. In fact, I think the first true social network was formed back in 1594 when Shakespeare's "Hamlet" premiered at London's Globe Theatre and his performance was greeted by thunderous applause and a standing ovation by the entire audience.

At that time there were no movie theatre chains to advertise, no TV shows, no radio stations or even newspapers with paid reviews to promote "Hamlet" in advance of its opening night. Shakespeare's only way to get the word out about his latest production was through a series of "word-of-mouth" conversations between the people who had gone to see it and all those they encountered afterwards.

This was, by far, the most advanced social network that existed up until that time! And yet this type of social networking is probably still used today in the modern theatre world where actors and producers meet with audience members after their show to get feedback on how well (or not) it went over for them.

What we now call "social networking" is nothing more than the latest iteration of a centuries-old system that's already proven itself to be effective, but only when used by those who choose to engage in it voluntarily and without coercion. And yes, I realize that this particular definition of social networking has changed over time as well: from Shakespeare's "word of mouth" all the way up to the first online bulletin board systems (BBS) with 300-baud modems.

And yet, the latest innovation in social networking, Web 2.0 and its accompanying sites like Facebook, Twitter and LinkedIn still have yet to surpass these earlier methods in the minds of those who prefer not to use them (and they exist by virtue of an ever-growing user base).

So why is it that so many people are afraid of social networking? After all, there's no reason for anyone to feel compelled or coerced into joining these sites. And yet, despite this fact, a growing number of people seem more than willing to give up their personal information and privacy on the Internet. Why is that?

The answer is simple: most people don't have an accurate picture of what social networking really means. What they imagine it looks like bears little resemblance to how these sites actually work, let alone what's actually going on behind the scenes.

In a nutshell, those who believe that Web 2.0 is nothing but another attempt at getting us all "connected" are missing out on something very important: social networking isn't really about connecting with other people (much like Facebook and LinkedIn) or exchanging information (like Twitter). It's actually about the things we do when we connect, exchange information and interact.

So what does this mean? Simply put, all of these sites are ineffective at helping us get to know each other better. They have very little influence on how we choose who to trust or not to trust among our personal networks. What they're actually good for is gathering data (or information) about us as a way to sell us things that we don't really need and might not even want.

This isn't an attack on social networking, it's just the truth. Facebook may have started out as a site where students can connect with each other but it has now evolved into something much more sinister: a database of personal information about every one of its users that can be sold to anyone at any time without your consent (or even knowledge).

In effect, sites like LinkedIn and Facebook are nothing but the modern version of the old fashioned "spammers" who used to send us junk email. In addition to their obvious privacy concerns and their inability to help us connect with each other or exchange information, these social networking sites should also be regarded as a direct threat to our personal safety.

Why? Well, for one thing the information that they collect about us (and sell to others) can also be used by criminals to commit fraud against us and even extort money from us. This is why it's so important that we take control of this information and use it wisely instead of letting these sites control our private lives for their own selfish reasons.

The reality is that these sites cannot be trusted with the kind of personal information that they require about each one of us. Sites like LinkedIn or Facebook are nothing more than a threat to our privacy and should be regarded as such by every single person who uses them. In fact, sites like this (and any others) are in effect "spammers" who use the same tactics that spammers used to use in order to scam us into using their services.

I don't have a Facebook account and I don't plan on ever creating one either. This site is actually nothing more than a direct threat to my privacy because it uses the same old trick of collecting personal information about me (without my permission) in order to spam me with ads that will help them get rich at my expense. They have even resorted to using psychological tricks and sophisticated surveys in order to manipulate our feelings into believing that they are something important to us.

The truth is that sites like Facebook (or LinkedIn) can only be trusted if we're the ones who control them instead of letting others control them so that they can profit from it. In fact, a site like this can never even hope to become our friend because it doesn't respect the privacy rights of its users at all. This is why I am against these sites and their invasive surveys but if you want to know more about how these sites work then check out the link that we have below in order to learn a bit more about these sites. [end of text]

Since the original LLaMA model answers only in English (I plan to try an improved Chinese model later), let's have Kingsoft PowerWord (词霸) translate it:

Source: https://www.toymoban.com/news/detail-773860.html

That's all for this round of experiments!

This concludes Large Model Deployment Notes (8): LLaMa2 + Windows + llama.cpp + English Text Completion.