1 Introduction to k8s components

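1.1 kube-apiserver

The Kubernetes API server validates and configures data for API objects, including pods, services, replicationcontrollers, and others. It serves REST operations and provides the front end to the cluster's shared state, through which all other Kubernetes components interact.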

https://kubernetes.io/zh/docs/reference/command-line-tools-reference/kube-apiserver/

1.2 kube-scheduler

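kube-scheduler is the Kubernetes pod scheduler and is responsible for assigning Pods to feasible nodes. For each Pod in the scheduling queue, it determines the nodes on which the Pod can legitimately be placed, based on constraints and available resources. It is a policy-rich, topology-aware, workload-specific component that has to take into account individual and collective resource requirements, quality-of-service requirements, hardware/software/policy constraints, and affinity and anti-affinity specifications.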

https://kubernetes.io/zh/docs/reference/command-line-tools-reference/kube-scheduler/

1.3 kube-controller-manager

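The Controller Manager is the management and control center inside the cluster. It manages Nodes, Pod replicas, service endpoints (Endpoint), namespaces (Namespace), service accounts (ServiceAccount), and resource quotas (ResourceQuota). When a Node goes down unexpectedly, the Controller Manager detects the failure promptly and runs an automated repair process so that the pod replicas in the cluster stay in their expected state.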

https://kubernetes.io/zh/docs/reference/command-line-tools-reference/kube-controller-manager/

1.4 kube-proxy

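The Kubernetes network proxy runs on every node. It reflects the services defined in the Kubernetes API on each node and can do simple TCP, UDP, and SCTP stream forwarding, or round-robin TCP, UDP, and SCTP forwarding, across a set of backends. A service must be created through the apiserver API to configure the proxy. In practice, kube-proxy implements access to Kubernetes services by maintaining network rules on the host and performing connection forwarding.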

https://kubernetes.io/zh/docs/reference/command-line-tools-reference/kube-proxy/

1.5 kubelet

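kubelet is the agent that runs on every worker node and watches the pods assigned to that node. Specifically, it:

Reports the node's status to the master

Accepts instructions and creates the containers of a Pod (docker containers in this setup)

Prepares the data volumes a Pod needs

Reports the running status of pods

Runs container health checks on the node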

https://kubernetes.io/zh/docs/reference/command-line-tools-reference/kubelet/

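1.6 etcd

etcd is a key-value store originally developed by CoreOS and is the default data store used by Kubernetes. It holds all cluster data and supports distributed clustering. In production, set up regular backups of the etcd data.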
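A regular etcd backup can be sketched roughly as follows. This is a minimal sketch, not the author's procedure: the backup directory `/data/etcd-backup`, the function names, and the retention count are assumptions, and the certificate paths are the defaults kubeadm uses for a stacked etcd. It assumes `etcdctl` is installed on the master.

```shell
#!/bin/bash
# Sketch: periodic etcd snapshot with simple retention.
# Assumed (not from the article): BACKUP_DIR, function names, retention count.

BACKUP_DIR="${BACKUP_DIR:-/data/etcd-backup}"

# Take one snapshot; the file name carries a sortable timestamp.
etcd_snapshot() {
    mkdir -p "$BACKUP_DIR"
    ETCDCTL_API=3 etcdctl \
        --endpoints=https://127.0.0.1:2379 \
        --cacert=/etc/kubernetes/pki/etcd/ca.crt \
        --cert=/etc/kubernetes/pki/etcd/server.crt \
        --key=/etc/kubernetes/pki/etcd/server.key \
        snapshot save "$BACKUP_DIR/etcd-$(date +%Y%m%d%H%M%S).db"
}

# Keep only the newest $2 snapshots in directory $1.
# File names sort chronologically, so a reverse name sort puts the newest first.
prune_snapshots() {
    local dir="$1" keep="$2"
    ls -1 "$dir"/etcd-*.db 2>/dev/null | sort -r | tail -n +"$((keep + 1))" | xargs -r rm -f --
}
```

A cron job could then run something like `etcd_snapshot && prune_snapshots "$BACKUP_DIR" 7` once a day.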

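#Core components:

apiserver: the single entry point for resource operations; provides authentication, authorization, access control, API registration, and discovery

controller manager: maintains the state of the cluster, e.g. failure detection, automatic scaling, and rolling updates

scheduler: schedules resources, placing Pods on suitable machines according to the configured scheduling policy

kubelet: maintains the container lifecycle and also manages volumes (CSI) and networking (CNI)

Container runtime: manages images and actually runs Pods and containers (CRI)

kube-proxy: provides in-cluster service discovery and load balancing for Services

etcd: stores the state of the entire cluster

#Optional components:

kube-dns: provides DNS for the whole cluster

Ingress Controller: provides an external entry point for services

Heapster: provides resource monitoring

Dashboard: provides a GUI

Federation: provides clusters that span availability zones

Fluentd-elasticsearch: provides cluster log collection, storage, and querying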

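2 k8s installation and deployment

2.1 Installation method

2.1.1 kubeadm

kubeadm is the official Kubernetes deployment tool and automates the installation. Install docker and the other components on the master and node hosts first, then initialize the cluster; the control-plane services and the node services all run as pods.

2.1.2 Installation notes

Note:

Disable swap

Disable selinux

Disable iptables (the host firewall)

Tune kernel parameters and resource limits

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

#With these set, packets forwarded by a layer-2 bridge are also matched against the host's iptables FORWARD rules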
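The host preparation above could be scripted along these lines. This is a sketch under assumptions: the file name `/etc/sysctl.d/k8s.conf` and both function names are made up for illustration, and the `br_netfilter` module is loaded first because the two `net.bridge.*` keys only exist once it is present.

```shell
#!/bin/bash
# Sketch: persist the two bridge-netfilter settings and turn swap off.
# Assumed (not from the article): the config file name and the function names.

# Write the kernel parameters to a sysctl drop-in file.
write_k8s_sysctl() {
    local conf="${1:-/etc/sysctl.d/k8s.conf}"
    cat > "$conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}

# Apply everything on the current host (needs root; not called automatically).
prepare_host() {
    swapoff -a                                      # disable swap now
    sed -ri '/\sswap\s/ s/^[^#]/#&/' /etc/fstab     # keep it off after reboot
    modprobe br_netfilter                           # makes net.bridge.* available
    write_k8s_sysctl
    sysctl --system                                 # reload all sysctl files
}
```

Run `prepare_host` as root on every master and node host before installing kubeadm.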

2.2:部署过程:

2.2.1:具体步骤:

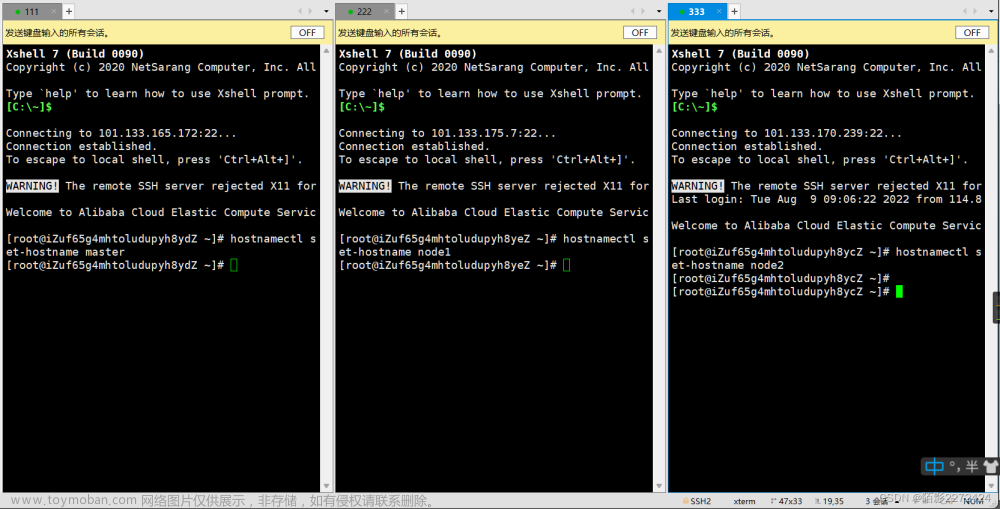

1、基础环境准备

2、部署harbor及haproxy⾼可⽤反向代理,实现控制节点的API反问⼊⼝⾼可⽤

3、在所有master节点安装指定版本的kubeadm 、kubelet、kubectl、docker

4、在所有node节点安装指定版本的kubeadm 、kubelet、docker,在node节点kubectl为可选安装,看是否需要在node执⾏kubectl命令进⾏集群管理及pod管理等操作。

5、master节点运⾏kubeadm init初始化命令

6、验证master节点状态

7、在node节点使⽤kubeadm命令将⾃⼰加⼊k8smaster(需要使⽤master⽣成token认证)

8、验证node节点状态

9、创建pod并测试⽹络通信

10、部署web服务Dashboard

2.2.2 Base environment

| Role | Hostname | IP address |
|---|---|---|
| k8s-master1 | k8s-master1 | 192.168.100.31 |
| k8s-master2 | k8s-master2 | 192.168.100.32 |
| k8s-master3 | k8s-master3 | 192.168.100.33 |
| k8s-haproxy-1 | k8s-ha1 | 192.168.100.34 |
| k8s-haproxy-2 | k8s-ha2 | 192.168.100.35 |
| k8s-harbor | k8s-harbor | 192.168.100.36 |
| k8s-node1 | k8s-node1 | 192.168.100.37 |
| k8s-node2 | k8s-node2 | 192.168.100.38 |
| k8s-node3 | k8s-node3 | 192.168.100.39 |
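If the hosts should resolve each other by name, the inventory above can be turned into `/etc/hosts` entries. The article does not show this step, so treat it as an optional sketch; the variable and function names are hypothetical.

```shell
#!/bin/bash
# Sketch: add the hosts from the inventory table to a hosts file.
# Assumed (not from the article): the variable and function names.

K8S_HOSTS="192.168.100.31 k8s-master1
192.168.100.32 k8s-master2
192.168.100.33 k8s-master3
192.168.100.34 k8s-ha1
192.168.100.35 k8s-ha2
192.168.100.36 k8s-harbor
192.168.100.37 k8s-node1
192.168.100.38 k8s-node2
192.168.100.39 k8s-node3"

# Append each entry only if that exact line is not present yet,
# so running the function twice does not create duplicates.
add_hosts_entries() {
    local file="${1:-/etc/hosts}" line
    while read -r line; do
        grep -qxF "$line" "$file" || echo "$line" >> "$file"
    done <<< "$K8S_HOSTS"
}
```

Calling `add_hosts_entries` without arguments as root updates `/etc/hosts` in place.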

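2.3 Highly available reverse proxy

keepalived and HAProxy together provide a highly available reverse proxy in front of the k8s apiserver.

2.3.1 Installing and configuring keepalived

Install and configure keepalived, then test VIP failover.

Install and configure keepalived on node 1: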

root@k8s-ha1:~# apt install keepalived

root@k8s-ha1:~# vim /etc/keepalived/keepalived.conf

vrrp_instance VI_1 {

state MASTER

interface eth0

garp_master_delay 10

smtp_alert

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.100.188 dev eth0 label eth0:1

}

}

root@k8s-ha1:~# systemctl restart keepalived

root@k8s-ha1:~# systemctl enable keepalived

Install and configure keepalived on node 2:

root@k8s-ha2:~# apt install keepalived

root@k8s-ha2:~# cp /usr/share/doc/keepalived/samples/keepalived.conf.vrrp /etc/keepalived/keepalived.conf

root@k8s-ha2:~# vim /etc/keepalived/keepalived.conf

vrrp_instance VI_1 {

state BACKUP

interface eth0

garp_master_delay 10

smtp_alert

virtual_router_id 51

priority 80

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.100.188 dev eth0 label eth0:1

}

}

root@k8s-ha2:~# systemctl restart keepalived

root@k8s-ha2:~# systemctl enable keepalived

root@k8s-ha2:~# ifconfig eth0:1

eth0:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.100.188 netmask 255.255.255.255 broadcast 0.0.0.0

ether 00:0c:29:51:ba:18 txqueuelen 1000 (Ethernet)
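To test failover, it helps to check on each proxy host whether it currently holds the VIP. A small sketch, assuming the `ip` tool from iproute2 is available; the function name `has_vip` is hypothetical.

```shell
#!/bin/bash
# Sketch: detect whether a VIP is configured on this host.
# Assumed (not from the article): the function name.

# Reads `ip -o -4 addr show` output on stdin and succeeds if the VIP is listed.
# The trailing "/" anchors the match so 192.168.100.18 does not match 192.168.100.188.
has_vip() {
    local vip="$1"
    grep -qF "inet ${vip}/"
}
```

For example, `ip -o -4 addr show dev eth0 | has_vip 192.168.100.188 && echo "VIP is on this host"`. Stopping keepalived on k8s-ha1 should make the check succeed on k8s-ha2 shortly afterwards, and starting it again should move the VIP back, since k8s-ha1 has the higher priority.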

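2.3.2 Installing and configuring haproxy

HAProxy is compiled and installed by a script. The installation script and the source packages are available from the link at the end of this article.

Installation script:

#!/bin/bash

#Directory the script is run from; the lua and haproxy tarballs are expected here

FILE_DIR=$(pwd)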

LUA_PKG="lua-5.3.5.tar.gz"

LUA_DIR="lua-5.3.5"

HAPROXY_PKG="haproxy-2.0.15.tar.gz"

HAPROXY_DIR="haproxy-2.0.15"

HAPROXY_VER="2.0.15"

function install_system_package(){

grep "Ubuntu" /etc/issue &> /dev/null

if [ $? -eq 0 ];then

apt update

apt install iproute2 ntpdate make tcpdump telnet traceroute nfs-kernel-server nfs-common lrzsz tree openssl libssl-dev libpcre3 libpcre3-dev zlib1g-dev gcc openssh-server iotop unzip zip libreadline-dev libsystemd-dev -y

fi

grep "Kernel" /etc/issue &> /dev/null

if [ $? -eq 0 ];then

yum install vim iotop bc gcc gcc-c++ glibc glibc-devel pcre pcre-devel openssl openssl-devel zip unzip zlib-devel net-tools lrzsz tree ntpdate telnet lsof tcpdump wget libevent libevent-devel bc systemd-devel bash-completion traceroute psmisc -y

fi

}

function install_lua(){

cd ${FILE_DIR} && tar xvf ${LUA_PKG} && cd ${LUA_DIR} && make linux test

}

function install_haproxy(){

#Skip the build if a previous installation left its config directory behind

if [ -d /etc/haproxy ];then

echo "HAProxy is already installed, aborting the installation."

else

mkdir -p /var/lib/haproxy /etc/haproxy

#Build against the lua tree compiled by install_lua

cd ${FILE_DIR} && tar xvf ${HAPROXY_PKG} && cd ${HAPROXY_DIR} && make ARCH=x86_64 TARGET=linux-glibc USE_PCRE=1 USE_OPENSSL=1 USE_ZLIB=1 USE_SYSTEMD=1 USE_CPU_AFFINITY=1 USE_LUA=1 LUA_INC=${FILE_DIR}/${LUA_DIR}/src/ LUA_LIB=${FILE_DIR}/${LUA_DIR}/src/ PREFIX=/apps/haproxy && make install PREFIX=/apps/haproxy && cp haproxy /usr/sbin/

\cp ${FILE_DIR}/haproxy.cfg /etc/haproxy/haproxy.cfg

\cp ${FILE_DIR}/haproxy.service /lib/systemd/system/haproxy.service

systemctl daemon-reload && systemctl restart haproxy && systemctl enable haproxy

#killall -0 only tests whether a haproxy process exists

killall -0 haproxy

if [ $? -eq 0 ];then

echo "HAProxy ${HAPROXY_VER} installed successfully." && echo "Exiting the installer." && sleep 1

else

echo "HAProxy ${HAPROXY_VER} installation failed." && echo "Exiting the installer." && sleep 1

fi

fi

}

main(){

install_system_package

install_lua

install_haproxy

}

main

Install and configure haproxy on node 1:

root@k8s-ha1:~# vim /etc/haproxy/haproxy.cfg

timeout client 300000ms

timeout server 300000ms

listen stats

mode http

bind 0.0.0.0:9999

stats enable

log global

stats uri /haproxy-status

stats auth haadmin:123456

listen k8s-6443

bind 192.168.100.188:6443

mode tcp

balance roundrobin

log global

server 192.168.100.31 192.168.100.31:6443 check inter 3000 fall 2 rise 5

server 192.168.100.32 192.168.100.32:6443 check inter 3000 fall 2 rise 5

server 192.168.100.33 192.168.100.33:6443 check inter 3000 fall 2 rise 5

root@k8s-ha1:~# systemctl enable haproxy

root@k8s-ha1:~# systemctl restart haproxy

Node 2 is installed and configured the same way as node 1, with an identical configuration.
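Because both proxies carry the same backend list, the `server` lines can be generated from the master IPs instead of being typed twice. A small sketch; the function name is hypothetical, and the health-check parameters are copied from the configuration above.

```shell
#!/bin/bash
# Sketch: print one haproxy "server" line per master.
# Assumed (not from the article): the function name.

# $1 is the apiserver port, the remaining arguments are master IPs.
gen_backend_lines() {
    local port="$1" ip
    shift
    for ip in "$@"; do
        printf 'server %s %s:%s check inter 3000 fall 2 rise 5\n' "$ip" "$ip" "$port"
    done
}

gen_backend_lines 6443 192.168.100.31 192.168.100.32 192.168.100.33
```

After editing the file on either node, `haproxy -c -f /etc/haproxy/haproxy.cfg` checks the configuration for syntax errors before the service is restarted.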

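2.4 harbor

Installing harbor is optional. If you use it, you can push images to harbor and have the other nodes pull from it, which saves time. See the next blog post for a detailed installation guide.

2.5 Installing kubeadm and related components

Install kubeadm, kubelet, kubectl, docker, and related components on the master and node hosts. The load balancer servers do not need them.

2.5.1 Installing docker with a one-step script

The version installed is 19.03.15. The package and the script are available from the link at the end of this article.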

#!/bin/bash

DOCKER_FILE="docker-19.03.15.tgz"

DOCKER_DIR="/data/docker"

#wget https://download.docker.com/linux/static/stable/x86_64/docker-19.03.15.tgz

install_docker(){

mkdir -p /data/docker

mkdir -p /etc/docker

tar xvf $DOCKER_FILE -C $DOCKER_DIR

cd $DOCKER_DIR

cp docker/* /usr/bin/

#Create the containerd service file and start the service

cat >/etc/systemd/system/containerd.service <<EOF

[Unit]

Description=containerd container runtime

Documentation=https://containerd.io

After=network.target local-fs.target

[Service]

ExecStartPre=-/sbin/modprobe overlay

ExecStart=/usr/bin/containerd

Type=notify

Delegate=yes

KillMode=process

Restart=always

RestartSec=5

LimitNPROC=infinity

LimitCORE=infinity

LimitNOFILE=1048576

TasksMax=infinity

OOMScoreAdjust=-999

[Install]

WantedBy=multi-user.target

EOF

systemctl enable --now containerd.service

#Prepare the docker service file

cat > /etc/systemd/system/docker.service <<EOF

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service containerd.service

Wants=network-online.target

Requires=docker.socket containerd.service

[Service]

Type=notify

ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

ExecReload=/bin/kill -s HUP \$MAINPID

TimeoutSec=0

RestartSec=2

Restart=always

StartLimitBurst=3

StartLimitInterval=60s

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TasksMax=infinity

Delegate=yes

KillMode=process

OOMScoreAdjust=-500

[Install]

WantedBy=multi-user.target

EOF

#Prepare the docker socket file

cat > /etc/systemd/system/docker.socket <<EOF

[Unit]

Description=Docker Socket for the API

[Socket]

ListenStream=/var/run/docker.sock

SocketMode=0660

SocketUser=root

SocketGroup=docker

[Install]

WantedBy=sockets.target

EOF

groupadd docker

cat >/etc/docker/daemon.json <<EOF

{

"exec-opts": ["native.cgroupdriver=systemd"],

"registry-mirrors": [

"https://fe4wv34b.mirror.aliyuncs.com",

"https://docker.mirrors.ustc.edu.cn",

"http://hub-mirror.c.163.com"

],

"max-concurrent-downloads": 10,

"log-driver": "json-file",

"log-level": "warn",

"log-opts": {

"max-size": "10m",

"max-file": "3"

},

"data-root": "/var/lib/docker"

}

EOF

#Start docker

systemctl enable --now docker.socket && systemctl enable --now docker.service

}

install_docker

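2.5.2 Installing kubelet, kubeadm, and kubectl on all nodes

Configure the Aliyun repository on all nodes and install the components. kubectl is optional on the node hosts.

Configure the Aliyun Kubernetes mirror as a package source (used to install kubelet, kubeadm, and kubectl):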

https://developer.aliyun.com/mirror/kubernetes?spm=a2c6h.13651102.0.0.3e221b11Otippu

apt-get update && apt-get install -y apt-transport-https

curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -

cat <<EOF >/etc/apt/sources.list.d/kubernetes.list

deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main

EOF

apt-get update

apt-get install -y kubelet kubeadm kubectl
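The unpinned install above pulls whatever version is newest in the repository, while the rest of this walkthrough uses v1.20.5. A sketch of pinning the packages follows; the `-00` package revision suffix and the function name are assumptions, so check the exact version strings with `apt-cache madison kubeadm` first.

```shell
#!/bin/bash
# Sketch: build version-pinned package arguments for apt.
# Assumed (not from the article): the "-00" revision suffix and the function name.

K8S_VERSION="1.20.5"

# Print "kubelet=<v>-<rev> kubeadm=<v>-<rev> kubectl=<v>-<rev>".
pinned_packages() {
    local v="$1" rev="${2:-00}" pkg out=()
    for pkg in kubelet kubeadm kubectl; do
        out+=("${pkg}=${v}-${rev}")
    done
    echo "${out[*]}"
}

# Intended use on each host (as root), left commented out here:
# apt-get install -y $(pinned_packages "$K8S_VERSION")
# apt-mark hold kubelet kubeadm kubectl
```

`apt-mark hold` keeps a later `apt upgrade` from moving the cluster components to a different version unintentionally.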

Verify:

2.6 Running kubeadm init on the master node

2.6.1 Using the kubeadm command

https://kubernetes.io/zh/docs/reference/setup-tools/kubeadm/ #command options and help

Available Commands:

alpha #kubeadm commands that are still experimental

completion #bash completion; requires bash-completion to be installed

# mkdir /data/scripts -p

# kubeadm completion bash > /data/scripts/kubeadm_completion.sh

# source /data/scripts/kubeadm_completion.sh

# vim /etc/profile

source /data/scripts/kubeadm_completion.sh

config #manages the configuration of a kubeadm cluster; the configuration is kept in a ConfigMap in the cluster

#kubeadm config print init-defaults

help #help about any command

init #initializes a Kubernetes control plane

join #joins a node to an existing k8s master

reset #reverts the changes made to the host by kubeadm init or kubeadm join

token #manages tokens

upgrade #upgrades the k8s version

version #shows version information

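2.6.2 The kubeadm init command

https://kubernetes.io/zh/docs/reference/setup-tools/kubeadm/ #command usage

https://kubernetes.io/zh/docs/reference/setup-tools/kubeadm/kubeadm-init/ #cluster initialization

root@docker-node1:~# kubeadm init --help

## --apiserver-advertise-address string #the local IP address the K8S API Server advertises that it is listening on

## --apiserver-bind-port int32 #the port the API Server binds to, 6443 by default

--apiserver-cert-extra-sans stringSlice #optional extra Subject Alternative Names (SANs) for the API Server serving certificate; can be IP addresses or DNS names

--cert-dir string #where certificates are stored, /etc/kubernetes/pki by default

--certificate-key string #key used to encrypt the control-plane certificates in the kubeadm-certs Secret

--config string #path to a kubeadm configuration file

## --control-plane-endpoint string #a stable IP address or DNS name for the control plane, i.e. a long-lived, highly available VIP or domain name; multi-master high availability in k8s is built on this option

--cri-socket string #path to the CRI (Container Runtime Interface) socket to connect to; if empty, kubeadm tries to detect it automatically. "Use this option only if more than one CRI is installed or the CRI socket is non-standard"

--dry-run #do not apply any changes, only print what would be done (a test run)

--experimental-kustomize string #path where the kustomize patches for the static pod manifests are stored

--feature-gates string #a set of key=value pairs describing feature gates; options are:

IPv6DualStack=true|false (ALPHA - default=false)

## --ignore-preflight-errors strings #preflight check errors to ignore, e.g. swap; all ignores every check

## --image-repository string #the image repository to pull from, k8s.gcr.io by default

## --kubernetes-version string #the k8s version to install, stable-1 by default

--node-name string #the name of the node

## --pod-network-cidr #the pod IP address range

## --service-cidr #the service network address range

## --service-dns-domain string #the internal k8s domain, cluster.local by default; the DNS service (kube-dns/coredns) resolves the records generated under it

--skip-certificate-key-print #do not print the key used for encryption

--skip-phases strings #phases to skip

--skip-token-print #do not print the token

--token #the token to use

--token-ttl #token lifetime, 24 hours by default; 0 means the token never expires

--upload-certs #upload the control-plane certificates to the kubeadm-certs Secret

#Global options:

--add-dir-header #if true, adds the file directory to the header of log messages

--log-file string #if non-empty, use this log file

--log-file-max-size uint #maximum size of a log file in megabytes, 1800 by default; 0 means unlimited

--rootfs #path to the real host root filesystem (an absolute path)

--skip-headers #if true, omit header prefixes in log messages

--skip-log-headers #if true, omit headers when opening log files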

2.6.3 Preparing the images

[k8s-master1 root ~]# kubeadm config images list --kubernetes-version v1.20.5

k8s.gcr.io/kube-apiserver:v1.20.5

k8s.gcr.io/kube-controller-manager:v1.20.5

k8s.gcr.io/kube-scheduler:v1.20.5

k8s.gcr.io/kube-proxy:v1.20.5

k8s.gcr.io/pause:3.2

k8s.gcr.io/etcd:3.4.13-0

k8s.gcr.io/coredns:1.7.0

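2.6.4 Downloading the images on the master node

Pulling the images on the master node ahead of time shortens the installation. By default the images come from Google's registry, which cannot be reached directly from mainland China, but they can be pulled in advance from the Aliyun mirror registry. This avoids a failed k8s deployment caused by image download errors later on.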

[k8s-master1 root ~]# cat images-download.sh

#!/bin/bash

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.20.5

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.20.5

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.20.5

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.20.5

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.13-0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.7.0
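Rather than maintaining the pull list by hand, the mirror names can be derived from the output of `kubeadm config images list`. This is a sketch under assumptions: the function names are hypothetical, and it relies on the mirror using the same image name and tag as the upstream registry, which is the case for the seven images listed above.

```shell
#!/bin/bash
# Sketch: map upstream image names to the Aliyun mirror and pull them.
# Assumed (not from the article): the function names.

MIRROR="registry.cn-hangzhou.aliyuncs.com/google_containers"

# k8s.gcr.io/kube-apiserver:v1.20.5 -> $MIRROR/kube-apiserver:v1.20.5
mirror_image() {
    echo "${MIRROR}/${1##*/}"
}

# Pull each image from the mirror and tag it with its upstream name.
pull_and_retag() {
    local img
    for img in "$@"; do
        docker pull "$(mirror_image "$img")" &&
            docker tag "$(mirror_image "$img")" "$img"
    done
}

# Intended use on a master, left commented out here:
# pull_and_retag $(kubeadm config images list --kubernetes-version v1.20.5)
```

The re-tagging step only matters if `kubeadm init` is run without `--image-repository`; with that option pointing at the mirror, the pulled images are used as they are.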

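2.6.5 Verifying the current images

2.7 Initializing highly available masters

keepalived provides the highly available VIP and haproxy reverse-proxies kube-apiserver, forwarding management requests for kube-apiserver to multiple k8s masters so that the control plane is highly available.

2.7.1 Initializing a highly available master from the command line

The initialization command below depends on your own cluster design and differs slightly between environments: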

kubeadm init --apiserver-advertise-address=192.168.100.31 --control-plane-endpoint=192.168.100.188 --apiserver-bind-port=6443 --kubernetes-version=v1.20.5 --pod-network-cidr=10.100.0.0/16 --service-cidr=10.200.0.0/16 --service-dns-domain=test.local --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers --ignore-preflight-errors=swap
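The pod network, the service network, and the host network should not overlap. A quick check in plain bash is sketched below; the function names are hypothetical, and the host network 192.168.100.0/24 is an assumption inferred from the addresses in the inventory table.

```shell
#!/bin/bash
# Sketch: test whether two IPv4 CIDR ranges overlap.
# Assumed (not from the article): function names and the /24 host network.

# Convert a dotted-quad address to a 32-bit integer.
ip_to_int() {
    local a b c d
    IFS=. read -r a b c d <<< "$1"
    echo $(( (a << 24) + (b << 16) + (c << 8) + d ))
}

# Succeeds (exit 0) if the two CIDRs overlap.
# Two ranges overlap exactly when they agree on the shorter of the two prefixes.
cidr_overlap() {
    local net1="${1%/*}" len1="${1#*/}" net2="${2%/*}" len2="${2#*/}"
    local len=$(( len1 < len2 ? len1 : len2 ))
    local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
    (( ($(ip_to_int "$net1") & mask) == ($(ip_to_int "$net2") & mask) ))
}

POD_CIDR="10.100.0.0/16"
SVC_CIDR="10.200.0.0/16"
HOST_CIDR="192.168.100.0/24"

if cidr_overlap "$POD_CIDR" "$SVC_CIDR" ||
   cidr_overlap "$POD_CIDR" "$HOST_CIDR" ||
   cidr_overlap "$SVC_CIDR" "$HOST_CIDR"; then
    echo "CIDR ranges overlap, pick different networks"
else
    echo "CIDR ranges are disjoint"
fi
```

Adding `--dry-run` to the `kubeadm init` command prints what would be done without changing the host, which is another way to check the options before the real run.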

2.7.2 Initializing a highly available master from a file

# kubeadm config print init-defaults #print the default init configuration

# kubeadm config print init-defaults > kubeadm-init.yaml #write the default configuration to a file

# cat kubeadm-init.yaml #contents of the modified init file

root@k8s-master1:~# cat kubeadm-init.yaml

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.100.31
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: k8s-master1.example.local
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: 192.168.100.188:6443
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.20.5
networking:
  dnsDomain: jiege.local
  podSubnet: 10.100.0.0/16
  serviceSubnet: 10.200.0.0/16
scheduler: {}

root@k8s-master1:~# kubeadm init --config kubeadm-init.yaml #initialize the k8s master from the file

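2.8 Configuring the kube-config file and the network add-on

2.8.1 The kube-config file

The kube-config file contains the kube-apiserver address and the related authentication information.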

[k8s-master1 root ~]# mkdir -p $HOME/.kube

[k8s-master1 root ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[k8s-master1 root ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

[k8s-master1 root ~]# kubectl get node

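Deploy the flannel network add-on. The manifest has to be downloaded from the internet; the kube-flannel.yml file is also available from the link at the end of this article, along with an export of the images flannel uses, which you can import into docker yourself: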

[k8s-master1 root ~]# wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

[k8s-master1 root ~]# vim kube-flannel.yml

Change Network to 10.100.0.0/16 so that it matches the pod network CIDR used at initialization.

[k8s-master1 root ~]# kubectl apply -f kube-flannel.yml
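The Network edit made in vim can also be scripted, which is handy when the manifest is downloaded again later. A sketch assuming GNU sed and the usual `"Network": "<cidr>"` entry in the `net-conf.json` section of kube-flannel.yml; the function name is hypothetical. Run it before `kubectl apply`.

```shell
#!/bin/bash
# Sketch: set the flannel pod network in a kube-flannel.yml manifest.
# Assumed (not from the article): the function name; requires GNU sed.

# $1 is the manifest path, $2 the pod network CIDR.
# "#" is used as the sed delimiter because the CIDR contains "/".
set_flannel_network() {
    local file="$1" cidr="$2"
    sed -i -E "s#(\"Network\":[[:space:]]*\")[^\"]+#\1${cidr}#" "$file"
}
```

For this cluster the call would be `set_flannel_network kube-flannel.yml 10.100.0.0/16`, matching `--pod-network-cidr`.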

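Verify the master node status. This takes a minute or two:

2.8.2 Generating a certificate key on the current master for adding new control-plane nodes

[k8s-master1 root ~]# kubeadm init phase upload-certs --upload-certs

2.9 Adding nodes to the k8s cluster

Add the other master nodes and the worker nodes to the k8s cluster.

2.9.1 Master node 2

Use the join command shown in the kubeadm init output in 2.7.1, together with the certificate key generated in 2.8.2.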

kubeadm join 192.168.100.188:6443 --token g0v6kt.h3tdcd4uzbarpngk \

--discovery-token-ca-cert-hash sha256:676f6d36823d8c872d6f4831326c3b695999de602c41b319ef744c7ebf201a07 \

--control-plane --certificate-key dcb213158115e9f9fc97c03655527ff8b56e6e628c603bc616940320fb83243b

2.9.2 Master node 3

Use the join command shown in the kubeadm init output in 2.7.1, together with the certificate key generated in 2.8.2.

kubeadm join 192.168.100.188:6443 --token g0v6kt.h3tdcd4uzbarpngk \

--discovery-token-ca-cert-hash sha256:676f6d36823d8c872d6f4831326c3b695999de602c41b319ef744c7ebf201a07 \

--control-plane --certificate-key dcb213158115e9f9fc97c03655527ff8b56e6e628c603bc616940320fb83243b

2.9.3 Adding worker nodes

Every node that joins the k8s cluster needs docker, kubeadm, and kubelet, so run the docker, kubeadm, and kubelet installation steps on each of them first.

kubeadm join 192.168.100.188:6443 --token g0v6kt.h3tdcd4uzbarpngk \

--discovery-token-ca-cert-hash sha256:676f6d36823d8c872d6f4831326c3b695999de602c41b319ef744c7ebf201a07
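The join commands differ only in the control-plane flags, so they can be assembled from the same three values. A sketch with hypothetical function names; the token pattern is the bootstrap token format kubeadm uses (six characters, a dot, sixteen characters, all lowercase letters or digits).

```shell
#!/bin/bash
# Sketch: assemble a kubeadm join command for a worker or a control-plane node.
# Assumed (not from the article): the function names.

# Succeeds if $1 looks like a kubeadm bootstrap token.
is_bootstrap_token() {
    [[ "$1" =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]
}

# $1 endpoint, $2 token, $3 CA cert hash, $4 optional certificate key.
# With a certificate key the node joins as an additional control-plane node.
build_join_cmd() {
    local endpoint="$1" token="$2" hash="$3" cert_key="${4:-}"
    is_bootstrap_token "$token" || { echo "invalid token format: $token" >&2; return 1; }
    local cmd="kubeadm join ${endpoint} --token ${token} --discovery-token-ca-cert-hash ${hash}"
    if [ -n "$cert_key" ]; then
        cmd+=" --control-plane --certificate-key ${cert_key}"
    fi
    echo "$cmd"
}
```

Bootstrap tokens expire after 24 hours by default. If the token from the init output is no longer valid, `kubeadm token create --print-join-command` on a master prints a fresh worker join command, and `kubeadm token list` shows the tokens that still exist.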

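2.9.4 Verifying the node status

Each node joins the master automatically, pulls the images, and starts flannel, until the master finally shows the node as Ready.

2.9.5 Creating containers in k8s and testing the internal network

2.9.6 Verifying the external network

3 Deploying the dashboard

https://github.com/kubernetes/dashboard/releases/tag/v2.7.0

3.1 Deploying dashboard v2.7.0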

[k8s-master1 root ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml

[k8s-master1 root ~]# mv recommended.yaml dashboard-2.7.0.yaml

[k8s-master1 root ~]# vim dashboard-2.7.0.yaml

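Change two lines in the Service definition so that the dashboard is reachable on NodePort 30002, the port used in the steps below.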
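As a reference for that edit, the function below writes out a dashboard Service of type NodePort. It is a sketch, not the author's file: it assumes the two changed lines are `type: NodePort` and `nodePort: 30002`, and that the rest matches the kubernetes-dashboard Service in recommended.yaml (port 443 forwarding to container port 8443). The function name is hypothetical.

```shell
#!/bin/bash
# Sketch: write a NodePort variant of the kubernetes-dashboard Service.
# Assumed (not from the article): the function name and which two lines changed.

# $1 is the output file, $2 the node port (30002 unless given).
write_dashboard_nodeport_service() {
    local out="$1" node_port="${2:-30002}"
    cat > "$out" <<EOF
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: ${node_port}
  selector:
    k8s-app: kubernetes-dashboard
EOF
}
```

Comparing the generated file with the Service block in dashboard-2.7.0.yaml shows which lines to add. The node port has to fall inside the cluster's NodePort range, which is 30000-32767 by default.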

[k8s-master1 root ~]# vim admin-user.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard

[k8s-master1 root ~]# kubectl apply -f dashboard-2.7.0.yaml -f admin-user.yaml

Verify that port 30002 is open:

3.2 Accessing the dashboard

Open https://192.168.100.31:30002 in a browser.

3.3 Getting the login token

[k8s-master1 root ~]# kubectl get secret -A | grep admin

kubernetes-dashboard admin-user-token-m74vn kubernetes.io/service-account-token 3 4m41s

[k8s-master1 root ~]# kubectl describe secret admin-user-token-m74vn -n kubernetes-dashboard

Name: admin-user-token-m74vn

Namespace: kubernetes-dashboard

Labels: <none>

Annotations: kubernetes.io/service-account.name: admin-user

kubernetes.io/service-account.uid: d9834b25-0d89-4675-9fe7-091da4b3da44

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1066 bytes

namespace: 20 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6ImtCdVI2LTN3Rm51N3ZCcmV0Z29UdHR5eTFZZDlNc1FqMUR4OWxkN1JiTzgifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLW03NHZuIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiJkOTgzNGIyNS0wZDg5LTQ2NzUtOWZlNy0wOTFkYTRiM2RhNDQiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.iHaYFnhfB_NJLxq8Cke_eCJkkYiaozCAkSJq3knlV3MS6sqB5NKA77DBWgEWiKzsr12K4JB4lfTx_mtJOY1959z7iPY3_xKaNsSy90sXV4N-8_w0R8WsS_u9rmdJpgculFrw9bEp7QZPTj39Mmqx9yjYsmeLPbInVnRi585sxl9fwi2sxJ4K5PSsEqyundoP_2lAonB5BQ_zARfE8MvK13M4C69hNEfENtpOvyIjEKO4UsaT2g4Tl8Cn0XoVeL6-MTU7_AUmqGC_SlW4ssguGI7jS_GgOwBzVmFjz7y_j-8VMWat-5UL7kyvpH9BAsfJkALzaQn1DHOT4xZtVOUKfw

3.4 The dashboard UI

Source: https://www.toymoban.com/news/detail-774110.html


Important: the files and packages used during this setup are available here:

Link: https://pan.baidu.com/s/1vlyPA-VFMERlcXvZhX_GLQ?pwd=0525 Extraction code: 0525

If you have trouble with the files during setup, you can contact me on QQ: 2573522468
