Hello, good morning. Welcome to ANT 218, Leveling Up Computer Vision and Artificial Intelligence Development. If you are here to learn about Amazon Kinesis Video Streams and how it can help you build computer vision applications, and about AWS customer Kami Vision, you're in the right place.

My name is David Malone. I'm an IoT Specialist Solutions Architect with AWS, and I'm joined this morning by Dr. Ajay Gulati, Chief Technology Officer of Kami Vision.

This is a brief agenda of what we'll be going through today:

- We'll talk a little bit about how Amazon Kinesis Video Streams can be used to help you solve connected video solution problems and train computer vision models.
- Then I will have Dr. Gulati come up and talk about Kami Vision and their innovative patient care solution, KamiCare.
- Then we'll talk a little bit about the things that you can do to get started with using Kinesis Video Streams in your solutions today.

It's helpful to understand what customers are doing with connected video today. Some of these are a little bit obvious. We see camera devices being used in the connected home, everything from smart doorbells to baby monitors, pet cameras and things like that.

In industrial settings, cameras are being placed in operational technology settings, to remotely monitor assets and to automatically detect if something is failing in production lines.

In the public sector, we're seeing traffic management solutions starting to use Kinesis Video Streams for ingesting traffic camera data to then feed that back out to public audiences. We're also seeing public safety solutions being built for things like crowd control, things like that.

Then there's automotive and robotics. We're seeing an uptick in usage in those areas from autonomous vehicles and autonomous robots, where there are plenty of cameras on the vehicles and on the robots, and the video data needs to be ingested into a solution that lets those teams keep training their machine vision models and keep innovating in their areas.

And this is not an all-inclusive list. Obviously, other areas are using connected cameras. As you've walked around re:Invent, you've probably seen cameras everywhere. You also see them in the health care space, such as with Kami Vision, which we'll learn more about shortly.

The challenges that customers face when building these types of solutions are fairly common. They all have issues with scalability and security. It's difficult to build connected camera solutions to begin with. There are a lot of concerns in play, everything from the embedded side, building and connecting the camera, to ingesting all of the video data into a service so that you can do something with it. It's hard to do. There are a lot of different types of camera devices out there, and you could build a solution to do this on your own, but it would take a lot of staff to build out that sort of solution.

Amazon Kinesis Video Streams makes it easy to securely stream video data from camera devices into AWS for analytics, machine learning, playback and other processing. Kinesis Video Streams automatically provisions and elastically scales all the infrastructure needed to ingest streaming video data for the types of use cases that I've already described. It securely stores, encrypts and indexes your data so that you can play it back through easy-to-use APIs.

To begin with, there are two streaming methods that you can use in Kinesis Video Streams. The first we'll just call streams for the sake of this talk. This allows you to ingest data from cameras, store it securely, play it back, and do things like training machine vision models. There are integrations between Kinesis Video Streams and other services, such as SageMaker, that make it easy to build out these sorts of models.

Then there is Kinesis Video Streams WebRTC, which we will just call WebRTC. This is helpful for building low latency, bidirectional streaming video applications, again like smart doorbells. Whereas Kinesis Video Streams is unidirectional, Kinesis Video Streams WebRTC allows for bidirectional communication, both video and audio.

So how do you get started? Kinesis Video Streams provides a number of producer SDKs that are open source, available on GitHub, and written in C++, C and Java. To get started, you would just clone one of these repositories onto your camera devices and begin streaming from there.

But say that you don't have access to modify the camera devices, because you have some existing network cameras that you want to integrate with. You could also take the producer SDK and some of the examples we have in Docker to obtain feeds over protocols such as RTSP and then stream that media up into Kinesis Video Streams. Kinesis Video Streams provides a custom GStreamer sink that allows you to easily do that.
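As a rough illustration of the RTSP path just described, the sketch below only assembles a `gst-launch-1.0` command line that reads from a network camera and hands the video to the `kvssink` element. It assumes the camera emits H.264, that the GStreamer plugin from the producer SDK is already built and on the plugin path, and that AWS credentials come from the environment. The RTSP URL, stream name and region are made-up placeholders.

```python
import shlex
from typing import List


def build_rtsp_to_kvs_command(rtsp_url: str, stream_name: str, region: str) -> List[str]:
    """Return an argv list for a GStreamer pipeline: RTSP camera -> Kinesis Video Streams."""
    return [
        "gst-launch-1.0",
        # Pull the feed from the existing network camera.
        "rtspsrc", f"location={rtsp_url}", "short-header=TRUE", "!",
        # Unwrap the RTP packets and parse the H.264 stream (assumed codec).
        "rtph264depay", "!",
        "h264parse", "!",
        # Hand the parsed video to the Kinesis Video Streams sink element.
        "kvssink", f"stream-name={stream_name}", f"aws-region={region}",
    ]


# Hypothetical camera address and stream name, for illustration only.
cmd = build_rtsp_to_kvs_command("rtsp://192.0.2.10:554/live", "lobby-camera", "us-west-2")
print(shlex.join(cmd))
```

The list form can be passed to `subprocess.run(cmd)` on a machine where the plugin is installed. Printing it first makes it easy to try the same pipeline by hand.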

Now what is a stream, and what are some of the other things that are provided by Kinesis Video Streams and the producer SDK itself? Typically streams are one-to-one with camera devices, so you'd have one stream per camera even if you had multiple cameras at the edge, and the stream itself is going to store that media and allow for playback.

The Kinesis Video Streams Producer SDK has a couple of levers and switches that you can play with that allow you to configure how the media fragments are uploaded from your devices. It also provides buffering, in case you have to deal with intermittent network connectivity, so that the media still makes its way up to Kinesis Video Streams.

One of the things that you can configure is the size of the fragments, or the frequency at which fragments are uploaded into Kinesis Video Streams. That's easily configurable between one and 10 seconds.

Each stream is individually configurable, allowing you to set the retention policy for the amount of time that you want to maintain the media in the cloud. That policy is anywhere from zero, meaning it's not going to store any media at all, up to 10 years, allowing you to then turn around and retrieve that media at a later date and time.
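To make the one-stream-per-camera and retention ideas concrete, here is a minimal sketch that creates a stream with a retention period between zero and ten years. The `create_stream` call and its `StreamName`, `DataRetentionInHours` and `MediaType` parameters are from the boto3 `kinesisvideo` client. The `camera-<id>` naming scheme and the stub client, which lets the sketch run without AWS credentials, are assumptions for illustration.

```python
MAX_RETENTION_HOURS = 10 * 365 * 24  # ten years expressed in hours


def create_camera_stream(kvs_client, camera_id: str, retention_days: int) -> str:
    """Create one stream for one camera and return its ARN."""
    hours = retention_days * 24
    if not 0 <= hours <= MAX_RETENTION_HOURS:
        raise ValueError("retention must be between 0 days and 10 years")
    response = kvs_client.create_stream(
        StreamName=f"camera-{camera_id}",   # hypothetical naming scheme
        DataRetentionInHours=hours,         # 0 means the media is not stored
        MediaType="video/h264",
    )
    return response["StreamARN"]


class StubKvsClient:
    """Stand-in for boto3.client('kinesisvideo'); records calls instead of contacting AWS."""

    def __init__(self):
        self.calls = []

    def create_stream(self, **kwargs):
        self.calls.append(kwargs)
        return {"StreamARN": "arn:aws:kinesisvideo:example:" + kwargs["StreamName"]}


stub = StubKvsClient()
arn = create_camera_stream(stub, "room-4", retention_days=30)
print(arn, stub.calls[0]["DataRetentionInHours"])
```

With real credentials, passing `boto3.client("kinesisvideo")` in place of the stub should issue the same request.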

There are also APIs and interfaces that use HLS streaming and DASH streaming to play back that media, either live or by going back and choosing a specific time period to replay at a later date and time. And then there are APIs that allow you to extract the media directly and build more advanced applications.
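The HLS playback flow can be sketched in two steps: ask the service for the data endpoint of the stream, then ask that endpoint for a streaming session URL. The operation and parameter names below follow the boto3 `kinesisvideo` and `kinesis-video-archived-media` clients. The stub classes and the example URLs are placeholders so the sketch runs offline.

```python
def get_live_hls_url(kvs_client, make_archived_media_client, stream_name: str) -> str:
    """Return an HLS session URL for live playback of one stream."""
    # Step 1: look up the endpoint that serves HLS sessions for this stream.
    endpoint = kvs_client.get_data_endpoint(
        StreamName=stream_name,
        APIName="GET_HLS_STREAMING_SESSION_URL",
    )["DataEndpoint"]
    # Step 2: request a session URL from a client bound to that endpoint.
    media_client = make_archived_media_client(endpoint)
    return media_client.get_hls_streaming_session_url(
        StreamName=stream_name,
        PlaybackMode="LIVE",
    )["HLSStreamingSessionURL"]


class StubKvs:
    def get_data_endpoint(self, **kwargs):
        return {"DataEndpoint": "https://endpoint.example"}


class StubMedia:
    def __init__(self, endpoint):
        self.endpoint = endpoint

    def get_hls_streaming_session_url(self, **kwargs):
        return {"HLSStreamingSessionURL": f"{self.endpoint}/hls/{kwargs['StreamName']}.m3u8"}


url = get_live_hls_url(StubKvs(), StubMedia, "camera-room-4")
print(url)
```

Against AWS, the first argument would be `boto3.client("kinesisvideo")` and the factory would be something like `lambda ep: boto3.client("kinesis-video-archived-media", endpoint_url=ep)`. The returned URL can then be handed to any HLS-capable player.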

In addition to that, we have a media viewer application that will help you build your applications to play back that media. The application, just like everything else I've mentioned, is open source on GitHub. This makes it very easy to get up and running quickly with playing back your media and understanding how these different APIs work and can be used within your own applications.

Finally, for Kinesis Video Streams, there are a number of other ways to extract the media that lands inside of each stream. One of those is a feature that was released earlier this year, which will automatically extract images from the incoming media at an interval that you can set. Those images can then be used either for machine vision applications in the cloud or for creating something like preview images that can be shown on your user interfaces to let you know what's been captured.

These images can be configured to be automatically exported to an S3 bucket of your choice on a periodic basis, which allows you to then build all sorts of custom media processing applications.

Then there are integrations with Amazon Rekognition Video. Today, there's Rekognition Video events, which will automatically detect people, pets and packages. And then of course there's the integration with SageMaker to build and train your own computer vision applications.

Kinesis Video Streams WebRTC is the other set of features, which allows you to build, again, low latency, bidirectional, peer-to-peer connectivity applications.

It can be used to exchange both video and audio media. And the way it works is pretty simple. It's a standards-compliant WebRTC implementation, fully managed on AWS. It starts by, again, installing an open source SDK onto a device and then connecting it to something like AWS IoT Core, which provides a credentials provider to securely connect to services such as Kinesis Video Streams.

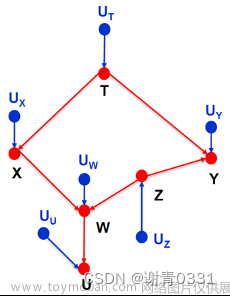

From there, the first thing the device does is connect to a signaling channel. This allows asynchronous remote peer-to-peer connections to be initialized at a later date and time, when the peer is ready to attempt to connect. Once each set of devices is connected to the signaling service, it will have the opportunity to try to exchange what are called ICE candidates through what's called the STUN server.

What this provides is a set of IP addresses that try to bypass NAT restrictions on the network. If you've ever tried to open up something on your home network so that someone can just view a web page, you'll notice sometimes that just doesn't work.

WebRTC was designed with that kind of thing in mind, to help bypass NAT restrictions, firewalls and so on. So a set of candidate IP addresses are exchanged and negotiated between each of the peers, and the SDKs help you take care of all of this. It's just kind of nitty-gritty detail, and it just works from there.

Signaling messages are exchanged and the connection is negotiated. Then, if direct peer-to-peer connectivity cannot be achieved, there is a managed TURN service that allows the media to be relayed back through the cloud so that the media connection is not interrupted.
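The preference for direct connectivity over a TURN relay falls out of how ICE ranks candidates. The sketch below computes the candidate priority formula from RFC 8445 using the type preferences that the RFC recommends, and shows that a relayed candidate ends up last. It is a simplified model: a real ICE agent ranks candidate pairs and runs connectivity checks, which the SDKs handle for you.

```python
# Type preferences recommended by RFC 8445 (higher means "try this first").
TYPE_PREFERENCE = {
    "host": 126,   # address on the device's own interface
    "prflx": 110,  # peer reflexive, discovered during connectivity checks
    "srflx": 100,  # server reflexive, the public address learned via STUN
    "relay": 0,    # address allocated on a TURN server
}


def candidate_priority(candidate_type: str, local_preference: int = 65535, component_id: int = 1) -> int:
    """priority = 2^24 * type preference + 2^8 * local preference + (256 - component ID)."""
    return (2 ** 24) * TYPE_PREFERENCE[candidate_type] + (2 ** 8) * local_preference + (256 - component_id)


candidates = ["relay", "host", "srflx"]
ordered = sorted(candidates, key=candidate_priority, reverse=True)
print(ordered)  # ['host', 'srflx', 'relay']
```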

So with that, I'd like to introduce you to Dr. Ajay Gulati.

Ok, thanks David. David has talked about Kinesis Video Streams and WebRTC and a couple of AWS technologies here. I'm going to talk about a real-life use case where we took some of those technologies and built a very unique vision AI platform. Then we built a solution on top of that platform, and we launched that solution. There are actually two cameras here, so we are going to do a live demo as well towards the end. And since the solution is about fall detection in elder care, hopefully we'll do a safe fall on the stage and we'll be able to do that demo.

So, this one next. Ok. Let me start with the main goal with which we started this project at Kami Vision, and also give you a brief overview of the company.

At Kami Vision, one of our main goals is: how do we make high quality vision AI affordable and accessible to consumers, SMBs and even enterprises across different verticals? If you look at vision AI solutions, there are two types of solutions in the market.

One, there are vertically integrated solutions that focus on one use case, one vertical, one problem. What we came to realize is that if you look at a lot of different use cases, there is a huge amount of commonality between them, and I'll walk you through what a typical solution is and what is common. The idea is that if you build that common substrate, then using that, we can build a lot of solutions very quickly.

Let me just briefly talk about the company. So we are headquartered in San Jose, California. We have about 200 plus employees all over the world. We have roughly 15 million devices connected to our back end and we have about 6 million active users and the cameras are being sold across different use cases in different countries.

Now, a lot of our cameras, they are also used for a general home security use case and we deliver almost 240 million alerts. This might include motion alerts, person detection and all sorts of alerts. And we have huge amount of data that comes every day from these alerts from the cameras.

Now, let's start with the exact problem statement that we did for the project. So the goal is how do we build this robust vision AI platform that can handle diverse use cases across verticals, across industries.

If you look at any such solution, there are few components which are common to all of them. First is that you need to do device management. You would have bunch of cameras connected to some back end and you need to be able to manage those devices.

Second is that you need to be able to deal with events coming from those cameras. These days, with the advances in AI technologies, a lot of cameras come with an AI chip, so you can run the AI on the camera itself. That is one of the areas we focus on: we try to look for use cases where AI runs on the camera instead of in the cloud, because that helps with latency, it helps with network bandwidth, and in general it keeps the cost of the solution very low.

Then we basically, once we get the event, each event comes with some sort of storage, so that storage is kept in the cloud, there might be some time interval defined for that particular video or storage to be saved in the cloud. And you should be able to access all of this platform across multiple devices.

So we typically offer a web-based interface for access, and also a mobile app so that you can get notifications and other functionality on the mobile app. Another very common feature that pretty much every solution has is that you want to be able to do alerting and notifications.

What that means is that once you get an event, somebody needs to handle that event, and you should be able to say if the event comes between 8am to 8pm send it to this person, send a phone notification SMS email. If the event comes later in the night, you might send it to somebody else on the staff and it may be a different form of notification itself.
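One way to picture this time-of-day routing is as a small table of time windows, each mapped to a recipient and a set of channels. The sketch below is only a model of the idea, not Kami Vision's implementation. The recipient names and channel labels are hypothetical, and the night window wraps past midnight.

```python
from dataclasses import dataclass
from datetime import time
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Route:
    start: time
    end: time
    recipient: str
    channels: Tuple[str, ...]

    def covers(self, t: time) -> bool:
        """True if t falls in [start, end), including windows that wrap past midnight."""
        if self.start <= self.end:
            return self.start <= t < self.end
        return t >= self.start or t < self.end


def pick_route(routes: List[Route], event_time: time) -> Optional[Route]:
    """Return the first route whose window covers the event time, or None."""
    return next((r for r in routes if r.covers(event_time)), None)


ROUTES = [
    Route(time(8, 0), time(20, 0), "day-nurse", ("phone", "sms", "email")),
    Route(time(20, 0), time(8, 0), "night-staff", ("phone",)),
]

print(pick_route(ROUTES, time(10, 15)).recipient)  # day-nurse
print(pick_route(ROUTES, time(23, 30)).recipient)  # night-staff
```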

And in addition to all that, one of the very basic requirements is you need to be able to handle users and access control for those users. So the goal is we wanted to build such a platform while using some of the key pieces that we could leverage from AWS, which we don't have to build ourselves. But also add enough things where we can provide enough value on top of Kinesis and other solutions.

So let me go over the high level product design and how it looks, and then I'll go into further details. If you look at the high level design here, you can see on the left hand side there are cameras, and the platform should be able to support a lot of different cameras. In most cases, we look for cameras which have an AI chip. In cases where the camera doesn't have an AI chip, we look to add an AI box on the edge itself.

So the AI processing still happens on the edge. And in order for a camera to be compatible with the platform, we have built a layer of, you can say, application code that we run on the camera to make it compatible with the cloud. That application code essentially includes the KVS SDK that David talked about.

It includes the WebRTC SDK, and it also includes the IoT Core SDK, which is another one of the services that we leverage. Now, once you have the devices or different types of cameras on the left hand side, the middle piece is the actual core back end. It essentially has all of these services that we talked about, like camera management, managing different AI models, dealing with video and image storage, user access control, alerts and notifications, and things like that.

And if you think about any vision AI solution, you would notice that in most cases, what really changes is just the AI model. Everything else is pretty much common, because what else would you do? You would have cameras, you would have events, you would have notifications, and you would deal with them. The AI model is the one piece that needs to change. And that does take some time and effort, especially if you are trying to run the AI model on the camera, because it's easier to develop models on standard GPUs.

But cameras come with specific AI chips with their own restrictions. So it takes time and effort to do some of that porting as well. On the right hand side is more of the consumption of the platform, which as I said, before is using web and mobile and there you can consume events, you can manage devices and you can see videos and things like that.

So with that, let me go into a little bit more detailed architecture. So you can see. Yes. Oh how about we do it at the end? Yeah, that's fine.

So here is a little bit more detailed design of how things fit together. So as I said, on the left hand side, we have the consumption from the web or the mobile app, all the front end apps, then there is a load balancer through which all the API requests go. And then there are two instances which are running containers and in most cases, those are the containers of services that we have built ourselves.

After that, there is a layer of AWS services that we consume. I'm sure most people here are familiar with these services. SQS is for queuing, and S3 is for some storage. KVS, Kinesis Video Streams, is where the cameras are actually uploading. Each camera has a unique stream on which events are coming.

IoT Core does the device management itself, so that we can see when the devices are online or offline and we can talk to the device. We can make a change in the shadow, and the shadow change gets reflected on the device. So it's more about just managing that device and managing the settings on the device.
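As a sketch of the shadow mechanism mentioned here, the snippet below writes a desired state into a device shadow. The device picks up the change and applies it. The `update_thing_shadow` call with `thingName` and `payload` is from the boto3 `iot-data` client. The thing name and the setting keys are invented for illustration, and a stub client is used so the example runs without AWS access.

```python
import json


def push_camera_settings(iot_data_client, thing_name: str, settings: dict) -> bytes:
    """Write the requested settings into the shadow's desired state and return the payload sent."""
    payload = json.dumps({"state": {"desired": settings}}).encode("utf-8")
    iot_data_client.update_thing_shadow(thingName=thing_name, payload=payload)
    return payload


class StubIotDataClient:
    """Stand-in for boto3.client('iot-data'); records calls instead of contacting AWS."""

    def __init__(self):
        self.calls = []

    def update_thing_shadow(self, **kwargs):
        self.calls.append(kwargs)
        return {}


iot = StubIotDataClient()
# Hypothetical thing name and setting keys.
sent = push_camera_settings(iot, "camera-room-4", {"pre_fall_seconds": 30, "night_mode": True})
print(sent.decode("utf-8"))
```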

And then RDS is the database for our back end. On the right hand side, you can see that there are cameras, and the cameras typically talk to only two or three services. One, they talk to IoT Core so that the cameras can be managed through IoT Core. They talk to KVS so that they can upload the video stream. And then they also talk to our own back end, because there are certain API calls that they make directly to our own back end.

Now, some of the key benefits of using Kinesis Video Streams and also WebRTC. I would say the main benefit that we get from using KVS is one, it significantly reduced the development time that we took to build the platform. When we started the project, we actually looked at a lot of different milestones that need to be hit. And then once we started replacing some of the components, we realized that we can shrink the time by almost six months.

Second is that since we are leveraging some of these pieces, we also don't need to have a dedicated team to deal with all the video management functions. Whenever you build a component, you have to build some of the ancillary functions in order to troubleshoot and do other things. There we could leverage the existing features of the Kinesis Video Streams UI.

It also helped with troubleshooting when problems happened, because there is support around that. Third is that every company has to deal with their own DevOps challenges, where once you build something, you have to deploy that infrastructure, you have to monitor it, you have to take care of alerting, logs, all of these things. In this case, we don't need to worry about the video platform's scaling, deployment, monitoring and things like that.

And finally using WebRTC as a standard for live video where one it gives us lower latency, other solutions typically have much longer latency. Second, it's a well known standard. And third, again, we don't have to worry about WebRTC infrastructure.

Another benefit that I want to talk about, which is what we got from AWS, is this. One, because we were leveraging AWS, there is a large pool of AWS-certified developers, DevOps and other engineers who have already dealt with different constructs on AWS. That also helped, because we could easily get people who knew all the functionality already.

Second, in this case, since this was a solution we were building from the ground up, we had a couple of questions concerns things like that. And AWS team itself introduced us to various partners to help with the development and we actually interviewed a couple of them. We ended up hiring a partner who actually had a small team that worked with our team and that partner had already done a couple of AWS projects and AWS supported that through credits and other financial incentives as well.

Working with that partner also sped up the development time. And thanks to AWS, they subsidized that overall cost and made the decision easier within the company as well.

Now, with that in mind, I would like to go into the specific use case that we built, because so far I have talked about the platform, but a platform is only as good as what you can do with it. The first solution that we built using this platform is what we call KamiCare. It's a fall management solution. It's focused on fall detection in elder care communities, and even more so on memory care communities, which are dealing with people with Alzheimer's.

Ok. Now, if you look at falls, I'm not sure how many people are familiar with this. This is not something that people may have heard or realized, but falls are one of the leading causes of hospitalization in adults aged 65 or older. Every 20 seconds, there is a fall in the US which can lead to significant injury. The main problem is that most of these falls are unwitnessed. And if somebody is suffering from dementia, then it's even harder to know what exactly happened, and they can't explain what happened.

And in many cases, the only recourse is to take them for EMS checkup. And then you go through a full checkup and realize is there something that you really need to pay attention to?

Now, KamiCare is what we have built and deployed. This is a solution which is deployed in senior communities. It's very easy to deploy using literally Wi-Fi based cameras. I have two of these cameras right here on the stage. There is very high accuracy for the AI, plus we have a human in the loop to make sure that pretty much no false positive goes through. Whenever there is a fall, within a minute or so it sends an alert to the care staff. Once they get the alert, they also get a small video of how exactly the fall happened. They will go and attend to the person immediately. Then we also let them store a much longer video with more of the pre-fall as well as the post-fall, so that later on it can be reviewed and you can figure out if there is something that can be done to avoid future falls.

Ok? One of the key advantages of a video-based solution is, first, that you get to see the reason for the fall. Second, you can also differentiate between a behavioral fall, which means somebody just lowered themselves to the ground and was found on the ground, so there is really no action to be taken, and a real fall. It can save a lot of trouble for the care staff and for the family if you are able to save some of the EMS visits, because now you know exactly what happened. In many cases, we have also seen that once you realize the reason for the fall, there are scenarios where we can prevent future falls. A lot of falls may happen when somebody is getting in and out of bed, or they may be trying to reach something which they are not able to reach. Maybe that thing needs to be closer to the bed, or some positioning in the room needs to change. This has a huge benefit, because now you can see what's causing the fall. Otherwise, all of them go unwitnessed.

Just to give a little bit of a glimpse of the UI and how it looks: in the demo, I'll explain a little bit more and we'll go through a live system. But essentially the UI shows you a fall history page where you can see a lot of these falls and when they happened. There is a video attached to each one, and the same UI lets you do other operations related to KamiCare.

Ok. Similarly, once you set up the cameras, you can define different rules and schedules. If your community has three different shifts, you can define three different schedules. You can define the different people who are part of a schedule, and you can define a notification which says that in this schedule, you need to notify this set of people using this mechanism. You can also set up different levels of escalation, where you can say, ok, notify via phone, SMS or email, and until it is acknowledged, it goes through more people.
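The escalation behavior described here can be modeled as walking through levels of recipients until someone acknowledges. The sketch below is a simplified illustration rather than the product's logic. The `notify` and `is_acknowledged` callbacks are hypothetical hooks, and a real system would wait for a timeout between levels instead of checking immediately.

```python
from typing import Callable, Optional, Sequence


def escalate(
    levels: Sequence[Sequence[str]],
    notify: Callable[[str], None],
    is_acknowledged: Callable[[], bool],
) -> Optional[int]:
    """Notify each level in turn; return the index of the level that acknowledged, or None."""
    for index, recipients in enumerate(levels):
        for person in recipients:
            notify(person)
        # In practice this check would happen after an acknowledgement window expires.
        if is_acknowledged():
            return index
    return None


sent = []
acks = iter([False, True])  # first level ignores the alert, second level acknowledges
result = escalate([["nurse-a"], ["supervisor"], ["director"]], sent.append, lambda: next(acks))
print(result, sent)  # 1 ['nurse-a', 'supervisor']
```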

Ok. In addition to that, we also launched a mobile app. We realized that in some of the communities, the staff have a phone that in many cases is not even connected to 4G or 5G. It's an internal phone on which they have other apps installed to take care of things within the community. So we built a phone app so that they can see the notification on that phone as well, see the video, and take appropriate action.

Ok. With that, I would like to go through a KamiCare demo, and I will show the setup here. And then David, are you planning to do the fall? Ok. Do it gently. It doesn't detect how hard you fall. It's only that you are on the ground, that's what matters.

Ok. I think you can get up. It will take some time because it does pre buffer and some post buffer. So it will wait for that much video to be accumulated once the fall is detected and then it will go through the system and then we should see the notification.

Ok. So in the meantime, I'll just go through the UI a little bit. You can see here that we have schedules, which tell you the time window. We have receivers. These are the people who are going to get the alert. And then we have rules that essentially map a schedule to different receivers with different escalation levels. And you can specify what sort of alert should come.

I'm also sending a message to the team in the office so that they will also do a fall in one of the other rooms. If I look at the managed cameras, you can see that there are a couple of other cameras in this facility which are installed in our office. So I'm sending them a message that they can also do a fall right now. Then if there is a Wi-Fi issue here, we'll be able to capture it from there.

Ok. Let's go to the fall history page. I just want to show how a fall actually looks here. Let's go to one of the falls that we tested recently. This is one of the labs we have in the office, where we change the setup of the room and do some testing. In this case, you can see that somebody is walking in before the fall. The person appears in the video because when a fall is detected, there is a preconfigured pre-fall period. So if the fall is detected at a certain time, it gives you the video from 15 or 30 seconds before that and then a few minutes after that. These are configurable in the UI.
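The pre-fall and post-fall window amounts to simple time arithmetic around the detection timestamp. A minimal sketch, assuming a 30 second pre-fall buffer and a two minute post-fall buffer as defaults (the talk only says these values are configurable):

```python
from datetime import datetime, timedelta
from typing import Tuple


def clip_window(detected_at: datetime, pre_fall_s: int = 30, post_fall_s: int = 120) -> Tuple[datetime, datetime]:
    """Return the (start, end) of the video clip to keep around a detected fall."""
    return (
        detected_at - timedelta(seconds=pre_fall_s),
        detected_at + timedelta(seconds=post_fall_s),
    )


start, end = clip_window(datetime(2022, 11, 30, 11, 18, 0))
print(start.time(), end.time())  # 11:17:30 11:20:00
```

The need to wait until `end` before the clip is complete is also why the alert takes a little while to show up after the fall itself.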

Let's see if any new fall has come. Oh, this camera says offline, which is not a good sign. Yeah, I think the Wi-Fi has been a little choppy. There's one more thing I just want to quickly highlight. If you look at any camera, let's say the furniture got changed in the room. You can configure the view of the camera and set up an avoidance zone. You can basically say, don't detect anything on the bed. So you can set up an avoidance zone on the bed and make sure that falls are not detected in certain areas of the room.

I actually got an alert on my phone here. So that's the concept. You can see that it says "Entry Corner with Avoidance Zone". There are certain zones where, if somebody is sleeping or sitting in that area, no fall is detected, and that also significantly reduces the false positive rate.
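An avoidance zone can be thought of as a region of the camera frame in which detections are suppressed. The sketch below uses axis-aligned rectangles in normalized image coordinates and tests the center point of a detection. Both choices are assumptions made for illustration, since the talk does not say how zones are represented.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass(frozen=True)
class Zone:
    """Rectangle in normalized image coordinates (0.0 to 1.0)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


def should_alert(detection_center: Tuple[float, float], avoidance_zones: Iterable[Zone]) -> bool:
    """Suppress the alert when the detection falls inside any avoidance zone."""
    x, y = detection_center
    return not any(zone.contains(x, y) for zone in avoidance_zones)


bed = Zone(0.10, 0.50, 0.45, 0.95)  # hypothetical zone drawn over the bed
print(should_alert((0.30, 0.70), [bed]))  # False: person is lying on the bed
print(should_alert((0.80, 0.85), [bed]))  # True: person is on the floor elsewhere
```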

I see. Ok. So actually, just to let you know, I'm getting a phone call because my number is there on the notification. I think they did the fall and I'm getting a phone call here. I'll see if it's easy to... (Automated call:) "A fall was detected by Opposite Corner with Avoidance Zones in Room Four, AWS Demo, on November 30th, 2022 at 11:18. Press [inaudible] to acknowledge."

Ok. So basically... (Automated call:) "Maybe someone acknowledged, or the token got expired."

Ok. So basically, since my phone was there on the notification, the phone call came, and once somebody acknowledges, it won't go to the next level. But coming back to your question. From what I understand, the first question was: we put the AI model on the camera, so how do we update the AI model? Right?

So there are actually two ways to update the AI model, and on different cameras we have implemented different ways. One is that when the camera comes online, it can check if there is a new AI model available and download that AI model on the fly. It can check on every camera power-on. Second, in some cases the AI model is not separated out. It is part of the camera firmware. So when there is a new firmware available for the camera and you do a firmware upgrade, the AI model also comes onto the camera as part of that. And in this UI, if you... I'm getting another call. I don't know how many falls they are doing. (Automated call:) "A fall was detected by Opposite Corner with Avoidance Zones in Room Four, AWS Demo, on November 30th, 2022 at 11... Thanks for acknowledging. Maybe someone acknowledged, or the token got expired."
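The first update path, where the camera checks for a newer model each time it powers on, boils down to a version comparison. A minimal sketch, assuming dotted numeric version strings (the talk does not describe the actual versioning scheme):

```python
from typing import Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn '1.10.0' into (1, 10, 0) so versions compare numerically, not alphabetically."""
    return tuple(int(part) for part in text.split("."))


def needs_model_update(installed: str, latest: str) -> bool:
    """True when the published model version is newer than the one on the camera."""
    return parse_version(latest) > parse_version(installed)


print(needs_model_update("1.9.0", "1.10.0"))  # True
print(needs_model_update("2.0.0", "2.0.0"))   # False
```

On a `True` result, the camera would download the new model. In the firmware-bundled case the same check would apply to the firmware version instead.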

Ok. I think they sent me a message saying that there was a fall, and it shows up here as well. If you can see the fall, this is the fall in that room. Ok. And it looks like there is another fall. There are two falls that came in.

Ok? I want to quickly highlight another solution that we built using the same platform. I don't know how many people are familiar with PERS. PERS stands for personal emergency response system, and it is an industry of its own. Beam is a company which is one of the leading providers in the PERS industry, where somebody who needs help can press a button and get help in terms of emergency services or 911. The thing with this industry is that it's a well-known, existing industry, but there are a lot of false positives. You don't know what exactly happened. You don't know if the button was pressed by mistake, and you don't know what exactly is the help needed.

So what we did with Bela Medical is we took one of the cameras and designed a custom integration. This is another one of the cameras on our platform, and it has a lid. In order to give complete privacy, the lid remains closed pretty much all of the time. Only when somebody presses a button for help does the lid open up, and the camera will record a video and send it to the cloud. The same video goes to the monitoring center where the button press goes. Now they can see that an alert has come along with that video, and they can figure out what exactly is going on. They can send much better help, or they can deal with a false alert, and things like that.

Ok? Going back to, I think, one of the questions that was asked: how can we make it generic and sell it to others? There is another UI that we have, which is called Kami Hub. It is more of a generic UI. It's not specific to fall detection or any other solution. This is what we use when we work with partners and customers to say, look, this is a generic UI, and you can pretty much use something like that. We can either white label it, or somebody can build their own UI using our API if they want to.

Ok. Um before wrapping up, I just wanted to mention a few things that I learned as part of this journey and wanted to share some of these experiences. The first thing is I feel that as a technical leader where you are thinking of building the product, especially in a start up company, it's critical to focus on the product value and proving that value out first, instead of spending a lot of technical resources trying to build everything on your own.

Second, whenever you think of a solution, the first thing that I typically start with is the architecture diagram. You see what you need, and you should be able to pretty much foresee the full solution with all the components, or a blueprint that you can design. Then you can cherry-pick and say, ok, this component we don't have to build today, let's replace it with something. These days, as you know, nobody is building most storage solutions themselves. You are pretty much picking the storage back end that best fits the need. But I think the same can now be applied to a lot of other components in any architecture.

The third thing that we realized: we built the product internally, we did a lot of testing, and everything was looking great. But once you go and deploy in the field, you realize that there are just so many things that can go wrong, things that you did not account for. For example, Wi-Fi is one of those things. When we go to communities and deploy the cameras, the most time actually goes into making sure good Wi-Fi is available to the cameras. We have built in some mechanisms by which we can reboot the camera if needed and make it easier to change Wi-Fi settings if needed. In some cases, we even go and install extenders and then install the cameras.
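One way a reboot mechanism like the one mentioned above could be shaped is a small watchdog that counts failed connectivity checks. This is only a sketch under assumptions: the talk does not say how the reboot is triggered, and `WifiWatchdog`, `record_check`, and the threshold of three failures are hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class WifiWatchdog:
    """Decide when a camera should reboot after repeated failed connectivity checks.

    Hypothetical illustration; not the mechanism described in the talk.
    """
    failures_before_reboot: int = 3  # assumed threshold
    consecutive_failures: int = 0
    reboots: int = 0

    def record_check(self, connected: bool) -> str:
        """Record one connectivity check and return the action to take."""
        if connected:
            # Any successful check clears the failure streak.
            self.consecutive_failures = 0
            return "ok"
        self.consecutive_failures += 1
        if self.consecutive_failures >= self.failures_before_reboot:
            # Enough failures in a row: ask for a reboot and start counting again.
            self.consecutive_failures = 0
            self.reboots += 1
            return "reboot"
        return "retry"
```

On a real device the `"reboot"` result would be wired to whatever restart hook the firmware exposes; that part is deliberately left out here.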

So I just feel that a product meeting customers is very different from a product meeting QA. What QA does seems to me like a clean-room test. Meeting customers is where you really discover real-world problems. We have had cases where people would pull out the plug of the camera. I mean, it's just not a clean environment out there. Once we deployed in some of the communities, we learned a lot of the quirks in those communities, and we made a bunch of changes quickly over the next three months to make sure that we are able to handle the real-world scenarios out there.

I think that applies to whichever solution you are building: make sure that you keep some time after you launch the product, so that in the next two to three months you can actually make it a lot more robust.

So just to summarize, in terms of KVS benefits: KVS makes it very easy to stream videos from devices and to do certain operations on those videos, in terms of storage, analysis, and lifecycle. We don't have to write any code for that.

Second, KVS handles all the infrastructure required for this solution, so all of that headache goes away from the DevOps team or the dev team. It supports both live video using WebRTC and playback of events. What we showed was essentially a playback of a stored event on KVS.
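For playback of a stored event, Kinesis Video Streams offers HLS playback among other options. The talk does not say which playback API was used in the demo, so the following is only a sketch that assumes HLS and boto3. The stream name, the clip length, and the helper names `hls_playback_request` and `hls_playback_url` are hypothetical.

```python
from datetime import datetime, timedelta
from typing import Any, Dict


def hls_playback_request(stream_name: str, event_start: datetime,
                         clip_seconds: int, expires_s: int = 300) -> Dict[str, Any]:
    """Build the arguments for GetHLSStreamingSessionURL covering one stored event."""
    return {
        "StreamName": stream_name,
        "PlaybackMode": "ON_DEMAND",  # play back stored media rather than live
        "HLSFragmentSelector": {
            "FragmentSelectorType": "PRODUCER_TIMESTAMP",
            "TimestampRange": {
                "StartTimestamp": event_start,
                "EndTimestamp": event_start + timedelta(seconds=clip_seconds),
            },
        },
        "Expires": expires_s,  # lifetime of the session URL, in seconds
    }


def hls_playback_url(stream_name: str, event_start: datetime, clip_seconds: int) -> str:
    """Fetch an HLS session URL for the event; needs AWS credentials and boto3."""
    import boto3  # imported lazily so the request builder works without AWS access

    # The archived-media APIs must be called against a stream-specific endpoint.
    kvs = boto3.client("kinesisvideo")
    endpoint = kvs.get_data_endpoint(
        StreamName=stream_name,
        APIName="GET_HLS_STREAMING_SESSION_URL",
    )["DataEndpoint"]
    media = boto3.client("kinesis-video-archived-media", endpoint_url=endpoint)
    response = media.get_hls_streaming_session_url(
        **hls_playback_request(stream_name, event_start, clip_seconds)
    )
    return response["HLSStreamingSessionURL"]
```

The returned URL can be handed to any HLS-capable player. Live viewing over WebRTC goes through the separate signaling-channel APIs and is not covered by this sketch.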

It's also pretty easy to integrate with AI models running in the cloud. We actually have some other solutions where the cameras don't have an AI chip or enough power to do the AI. In that case, we try to get part of the video, for example based on motion detection or human detection, whatever the camera can do. Then we run the AI in the cloud on that particular video, because 24/7 streaming is almost a no-no: it consumes too much network bandwidth and costs too much. But if you can filter the videos down to a smaller duration, you can run the AI in the cloud.
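The filtering idea above can be sketched as follows: the camera's own motion or human detection marks which seconds matter, and only short padded clips around those seconds are uploaded for cloud inference. This is an illustration under assumptions, not the speakers' implementation. The per-second detection flags, the `pad_s` value, and the names `Clip`, `clips_from_detections`, and `upload_fraction` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass(frozen=True)
class Clip:
    start_s: int  # first second included in the clip
    end_s: int    # one past the last second included in the clip

    @property
    def duration_s(self) -> int:
        return self.end_s - self.start_s


def clips_from_detections(detected: Sequence[bool], pad_s: int = 2) -> List[Clip]:
    """Turn per-second on-camera detections into padded clips to upload.

    detected[i] is True when the camera saw motion or a person in second i.
    Detections whose padded windows would overlap or touch share one clip.
    """
    clips: List[Clip] = []
    start: Optional[int] = None
    last = 0

    def close_clip() -> None:
        # Pad both sides, clamped to the bounds of the recording.
        clips.append(Clip(max(0, start - pad_s), min(len(detected), last + 1 + pad_s)))

    for t, hit in enumerate(detected):
        if not hit:
            continue
        if start is not None and t - last - 1 > 2 * pad_s:
            close_clip()  # quiet gap is long enough to start a new clip
            start = None
        if start is None:
            start = t
        last = t
    if start is not None:
        close_clip()
    return clips


def upload_fraction(clips: Sequence[Clip], total_s: int) -> float:
    """Share of the recording that gets uploaded instead of streamed 24/7."""
    return sum(c.duration_s for c in clips) / total_s
```

With detections in only a few seconds of each minute, the uploaded share stays small, which is the bandwidth and cost argument made above.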

And finally, I think the SDKs were really helpful for building the application quickly, because we now have ready-made SDKs from AWS for KVS, WebRTC, and IoT Core. So you really have to write just the pieces that you care about. A lot of the other headache is pretty much taken care of for you.

OK. I think that's pretty much what I had. Dave, do you have anything to wrap things up?

Thank you. So, we've already talked about this a few times, but if you want to get started with Kinesis Video Streams, one thing we wanted to offer is a quick smart home reference architecture. As you can see here, there's a connected camera device on the left-hand side, the web and mobile applications are on the right-hand side, and right in the middle are the AWS services used in this case. You could get started by downloading and installing the SDKs, either on a camera or on a gateway device, to obtain the media. It's fairly easy to turn around and begin transmitting that video northbound to the cloud, and then begin building applications to consume that video. So there's a quick reference architecture for you. Other than that, we don't have anything else for you today. Apologies, one more thing: please do take time to rate the session, and thank you for coming today.
