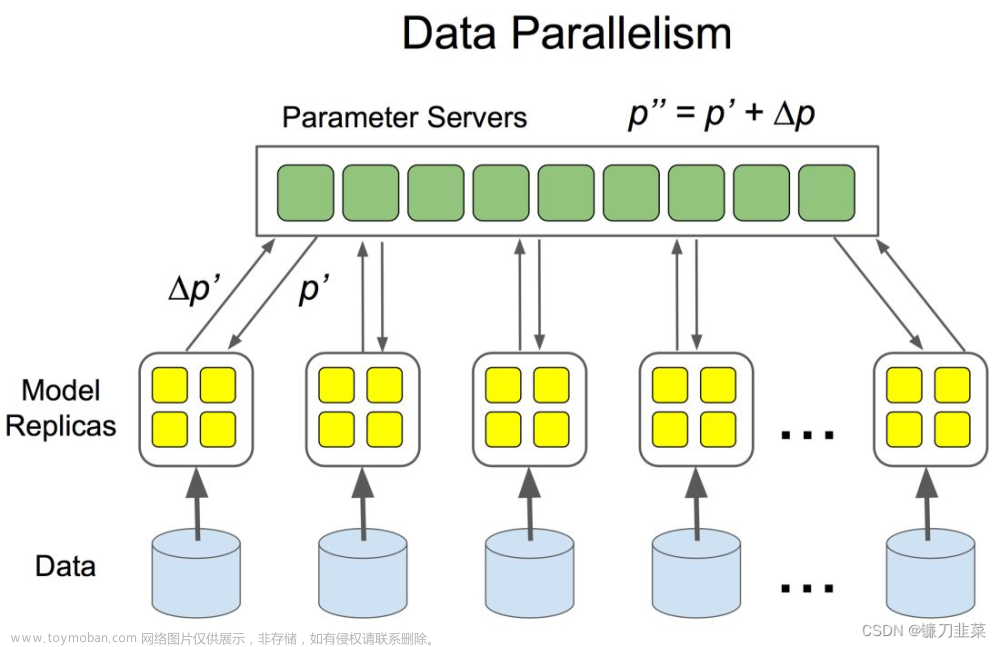

分布式训练相关基本参数的概念如下:

Definitions

-

Node- A physical instance or a container; maps to the unit that the job manager works with. -

Worker- A worker in the context of distributed training. -

WorkerGroup- The set of workers that execute the same function (e.g. trainers). -

LocalWorkerGroup- A subset of the workers in the worker group running on the same node. -

RANK- The rank of the worker within a worker group. -

WORLD_SIZE- The total number of workers in a worker group. -

LOCAL_RANK- The rank of the worker within a local worker group. -

LOCAL_WORLD_SIZE- The size of the local worker group. -

rdzv_id- A user-defined id that uniquely identifies the worker group for a job. This id is used by each node to join as a member of a particular worker group.

-

rdzv_backend- The backend of the rendezvous (e.g.c10d). This is typically a strongly consistent key-value store. -

rdzv_endpoint- The rendezvous backend endpoint; usually in form<host>:<port>.

A Node runs LOCAL_WORLD_SIZE workers which comprise a LocalWorkerGroup. The union of all LocalWorkerGroups in the nodes in the job comprise the WorkerGroup.

翻译:

Node: 通常代表有几台机器

Worker: 指一个训练进程

WORD_SIZE: 总训练进程数,通常与所有机器加起来的GPU数相等(通常每个GPU跑一个训练进程)

RANK: 每个Worker的标号,用来标识每个每个训练进程(所有机器)

LOCAL_RANK : 在同一台机器上woker的标识,例如一台8卡机器上的woker标识就是0-7

总结:

一个节点(一台机器) 跑 LOCAL_WORLD_SIZE 个数的workers, 这些workers 构成了LocalWorkerGroup(组的概念),

所有机器上的LocalWorkerGroup 就组成了WorkerGroup

ps: Local 就是代表一台机器上的相关概念, 当只有一台机器时,Local的数据和不带local的数据时一致的

reference:文章来源:https://www.toymoban.com/news/detail-783602.html

torchrun (Elastic Launch) — PyTorch 2.1 documentation文章来源地址https://www.toymoban.com/news/detail-783602.html

到了这里,关于pytorch 分布式 Node/Worker/Rank等基础概念的文章就介绍完了。如果您还想了解更多内容,请在右上角搜索TOY模板网以前的文章或继续浏览下面的相关文章,希望大家以后多多支持TOY模板网!