1. Concepts

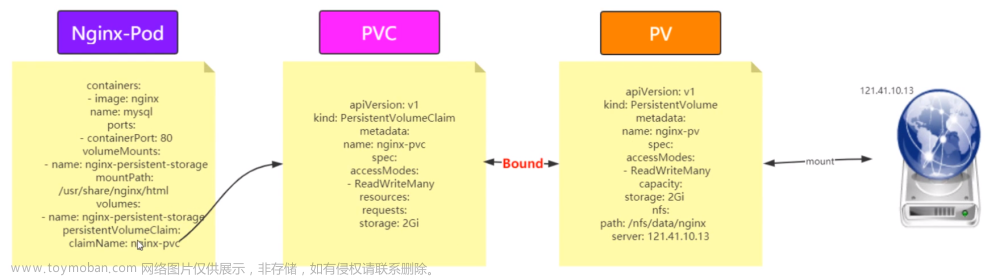

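A StorageClass describes a class of storage. Once a StorageClass has been created, storage volumes can be generated from it dynamically for Kubernetes users.

With a StorageClass, PVs are created dynamically from PVCs, which saves administrators the work of creating PVs by hand.

A StorageClass definition consists mainly of a name, the backend storage provisioner (`provisioner`), and configuration parameters for that backend. Once a StorageClass has been created it cannot be modified; to change it, you have to delete it and create it again.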

2. Creating the StorageClass

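To use a StorageClass, the matching automatic provisioning program must be installed. The storage backend in this article is NFS, so we use a program called NFS-Subdir-External-Provisioner, which we also simply call the Provisioner.

Tutorial: https://github.com/kubernetes-sigs/nfs-subdir-external-provisioner. The Provisioner uses an NFS server that has already been set up to create persistent volumes automatically; in other words, it creates the PVs for us.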

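Each automatically created PV is backed by a directory in the NFS server's shared data directory, named in the format `{namespace}-{pvcName}-{pvName}`.

When such a PV is reclaimed and archiving is enabled (as with `archiveOnDelete: "true"` in the StorageClass below), the directory is kept on the NFS server and renamed to `archived-{namespace}-{pvcName}-{pvName}`.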
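The naming rule above can be written as two small bash helpers, which is handy when looking for a claim's data on the NFS server. This is a sketch that assumes the Provisioner's default directory naming (no custom path pattern configured); the function names `pv_dir_name` and `archived_dir_name` are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helpers that compute the directory names described above,
# assuming the provisioner's default naming (no custom path pattern).

# pv_dir_name NAMESPACE PVC_NAME PV_NAME
# Prints the directory created for a PV: {namespace}-{pvcName}-{pvName}
pv_dir_name() {
  local namespace=$1 pvc_name=$2 pv_name=$3
  printf '%s-%s-%s\n' "$namespace" "$pvc_name" "$pv_name"
}

# archived_dir_name NAMESPACE PVC_NAME PV_NAME
# Prints the name the directory gets when the PV is reclaimed with
# archiving enabled: archived-{namespace}-{pvcName}-{pvName}
archived_dir_name() {
  printf 'archived-%s\n' "$(pv_dir_name "$1" "$2" "$3")"
}
```

For example, `ls "/root/data/nfs/$(pv_dir_name dev storage-pvc pvc-8b5da590-2436-472e-a671-038822f15252)"` lists the data of the claim tested later in this article.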

Before deploying NFS-Subdir-External-Provisioner, an NFS server must already be installed and running. The installation was covered in an earlier article: https://blog.csdn.net/u011837804/article/details/128588864

2.1 Cluster environment

```bash
[root@k8s-master ~]# kubectl cluster-info
Kubernetes control plane is running at https://10.211.55.11:6443
CoreDNS is running at https://10.211.55.11:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.
[root@k8s-master ~]# kubectl get nodes -owide
NAME         STATUS   ROLES           AGE   VERSION   INTERNAL-IP    EXTERNAL-IP   OS-IMAGE          KERNEL-VERSION          CONTAINER-RUNTIME
k8s-master   Ready    control-plane   19h   v1.25.0   10.211.55.11   <none>        CentOS Stream 8   4.18.0-408.el8.x86_64   docker://20.10.22
k8s-node1    Ready    <none>          19h   v1.25.0   10.211.55.12   <none>        CentOS Stream 8   4.18.0-408.el8.x86_64   docker://20.10.22
k8s-node2    Ready    <none>          19h   v1.25.0   10.211.55.13   <none>        CentOS Stream 8   4.18.0-408.el8.x86_64   docker://20.10.22
```

2.2 Create the ServiceAccount

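Most Kubernetes clusters today use RBAC for access control, so we need to create a ServiceAccount with the required permissions and bind it to the NFS-Subdir-External-Provisioner component that we deploy later.

Note: the ServiceAccount is required. Without it, PVs are not created dynamically and PVCs stay in the Pending state.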

RBAC manifest nfs-rbac.yaml:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  namespace: dev
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: dev
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  namespace: dev
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  namespace: dev
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: dev
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io
```

Apply it with `kubectl apply -f nfs-rbac.yaml`. The `dev` namespace used throughout this article must already exist (it can be created with `kubectl create namespace dev`).
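To confirm that the bindings took effect, `kubectl auth can-i` can impersonate the ServiceAccount, whose username has the form `system:serviceaccount:<namespace>:<name>`. The sketch below wraps that check for the permissions listed in the manifest. The function names are hypothetical, and it assumes your own kubeconfig user is allowed to impersonate service accounts (a cluster-admin user is).

```bash
# sa_username NAMESPACE NAME
# Prints the username Kubernetes assigns to a ServiceAccount.
sa_username() {
  printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# check_provisioner_rbac NAMESPACE SA_NAME
# Impersonates the ServiceAccount and asks the API server whether it may
# perform actions granted in nfs-rbac.yaml. Returns 1 if any check is denied.
check_provisioner_rbac() {
  local ns=$1 sa=$2 user check rc=0
  user=$(sa_username "$ns" "$sa")
  # "VERB RESOURCE" pairs taken from the ClusterRole and Role rules
  for check in \
    "create persistentvolumes" \
    "delete persistentvolumes" \
    "update persistentvolumeclaims" \
    "list storageclasses.storage.k8s.io" \
    "create events" \
    "update endpoints"; do
    # $check is deliberately unquoted so it splits into VERB and RESOURCE
    if kubectl auth can-i $check --as="$user" -n "$ns" >/dev/null 2>&1; then
      echo "ok     $check"
    else
      echo "DENIED $check"
      rc=1
    fi
  done
  return "$rc"
}
```

Run `check_provisioner_rbac dev nfs-client-provisioner` after applying the manifest; a DENIED line points at a missing rule or binding.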

2.3 Deploy NFS-Subdir-External-Provisioner

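We use the master node (10.211.55.11) as the NFS server, with /root/data/nfs as the shared directory, and deploy NFS-Subdir-External-Provisioner with the provisioner name storage-nfs (the StorageClass created later is named nfs-storage).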
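Before deploying, it is worth checking from a worker node that /root/data/nfs is actually exported by 10.211.55.11, because the provisioner pod mounts it over NFS. The sketch below parses the output of `showmount -e <server>`; the function name `export_listed` is hypothetical, and it assumes `showmount` (from nfs-utils) is installed and the server answers it with the usual layout: a header line followed by one `<path> <clients>` line per export.

```bash
# export_listed PATH
# Reads `showmount -e` output on stdin and succeeds if PATH is exported.
export_listed() {
  # NR > 1 skips the "Export list for <server>:" header line;
  # awk exits 0 if some export path ($1) equals the wanted path, else 1.
  awk -v want="$1" 'NR > 1 && $1 == want { found = 1 } END { exit !found }'
}
```

Usage: `showmount -e 10.211.55.11 | export_listed /root/data/nfs && echo "export OK"`.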

Create nfs-provisioner-deploy.yaml:

```yaml
kind: Deployment
apiVersion: apps/v1
metadata:
  name: nfs-client-provisioner
  namespace: dev
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nfs-client-provisioner
  strategy:
    type: Recreate # upgrade by deleting the old pod before creating the new one (the default is RollingUpdate)
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner # the ServiceAccount created in the previous step
      containers:
        - name: nfs-client-provisioner
          image: registry.cn-beijing.aliyuncs.com/mydlq/nfs-subdir-external-provisioner:v4.0.0
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME # provisioner name; StorageClasses created later must use this same value
              value: storage-nfs
            - name: NFS_SERVER # NFS server address; must match the value under volumes
              value: 10.211.55.11
            - name: NFS_PATH # NFS server data directory; must match the value under volumes
              value: /root/data/nfs
            - name: ENABLE_LEADER_ELECTION
              value: "true"
      volumes:
        - name: nfs-client-root
          nfs:
            server: 10.211.55.11 # NFS server address
            path: /root/data/nfs # NFS shared directory
```
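The comments in the manifest require the `NFS_SERVER`/`NFS_PATH` environment variables and the `nfs` volume to agree. Once the Deployment has been applied as shown next, both can be read back with `kubectl get -o jsonpath` and compared. The function name is hypothetical, and the sketch assumes the provisioner is the first container and the volume is named `nfs-client-root`, as in the manifest.

```bash
# check_nfs_settings NAMESPACE DEPLOYMENT
# Compares the NFS env vars of the provisioner container with the nfs volume.
check_nfs_settings() {
  local ns=$1 deploy=$2 field env_value vol_value rc=0
  for field in server path; do
    # NFS_SERVER / NFS_PATH from the first container's env
    env_value=$(kubectl get deploy "$deploy" -n "$ns" -o \
      "jsonpath={.spec.template.spec.containers[0].env[?(@.name==\"NFS_${field^^}\")].value}")
    # server / path from the volume named nfs-client-root
    vol_value=$(kubectl get deploy "$deploy" -n "$ns" -o \
      "jsonpath={.spec.template.spec.volumes[?(@.name==\"nfs-client-root\")].nfs.$field}")
    if [ -n "$env_value" ] && [ "$env_value" = "$vol_value" ]; then
      echo "ok       $field: $env_value"
    else
      echo "MISMATCH $field: env='$env_value' volume='$vol_value'"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `check_nfs_settings dev nfs-client-provisioner`.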

Result:

```bash
# Create
[root@k8s-master ~]# kubectl apply -f nfs-provisioner-deploy.yaml
deployment.apps/nfs-client-provisioner created

# Check
[root@k8s-master ~]# kubectl get deploy,pod -n dev
NAME                                     READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/nfs-client-provisioner   1/1     1            1           9s

NAME                                         READY   STATUS    RESTARTS   AGE
pod/nfs-client-provisioner-59b496764-5kts2   1/1     Running   0          9s
```

2.4 Create the NFS StorageClass

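When creating a PVC we usually specify `storageClassName`. This field holds the name of a StorageClass resource: the PVC uses it to select a StorageClass, and the Provisioner associated with that StorageClass then creates the PV dynamically. That is why a StorageClass resource has to be created in advance.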

Create nfs-storageclass.yaml:

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  # StorageClass is cluster-scoped, so no namespace is set
  name: nfs-storage
  annotations:
    storageclass.kubernetes.io/is-default-class: "false" # whether this is the cluster's default StorageClass
provisioner: storage-nfs # must equal the PROVISIONER_NAME env value of the Deployment above
parameters:
  archiveOnDelete: "true" # "false": data is not kept when the PVC is deleted; "true": data is kept
mountOptions:
  - hard # use a hard mount
  - nfsvers=4 # NFS version; set it according to the NFS server version (see below)
```
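Although the provisioner and parameters of a StorageClass cannot be changed, its metadata can: the Kubernetes documentation on changing the default StorageClass does it by patching the `is-default-class` annotation used in the manifest above. The helper below only builds that patch body; its name is hypothetical.

```bash
# default_class_patch true|false
# Prints a patch body that sets the is-default-class annotation.
default_class_patch() {
  case $1 in
    true|false) ;;
    *) echo "usage: default_class_patch true|false" >&2; return 2 ;;
  esac
  printf '{"metadata":{"annotations":{"storageclass.kubernetes.io/is-default-class":"%s"}}}\n' "$1"
}
```

Usage: `kubectl patch storageclass nfs-storage -p "$(default_class_patch true)"`. After that, new PVCs that omit `storageClassName` use nfs-storage; keep at most one class marked as default.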

```bash
# Check the NFS server version: "Server nfs v4" means the server runs version 4
[root@k8s-master ~]# nfsstat -v
Server packet stats:
packets udp tcp tcpconn
813 0 813 237

Server rpc stats:
calls badcalls badfmt badauth badclnt
585 228 228 0 0

Server reply cache:
hits misses nocache
0 0 585

Server io stats:
read write
0 0

Server read ahead cache:
size 0-10% 10-20% 20-30% 30-40% 40-50% 50-60% 60-70% 70-80% 80-90% 90-100% notfound
32 0 0 0 0 0 0 0 0 0 0 0

Server file handle cache:
lookup anon ncachedir ncachenondir stale
0 0 0 0 0

Server nfs v4:
null compound
8 1% 577 98%

Server nfs v4 operations:
op0-unused op1-unused op2-future access close
0 0% 0 0% 0 0% 36 2% 0 0%
commit create delegpurge delegreturn getattr
0 0% 5 0% 0 0% 0 0% 335 22%
getfh link lock lockt locku
55 3% 0 0% 0 0% 0 0% 0 0%
lookup lookup_root nverify open openattr
51 3% 0 0% 0 0% 0 0% 0 0%
open_conf open_dgrd putfh putpubfh putrootfh
0 0% 0 0% 344 23% 0 0% 21 1%
read readdir readlink remove rename
0 0% 3 0% 0 0% 0 0% 3 0%
renew restorefh savefh secinfo setattr
0 0% 0 0% 3 0% 0 0% 5 0%
setcltid setcltidconf verify write rellockowner
0 0% 0 0% 0 0% 0 0% 0 0%
bc_ctl bind_conn exchange_id create_ses destroy_ses
0 0% 0 0% 17 1% 10 0% 8 0%
free_stateid getdirdeleg getdevinfo getdevlist layoutcommit
0 0% 0 0% 0 0% 0 0% 0 0%
layoutget layoutreturn secinfononam sequence set_ssv
0 0% 0 0% 10 0% 535 36% 0 0%
test_stateid want_deleg destroy_clid reclaim_comp allocate
0 0% 0 0% 7 0% 9 0% 0 0%
copy copy_notify deallocate ioadvise layouterror
0 0% 0 0% 0 0% 0 0% 0 0%
layoutstats offloadcancel offloadstatus readplus seek
0 0% 0 0% 0 0% 0 0% 0 0%
write_same
0 0%

# Check the NFS client version: "Client nfs v4" means the client uses version 4
[root@k8s-node1 ~]# nfsstat -c
Client rpc stats:
calls retrans authrefrsh
586 0 586

Client nfs v4:
null read write commit open
8 1% 0 0% 0 0% 0 0% 0 0%
open_conf open_noat open_dgrd close setattr
0 0% 0 0% 0 0% 0 0% 5 0%
fsinfo renew setclntid confirm lock
30 5% 0 0% 0 0% 0 0% 0 0%
lockt locku access getattr lookup
0 0% 0 0% 36 6% 46 7% 51 8%
lookup_root remove rename link symlink
10 1% 0 0% 3 0% 0 0% 0 0%
create pathconf statfs readlink readdir
5 0% 20 3% 92 15% 0 0% 3 0%
server_caps delegreturn getacl setacl fs_locations
50 8% 0 0% 0 0% 0 0% 0 0%
rel_lkowner secinfo fsid_present exchange_id create_session
0 0% 0 0% 0 0% 17 2% 10 1%
destroy_session sequence get_lease_time reclaim_comp layoutget
8 1% 165 28% 1 0% 9 1% 0 0%
getdevinfo layoutcommit layoutreturn secinfo_no test_stateid
0 0% 0 0% 0 0% 10 1% 0 0%
free_stateid getdevicelist bind_conn_to_ses destroy_clientid seek
0 0% 0 0% 0 0% 7 1% 0 0%
allocate deallocate layoutstats clone
0 0% 0 0% 0 0% 0 0%
```
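Rather than scanning the whole listing, the version headers can be pulled out directly. This sketch assumes `nfsstat` prints one `Server nfs vN:` or `Client nfs vN:` header per active protocol version, as in the output above; the function name `nfs_versions` is hypothetical.

```bash
# nfs_versions Server|Client
# Reads nfsstat output on stdin and prints each NFS version found in
# headers such as "Server nfs v4:" (one per line). Lines like
# "Server nfs v4 operations:" are ignored because the colon must follow
# the version number directly.
nfs_versions() {
  local side=$1
  sed -nE "s/^${side} nfs v([0-9.]+):[[:space:]]*\$/\1/p"
}
```

Usage: `nfsstat -v | nfs_versions Server` on the NFS server and `nfsstat -c | nfs_versions Client` on a node; the result is the value to use for `nfsvers=` in `mountOptions`.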

Result:

```bash
# Create
[root@k8s-master ~]# kubectl apply -f nfs-storageclass.yaml
storageclass.storage.k8s.io/nfs-storage created

# Check (StorageClass is cluster-scoped, so -n dev has no effect here)
[root@k8s-master ~]# kubectl get sc -n dev
NAME          PROVISIONER   RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
nfs-storage   storage-nfs   Delete          Immediate           false                  7s
```

3. Test a PVC that uses the StorageClass

Create storage-pvc.yaml:

```yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: storage-pvc
  namespace: dev
spec:
  storageClassName: nfs-storage # must match the name of the StorageClass created above
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Mi
```

Result:

```bash
# Create
[root@k8s-master ~]# kubectl apply -f storage-pvc.yaml
persistentvolumeclaim/storage-pvc created

# Check the PVC
[root@k8s-master ~]# kubectl get pvc -n dev
NAME          STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
storage-pvc   Bound    pvc-8b5da590-2436-472e-a671-038822f15252   1Mi        RWO            nfs-storage    6s

# Check whether a PV was created dynamically (PVs are cluster-scoped, so -n dev has no effect)
[root@k8s-master ~]# kubectl get pv -n dev
NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM             STORAGECLASS   REASON   AGE
pvc-8b5da590-2436-472e-a671-038822f15252   1Mi        RWO            Delete           Bound    dev/storage-pvc   nfs-storage             19s

# Check whether a directory was created in the shared directory
[root@k8s-master ~]# cd /root/data/nfs/
[root@k8s-master nfs]# ls
dev-storage-pvc-pvc-8b5da590-2436-472e-a671-038822f15252
```

4. Troubleshooting

If something goes wrong, see https://blog.csdn.net/u011837804/article/details/128693933 for solutions.
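A common symptom is a PVC that stays in Pending. The sketch below polls the claim's phase once per second and, if it never becomes Bound, prints the claim's events and the provisioner's recent logs, which usually point at the cause (for example missing RBAC permissions, a provisioner name that does not match, or an unreachable NFS export). The function name and default timeout are hypothetical, and it assumes the provisioner runs as `deploy/nfs-client-provisioner` in the `dev` namespace, as in this article.

```bash
# wait_for_pvc_bound NAME NAMESPACE [TIMEOUT_SECONDS]
# Polls the PVC phase once per second; returns 0 as soon as it is Bound.
# On timeout, prints diagnostics to stderr and returns 1.
wait_for_pvc_bound() {
  local name=$1 ns=$2 timeout=${3:-60} phase i
  for ((i = 0; i < timeout; i++)); do
    phase=$(kubectl get pvc "$name" -n "$ns" -o jsonpath='{.status.phase}')
    if [ "$phase" = "Bound" ]; then
      echo "pvc/$name is Bound"
      return 0
    fi
    sleep 1
  done
  echo "pvc/$name is still '${phase:-unknown}' after ${timeout}s" >&2
  # The claim's events usually name the provisioner it is waiting for.
  kubectl describe pvc "$name" -n "$ns" >&2
  # Assumed provisioner location: deployment nfs-client-provisioner in dev.
  kubectl logs deploy/nfs-client-provisioner -n dev --tail=20 >&2
  return 1
}
```

Usage: `wait_for_pvc_bound storage-pvc dev 30`.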
