Goals

1. Build your own model service on top of an open-source large language model;

2. Fine-tune your own large model.

Model selection

We use the Tongyi Qianwen (Qwen) model: https://github.com/QwenLM/Qwen

Steps

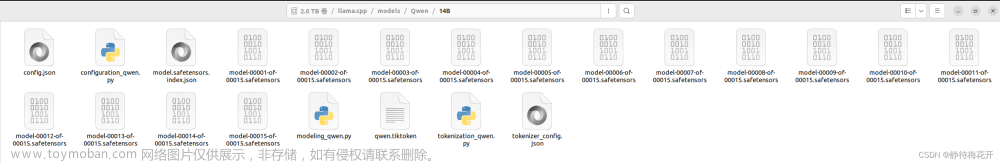

1. Download the model files

Open-source model hub: https://www.modelscope.cn/models

mkdir -p /data/qwen

cd /data/qwen

git clone --depth 1 https://www.modelscope.cn/qwen/Qwen-14B-Chat.git

# On machines with little memory, download the 1.8B-parameter model instead; the 14B model needs tens of GB of RAM

# git clone --depth 1 https://www.modelscope.cn/qwen/Qwen-1_8B-Chat.git
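The "tens of GB" figure can be checked with rough arithmetic: the weights alone take about (number of parameters) × (bytes per parameter). The sketch below assumes the nominal parameter counts (14B and 1.8B; the real counts differ slightly) and ignores activations, the KV cache and framework overhead, so actual usage is higher. The helper name is illustrative only.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int) -> float:
    """Memory needed just to hold the weights, in decimal GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Nominal sizes of the two checkpoints mentioned above (assumption).
MODELS = [("Qwen-14B-Chat", 14e9), ("Qwen-1_8B-Chat", 1.8e9)]
# bf16/fp16 store 2 bytes per parameter, fp32 stores 4.
DTYPES = [("bf16/fp16", 2), ("fp32", 4)]

for name, params in MODELS:
    for dtype, nbytes in DTYPES:
        print(f"{name:15s} {dtype:9s} ~{weight_memory_gb(params, nbytes):.1f} GB")
```

This gives about 28 GB (bf16/fp16) or 56 GB (fp32) for the 14B model, versus about 3.6 GB or 7.2 GB for the 1.8B model, which is why the smaller checkpoint is the practical choice on low-memory machines.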

2. Pull the Docker image

docker pull qwenllm/qwen

3. Start script

https://github.com/QwenLM/Qwen/blob/main/docker/docker_web_demo.sh

# Change the following values

IMAGE_NAME=qwenllm/qwen

QWEN_CHECKPOINT_PATH=/data/qwen/Qwen-14B-Chat

PORT=8000

CONTAINER_NAME=qwen

4. Run

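sh docker_web_demo.sh

Once the demo is up, open http://localhost:8000 (the host port set by PORT above) in a browser. The script prints output like the following; check the container logs for errors.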

Successfully started web demo. Open '...' to try!

Run `docker logs ...` to check demo status.

Run `docker rm -f ...` to stop and remove the demo.

Result
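The container can report success while the model is still loading inside it, so the page may not answer right away. To confirm the result from a script instead of a browser, a minimal polling sketch like the one below can help. It assumes the demo answers plain HTTP GET requests on the host port (8000 with the PORT value above); the function name and the timeout values are hypothetical choices, not part of the Qwen scripts.

```python
import http.client
import time
import urllib.request


def wait_for_http(url: str, timeout: float = 300.0, interval: float = 5.0) -> bool:
    """Poll `url` until it answers with a non-error status or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=interval) as resp:
                if resp.status < 400:
                    return True
        except (OSError, http.client.HTTPException):
            # Refused/reset connections and HTTP errors (URLError and
            # HTTPError are OSError subclasses): the model is probably
            # still loading, so try again after a short pause.
            pass
        time.sleep(interval)
    return False
```

For example, `wait_for_http("http://localhost:8000")` returns True once the web demo responds. If it returns False, `docker logs qwen` (the CONTAINER_NAME set above) is the next place to look.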

Documentation

https://github.com/QwenLM/Qwen/blob/main/README_CN.md

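FAQ

1. Error when starting the container

docker: Error response from daemon: could not select device driver "" with capabilities: [[gpu]].

Docker cannot provide a GPU to the container, typically because the host has no NVIDIA GPU or the NVIDIA Container Toolkit is not installed. Remove --gpus all from docker_web_demo.sh so the container starts without a GPU. The model then runs on the CPU, which is much slower.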

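2. Error while deserializing header: HeaderTooLarge

Install git-lfs first (yum install git-lfs, then run git lfs install once), and only then download the model files. The model weights are stored with Git Large File Storage (LFS), so git-lfs is required. Without it, git clone fetches only small text pointer files instead of the actual weights, and loading them fails with the following traceback: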

Traceback (most recent call last):
  File "web_demo.py", line 209, in <module>
    main()
  File "web_demo.py", line 203, in main
    model, tokenizer, config = _load_model_tokenizer(args)
  File "web_demo.py", line 50, in _load_model_tokenizer
    model = AutoModelForCausalLM.from_pretrained(
  File "/usr/local/lib/python3.8/dist-packages/transformers/models/auto/auto_factory.py", line 511, in from_pretrained
    return model_class.from_pretrained(
  File "/usr/local/lib/python3.8/dist-packages/transformers/modeling_utils.py", line 3091, in from_pretrained
    ) = cls._load_pretrained_model(
  File "/usr/local/lib/python3.8/dist-packages/transformers/modeling_utils.py", line 3456, in _load_pretrained_model
    state_dict = load_state_dict(shard_file)
  File "/usr/local/lib/python3.8/dist-packages/transformers/modeling_utils.py", line 458, in load_state_dict
    with safe_open(checkpoint_file, framework="pt") as f:
safetensors_rust.SafetensorError: Error while deserializing header: HeaderTooLarge
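The error message makes sense once you know the file layout. A .safetensors file starts with an 8-byte little-endian integer giving the length of its JSON header. An LFS pointer file instead starts with the text "version https://git-lfs...", and the bytes "version " read as such an integer give a number on the order of 10^18, far too large for a header. The diagnostic sketch below applies that check to the downloaded shards. The function names are hypothetical, and it only inspects the first bytes, so it does not prove a file is fully intact.

```python
import os
import struct

# Git LFS pointer files are small text files that start with this line.
LFS_POINTER_PREFIX = b"version https://git-lfs.github.com/spec/v1"


def classify_weight_file(head: bytes, file_size: int) -> str:
    """Classify a .safetensors file from its first bytes and total size.

    Returns "lfs-pointer", "ok" or "corrupt".
    """
    if head.startswith(LFS_POINTER_PREFIX):
        # git-lfs was missing at clone time: only the pointer was downloaded.
        return "lfs-pointer"
    if len(head) < 8:
        return "corrupt"
    # The first 8 bytes are a little-endian unsigned 64-bit header length.
    (header_len,) = struct.unpack("<Q", head[:8])
    # A header that cannot fit in the file means this is not valid safetensors.
    return "ok" if header_len <= file_size - 8 else "corrupt"


def check_path(path: str) -> str:
    """Read the start of `path` and classify it."""
    with open(path, "rb") as f:
        head = f.read(64)
    return classify_weight_file(head, os.path.getsize(path))


if __name__ == "__main__":
    import sys

    for p in sys.argv[1:]:
        print(p, check_path(p))
```

Saved as, say, check_weights.py, it can be run as `python check_weights.py /data/qwen/Qwen-14B-Chat/*.safetensors`. Any file reported as "lfs-pointer" needs to be downloaded again with git-lfs installed.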

3. Cannot allocate memory

The machine does not have enough memory; try the smaller 1_8B model (Qwen-1_8B-Chat) instead.
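To decide between the two models before downloading tens of GB, a small sketch can compare the memory the kernel reports as available with each model's approximate weight size. It assumes Linux (it parses the text of /proc/meminfo), nominal parameter counts, 2 bytes per parameter (bf16/fp16), and an arbitrary 20% safety margin; all of these and the function names are illustrative assumptions, not values from the Qwen documentation.

```python
from typing import Optional

# Nominal parameter counts, largest first; real counts differ slightly.
MODELS = [("Qwen-14B-Chat", 14e9), ("Qwen-1_8B-Chat", 1.8e9)]


def mem_available_bytes(meminfo_text: str) -> int:
    """Return MemAvailable from the text of /proc/meminfo, in bytes."""
    for line in meminfo_text.splitlines():
        if line.startswith("MemAvailable:"):
            # Format: "MemAvailable:   12345678 kB" (kB here means 1024 bytes)
            return int(line.split()[1]) * 1024
    raise ValueError("MemAvailable not found in meminfo text")


def pick_model(available: int, bytes_per_param: int = 2,
               headroom: float = 1.2) -> Optional[str]:
    """Largest model whose weights, times a hypothetical margin, fit in memory."""
    for name, params in MODELS:
        if params * bytes_per_param * headroom <= available:
            return name
    return None
```

On the target machine, `with open("/proc/meminfo") as f: print(pick_model(mem_available_bytes(f.read())))` prints the suggested checkpoint, or None if neither fits. Pass `bytes_per_param=4` if the model will be loaded in fp32.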
