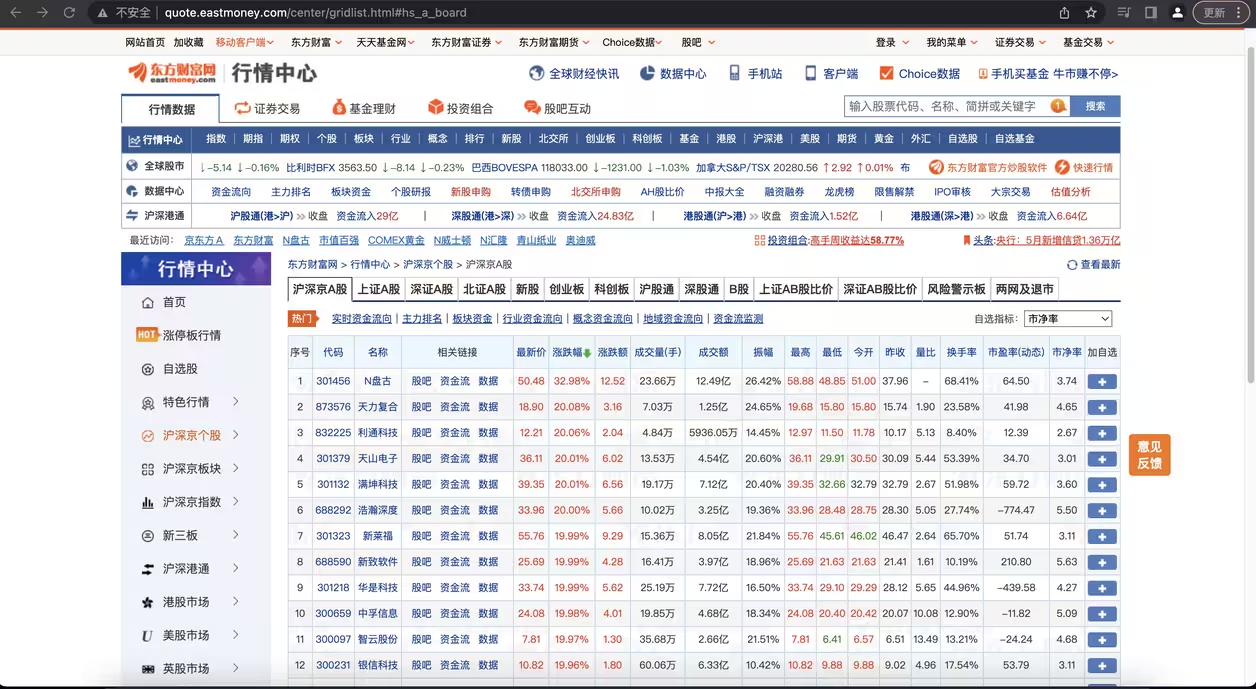

学习主要内容:使用Python定时在非节假日爬取东方财富股行情数据存入数据库中,

东方财富行情中心网地址如下:

http://quote.eastmoney.com/center/gridlist.html#hs_a_board

东方财富行情中心网地址

通过点击该网站的下一页发现,网页内容在变化,但是网站的 URL 却不变,说明这里使用了 Ajax 技术,动态从服务器拉取数据,这种方式的好处是可以在不重新加载整幅网页的情况下更新部分数据,减轻网络负荷,加快页面加载速度。

通过 F12 来查看网络请求情况,可以很容易的发现,网页上的数据都是通过如下地址请求的:

http://38.push2.eastmoney.com/api/qt/clist/get?cb=jQuery112409036039385296142_1658838397275&pn=3&pz=20&po=1&np=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&wbp2u=|0|0|0|web&fid=f3&fs=m:0+t:6,m:0+t:80,m:1+t:2,m:1+t:23,m:0+t:81+s:2048&fields=f1,f2,f3,f4,f5,f6,f7,f8,f9,f10,f12,f13,f14,f15,f16,f17,f18,f20,f21,f23,f24,f25,f22,f11,f62,f128,f136,f115,f152&_=1658838404848

Json及URL地址

接下来同过多次,来观察该地址的变化情况,发现其中的pn参数代表这页数,于是通过修改&pn=后面的数字来访问不同页面对应的数据:

import requests

import json

import os

headers = {

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/107.0.0.0 Safari/537.36"

}

json_url = "http://48.push2.eastmoney.com/api/qt/clist/get?cb=jQuery112402508937289440778_1658838703304&pn=1&pz=20&po=1&np=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&wbp2u=|0|0|0|web&fid=f3&fs=m:0+t:6,m:0+t:80,m:1+t:2,m:1+t:23,m:0+t:81+s:2048&fields=f1,f2,f3,f4,f5,f6,f7,f8,f9,f10,f12,f13,f14,f15,f16,f17,f18,f20,f21,f23,f24,f25,f22,f11,f62,f128,f136,f115,f152&_=1658838703305"

res = requests.get(json_url, headers=headers)接下来通过观察返回的数据,可以得出数据并不是标准的 json 数据,需要转换一下数据格式,于是先进行 json 化:

result = res.text.split("jQuery112402508937289440778_1658838703304")[1].split("(")[1].split(");")[0]

result_json = json.loads(result)

print(result_json)这样数据就整齐多了,所有的股票数据都在data.diff下面,我们只需要编写解析函数即可

返回各参数对应含义。

先准备一个存储函数:

def save_data(data):

# "股票代码,股票名称,最新价,涨跌幅,涨跌额,成交量(手),成交额,振幅,换手率,市盈率,量比,最高,最低,今开,昨收,市净率"

for i in data:

Code = i["f12"]

Name = i["f14"]

Close = i['f2'] if i["f2"] != "-" else None

ChangePercent = i["f3"] if i["f3"] != "-" else None

Change = i['f4'] if i["f4"] != "-" else None

Volume = i['f5'] if i["f5"] != "-" else None

Amount = i['f6'] if i["f6"] != "-" else None

Amplitude = i['f7'] if i["f7"] != "-" else None

TurnoverRate = i['f8'] if i["f8"] != "-" else None

PERation = i['f9'] if i["f9"] != "-" else None

VolumeRate = i['f10'] if i["f10"] != "-" else None

Hign = i['f15'] if i["f15"] != "-" else None

Low = i['f16'] if i["f16"] != "-" else None

Open = i['f17'] if i["f17"] != "-" else None

PreviousClose = i['f18'] if i["f18"] != "-" else None

PB = i['f23'] if i["f23"] != "-" else None然后再把前面处理好的 json 数据传入

stock_data = result_json['data']['diff']

save_data(stock_data)这样我们就得到了第一页的股票数据,最后我们只需要循环抓取所有网页即可。

代码如下:

def craw_data():

stock_data = []

json_url1= "http://72.push2.eastmoney.com/api/qt/clist/get?cb=jQuery112406903204148811937_1678420818118&pn=%s&pz=20&po=1&np=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&wbp2u=|0|0|0|web&fid=f3&fs=m:0+t:6,m:0+t:80,m:1+t:2,m:1+t:23,m:0+t:81+s:2048&fields=f1,f2,f3,f4,f5,f6,f7,f8,f9,f10,f12,f13,f14,f15,f16,f17,f18,f20,f21,f23,f24,f25,f22,f11,f62,f128,f136,f115,f152&_=1678420818127" % str(

1)

res1 = requests.get(json_url1, headers=headers)

result1 = res1.text.split("jQuery112406903204148811937_1678420818118")[1].split("(")[1].split(");")[0]

result_json1 = json.loads(result1)

total_value = result_json1['data']['total']

maxn = math.ceil(total_value/20)

for i in range(1, maxn + 1):

json_url = "http://72.push2.eastmoney.com/api/qt/clist/get?cb=jQuery112406903204148811937_1678420818118&pn=%s&pz=20&po=1&np=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&wbp2u=|0|0|0|web&fid=f3&fs=m:0+t:6,m:0+t:80,m:1+t:2,m:1+t:23,m:0+t:81+s:2048&fields=f1,f2,f3,f4,f5,f6,f7,f8,f9,f10,f12,f13,f14,f15,f16,f17,f18,f20,f21,f23,f24,f25,f22,f11,f62,f128,f136,f115,f152&_=1678420818127" % str(

i)

res = requests.get(json_url, headers=headers)

result = res.text.split("jQuery112406903204148811937_1678420818118")[1].split("(")[1].split(");")[0]

result_json = json.loads(result)

stock_data.extend(result_json['data']['diff'])

return stock_data最后,针对此块,全部python代码整合如下,在非节假日每天定时15:05分爬取,并存入Mysql数据库中:

import math

import requests

import json

import db

import time

import holidays

import datetime

headers = {

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/107.0.0.0 Safari/537.36"

}

def save_data(data):

# "股票代码,股票名称,最新价,涨跌幅,涨跌额,成交量(手),成交额,振幅,换手率,市盈率,量比,最高,最低,今开,昨收,市净率"

for i in data:

Code = i["f12"]

Name = i["f14"]

Close = i['f2'] if i["f2"] != "-" else None

ChangePercent = i["f3"] if i["f3"] != "-" else None

Change = i['f4'] if i["f4"] != "-" else None

Volume = i['f5'] if i["f5"] != "-" else None

Amount = i['f6'] if i["f6"] != "-" else None

Amplitude = i['f7'] if i["f7"] != "-" else None

TurnoverRate = i['f8'] if i["f8"] != "-" else None

PERation = i['f9'] if i["f9"] != "-" else None

VolumeRate = i['f10'] if i["f10"] != "-" else None

Hign = i['f15'] if i["f15"] != "-" else None

Low = i['f16'] if i["f16"] != "-" else None

Open = i['f17'] if i["f17"] != "-" else None

PreviousClose = i['f18'] if i["f18"] != "-" else None

PB = i['f23'] if i["f23"] != "-" else None

insert_sql = """

insert t_stock_code_price(code, name, close, change_percent, `change`, volume, amount, amplitude, turnover_rate, peration, volume_rate, hign, low, open, previous_close, pb, create_time)

values (%s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s)

"""

val = (Code, Name, Close, ChangePercent, Change, Volume, Amount, Amplitude,

TurnoverRate, PERation, VolumeRate, Hign, Low, Open, PreviousClose, PB, datetime.now().strftime('%F'))

db.insert_or_update_data(insert_sql, val)

def craw_data():

stock_data = []

json_url1= "http://72.push2.eastmoney.com/api/qt/clist/get?cb=jQuery112406903204148811937_1678420818118&pn=%s&pz=20&po=1&np=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&wbp2u=|0|0|0|web&fid=f3&fs=m:0+t:6,m:0+t:80,m:1+t:2,m:1+t:23,m:0+t:81+s:2048&fields=f1,f2,f3,f4,f5,f6,f7,f8,f9,f10,f12,f13,f14,f15,f16,f17,f18,f20,f21,f23,f24,f25,f22,f11,f62,f128,f136,f115,f152&_=1678420818127" % str(

1)

res1 = requests.get(json_url1, headers=headers)

result1 = res1.text.split("jQuery112406903204148811937_1678420818118")[1].split("(")[1].split(");")[0]

result_json1 = json.loads(result1)

total_value = result_json1['data']['total']

maxn = math.ceil(total_value/20)

for i in range(1, maxn + 1):

json_url = "http://72.push2.eastmoney.com/api/qt/clist/get?cb=jQuery112406903204148811937_1678420818118&pn=%s&pz=20&po=1&np=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&wbp2u=|0|0|0|web&fid=f3&fs=m:0+t:6,m:0+t:80,m:1+t:2,m:1+t:23,m:0+t:81+s:2048&fields=f1,f2,f3,f4,f5,f6,f7,f8,f9,f10,f12,f13,f14,f15,f16,f17,f18,f20,f21,f23,f24,f25,f22,f11,f62,f128,f136,f115,f152&_=1678420818127" % str(

i)

res = requests.get(json_url, headers=headers)

result = res.text.split("jQuery112406903204148811937_1678420818118")[1].split("(")[1].split(");")[0]

result_json = json.loads(result)

stock_data.extend(result_json['data']['diff'])

return stock_data

def craw():

# print("运行爬取任务...")

stock_data = craw_data()

save_data(stock_data)

def is_business_day(date):

cn_holidays = holidays.CountryHoliday('CN')

return date.weekday() < 5 and date not in cn_holidays

def schedule_task():

while True:

now = datetime.datetime.now()

target_time = now.replace(hour=15, minute=10, second=0, microsecond=0)

if now >= target_time and is_business_day(now.date()):

craw()

# 计算下一个工作日的日期

next_day = now + datetime.timedelta(days=1)

while not is_business_day(next_day.date()):

next_day += datetime.timedelta(days=1)

# 计算下一个工作日的目标时间

next_target_time = next_day.replace(hour=15, minute=5, second=0, microsecond=0)

# 计算下一个任务运行的等待时间

sleep_time = (next_target_time - datetime.datetime.now()).total_seconds()

# 休眠等待下一个任务运行时间

time.sleep(sleep_time)

if __name__ == "__main__":

schedule_task()其中db.py及mysql相关配置如下:

import pymysql

def get_conn():

return pymysql.connect(

host='localhost',

user='root',

password='1234',

database='test',

port=3306

)

def query_data(sql):

conn = get_conn()

try:

cursor = conn.cursor()

cursor.execute(sql)

return cursor.fetchall()

finally:

conn.close()

def insert_or_update_data(sql, val):

conn = get_conn()

try:

cursor = conn.cursor()

cursor.execute(sql,val)

conn.commit()

finally:

conn.close()mysql相关表结构如下:文章来源:https://www.toymoban.com/news/detail-794558.html

SET NAMES utf8mb4;

SET FOREIGN_KEY_CHECKS = 0;

-- ----------------------------

-- Table structure for t_stock_code_price

-- ----------------------------

DROP TABLE IF EXISTS `t_stock_code_price`;

CREATE TABLE `t_stock_code_price` (

`id` bigint NOT NULL AUTO_INCREMENT,

`code` varchar(64) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NOT NULL COMMENT '股票代码',

`name` varchar(64) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NOT NULL COMMENT '股票名称',

`close` double DEFAULT NULL COMMENT '最新价',

`change_percent` double DEFAULT NULL COMMENT '涨跌幅',

`change` double DEFAULT NULL COMMENT '涨跌额',

`volume` double DEFAULT NULL COMMENT '成交量(手)',

`amount` double DEFAULT NULL COMMENT '成交额',

`amplitude` double DEFAULT NULL COMMENT '振幅',

`turnover_rate` double DEFAULT NULL COMMENT '换手率',

`peration` double DEFAULT NULL COMMENT '市盈率',

`volume_rate` double DEFAULT NULL COMMENT '量比',

`hign` double DEFAULT NULL COMMENT '最高',

`low` double DEFAULT NULL COMMENT '最低',

`open` double DEFAULT NULL COMMENT '今开',

`previous_close` double DEFAULT NULL COMMENT '昨收',

`pb` double DEFAULT NULL COMMENT '市净率',

`create_time` varchar(64) NOT NULL COMMENT '写入时间',

PRIMARY KEY (`id`)

) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_0900_ai_ci;

SET FOREIGN_KEY_CHECKS = 1;以上即是全部内容。文章来源地址https://www.toymoban.com/news/detail-794558.html

到了这里,关于Python定时爬取东方财富行情数据的文章就介绍完了。如果您还想了解更多内容,请在右上角搜索TOY模板网以前的文章或继续浏览下面的相关文章,希望大家以后多多支持TOY模板网!