1. 需要的类库

import requests

import pandas as pd

2. 分析

通过分析,本站的热榜数据可以直接通过接口拿到,故不需要解析标签,请求热榜数据接口

url = "https://xxxt/xxxx/web/blog/hot-rank?page=0&pageSize=25&type=" #本站地址

直接请求解析会有点问题,数据无法解析,加上请求头

headers = {

"Accept": "*/*",

"Accept-Encoding": "gzip, deflate, br",

"Accept-Language": "zh-CN,zh;q=0.9",

"Sec-Ch-Ua": "\"Chromium\";v=\"116\", \"Not)A;Brand\";v=\"24\", \"Google Chrome\";v=\"116\"",

"Sec-Ch-Ua-Mobile": "?1",

"Sec-Ch-Ua-Platform": "\"Android\"",

"Sec-Fetch-Dest": "empty",

"Sec-Fetch-Mode": "cors",

"Sec-Fetch-Site": "same-site",

"User-Agent": "Mozilla/5.0 (Linux; Android 6.0; Nexus 5 Build/MRA58N) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/116.0.0.0 Mobile Safari/537.36"

}

完整请求代码文章来源:https://www.toymoban.com/news/detail-795050.html

# 发送HTTP请求

r = requests.get(url, headers=headers)

# 解析JSON数据

data = r.json()

# 提取所需信息

articles = []

for item in data["data"]:

title = item["articleTitle"]

link = item["articleDetailUrl"]

rank = item["hotRankScore"]

likes = item["favorCount"]

comments = item["commentCount"]

views = item["viewCount"]

author = item["nickName"]

time = item["period"]

articles.append({

"标题": title,

"链接": link,

"热度分": rank,

"点赞数": likes,

"评论数": comments,

"查看数": views,

"作者": author,

"时间": time

})

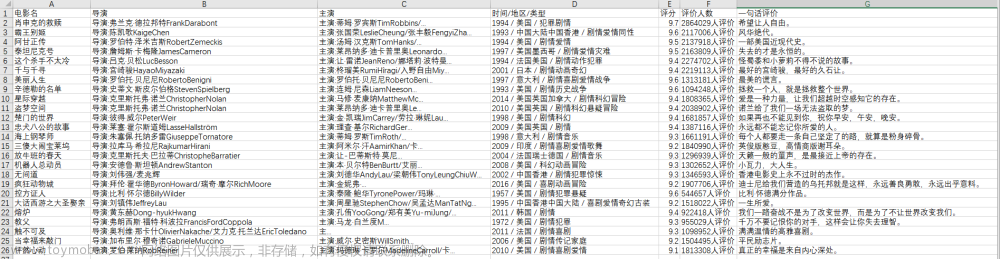

3.导出Excel

# 创建DataFrame

df = pd.DataFrame(articles)

# 将DataFrame保存为Excel文件

df.to_excel("csdn_top.xlsx", index=False)

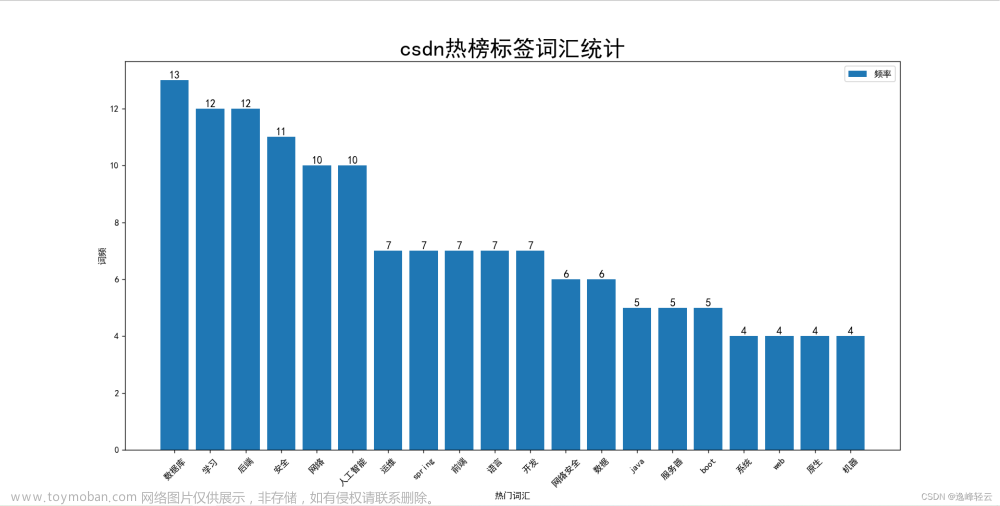

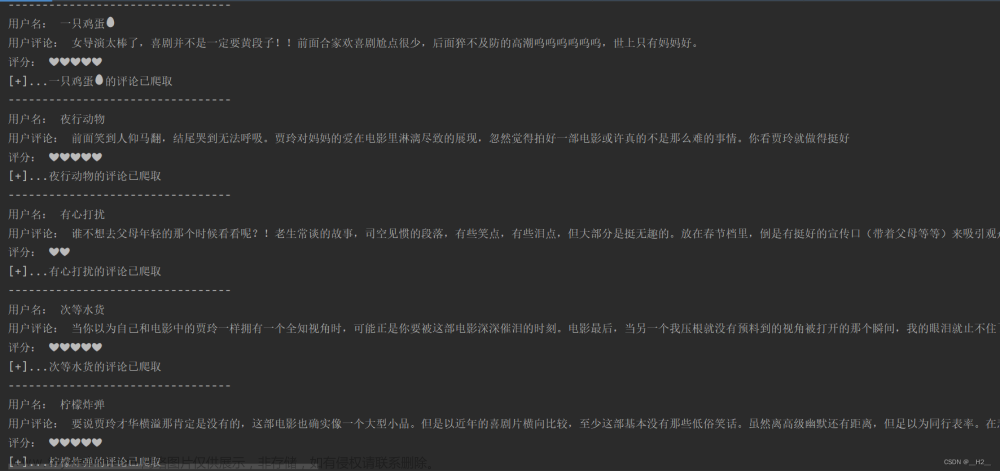

4. 成果展示

文章来源地址https://www.toymoban.com/news/detail-795050.html

文章来源地址https://www.toymoban.com/news/detail-795050.html

到了这里,关于python爬虫实战(10)--获取本站热榜的文章就介绍完了。如果您还想了解更多内容,请在右上角搜索TOY模板网以前的文章或继续浏览下面的相关文章,希望大家以后多多支持TOY模板网!