目录

一 装备三台机器linux(centos)

二 准备前置环境并安装kubernetes

1 三台机器都要做如下操作

1.1 关闭防火墙:

1.2 关闭 selinux:

1.3 关闭 swap

1.4 添加主机名与 IP 对应关系

1.5 date 查看时间 (可选)

1.6 卸载系统之前的 docke 命令自行百度不做说明

1.7 安装 Docker-CE

1. 7.1 装必须的依赖

1.7.2 设置 docker repo 的 yum 位置

1.7.3 安装 docker,以及 docker-cli

1.7.4 配置 docker 加速器(自己去阿里云镜像加速器粘贴 下面是例子 不一定好用)

1.7.5 动 docker & 设置 docker 开机自启

1.7.6 添加阿里云 yum

1.8 安装 kubeadm,kubelet 和 kubectl

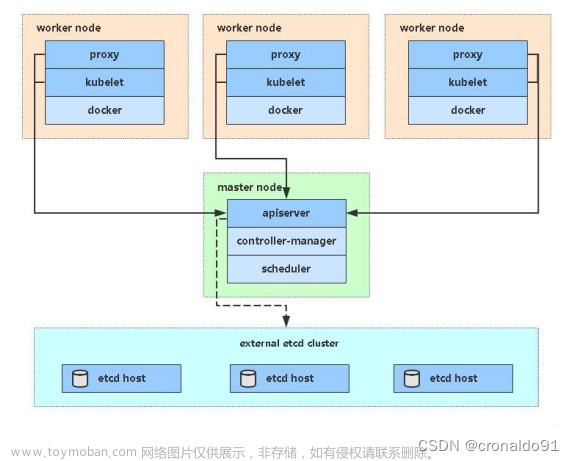

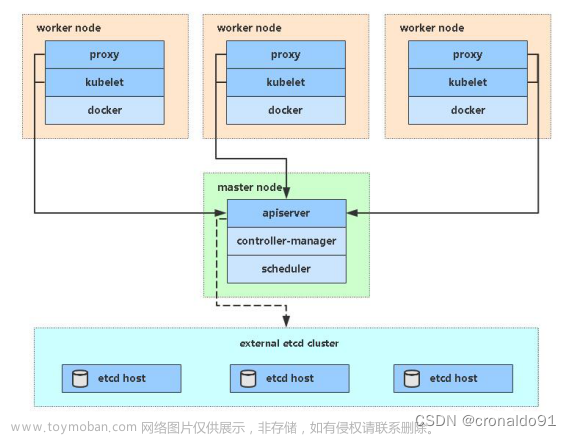

2 部署 k8s-master

2.1 master 机器上执行

2.2 等待上诉命令执行完成

2.3 master执行

2.4 在master节点上安装网络

2.5 在其他的分支节点执行(k8s-node1 k8s-node2)

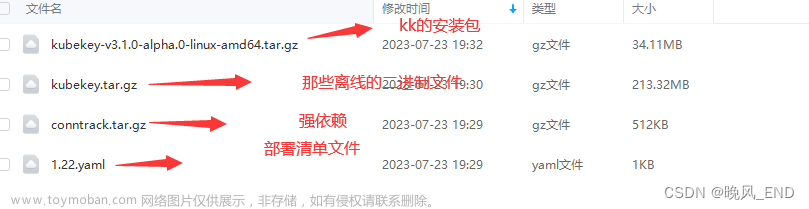

三 安装 kubesphere

1.1 创建StorageClass存储

1.1.1 创建kubesphere-system命名空间

1.1.2 创建rbac授权

1.1.3 创建storageclass的deployment

1.1.4 创建StorageClass

1.1.5 查看StorageClass

1.1.6 设置默认的StorageClass

1.2 部署最小化Kubersphere

1.2.1 下载kubersphere的配控文件

1.2.2 执行如下命令启动

1.2.3 查看安装日志

完结撒花

I. Prepare three Linux (CentOS) machines

ip hostname

192.168.49.101 k8s-master

192.168.49.102 k8s-node1

192.168.49.103 k8s-node2
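The address plan above is what later goes into /etc/hosts on every machine. As a minimal sketch (the helper name add_host_entry is made up for illustration, not part of any tool), the entries can be appended so that running it twice does not create duplicates:

```shell
# add_host_entry FILE IP NAME (hypothetical helper):
# append "IP NAME" to FILE unless NAME already appears there as a whole word.
add_host_entry() {
  if ! grep -qwF -- "$3" "$1" 2>/dev/null; then
    printf '%s %s\n' "$2" "$3" >> "$1"
  fi
}

# Usage, as root on each machine:
#   add_host_entry /etc/hosts 192.168.49.101 k8s-master
#   add_host_entry /etc/hosts 192.168.49.102 k8s-node1
#   add_host_entry /etc/hosts 192.168.49.103 k8s-node2
```

The whole-word match keeps k8s-node1 from being mistaken for a longer name such as k8s-node10.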

II. Prepare the prerequisites and install Kubernetes

1 Steps to run on all three machines

1.1 Disable the firewall:

systemctl stop firewalld

systemctl disable firewalld

1.2 Disable SELinux:

sed -i 's/enforcing/disabled/' /etc/selinux/config

setenforce 0

1.3 Disable swap

swapoff -a # temporary, lasts until the next reboot

sed -ri 's/.*swap.*/#&/' /etc/fstab # permanent
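The sed command comments out every fstab line that mentions swap. A small sketch of the same substitution, written to preview the result on stdout rather than edit in place, and to skip lines that are already commented so it can be re-run safely (the function name preview_swap_disable is an assumption for illustration):

```shell
# preview_swap_disable FILE (hypothetical helper):
# print FILE with every uncommented line containing "swap" prefixed by '#'.
# Nothing is modified on disk.
preview_swap_disable() {
  sed -E '/^[[:space:]]*#/!s/.*swap.*/#&/' "$1"
}

# Usage: preview_swap_disable /etc/fstab
```

Previewing first is a cheap way to confirm that only the swap entry is affected before running the in-place version.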

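free -g # verify: the Swap row must show 0

1.4 Map hostnames to IP addresses

vi /etc/hosts

192.168.49.101 k8s-master

192.168.49.102 k8s-node1

192.168.49.103 k8s-node2

1.5 Check the time with date (optional)

yum install -y ntpdate

ntpdate time.windows.com # sync to the current time

1.6 Uninstall any previously installed Docker (commands not covered here; look them up)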

1.7 Install Docker CE

1.7.1 Install the required dependencies

sudo yum install -y yum-utils device-mapper-persistent-data lvm2

1.7.2 Add the Docker yum repository

sudo yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

1.7.3 Install docker and docker-cli

sudo yum install -y docker-ce docker-ce-cli containerd.io

1.7.4 Configure a Docker registry mirror (copy your own address from the Alibaba Cloud image accelerator; the one below is only an example and may not work)

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://82m9ar63.mirror.aliyuncs.com"]
}
EOF

sudo systemctl daemon-reload

sudo systemctl restart docker

1.7.5 Start Docker and enable it at boot

systemctl enable docker

1.7.6 Add the Alibaba Cloud Kubernetes yum repository

cat > /etc/yum.repos.d/kubernetes.repo << EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

1.8 Install kubeadm, kubelet, and kubectl

yum list|grep kube

yum install -y kubelet-1.19.0 kubeadm-1.19.0 kubectl-1.19.0

systemctl enable kubelet

systemctl start kubelet

2 Deploy k8s-master

2.1 Run on the master machine

Change --apiserver-advertise-address to the IP of your own master:

kubeadm init --kubernetes-version=v1.19.0 \
--apiserver-advertise-address=192.168.49.101 \
--image-repository registry.aliyuncs.com/google_containers \
--service-cidr=10.1.0.0/16 \
--pod-network-cidr=10.244.0.0/16

2.2 Wait for the command above to finish

When it finishes, it prints a log like the following:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.49.101:6443 --token 8alqa1.28d3n8pjzxlmex1q --discovery-token-ca-cert-hash sha256:a3883e22dbdbca2ce3c1de98b5e58379e38d3750abfb7d82bd014526b834061e

2.3 Run on the master

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

2.4 Install the pod network on the master node

The kube-flannel.yml file has the following content:

---
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
  - configMap
  - secret
  - emptyDir
  - hostPath
  allowedHostPaths:
  - pathPrefix: "/etc/cni/net.d"
  - pathPrefix: "/etc/kube-flannel"
  - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  allowedCapabilities: ['NET_ADMIN', 'NET_RAW']
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
  - min: 0
    max: 65535
  seLinux:
    rule: 'RunAsAny'
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
- apiGroups: ['extensions']
  resources: ['podsecuritypolicies']
  verbs: ['use']
  resourceNames: ['psp.flannel.unprivileged']
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni-plugin
        image: rancher/mirrored-flannelcni-flannel-cni-plugin:v1.1.0
        command:
        - cp
        args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        volumeMounts:
        - name: cni-plugin
          mountPath: /opt/cni/bin
      - name: install-cni
        image: rancher/mirrored-flannelcni-flannel:v0.18.1
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: rancher/mirrored-flannelcni-flannel:v0.18.1
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: EVENT_QUEUE_DEPTH
          value: "5000"
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
        - name: xtables-lock
          mountPath: /run/xtables.lock
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni-plugin
        hostPath:
          path: /opt/cni/bin
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
      - name: xtables-lock
        hostPath:
          path: /run/xtables.lock
          type: FileOrCreate

Create the file with vim and paste in the content above:

vim kube-flannel.yml
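The kubeadm init command in 2.1 was given --pod-network-cidr=10.244.0.0/16, and the net-conf.json section of the manifest declares the same Network. If you change one, the other should follow. A minimal sketch for comparing the two before applying the file (the function names flannel_network and cidr_matches are made up for illustration):

```shell
# flannel_network FILE (hypothetical helper):
# print the "Network" value found in the manifest's net-conf.json section.
flannel_network() {
  sed -n 's/.*"Network"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$1"
}

# cidr_matches FILE CIDR (hypothetical helper):
# succeed only when the manifest's Network equals CIDR.
cidr_matches() {
  [ "$(flannel_network "$1")" = "$2" ]
}

# Usage:
#   cidr_matches kube-flannel.yml 10.244.0.0/16 && echo "CIDR matches"
```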

Then run on the master:

kubectl apply -f kube-flannel.yml

Check progress with kubectl get pods --all-namespaces. Every pod should reach the Running state:

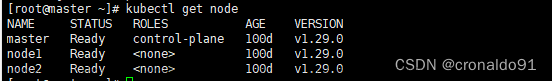
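Rather than scanning the list by eye, the output can be filtered for pods that are not yet up. A rough sketch, assuming the default column order of kubectl get pods --all-namespaces --no-headers (NAMESPACE, NAME, READY, STATUS, ...); the function name not_running_pods is hypothetical:

```shell
# not_running_pods (hypothetical helper):
# reads `kubectl get pods --all-namespaces --no-headers` output on stdin and
# prints namespace/name for each pod whose STATUS is neither Running nor Completed.
not_running_pods() {
  awk '$4 != "Running" && $4 != "Completed" { print $1 "/" $2 }'
}

# Usage:
#   kubectl get pods --all-namespaces --no-headers | not_running_pods
```

Empty output means every pod is Running (or has completed).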

Check the node status with kubectl get nodes.

2.5 Run on the worker nodes (k8s-node1, k8s-node2)

kubeadm join 192.168.49.101:6443 --token 8alqa1.28d3n8pjzxlmex1q --discovery-token-ca-cert-hash sha256:a3883e22dbdbca2ce3c1de98b5e58379e38d3750abfb7d82bd014526b834061e
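The token and hash here come from your own kubeadm init output, so copy that line rather than the one shown. Bootstrap tokens have the form of 6 characters, a dot, then 16 characters, and they expire (24 hours by default); running kubeadm token create --print-join-command on the master prints a fresh join command. A small sketch for pulling the token out of a saved join line and checking its shape (the function names join_token and valid_token are assumptions for illustration):

```shell
# join_token (hypothetical helper):
# reads a kubeadm join command on stdin and prints the value after --token.
join_token() {
  sed -n 's/.*--token[ =]\([^ ]*\).*/\1/p'
}

# valid_token TOKEN (hypothetical helper):
# succeed if TOKEN looks like a bootstrap token: [a-z0-9]{6}.[a-z0-9]{16}
valid_token() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# Usage:
#   valid_token "$(join_token < join-command.txt)" || echo "token looks malformed"
```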

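Run the command above on each worker node and wait for all machines to join the master.

Then check the status of all machines with kubectl get nodes on the master. The join succeeded when every node is listed as Ready.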
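The same check can be scripted. A minimal sketch, assuming the default column order of kubectl get nodes --no-headers (NAME, STATUS, ...); the function name not_ready_nodes is hypothetical:

```shell
# not_ready_nodes (hypothetical helper):
# reads `kubectl get nodes --no-headers` output on stdin and prints the name of
# every node whose STATUS is not exactly "Ready" (so a cordoned node reported as
# "Ready,SchedulingDisabled" is listed too).
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Usage:
#   kubectl get nodes --no-headers | not_ready_nodes
```

Nodes usually stay NotReady for a short while after joining, until the flannel pod on them is running.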

If the wait at this point is too long, or the kubeadm init in 2.1 failed, reinstall Kubernetes and try again.

Commands to uninstall Kubernetes:

systemctl stop kubelet

systemctl stop etcd

systemctl stop docker

kubeadm reset -f

modprobe -r ipip

lsmod

rm -rf ~/.kube/

rm -rf /etc/kubernetes/

rm -rf /etc/systemd/system/kubelet.service.d

rm -rf /etc/systemd/system/kubelet.service

rm -rf /usr/bin/kube*

rm -rf /etc/cni

rm -rf /opt/cni

rm -rf /var/lib/etcd

rm -rf /var/etcd

yum -y remove kube*

yum clean all

yum -y update

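yum makecache

Run these several times to make sure everything is removed. If the master cannot be cleaned up, reboot the machine and try again.

Once the cleanup succeeds, repeat steps 1.8 to 2.5.

At this point Kubernetes is installed. You can create a pod and a service to verify it; examples are easy to find online.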

III. Install KubeSphere

1.1 Create StorageClass storage

1.1.1 Create the kubesphere-system namespace

vim ks-namespace.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: kubesphere-system
  labels:
    name: kubesphere-system

kubectl apply -f ks-namespace.yaml

1.1.2 Create the RBAC authorization

vim sc-rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  namespace: kubesphere-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: nfs-client-provisioner-cr
rules:
- apiGroups: [""]
  resources: ["persistentvolumes"]
  verbs: ["get","list","watch","create","delete"]
- apiGroups: [""]
  resources: ["persistentvolumeclaims"]
  verbs: ["get","list","watch","update"]
- apiGroups: ["storage.k8s.io"]
  resources: ["storageclasses"]
  verbs: ["get","list","watch"]
- apiGroups: [""]
  resources: ["events"]
  verbs: ["list","watch","create","update","patch"]
- apiGroups: [""]
  resources: ["endpoints"]
  verbs: ["create","delete","get","list","watch","patch","update"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: nfs-client-provisioner-crb
subjects:
- kind: ServiceAccount
  name: nfs-client-provisioner
  namespace: kubesphere-system
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-cr
  apiGroup: rbac.authorization.k8s.io

kubectl apply -f sc-rbac.yaml

1.1.3 Create the Deployment for the StorageClass provisioner

Set NFS_SERVER to the IP address of the server that runs the NFS service.

Set NFS_PATH to the export directory created when the NFS service was installed.

The nfs entry under volumes must point at the same NFS server and path.

vim sc-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  namespace: kubesphere-system
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nfs-client-provisioner
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
      - name: nfs-client-provisioner
        image: registry.cn-beijing.aliyuncs.com/xngczl/nfs-subdir-external-provisione:v4.0.0
        env:
        - name: PROVISIONER_NAME
          value: nfs-storage-class
        - name: NFS_SERVER
          value: 192.168.16.40
        - name: NFS_PATH
          value: /root/data/nfs/storageclass
        volumeMounts:
        - name: nfs-client-root
          mountPath: /persistentvolumes
      volumes:
      - name: nfs-client-root
        nfs:
          server: 192.168.16.40
          path: /root/data/nfs/storageclass

kubectl apply -f sc-deployment.yaml

1.1.4 Create the StorageClass

vim sc-resource.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-storageclass
provisioner: nfs-storage-class
allowVolumeExpansion: true
reclaimPolicy: Retain

kubectl apply -f sc-resource.yaml
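The StorageClass only works if its provisioner field equals the PROVISIONER_NAME value set in sc-deployment.yaml (both are nfs-storage-class here); otherwise claims stay Pending. A rough sketch for comparing the two files, assuming the value: line directly follows the PROVISIONER_NAME line as in the manifest above (the function names are made up for illustration):

```shell
# deployment_provisioner FILE (hypothetical helper):
# print the value on the line right after "name: PROVISIONER_NAME".
deployment_provisioner() {
  awk '/name:[[:space:]]*PROVISIONER_NAME/ {
         getline
         sub(/^[[:space:]]*value:[[:space:]]*/, "")
         print
         exit
       }' "$1"
}

# storageclass_provisioner FILE (hypothetical helper):
# print the top-level provisioner field of the StorageClass manifest.
storageclass_provisioner() {
  sed -n 's/^provisioner:[[:space:]]*//p' "$1"
}

# Usage:
#   [ "$(deployment_provisioner sc-deployment.yaml)" = "$(storageclass_provisioner sc-resource.yaml)" ] \
#     && echo "provisioner names match"
```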

1.1.5 View the StorageClass

[root@master kubersphere]# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-storageclass nfs-storage-class Retain Immediate true 33s

sc-nexus3 kubernetes.io/no-provisioner Delete WaitForFirstConsumer false 88d

1.1.6 Set the default StorageClass

kubectl patch storageclass nfs-storageclass -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

List the StorageClasses again; nfs-storageclass is now marked as the default:

[root@master kubersphere]# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-storageclass (default) nfs-storage-class Retain Immediate true 2m6s

sc-nexus3 kubernetes.io/no-provisioner Delete WaitForFirstConsumer false 88d
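The default can also be picked out of that listing programmatically. A minimal sketch that relies on kubectl printing "(default)" as a separate word after the name, as in the output above (the function name default_storageclass is hypothetical):

```shell
# default_storageclass (hypothetical helper):
# reads `kubectl get sc` output on stdin and prints the name of each
# StorageClass marked "(default)".
default_storageclass() {
  awk '$2 == "(default)" { print $1 }'
}

# Usage:
#   kubectl get sc | default_storageclass
```

If this prints more than one name, more than one class carries the default annotation, which is worth fixing before installing KubeSphere.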

[root@master kubersphere]#

1.2 Deploy a minimal KubeSphere

1.2.1 Download the KubeSphere configuration files

curl -L -O https://github.com/kubesphere/ks-installer/releases/download/v3.3.1/cluster-configuration.yaml

curl -L -O https://github.com/kubesphere/ks-installer/releases/download/v3.3.1/kubesphere-installer.yaml

The kubesphere-installer.yaml file is shown below. Note that it needs no changes.

---
apiVersion: apiextensions.k8s.io/v1
kind: CustomResourceDefinition
metadata:
  name: clusterconfigurations.installer.kubesphere.io
spec:
  group: installer.kubesphere.io
  versions:
    - name: v1alpha1
      served: true
      storage: true
      schema:
        openAPIV3Schema:
          type: object
          properties:
            spec:
              type: object
              x-kubernetes-preserve-unknown-fields: true
            status:
              type: object
              x-kubernetes-preserve-unknown-fields: true
  scope: Namespaced
  names:
    plural: clusterconfigurations
    singular: clusterconfiguration
    kind: ClusterConfiguration
    shortNames:
      - cc
---
apiVersion: v1
kind: Namespace
metadata:
  name: kubesphere-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ks-installer
  namespace: kubesphere-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: ks-installer
rules:
- apiGroups:
  - ""
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - apps
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - extensions
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - batch
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - rbac.authorization.k8s.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - apiregistration.k8s.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - apiextensions.k8s.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - tenant.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - certificates.k8s.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - devops.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - monitoring.coreos.com
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - logging.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - jaegertracing.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - storage.k8s.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - admissionregistration.k8s.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - policy
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - autoscaling
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - networking.istio.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - config.istio.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - iam.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - notification.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - auditing.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - events.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - core.kubefed.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - installer.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - storage.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - security.istio.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - monitoring.kiali.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - kiali.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - networking.k8s.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - edgeruntime.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - types.kubefed.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - monitoring.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - application.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: ks-installer
subjects:
- kind: ServiceAccount
  name: ks-installer
  namespace: kubesphere-system
roleRef:
  kind: ClusterRole
  name: ks-installer
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ks-installer
  namespace: kubesphere-system
  labels:
    app: ks-installer
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ks-installer
  template:
    metadata:
      labels:
        app: ks-installer
    spec:
      serviceAccountName: ks-installer
      containers:
      - name: installer
        image: kubesphere/ks-installer:v3.3.1
        imagePullPolicy: "Always"
        resources:
          limits:
            cpu: "1"
            memory: 1Gi
          requests:
            cpu: 20m
            memory: 100Mi
        volumeMounts:
        - mountPath: /etc/localtime
          name: host-time
          readOnly: true
      volumes:
      - hostPath:
          path: /etc/localtime
          type: ""
        name: host-time

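The cluster-configuration.yaml file is shown below. It likewise needs no further changes; note that persistence.storageClass in this listing is set to the nfs-storageclass created in 1.1.4.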

---
apiVersion: installer.kubesphere.io/v1alpha1
kind: ClusterConfiguration
metadata:
  name: ks-installer
  namespace: kubesphere-system
  labels:
    version: v3.3.1
spec:
  persistence:
    storageClass: "nfs-storageclass" # If there is no default StorageClass in your cluster, you need to specify an existing StorageClass here.
  authentication:
    # adminPassword: "" # Custom password of the admin user. If the parameter exists but the value is empty, a random password is generated. If the parameter does not exist, P@88w0rd is used.
    jwtSecret: "" # Keep the jwtSecret consistent with the Host Cluster. Retrieve the jwtSecret by executing "kubectl -n kubesphere-system get cm kubesphere-config -o yaml | grep -v "apiVersion" | grep jwtSecret" on the Host Cluster.
  local_registry: "" # Add your private registry address if it is needed.
  # dev_tag: "" # Add your kubesphere image tag you want to install, by default it's same as ks-installer release version.
  etcd:
    monitoring: false # Enable or disable etcd monitoring dashboard installation. You have to create a Secret for etcd before you enable it.
    endpointIps: localhost # etcd cluster EndpointIps. It can be a bunch of IPs here.
    port: 2379 # etcd port.
    tlsEnable: true
  common:
    core:
      console:
        enableMultiLogin: true # Enable or disable simultaneous logins. It allows different users to log in with the same account at the same time.
        port: 30880
        type: NodePort
    # apiserver: # Enlarge the apiserver and controller manager's resource requests and limits for the large cluster
    #   resources: {}
    # controllerManager:
    #   resources: {}
    redis:
      enabled: false
      enableHA: false
      volumeSize: 2Gi # Redis PVC size.
    openldap:
      enabled: false
      volumeSize: 2Gi # openldap PVC size.
    minio:
      volumeSize: 20Gi # Minio PVC size.
    monitoring:
      # type: external # Whether to specify the external prometheus stack, and need to modify the endpoint at the next line.
      endpoint: http://prometheus-operated.kubesphere-monitoring-system.svc:9090 # Prometheus endpoint to get metrics data.
      GPUMonitoring: # Enable or disable the GPU-related metrics. If you enable this switch but have no GPU resources, Kubesphere will set it to zero.
        enabled: false
    gpu: # Install GPUKinds. The default GPU kind is nvidia.com/gpu. Other GPU kinds can be added here according to your needs.
      kinds:
      - resourceName: "nvidia.com/gpu"
        resourceType: "GPU"
        default: true
    es: # Storage backend for logging, events and auditing.
      # master:
      #   volumeSize: 4Gi # The volume size of Elasticsearch master nodes.
      #   replicas: 1 # The total number of master nodes. Even numbers are not allowed.
      #   resources: {}
      # data:
      #   volumeSize: 20Gi # The volume size of Elasticsearch data nodes.
      #   replicas: 1 # The total number of data nodes.
      #   resources: {}
      logMaxAge: 7 # Log retention time in built-in Elasticsearch. It is 7 days by default.
      elkPrefix: logstash # The string making up index names. The index name will be formatted as ks-<elk_prefix>-log.
      basicAuth:
        enabled: false
        username: ""
        password: ""
      externalElasticsearchHost: ""
      externalElasticsearchPort: ""
  alerting: # (CPU: 0.1 Core, Memory: 100 MiB) It enables users to customize alerting policies to send messages to receivers in time with different time intervals and alerting levels to choose from.
    enabled: false # Enable or disable the KubeSphere Alerting System.
    # thanosruler:
    #   replicas: 1
    #   resources: {}
  auditing: # Provide a security-relevant chronological set of records,recording the sequence of activities happening on the platform, initiated by different tenants.
    enabled: false # Enable or disable the KubeSphere Auditing Log System.
    # operator:
    #   resources: {}
    # webhook:
    #   resources: {}
  devops: # (CPU: 0.47 Core, Memory: 8.6 G) Provide an out-of-the-box CI/CD system based on Jenkins, and automated workflow tools including Source-to-Image & Binary-to-Image.
    enabled: false # Enable or disable the KubeSphere DevOps System.
    # resources: {}
    jenkinsMemoryLim: 8Gi # Jenkins memory limit.
    jenkinsMemoryReq: 4Gi # Jenkins memory request.
    jenkinsVolumeSize: 8Gi # Jenkins volume size.
  events: # Provide a graphical web console for Kubernetes Events exporting, filtering and alerting in multi-tenant Kubernetes clusters.
    enabled: false # Enable or disable the KubeSphere Events System.
    # operator:
    #   resources: {}
    # exporter:
    #   resources: {}
    # ruler:
    #   enabled: true
    #   replicas: 2
    #   resources: {}
  logging: # (CPU: 57 m, Memory: 2.76 G) Flexible logging functions are provided for log query, collection and management in a unified console. Additional log collectors can be added, such as Elasticsearch, Kafka and Fluentd.
    enabled: false # Enable or disable the KubeSphere Logging System.
    logsidecar:
      enabled: true
      replicas: 2
      # resources: {}
  metrics_server: # (CPU: 56 m, Memory: 44.35 MiB) It enables HPA (Horizontal Pod Autoscaler).
    enabled: false # Enable or disable metrics-server.
  monitoring:
    storageClass: "" # If there is an independent StorageClass you need for Prometheus, you can specify it here. The default StorageClass is used by default.
    node_exporter:
      port: 9100
      # resources: {}
    # kube_rbac_proxy:
    #   resources: {}
    # kube_state_metrics:
    #   resources: {}
    # prometheus:
    #   replicas: 1 # Prometheus replicas are responsible for monitoring different segments of data source and providing high availability.
    #   volumeSize: 20Gi # Prometheus PVC size.
    #   resources: {}
    #   operator:
    #     resources: {}
    # alertmanager:
    #   replicas: 1 # AlertManager Replicas.
    #   resources: {}
    # notification_manager:
    #   resources: {}
    #   operator:
    #     resources: {}
    #   proxy:
    #     resources: {}
    gpu: # GPU monitoring-related plug-in installation.
      nvidia_dcgm_exporter: # Ensure that gpu resources on your hosts can be used normally, otherwise this plug-in will not work properly.
        enabled: false # Check whether the labels on the GPU hosts contain "nvidia.com/gpu.present=true" to ensure that the DCGM pod is scheduled to these nodes.
        # resources: {}
  multicluster:
    clusterRole: none # host | member | none # You can install a solo cluster, or specify it as the Host or Member Cluster.
  network:
    networkpolicy: # Network policies allow network isolation within the same cluster, which means firewalls can be set up between certain instances (Pods).
      # Make sure that the CNI network plugin used by the cluster supports NetworkPolicy. There are a number of CNI network plugins that support NetworkPolicy, including Calico, Cilium, Kube-router, Romana and Weave Net.
      enabled: false # Enable or disable network policies.
    ippool: # Use Pod IP Pools to manage the Pod network address space. Pods to be created can be assigned IP addresses from a Pod IP Pool.
      type: none # Specify "calico" for this field if Calico is used as your CNI plugin. "none" means that Pod IP Pools are disabled.
    topology: # Use Service Topology to view Service-to-Service communication based on Weave Scope.
      type: none # Specify "weave-scope" for this field to enable Service Topology. "none" means that Service Topology is disabled.
  openpitrix: # An App Store that is accessible to all platform tenants. You can use it to manage apps across their entire lifecycle.
    store:
      enabled: false # Enable or disable the KubeSphere App Store.
  servicemesh: # (0.3 Core, 300 MiB) Provide fine-grained traffic management, observability and tracing, and visualized traffic topology.
    enabled: false # Base component (pilot). Enable or disable KubeSphere Service Mesh (Istio-based).
    istio: # Customizing the istio installation configuration, refer to https://istio.io/latest/docs/setup/additional-setup/customize-installation/
      components:
        ingressGateways:
        - name: istio-ingressgateway
          enabled: false
        cni:
          enabled: false
  edgeruntime: # Add edge nodes to your cluster and deploy workloads on edge nodes.
    enabled: false
    kubeedge: # kubeedge configurations
      enabled: false
      cloudCore:
        cloudHub:
          advertiseAddress: # At least a public IP address or an IP address which can be accessed by edge nodes must be provided.
            - "" # Note that once KubeEdge is enabled, CloudCore will malfunction if the address is not provided.
        service:
          cloudhubNodePort: "30000"
          cloudhubQuicNodePort: "30001"
          cloudhubHttpsNodePort: "30002"
          cloudstreamNodePort: "30003"
          tunnelNodePort: "30004"
        # resources: {}
        # hostNetWork: false
      iptables-manager:
        enabled: true
        mode: "external"
        # resources: {}
      # edgeService:
      #   resources: {}
  gatekeeper: # Provide admission policy and rule management, A validating (mutating TBA) webhook that enforces CRD-based policies executed by Open Policy Agent.
    enabled: false # Enable or disable Gatekeeper.
    # controller_manager:
    #   resources: {}
    # audit:
    #   resources: {}
  terminal:
    # image: 'alpine:3.15' # There must be an nsenter program in the image
    timeout: 600 # Container timeout, if set to 0, no timeout will be used. The unit is seconds

1.2.2 Run the following commands to start the installation

kubectl apply -f kubesphere-installer.yaml

kubectl apply -f cluster-configuration.yaml

1.2.3 View the installation log

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l 'app in (ks-install, ks-installer)' -o jsonpath='{.items[0].metadata.name}') -f
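The installation takes a while. A small sketch for detecting completion from a saved copy of the log, assuming the installer prints a "Welcome to KubeSphere" banner when it finishes, as the 3.x releases do (the function name install_finished is hypothetical):

```shell
# install_finished (hypothetical helper):
# reads ks-installer log text on stdin and succeeds once the
# welcome banner has been printed.
install_finished() {
  grep -q 'Welcome to KubeSphere'
}

# Usage (without -f, so the command returns):
#   kubectl logs -n kubesphere-system deploy/ks-installer | install_finished \
#     && echo "KubeSphere is installed"
```

With the configuration above, the console is exposed as a NodePort on 30880, and because adminPassword is left commented out the admin password is the documented default P@88w0rd.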


Done
