1 References

1 同济子豪兄's keypoint detection tutorial video

2 同济子豪兄's GitHub reference code

3 Blog of the friend who raised this problem

2 Problem Description

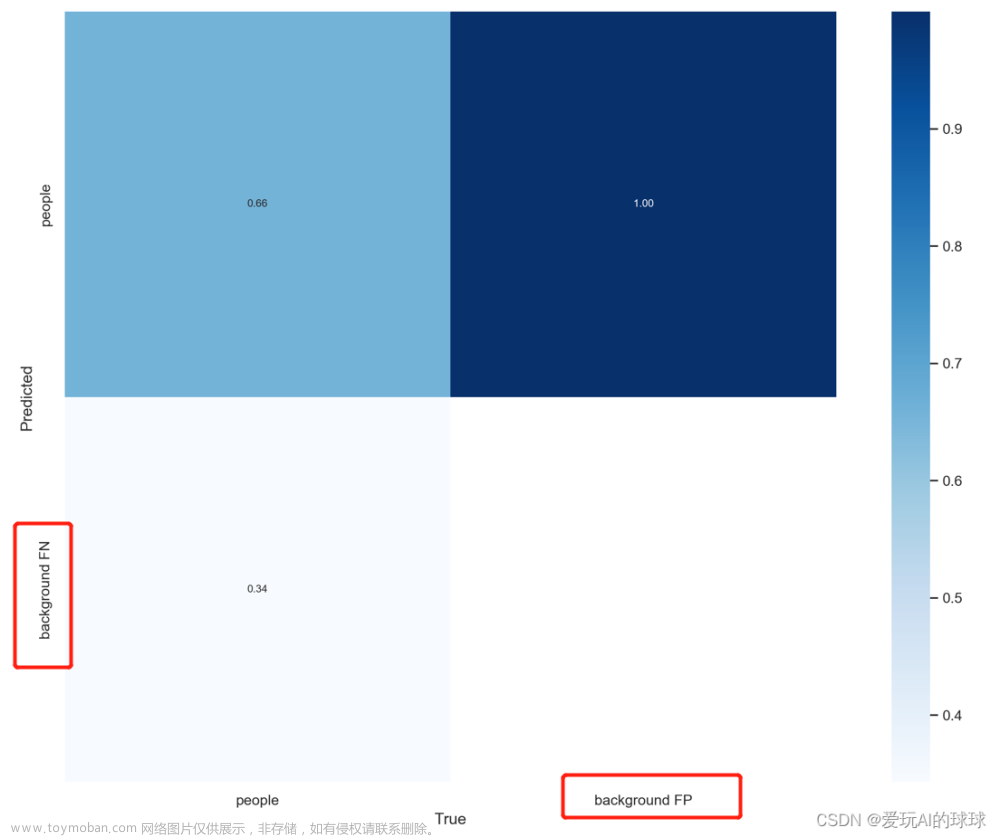

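This section uses a pretrained YOLOv8 model through the Python API to run predictions on a video and visualize the keypoint detection results. Before any code changes, the output looks like the figure. When fewer than all 17 keypoints (IDs 0 to 16) are detected, the missing ones are automatically mapped to the origin (0, 0).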

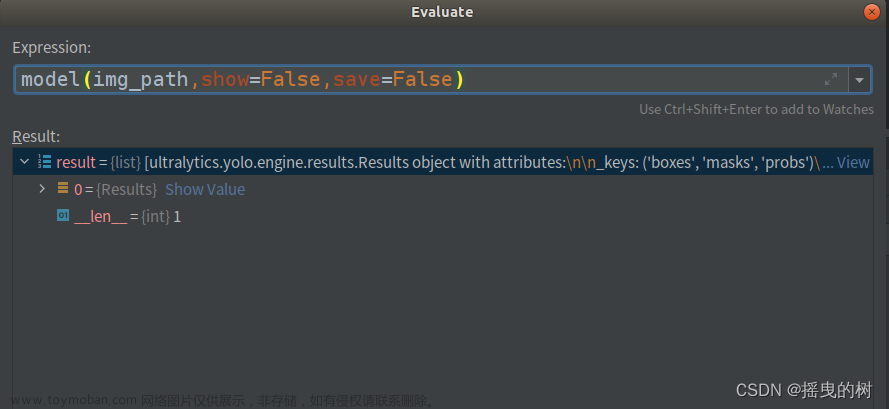
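To make the symptom concrete, here is a minimal sketch on made-up numbers rather than real model output. It assumes each person's keypoints form a 17 × 3 array of (x, y, confidence) in COCO order, as the `kpt_color_map` in the full code suggests, and that undetected keypoints sit at (0, 0). `edges_touching_origin` is a hypothetical helper that lists the skeleton edges which would be drawn toward the top-left corner:

```python
import numpy as np

# Skeleton edges (start ID, end ID), the same pairs as skeleton_map in the full code
SKELETON = [(15, 13), (13, 11), (16, 14), (14, 12), (11, 12), (5, 11), (6, 12),
            (5, 6), (5, 7), (6, 8), (7, 9), (8, 10), (1, 2), (0, 1), (0, 2),
            (1, 3), (2, 4), (3, 5), (4, 6)]


def edges_touching_origin(person_kpts, skeleton):
    """Hypothetical helper: edges with at least one endpoint at the origin (0, 0)."""
    at_origin = (person_kpts[:, 0] == 0) & (person_kpts[:, 1] == 0)
    return [(s, d) for s, d in skeleton if at_origin[s] or at_origin[d]]


# Made-up data for one person: keypoints 0-10 (head and arms) detected,
# keypoints 11-16 (hips, knees, ankles) missing and therefore left at (0, 0)
person = np.zeros((17, 3), dtype=np.float32)
person[:11] = [320.0, 240.0, 0.9]

bad_edges = edges_touching_origin(person, SKELETON)
print(bad_edges)
```

With the lower body missing, seven of the nineteen edges end at the origin, which is consistent with the lines running to the corner of the frame in the figure.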

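Note that in 同济子豪兄's source code, `.data` must be added to the following line for it to run; otherwise it raises an error (likely because in newer ultralytics versions `results[0].keypoints` is a `Keypoints` results object rather than a plain tensor):

```python
results[0].keypoints.data.cpu().numpy().astype('uint32')
```

3 Solution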

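After working through the code, I came up with a fix. The points end up at the origin probably because the origin is also returned as a keypoint and is then drawn and connected like any other. If we draw and connect only the keypoints that were actually detected, this should solve the problem. So I added a line that gets the number of keypoints, and afterwards the loop draws only those keypoints for each box.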
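One thing worth checking about this idea: `bbox_keypoints.shape[0]` counts rows in the array, and YOLOv8-pose returns a row for every one of the 17 keypoints whether or not it was detected. The sketch below uses made-up values and borrows the 0.5 cut-off used later in this post; it compares the row count with a selection based on confidence:

```python
import numpy as np

# Made-up keypoints for one box: 17 rows of (x, y, confidence)
bbox_keypoints = np.zeros((17, 3), dtype=np.float32)
bbox_keypoints[:5] = [100.0, 80.0, 0.95]  # pretend only the 5 face keypoints were detected

# Row count: always 17, so it cannot tell detected from undetected keypoints
num_keypoints = bbox_keypoints.shape[0]

# Confidence-based selection: IDs of the keypoints the model is reasonably sure about
conf_thres = 0.5
detected_ids = np.flatnonzero(bbox_keypoints[:, 2] > conf_thres).tolist()

print(num_keypoints, detected_ids)
```

Looping over `detected_ids` instead of `range(num_keypoints)` would skip the undetected points, which is the intent described above; it only works while the confidence column still holds floats.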

```python
        # All keypoint coordinates and confidences for this box
        bbox_keypoints = bboxes_keypoints[idx]

        # Number of keypoints for this box
        num_keypoints = bbox_keypoints.shape[0]

        # Draw this box's keypoints
        for kpt_id in range(num_keypoints):
            # Color, radius and xy coordinates of this keypoint
            kpt_color = kpt_color_map[kpt_id]['color']
            kpt_radius = kpt_color_map[kpt_id]['radius']
            kpt_x = int(bbox_keypoints[kpt_id][0])
            kpt_y = int(bbox_keypoints[kpt_id][1])

            # Draw a circle: image, xy center, radius, color, line width (-1 = filled)
            img_bgr = cv2.circle(img_bgr, (kpt_x, kpt_y), kpt_radius, kpt_color, -1)

            # Keypoint label text: image, string, top-left corner of the text, font, font size, color, thickness
            kpt_label = str(kpt_id)  # write the keypoint ID (option 1 of 2)
            # kpt_label = str(kpt_color_map[kpt_id]['name'])  # write the keypoint name (option 2 of 2)
            img_bgr = cv2.putText(img_bgr, kpt_label,
                                  (kpt_x + kpt_labelstr['offset_x'], kpt_y + kpt_labelstr['offset_y']),
                                  cv2.FONT_HERSHEY_SIMPLEX, kpt_labelstr['font_size'], kpt_color,
                                  kpt_labelstr['font_thickness'])

    return img_bgr
```

However, the keypoints at the origin were still being picked up. So I considered adding a confidence check to filter out low-confidence keypoints. Here, only keypoints with confidence greater than 0.5 are connected:

```python
bbox_keypoints[srt_kpt_id][2] > 0.5 and bbox_keypoints[dst_kpt_id][2] > 0.5
```

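```python
        # Draw this box's skeleton connections
        for skeleton in skeleton_map:
            # ID of the start keypoint
            srt_kpt_id = skeleton['srt_kpt_id']
            # ID of the end keypoint
            dst_kpt_id = skeleton['dst_kpt_id']

            # Only connect keypoints that exist and whose confidence exceeds 0.5
            if (
                srt_kpt_id < num_keypoints and
                dst_kpt_id < num_keypoints and
                bbox_keypoints[srt_kpt_id][2] > 0.5 and
                bbox_keypoints[dst_kpt_id][2] > 0.5
            ):
                # Start point coordinates
                srt_kpt_x = int(bbox_keypoints[srt_kpt_id][0])
                srt_kpt_y = int(bbox_keypoints[srt_kpt_id][1])
                # End point coordinates
                dst_kpt_x = int(bbox_keypoints[dst_kpt_id][0])
                dst_kpt_y = int(bbox_keypoints[dst_kpt_id][1])
                # Skeleton line color
                skeleton_color = skeleton['color']
                # Skeleton line width
                skeleton_thickness = skeleton['thickness']
                # Draw the skeleton line
                img_bgr = cv2.line(img_bgr, (srt_kpt_x, srt_kpt_y), (dst_kpt_x, dst_kpt_y), color=skeleton_color,
                                   thickness=skeleton_thickness)
```

However, when I ran this code, the keypoints at the origin were still connected. The cause is the earlier line `results[0].keypoints.data.cpu().numpy().astype('uint32')`: casting the array to integers also casts the confidence column, and since every confidence is below 1, they all become 0. With the confidences gone, no threshold can tell detected keypoints from undetected ones. So I changed the code to cast only the coordinates to integers, keep the confidence as a float, and finally concatenate them back into a single `bboxes_keypoints` array.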
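The truncation is easy to reproduce on its own. A minimal NumPy sketch with two made-up keypoint rows, one confident and one not, in the same (persons, keypoints, 3) layout as `keypoints.data`:

```python
import numpy as np

# Made-up data for one person with two keypoints: (x, y, confidence)
kpts = np.array([[[320.7, 240.2, 0.93],
                  [0.0, 0.0, 0.12]]], dtype=np.float32)

# Casting the whole array: every confidence is below 1, so each one is truncated to 0
conf_after_full_cast = kpts.astype('uint32')[..., 2]

# Casting only the xy columns and keeping the confidence as float
xy = kpts[..., :2].astype('uint32')
conf = kpts[..., 2]
keep = conf > 0.5

print(conf_after_full_cast.tolist(), xy.tolist(), keep.tolist())
```

After the full cast, a `> 0.5` check is false for every keypoint; after the split cast it still separates the confident keypoint from the one at the origin.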

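```python
# xy coordinates of the keypoints, cast to integers
bboxes_keypoints_position = results[0].keypoints.data[:, :, :2].cpu().numpy().astype('uint32')
# Keypoint confidences, kept as floats
confidence = results[0].keypoints.data[:, :, 2].cpu().numpy()
# Recombine into one array of shape (num_boxes, num_keypoints, 3)
bboxes_keypoints = np.concatenate([bboxes_keypoints_position, confidence[:, :, None]], axis=2)
```

Note that after this change the concatenated array is floating-point, so whenever you read coordinates to draw keypoints and skeleton lines, it is best to cast them to `int` explicitly; otherwise errors are likely.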
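Why the casts matter: concatenating a `uint32` array with a `float32` array makes NumPy promote the result to `float64`, so coordinates read back out are NumPy floats rather than Python ints, and OpenCV drawing functions such as `cv2.circle` reject float points (at least in recent OpenCV 4.x releases). A small sketch with made-up values:

```python
import numpy as np

xy = np.array([[[320, 240]]], dtype=np.uint32)  # made-up: one person, one keypoint
conf = np.array([[0.93]], dtype=np.float32)

# Same concatenation as above: append the confidence as a third column
combined = np.concatenate([xy, conf[:, :, None]], axis=2)

# uint32 combined with float32 is promoted to float64
print(combined.dtype)

# Explicit int() casts give plain Python ints suitable for OpenCV point tuples
point = (int(combined[0, 0, 0]), int(combined[0, 0, 1]))
print(point)
```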

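```python
# In the skeleton loop: start point coordinates
srt_kpt_x = int(bbox_keypoints[srt_kpt_id][0])
srt_kpt_y = int(bbox_keypoints[srt_kpt_id][1])
# End point coordinates
dst_kpt_x = int(bbox_keypoints[dst_kpt_id][0])
dst_kpt_y = int(bbox_keypoints[dst_kpt_id][1])

# In the keypoint loop: keypoint coordinates
kpt_x = int(bbox_keypoints[kpt_id][0])
kpt_y = int(bbox_keypoints[kpt_id][1])
```

After these changes, keypoints are no longer mapped to the origin and connected. If the problem persists, try adjusting the confidence threshold mentioned above; I used 0.5, but you can tune it for your own footage. Here is the result after my changes: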

4 Full Code

```python
import cv2
import numpy as np
import time
from tqdm import tqdm
from ultralytics import YOLO
import matplotlib.pyplot as plt
import torch

# Use the GPU if one is available, otherwise fall back to the CPU
device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')
print('device:', device)

# Load a pretrained pose model
# model = YOLO('yolov8n-pose.pt')
# model = YOLO('yolov8s-pose.pt')
# model = YOLO('yolov8m-pose.pt')
# model = YOLO('yolov8l-pose.pt')
# model = YOLO('yolov8x-pose.pt')
model = YOLO('yolov8x-pose-p6.pt')

# Move the model to the selected device
model.to(device)
# model.cpu()   # CPU
# model.cuda()  # GPU

# Bounding box (rectangle) visualization settings
bbox_color = (150, 0, 0)  # BGR color of the box
bbox_thickness = 2        # line width of the box

# Box class label text
bbox_labelstr = {
    'font_size': 1,       # font size
    'font_thickness': 2,  # font thickness
    'offset_x': 0,        # X offset of the text, positive = right
    'offset_y': -10,      # Y offset of the text, positive = down
}

# Keypoint BGR colors
kpt_color_map = {
    0: {'name': 'Nose', 'color': [0, 0, 255], 'radius': 6},
    1: {'name': 'Right Eye', 'color': [255, 0, 0], 'radius': 6},
    2: {'name': 'Left Eye', 'color': [255, 0, 0], 'radius': 6},
    3: {'name': 'Right Ear', 'color': [0, 255, 0], 'radius': 6},
    4: {'name': 'Left Ear', 'color': [0, 255, 0], 'radius': 6},
    5: {'name': 'Right Shoulder', 'color': [193, 182, 255], 'radius': 6},
    6: {'name': 'Left Shoulder', 'color': [193, 182, 255], 'radius': 6},
    7: {'name': 'Right Elbow', 'color': [16, 144, 247], 'radius': 6},
    8: {'name': 'Left Elbow', 'color': [16, 144, 247], 'radius': 6},
    9: {'name': 'Right Wrist', 'color': [1, 240, 255], 'radius': 6},
    10: {'name': 'Left Wrist', 'color': [1, 240, 255], 'radius': 6},
    11: {'name': 'Right Hip', 'color': [140, 47, 240], 'radius': 6},
    12: {'name': 'Left Hip', 'color': [140, 47, 240], 'radius': 6},
    13: {'name': 'Right Knee', 'color': [223, 155, 60], 'radius': 6},
    14: {'name': 'Left Knee', 'color': [223, 155, 60], 'radius': 6},
    15: {'name': 'Right Ankle', 'color': [139, 0, 0], 'radius': 6},
    16: {'name': 'Left Ankle', 'color': [139, 0, 0], 'radius': 6},
}

# Keypoint label text
kpt_labelstr = {
    'font_size': 0.5,     # font size
    'font_thickness': 1,  # font thickness
    'offset_x': 10,       # X offset of the text, positive = right
    'offset_y': 0,        # Y offset of the text, positive = down
}

# Skeleton connection BGR colors
skeleton_map = [
    {'srt_kpt_id': 15, 'dst_kpt_id': 13, 'color': [0, 100, 255], 'thickness': 2},    # right ankle - right knee
    {'srt_kpt_id': 13, 'dst_kpt_id': 11, 'color': [0, 255, 0], 'thickness': 2},      # right knee - right hip
    {'srt_kpt_id': 16, 'dst_kpt_id': 14, 'color': [255, 0, 0], 'thickness': 2},      # left ankle - left knee
    {'srt_kpt_id': 14, 'dst_kpt_id': 12, 'color': [0, 0, 255], 'thickness': 2},      # left knee - left hip
    {'srt_kpt_id': 11, 'dst_kpt_id': 12, 'color': [122, 160, 255], 'thickness': 2},  # right hip - left hip
    {'srt_kpt_id': 5, 'dst_kpt_id': 11, 'color': [139, 0, 139], 'thickness': 2},     # right shoulder - right hip
    {'srt_kpt_id': 6, 'dst_kpt_id': 12, 'color': [237, 149, 100], 'thickness': 2},   # left shoulder - left hip
    {'srt_kpt_id': 5, 'dst_kpt_id': 6, 'color': [152, 251, 152], 'thickness': 2},    # right shoulder - left shoulder
    {'srt_kpt_id': 5, 'dst_kpt_id': 7, 'color': [148, 0, 69], 'thickness': 2},       # right shoulder - right elbow
    {'srt_kpt_id': 6, 'dst_kpt_id': 8, 'color': [0, 75, 255], 'thickness': 2},       # left shoulder - left elbow
    {'srt_kpt_id': 7, 'dst_kpt_id': 9, 'color': [56, 230, 25], 'thickness': 2},      # right elbow - right wrist
    {'srt_kpt_id': 8, 'dst_kpt_id': 10, 'color': [0, 240, 240], 'thickness': 2},     # left elbow - left wrist
    {'srt_kpt_id': 1, 'dst_kpt_id': 2, 'color': [224, 255, 255], 'thickness': 2},    # right eye - left eye
    {'srt_kpt_id': 0, 'dst_kpt_id': 1, 'color': [47, 255, 173], 'thickness': 2},     # nose - right eye
    {'srt_kpt_id': 0, 'dst_kpt_id': 2, 'color': [203, 192, 255], 'thickness': 2},    # nose - left eye
    {'srt_kpt_id': 1, 'dst_kpt_id': 3, 'color': [196, 75, 255], 'thickness': 2},     # right eye - right ear
    {'srt_kpt_id': 2, 'dst_kpt_id': 4, 'color': [86, 0, 25], 'thickness': 2},        # left eye - left ear
    {'srt_kpt_id': 3, 'dst_kpt_id': 5, 'color': [255, 255, 0], 'thickness': 2},      # right ear - right shoulder
    {'srt_kpt_id': 4, 'dst_kpt_id': 6, 'color': [255, 18, 200], 'thickness': 2},     # left ear - left shoulder
]


def process_frame(img_bgr):
    '''
    Input: camera frame as a BGR array; output: annotated BGR array
    '''
    results = model(img_bgr, verbose=False)  # verbose=False: don't print the prediction for every frame

    # Number of predicted boxes
    num_bbox = len(results[0].boxes.cls)

    # xyxy coordinates of the predicted boxes
    bboxes_xyxy = results[0].boxes.xyxy.cpu().numpy().astype('uint32')

    # Keypoints: cast only the xy coordinates to integers and keep the confidence as float
    bboxes_keypoints_position = results[0].keypoints.data[:, :, :2].cpu().numpy().astype('uint32')
    confidence = results[0].keypoints.data[:, :, 2].cpu().numpy()
    bboxes_keypoints = np.concatenate([bboxes_keypoints_position, confidence[:, :, None]], axis=2)

    for idx in range(num_bbox):  # iterate over every box
        # Coordinates of this box, converted to Python ints so that adding the
        # negative text offset below cannot overflow the unsigned type
        bbox_xyxy = bboxes_xyxy[idx]
        x1, y1, x2, y2 = (int(v) for v in bbox_xyxy)

        # Predicted class of the box (pose detection has only one class)
        bbox_label = results[0].names[0]

        # Draw the box
        img_bgr = cv2.rectangle(img_bgr, (x1, y1), (x2, y2), bbox_color, bbox_thickness)

        # Box label text: image, string, top-left corner of the text, font, font size, color, thickness
        img_bgr = cv2.putText(img_bgr, bbox_label,
                              (x1 + bbox_labelstr['offset_x'], y1 + bbox_labelstr['offset_y']),
                              cv2.FONT_HERSHEY_SIMPLEX, bbox_labelstr['font_size'], bbox_color,
                              bbox_labelstr['font_thickness'])

        bbox_keypoints = bboxes_keypoints[idx]  # all keypoint coordinates and confidences of this box

        # Number of keypoints
        num_keypoints = bbox_keypoints.shape[0]

        # Draw this box's skeleton connections
        for skeleton in skeleton_map:
            # ID of the start keypoint
            srt_kpt_id = skeleton['srt_kpt_id']
            # ID of the end keypoint
            dst_kpt_id = skeleton['dst_kpt_id']

            # Only connect keypoints that exist and whose confidence exceeds 0.5
            if (
                srt_kpt_id < num_keypoints and
                dst_kpt_id < num_keypoints and
                bbox_keypoints[srt_kpt_id][2] > 0.5 and
                bbox_keypoints[dst_kpt_id][2] > 0.5
            ):
                # Start point coordinates
                srt_kpt_x = int(bbox_keypoints[srt_kpt_id][0])
                srt_kpt_y = int(bbox_keypoints[srt_kpt_id][1])
                # End point coordinates
                dst_kpt_x = int(bbox_keypoints[dst_kpt_id][0])
                dst_kpt_y = int(bbox_keypoints[dst_kpt_id][1])
                # Skeleton line color
                skeleton_color = skeleton['color']
                # Skeleton line width
                skeleton_thickness = skeleton['thickness']
                # Draw the skeleton line
                img_bgr = cv2.line(img_bgr, (srt_kpt_x, srt_kpt_y), (dst_kpt_x, dst_kpt_y), color=skeleton_color,
                                   thickness=skeleton_thickness)

        # Draw this box's keypoints
        for kpt_id in range(num_keypoints):
            # Color, radius and xy coordinates of this keypoint
            kpt_color = kpt_color_map[kpt_id]['color']
            kpt_radius = kpt_color_map[kpt_id]['radius']
            kpt_x = int(bbox_keypoints[kpt_id][0])
            kpt_y = int(bbox_keypoints[kpt_id][1])

            # Draw a circle: image, xy center, radius, color, line width (-1 = filled)
            img_bgr = cv2.circle(img_bgr, (kpt_x, kpt_y), kpt_radius, kpt_color, -1)

            # Keypoint label text: image, string, top-left corner of the text, font, font size, color, thickness
            kpt_label = str(kpt_id)  # write the keypoint ID (option 1 of 2)
            # kpt_label = str(kpt_color_map[kpt_id]['name'])  # write the keypoint name (option 2 of 2)
            img_bgr = cv2.putText(img_bgr, kpt_label,
                                  (kpt_x + kpt_labelstr['offset_x'], kpt_y + kpt_labelstr['offset_y']),
                                  cv2.FONT_HERSHEY_SIMPLEX, kpt_labelstr['font_size'], kpt_color,
                                  kpt_labelstr['font_thickness'])

    return img_bgr


# Video frame-by-frame processing template
# No changes needed here; just define the process_frame function
# 同济子豪兄 2021-7-10
def generate_video(input_path='videos/robot.mp4'):
    filehead = input_path.split('/')[-1]
    output_path = "out-" + filehead
    print('Processing video:', input_path)

    # Count the total number of frames
    cap = cv2.VideoCapture(input_path)
    frame_count = 0
    while cap.isOpened():
        success, frame = cap.read()
        if not success:
            break
        frame_count += 1
    cap.release()
    print('Total frames:', frame_count)

    # cv2.namedWindow('Crack Detection and Measurement Video Processing')
    cap = cv2.VideoCapture(input_path)
    frame_size = (cap.get(cv2.CAP_PROP_FRAME_WIDTH), cap.get(cv2.CAP_PROP_FRAME_HEIGHT))

    # fourcc = int(cap.get(cv2.CAP_PROP_FOURCC))
    # fourcc = cv2.VideoWriter_fourcc(*'XVID')
    fourcc = cv2.VideoWriter_fourcc(*'mp4v')
    fps = cap.get(cv2.CAP_PROP_FPS)
    out = cv2.VideoWriter(output_path, fourcc, fps, (int(frame_size[0]), int(frame_size[1])))

    # Bind the progress bar to the total number of frames
    with tqdm(total=frame_count) as pbar:
        try:
            while cap.isOpened():
                success, frame = cap.read()
                if not success:
                    break

                # Process the frame
                # frame_path = './temp_frame.png'
                # cv2.imwrite(frame_path, frame)
                try:
                    frame = process_frame(frame)
                except Exception as e:
                    # Keep the unprocessed frame if inference or drawing fails
                    print('error:', e)

                # cv2.imshow('Video Processing', frame)
                out.write(frame)

                # Advance the progress bar by one frame
                pbar.update(1)

                # if cv2.waitKey(1) & 0xFF == ord('q'):
                #     break
        except KeyboardInterrupt:
            print('Interrupted')

    cv2.destroyAllWindows()
    out.release()
    cap.release()
    print('Video saved:', output_path)


generate_video(input_path='videos/two.mp4')
# generate_video(input_path='videos/lym.mp4')
# generate_video(input_path='videos/cxk.mp4')
```

5 Acknowledgements

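Thanks to 同济子豪兄 for the tutorial videos and the GitHub code. 子豪兄's CSDN homepage

Thanks also to the friend who raised this problem. 又又土's homepage

This is my first blog post, so if anything is wrong, criticism and corrections are welcome; let's discuss and learn together. Source: https://www.toymoban.com/news/detail-803365.html

Thank you all!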
