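Port 6443 is normally the port that kube-apiserver listens on. If you see this error, either the apiserver has not started or the port is already in use by another process.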

Quick troubleshooting

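- Check the following daemons one by one (docker or containerd, whichever runtime the node uses); if any of them logs errors, investigate those first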

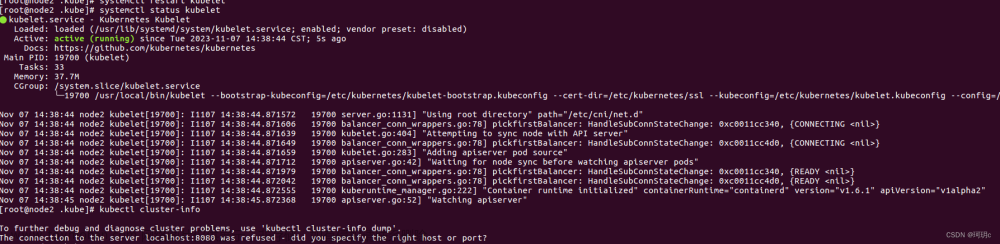

systemctl status kubelet

systemctl status docker

systemctl status containerd

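If none of them shows a problem, check whether the apiserver container is up (use the command that matches your container runtime)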

docker ps -a | grep kube-apiserver

nerdctl -n k8s.io ps | grep kube-apiserver

If the apiserver is not running normally, read its logs to find the error. If it is running normally, check whether etcd has gone down

docker ps -a | grep etcd

nerdctl -n k8s.io ps | grep etcd

Likewise, if there is a problem, read the logs to track it down and fix it
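The container checks above can be condensed into a single filter. This is a minimal sketch assuming Docker is the runtime: `docker ps -a` is asked for the name and status of each container via `--format`, and a small helper (the name `not_running` is made up for this example) prints every container whose status does not start with `Up`.

```shell
# Hypothetical helper: reads "NAME|STATUS" lines on stdin and prints the
# entries whose status does not begin with "Up" (exited, created, ...).
not_running() {
  awk -F'|' '$2 !~ /^Up/ { print $1 ": " $2 }'
}

# Usage on a Docker-based master (commented out so the sketch can be sourced safely):
# docker ps -a --filter name=kube-apiserver --filter name=etcd \
#   --format '{{.Names}}|{{.Status}}' | not_running
# docker logs --tail 50 <container-id>   # then read the logs of whatever it lists
```

An empty result means both containers report `Up`, in which case the causes in the sections below are the next place to look.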

1. Expired certificates

1.1 Check the certificates

On a master node, check when the certificates expire

$ kubeadm certs check-expiration

[check-expiration] Reading configuration from the cluster...

[check-expiration] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[check-expiration] Error reading configuration from the Cluster. Falling back to default configuration

CERTIFICATE EXPIRES RESIDUAL TIME CERTIFICATE AUTHORITY EXTERNALLY MANAGED

admin.conf Jan 21, 2023 06:27 UTC <invalid> ca no

apiserver Jan 21, 2023 06:27 UTC <invalid> ca no

apiserver-etcd-client Jan 21, 2023 06:27 UTC <invalid> etcd-ca no

apiserver-kubelet-client Jan 21, 2023 06:27 UTC <invalid> ca no

controller-manager.conf Jan 21, 2023 06:27 UTC <invalid> ca no

etcd-healthcheck-client Jan 21, 2023 06:27 UTC <invalid> etcd-ca no

etcd-peer Jan 21, 2023 06:27 UTC <invalid> etcd-ca no

etcd-server Jan 21, 2023 06:27 UTC <invalid> etcd-ca no

front-proxy-client Jan 21, 2023 06:27 UTC <invalid> front-proxy-ca no

scheduler.conf Jan 21, 2023 06:27 UTC <invalid> ca no

CERTIFICATE AUTHORITY EXPIRES RESIDUAL TIME EXTERNALLY MANAGED

ca Jan 19, 2032 06:27 UTC 8y no

etcd-ca Jan 19, 2032 06:27 UTC 8y no

front-proxy-ca Jan 19, 2032 06:27 UTC 8y no

If <invalid> appears in the RESIDUAL TIME column, that certificate has expired

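On older clusters (kubeadm before v1.20, where the subcommand still lived under alpha) this command may fail; run this one instead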

kubeadm alpha certs check-expiration
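With that many rows it is easy to miss one, so the expired entries can be filtered out mechanically. A small sketch: the helper name `expired_certs` is invented for this example, and it relies only on the `<invalid>` marker shown in the output above.

```shell
# Hypothetical helper: reads `kubeadm certs check-expiration` output on stdin
# and prints the name (first column) of every entry marked <invalid>.
expired_certs() {
  awk '/<invalid>/ { print $1 }'
}

# Usage on a master node:
# kubeadm certs check-expiration 2>/dev/null | expired_certs
#
# Cross-check a single certificate with openssl (path assumes the default kubeadm layout):
# openssl x509 -noout -enddate -in /etc/kubernetes/pki/apiserver.crt
```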

1.2 Back up the relevant files

cp -r /etc/kubernetes /etc/kubernetes.old
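If the procedure is repeated, a fixed `.old` target gets reused and the earlier backup is no longer a clean copy. A possible variant, assuming a timestamped copy is acceptable (the function name `backup_dir` and the `.bak-<timestamp>` suffix are choices made for this sketch):

```shell
# Hypothetical helper: copies a directory to "<dir>.bak-<timestamp>",
# preserving ownership and permissions, and prints the new path.
backup_dir() {
  local src="${1%/}"                          # strip a trailing slash, if any
  local dest="${src}.bak-$(date +%Y%m%d-%H%M%S)"
  cp -a -- "$src" "$dest" && printf '%s\n' "$dest"
}

# Usage (as root on a master):
# backup_dir /etc/kubernetes
```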

1.3 Run the renew command on every master

$ kubeadm certs renew all

[renew] Reading configuration from the cluster...

[renew] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[renew] Error reading configuration from the Cluster. Falling back to default configuration

certificate embedded in the kubeconfig file for the admin to use and for kubeadm itself renewed

certificate for serving the Kubernetes API renewed

certificate the apiserver uses to access etcd renewed

certificate for the API server to connect to kubelet renewed

certificate embedded in the kubeconfig file for the controller manager to use renewed

certificate for liveness probes to healthcheck etcd renewed

certificate for etcd nodes to communicate with each other renewed

certificate for serving etcd renewed

certificate for the front proxy client renewed

certificate embedded in the kubeconfig file for the scheduler manager to use renewed

On older clusters this command fails as well; run this one instead:

kubeadm alpha certs renew all

1.4 Restart the relevant services on every master node

docker ps | grep -E "k8s_kube-apiserver|k8s_kube-scheduler|k8s_kube-controller" | awk '{print $1}' | xargs docker restart
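On a containerd-based master the `docker restart` pipeline does not apply. One alternative, sketched here under the assumption that the control plane runs as kubeadm static Pods, is to move the manifests out of the kubelet's manifest directory, wait for the kubelet to stop the Pods, and move them back. The function name, the temporary parking directory and the 20-second default wait are choices made for this example; note that it restarts every static Pod in the directory, etcd included.

```shell
# Hypothetical helper: temporarily moves every *.yaml manifest out of a
# directory, waits, then moves them back so the kubelet recreates the Pods.
bounce_manifests() {
  local dir="$1" wait_s="${2:-20}"
  local park
  park=$(mktemp -d) || return 1
  mv -- "$dir"/*.yaml "$park"/ || return 1
  sleep "$wait_s"                 # give the kubelet time to notice and stop the Pods
  mv -- "$park"/*.yaml "$dir"/ && rmdir -- "$park"
}

# Usage (as root, one master at a time):
# bounce_manifests /etc/kubernetes/manifests 20
```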

1.5 Update the kubeconfig file

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

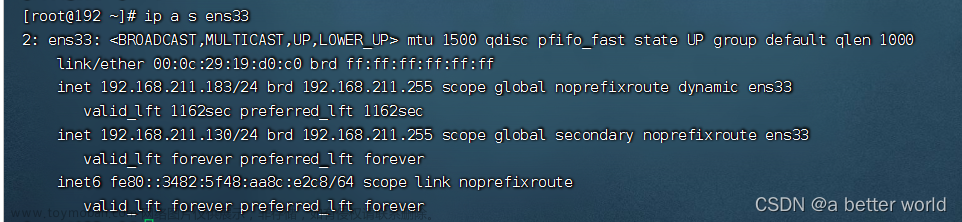

2. Hardware clock and system clock out of sync

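After a server reboot the system time is set from the hardware clock. If the hardware clock is wrong, time across the cluster becomes inconsistent, which leads to this kind of error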

In this case, correct the system time and then bring the hardware clock and the system clock back in sync

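date # check the system time

hwclock # check the hardware clock

# If the system time and the hardware clock agree with each other but are clearly not the time the server had before the reboot, continue below. Otherwise this case does not apply; see the other sections.

date -s "2022-12-08 12:00:00" # first set the system time; as an example, this sets it to 12:00 noon on 8 December 2022

hwclock --systohc # then write the corrected system time to the hardware clock

timedatectl | awk -F":" '/synchronized/{print $2}' # check whether NTP synchronization is ready; it usually shows yes after about 20-30 minutes

kubectl get node # check whether the error still occurs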
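Comparing the two clocks by eye is error-prone, so the difference can be computed instead. A rough sketch: `clock_drift` is a made-up helper that takes two epoch timestamps, and the usage lines assume GNU `date` can parse whatever your `hwclock` version prints, which is not true for every release.

```shell
# Hypothetical helper: prints the absolute difference, in seconds,
# between two epoch timestamps (e.g. system clock vs. hardware clock).
clock_drift() {
  local a="$1" b="$2"
  local d=$(( a - b ))
  [ "$d" -lt 0 ] && d=$(( 0 - d ))
  printf '%s\n' "$d"
}

# Usage (assumes `date -d` understands the hwclock output format on this host):
# sys=$(date +%s)
# hw=$(date -d "$(hwclock)" +%s)
# clock_drift "$sys" "$hw"
```

A drift of a second or two is normal; a drift of hours or days points to the situation described in this section.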

3. Check whether the port is in use or blocked by a firewall / iptables

netstat -napt | grep 6443 # first confirm whether the port is in use

# If you use firewalld, open the required ports through firewall-cmd

systemctl enable firewalld

systemctl start firewalld

firewall-cmd --permanent --add-port=6443/tcp

firewall-cmd --permanent --add-port=2379-2380/tcp

firewall-cmd --permanent --add-port=10250-10255/tcp

firewall-cmd --reload

# iptables rules, or security hardening that disabled the port

iptables -nL # check whether any rule blocks port 6443; if so, investigate it
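A plain `grep 6443` also matches established client connections and unrelated ports such as 16443. The sketch below narrows the `netstat -napt` output to sockets that are actually listening on the port; `listeners_on` is a hypothetical helper, and the column positions assume the usual Linux net-tools layout (local address in column 4, state in column 6, PID/program in column 7).

```shell
# Hypothetical helper: reads `netstat -napt` output on stdin and prints
# "local-address pid/program" for every socket LISTENing on the given port.
listeners_on() {
  awk -v port="$1" '$6 == "LISTEN" && $4 ~ (":" port "$") { print $4, $7 }'
}

# Usage:
# netstat -napt 2>/dev/null | listeners_on 6443
# Anything other than kube-apiserver in the output means another process owns the port.
```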

4. Hostname changed

4.1 General fix

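# Re-declare the environment variable

ls -l /etc/kubernetes/admin.conf # confirm the file exists; if it does, continue with the steps below

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile # write the environment variable again

source ~/.bash_profile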
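Running the `echo ... >>` line more than once leaves duplicate exports in the profile. A minimal sketch of an idempotent variant, where `ensure_line` is a helper name invented for this example:

```shell
# Hypothetical helper: appends a line to a file only if that exact line
# is not already present.
ensure_line() {
  local file="$1" line="$2"
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Usage:
# ensure_line ~/.bash_profile 'export KUBECONFIG=/etc/kubernetes/admin.conf'
# source ~/.bash_profile
```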

4.2 Fix for containerd

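systemctl restart kubelet # try restarting kubelet and see whether the cluster recovers

journalctl -xefu kubelet # read the kubelet log and look for the relevant errors

nerdctl -n k8s.io ps # depending on the iomp version, use docker or nerdctl to check the state of the k8s containers

# Confirm that the k8s containers are healthy; if any container is abnormal, investigate it

kubectl get node # check whether the error still occurs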
