The Python version is 3.12.

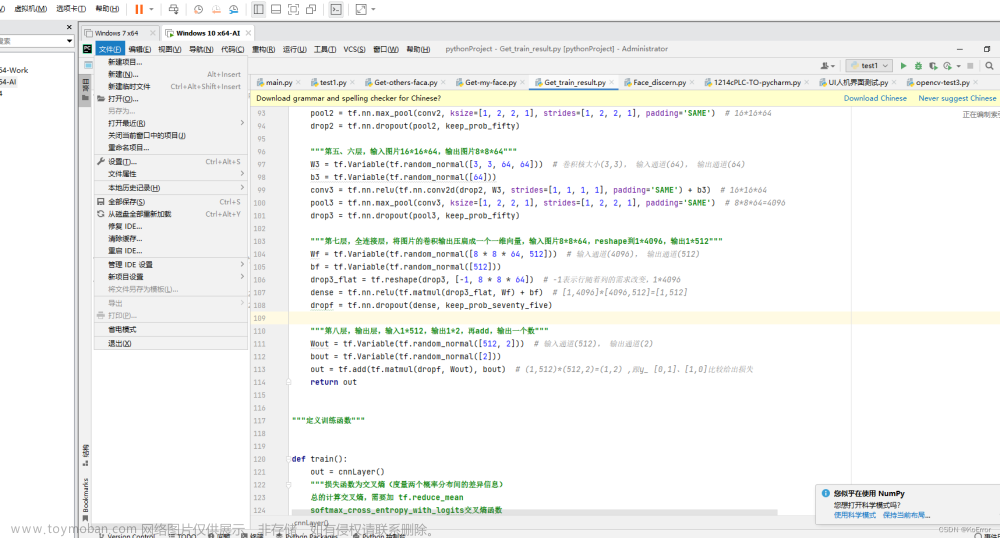

The code I ran:

from pyspark import SparkConf, SparkContext

# Tell PySpark which Python interpreter to use

import os

os.environ['PYSPARK_PYTHON'] = "D:/python/python.exe"

# Create the SparkConf object

conf = SparkConf().setMaster("local[*]").setAppName("test_spark_app")

sc = SparkContext(conf=conf)

# Print the Spark version

print(sc.version)

# Run a simple computation

rdd1 = sc.parallelize([1,2,3,4,5])

rdd3 = rdd1.map(lambda X: X * 10)

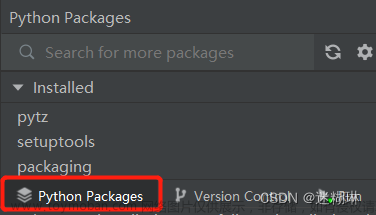

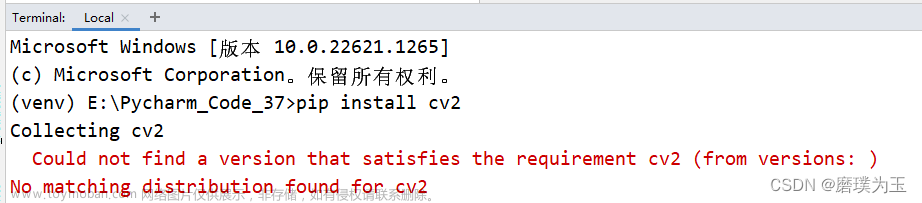

print(rdd3.collect())环境配置如下:

path路径配置:

The error output is as follows:

D:\python\python.exe "D:\python工具\python学习工具\第二阶段\test pyspark.py"

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

3.5.0

24/01/07 16:24:31 ERROR Executor: Exception in task 14.0 in stage 0.0 (TID 14)

org.apache.spark.SparkException: Python worker exited unexpectedly (crashed)

at org.apache.spark.api.python.BasePythonRunner$ReaderIterator$$anonfun$1.applyOrElse(PythonRunner.scala:612)

at org.apache.spark.api.python.BasePythonRunner$ReaderIterator$$anonfun$1.applyOrElse(PythonRunner.scala:594)

at scala.runtime.AbstractPartialFunction.apply(AbstractPartialFunction.scala:38)

at org.apache.spark.api.python.PythonRunner$$anon$3.read(PythonRunner.scala:789)

at org.apache.spark.api.python.PythonRunner$$anon$3.read(PythonRunner.scala:766)

at org.apache.spark.api.python.BasePythonRunner$ReaderIterator.hasNext(PythonRunner.scala:525)

at org.apache.spark.InterruptibleIterator.hasNext(InterruptibleIterator.scala:37)

at scala.collection.Iterator.foreach(Iterator.scala:943)

at scala.collection.Iterator.foreach$(Iterator.scala:943)

at org.apache.spark.InterruptibleIterator.foreach(InterruptibleIterator.scala:28)

at scala.collection.generic.Growable.$plus$plus$eq(Growable.scala:62)

at scala.collection.generic.Growable.$plus$plus$eq$(Growable.scala:53)

at scala.collection.mutable.ArrayBuffer.$plus$plus$eq(ArrayBuffer.scala:105)

at scala.collection.mutable.ArrayBuffer.$plus$plus$eq(ArrayBuffer.scala:49)

at scala.collection.TraversableOnce.to(TraversableOnce.scala:366)

at scala.collection.TraversableOnce.to$(TraversableOnce.scala:364)

at org.apache.spark.InterruptibleIterator.to(InterruptibleIterator.scala:28)

at scala.collection.TraversableOnce.toBuffer(TraversableOnce.scala:358)

at scala.collection.TraversableOnce.toBuffer$(TraversableOnce.scala:358)

at org.apache.spark.InterruptibleIterator.toBuffer(InterruptibleIterator.scala:28)

at scala.collection.TraversableOnce.toArray(TraversableOnce.scala:345)

at scala.collection.TraversableOnce.toArray$(TraversableOnce.scala:339)

at org.apache.spark.InterruptibleIterator.toArray(InterruptibleIterator.scala:28)

at org.apache.spark.rdd.RDD.$anonfun$collect$2(RDD.scala:1046)

at org.apache.spark.SparkContext.$anonfun$runJob$5(SparkContext.scala:2438)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:93)

at org.apache.spark.TaskContext.runTaskWithListeners(TaskContext.scala:161)

at org.apache.spark.scheduler.Task.run(Task.scala:141)

at org.apache.spark.executor.Executor$TaskRunner.$anonfun$run$4(Executor.scala:620)

at org.apache.spark.util.SparkErrorUtils.tryWithSafeFinally(SparkErrorUtils.scala:64)

at org.apache.spark.util.SparkErrorUtils.tryWithSafeFinally$(SparkErrorUtils.scala:61)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:94)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:623)

at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1136)

at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:635)

at java.base/java.lang.Thread.run(Thread.java:842)

Caused by: java.io.EOFException

at java.base/java.io.DataInputStream.readInt(DataInputStream.java:398)

at org.apache.spark.api.python.PythonRunner$$anon$3.read(PythonRunner.scala:774)

... 32 more

24/01/07 16:24:32 WARN TaskSetManager: Lost task 14.0 in stage 0.0 (TID 14) (lxs010571022059.bdo.com.cn executor driver): org.apache.spark.SparkException: Python worker exited unexpectedly (crashed)

at org.apache.spark.api.python.BasePythonRunner$ReaderIterator$$anonfun$1.applyOrElse(PythonRunner.scala:612)

at org.apache.spark.api.python.BasePythonRunner$ReaderIterator$$anonfun$1.applyOrElse(PythonRunner.scala:594)

at scala.runtime.AbstractPartialFunction.apply(AbstractPartialFunction.scala:38)

at org.apache.spark.api.python.PythonRunner$$anon$3.read(PythonRunner.scala:789)

at org.apache.spark.api.python.PythonRunner$$anon$3.read(PythonRunner.scala:766)

at org.apache.spark.api.python.BasePythonRunner$ReaderIterator.hasNext(PythonRunner.scala:525)

at org.apache.spark.InterruptibleIterator.hasNext(InterruptibleIterator.scala:37)

at scala.collection.Iterator.foreach(Iterator.scala:943)

at scala.collection.Iterator.foreach$(Iterator.scala:943)

at org.apache.spark.InterruptibleIterator.foreach(InterruptibleIterator.scala:28)

at scala.collection.generic.Growable.$plus$plus$eq(Growable.scala:62)

at scala.collection.generic.Growable.$plus$plus$eq$(Growable.scala:53)

at scala.collection.mutable.ArrayBuffer.$plus$plus$eq(ArrayBuffer.scala:105)

at scala.collection.mutable.ArrayBuffer.$plus$plus$eq(ArrayBuffer.scala:49)

at scala.collection.TraversableOnce.to(TraversableOnce.scala:366)

at scala.collection.TraversableOnce.to$(TraversableOnce.scala:364)

at org.apache.spark.InterruptibleIterator.to(InterruptibleIterator.scala:28)

at scala.collection.TraversableOnce.toBuffer(TraversableOnce.scala:358)

at scala.collection.TraversableOnce.toBuffer$(TraversableOnce.scala:358)

at org.apache.spark.InterruptibleIterator.toBuffer(InterruptibleIterator.scala:28)

at scala.collection.TraversableOnce.toArray(TraversableOnce.scala:345)

at scala.collection.TraversableOnce.toArray$(TraversableOnce.scala:339)

at org.apache.spark.InterruptibleIterator.toArray(InterruptibleIterator.scala:28)

at org.apache.spark.rdd.RDD.$anonfun$collect$2(RDD.scala:1046)

at org.apache.spark.SparkContext.$anonfun$runJob$5(SparkContext.scala:2438)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:93)

at org.apache.spark.TaskContext.runTaskWithListeners(TaskContext.scala:161)

at org.apache.spark.scheduler.Task.run(Task.scala:141)

at org.apache.spark.executor.Executor$TaskRunner.$anonfun$run$4(Executor.scala:620)

at org.apache.spark.util.SparkErrorUtils.tryWithSafeFinally(SparkErrorUtils.scala:64)

at org.apache.spark.util.SparkErrorUtils.tryWithSafeFinally$(SparkErrorUtils.scala:61)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:94)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:623)

at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1136)

at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:635)

at java.base/java.lang.Thread.run(Thread.java:842)

Caused by: java.io.EOFException

at java.base/java.io.DataInputStream.readInt(DataInputStream.java:398)

at org.apache.spark.api.python.PythonRunner$$anon$3.read(PythonRunner.scala:774)

... 32 more

24/01/07 16:24:32 ERROR TaskSetManager: Task 14 in stage 0.0 failed 1 times; aborting job

Traceback (most recent call last):

File "D:\python工具\python学习工具\第二阶段\test pyspark.py", line 21, in <module>

print(rdd3.collect())

^^^^^^^^^^^^^^

File "D:\python\Lib\site-packages\pyspark\rdd.py", line 1833, in collect

sock_info = self.ctx._jvm.PythonRDD.collectAndServe(self._jrdd.rdd())

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "D:\python\Lib\site-packages\py4j\java_gateway.py", line 1322, in __call__

return_value = get_return_value(

^^^^^^^^^^^^^^^^^

File "D:\python\Lib\site-packages\py4j\protocol.py", line 326, in get_return_value

raise Py4JJavaError(

py4j.protocol.Py4JJavaError: An error occurred while calling z:org.apache.spark.api.python.PythonRDD.collectAndServe.

: org.apache.spark.SparkException: Job aborted due to stage failure: Task 14 in stage 0.0 failed 1 times, most recent failure: Lost task 14.0 in stage 0.0 (TID 14) (lxs010571022059.bdo.com.cn executor driver): org.apache.spark.SparkException: Python worker exited unexpectedly (crashed)

at org.apache.spark.api.python.BasePythonRunner$ReaderIterator$$anonfun$1.applyOrElse(PythonRunner.scala:612)

at org.apache.spark.api.python.BasePythonRunner$ReaderIterator$$anonfun$1.applyOrElse(PythonRunner.scala:594)

at scala.runtime.AbstractPartialFunction.apply(AbstractPartialFunction.scala:38)

at org.apache.spark.api.python.PythonRunner$$anon$3.read(PythonRunner.scala:789)

at org.apache.spark.api.python.PythonRunner$$anon$3.read(PythonRunner.scala:766)

at org.apache.spark.api.python.BasePythonRunner$ReaderIterator.hasNext(PythonRunner.scala:525)

at org.apache.spark.InterruptibleIterator.hasNext(InterruptibleIterator.scala:37)

at scala.collection.Iterator.foreach(Iterator.scala:943)

at scala.collection.Iterator.foreach$(Iterator.scala:943)

at org.apache.spark.InterruptibleIterator.foreach(InterruptibleIterator.scala:28)

at scala.collection.generic.Growable.$plus$plus$eq(Growable.scala:62)

at scala.collection.generic.Growable.$plus$plus$eq$(Growable.scala:53)

at scala.collection.mutable.ArrayBuffer.$plus$plus$eq(ArrayBuffer.scala:105)

at scala.collection.mutable.ArrayBuffer.$plus$plus$eq(ArrayBuffer.scala:49)

at scala.collection.TraversableOnce.to(TraversableOnce.scala:366)

at scala.collection.TraversableOnce.to$(TraversableOnce.scala:364)

at org.apache.spark.InterruptibleIterator.to(InterruptibleIterator.scala:28)

at scala.collection.TraversableOnce.toBuffer(TraversableOnce.scala:358)

at scala.collection.TraversableOnce.toBuffer$(TraversableOnce.scala:358)

at org.apache.spark.InterruptibleIterator.toBuffer(InterruptibleIterator.scala:28)

at scala.collection.TraversableOnce.toArray(TraversableOnce.scala:345)

at scala.collection.TraversableOnce.toArray$(TraversableOnce.scala:339)

at org.apache.spark.InterruptibleIterator.toArray(InterruptibleIterator.scala:28)

at org.apache.spark.rdd.RDD.$anonfun$collect$2(RDD.scala:1046)

at org.apache.spark.SparkContext.$anonfun$runJob$5(SparkContext.scala:2438)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:93)

at org.apache.spark.TaskContext.runTaskWithListeners(TaskContext.scala:161)

at org.apache.spark.scheduler.Task.run(Task.scala:141)

at org.apache.spark.executor.Executor$TaskRunner.$anonfun$run$4(Executor.scala:620)

at org.apache.spark.util.SparkErrorUtils.tryWithSafeFinally(SparkErrorUtils.scala:64)

at org.apache.spark.util.SparkErrorUtils.tryWithSafeFinally$(SparkErrorUtils.scala:61)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:94)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:623)

at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1136)

at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:635)

at java.base/java.lang.Thread.run(Thread.java:842)

Caused by: java.io.EOFException

at java.base/java.io.DataInputStream.readInt(DataInputStream.java:398)

at org.apache.spark.api.python.PythonRunner$$anon$3.read(PythonRunner.scala:774)

... 32 more

Driver stacktrace:

at org.apache.spark.scheduler.DAGScheduler.failJobAndIndependentStages(DAGScheduler.scala:2844)

at org.apache.spark.scheduler.DAGScheduler.$anonfun$abortStage$2(DAGScheduler.scala:2780)

at org.apache.spark.scheduler.DAGScheduler.$anonfun$abortStage$2$adapted(DAGScheduler.scala:2779)

at scala.collection.mutable.ResizableArray.foreach(ResizableArray.scala:62)

at scala.collection.mutable.ResizableArray.foreach$(ResizableArray.scala:55)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:49)

at org.apache.spark.scheduler.DAGScheduler.abortStage(DAGScheduler.scala:2779)

at org.apache.spark.scheduler.DAGScheduler.$anonfun$handleTaskSetFailed$1(DAGScheduler.scala:1242)

at org.apache.spark.scheduler.DAGScheduler.$anonfun$handleTaskSetFailed$1$adapted(DAGScheduler.scala:1242)

at scala.Option.foreach(Option.scala:407)

at org.apache.spark.scheduler.DAGScheduler.handleTaskSetFailed(DAGScheduler.scala:1242)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.doOnReceive(DAGScheduler.scala:3048)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2982)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2971)

at org.apache.spark.util.EventLoop$$anon$1.run(EventLoop.scala:49)

at org.apache.spark.scheduler.DAGScheduler.runJob(DAGScheduler.scala:984)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2398)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2419)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2438)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2463)

at org.apache.spark.rdd.RDD.$anonfun$collect$1(RDD.scala:1046)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:112)

at org.apache.spark.rdd.RDD.withScope(RDD.scala:407)

at org.apache.spark.rdd.RDD.collect(RDD.scala:1045)

at org.apache.spark.api.python.PythonRDD$.collectAndServe(PythonRDD.scala:195)

at org.apache.spark.api.python.PythonRDD.collectAndServe(PythonRDD.scala)

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:77)

at java.base/jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.base/java.lang.reflect.Method.invoke(Method.java:568)

at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:244)

at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:374)

at py4j.Gateway.invoke(Gateway.java:282)

at py4j.commands.AbstractCommand.invokeMethod(AbstractCommand.java:132)

at py4j.commands.CallCommand.execute(CallCommand.java:79)

at py4j.ClientServerConnection.waitForCommands(ClientServerConnection.java:182)

at py4j.ClientServerConnection.run(ClientServerConnection.java:106)

at java.base/java.lang.Thread.run(Thread.java:842)

Caused by: org.apache.spark.SparkException: Python worker exited unexpectedly (crashed)

at org.apache.spark.api.python.BasePythonRunner$ReaderIterator$$anonfun$1.applyOrElse(PythonRunner.scala:612)

at org.apache.spark.api.python.BasePythonRunner$ReaderIterator$$anonfun$1.applyOrElse(PythonRunner.scala:594)

at scala.runtime.AbstractPartialFunction.apply(AbstractPartialFunction.scala:38)

at org.apache.spark.api.python.PythonRunner$$anon$3.read(PythonRunner.scala:789)

at org.apache.spark.api.python.PythonRunner$$anon$3.read(PythonRunner.scala:766)

at org.apache.spark.api.python.BasePythonRunner$ReaderIterator.hasNext(PythonRunner.scala:525)

at org.apache.spark.InterruptibleIterator.hasNext(InterruptibleIterator.scala:37)

at scala.collection.Iterator.foreach(Iterator.scala:943)

at scala.collection.Iterator.foreach$(Iterator.scala:943)

at org.apache.spark.InterruptibleIterator.foreach(InterruptibleIterator.scala:28)

at scala.collection.generic.Growable.$plus$plus$eq(Growable.scala:62)

at scala.collection.generic.Growable.$plus$plus$eq$(Growable.scala:53)

at scala.collection.mutable.ArrayBuffer.$plus$plus$eq(ArrayBuffer.scala:105)

at scala.collection.mutable.ArrayBuffer.$plus$plus$eq(ArrayBuffer.scala:49)

at scala.collection.TraversableOnce.to(TraversableOnce.scala:366)

at scala.collection.TraversableOnce.to$(TraversableOnce.scala:364)

at org.apache.spark.InterruptibleIterator.to(InterruptibleIterator.scala:28)

at scala.collection.TraversableOnce.toBuffer(TraversableOnce.scala:358)

at scala.collection.TraversableOnce.toBuffer$(TraversableOnce.scala:358)

at org.apache.spark.InterruptibleIterator.toBuffer(InterruptibleIterator.scala:28)

at scala.collection.TraversableOnce.toArray(TraversableOnce.scala:345)

at scala.collection.TraversableOnce.toArray$(TraversableOnce.scala:339)

at org.apache.spark.InterruptibleIterator.toArray(InterruptibleIterator.scala:28)

at org.apache.spark.rdd.RDD.$anonfun$collect$2(RDD.scala:1046)

at org.apache.spark.SparkContext.$anonfun$runJob$5(SparkContext.scala:2438)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:93)

at org.apache.spark.TaskContext.runTaskWithListeners(TaskContext.scala:161)

at org.apache.spark.scheduler.Task.run(Task.scala:141)

at org.apache.spark.executor.Executor$TaskRunner.$anonfun$run$4(Executor.scala:620)

at org.apache.spark.util.SparkErrorUtils.tryWithSafeFinally(SparkErrorUtils.scala:64)

at org.apache.spark.util.SparkErrorUtils.tryWithSafeFinally$(SparkErrorUtils.scala:61)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:94)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:623)

at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1136)

at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:635)

... 1 more

Caused by: java.io.EOFException

at java.base/java.io.DataInputStream.readInt(DataInputStream.java:398)

at org.apache.spark.api.python.PythonRunner$$anon$3.read(PythonRunner.scala:774)

... 32 more
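The log above only says that the Python worker died before sending anything back (`java.io.EOFException`), so it does not name a cause. One thing that may be worth ruling out is a version mismatch: which interpreter the workers actually start, and whether the installed PySpark release lists that Python version as supported. The sketch below is only a diagnostic aid under that assumption, not a confirmed fix. The helper names `interpreter_version`, `installed_version` and `describe_environment` are made up for this example; it uses only the standard library and reads the same `PYSPARK_PYTHON` variable the script sets.

```python
import importlib.metadata
import os
import subprocess
import sys


def interpreter_version(python_path):
    """Ask the interpreter at python_path for its (major, minor, micro) version."""
    probe = "import sys; print('.'.join(map(str, sys.version_info[:3])))"
    result = subprocess.run(
        [python_path, "-c", probe],
        capture_output=True,
        text=True,
        check=True,
    )
    return tuple(int(part) for part in result.stdout.strip().split("."))


def installed_version(package):
    """Return the installed version string of package, or None if it is absent."""
    try:
        return importlib.metadata.version(package)
    except importlib.metadata.PackageNotFoundError:
        return None


def describe_environment():
    """Collect the versions that matter when a PySpark worker crashes on start."""
    # Fall back to the driver interpreter if PYSPARK_PYTHON is not set.
    worker_python = os.environ.get("PYSPARK_PYTHON", sys.executable)
    return {
        "driver_python": tuple(sys.version_info[:3]),
        "worker_python_path": worker_python,
        "worker_python": interpreter_version(worker_python),
        "pyspark": installed_version("pyspark"),
    }


if __name__ == "__main__":
    for key, value in describe_environment().items():
        print(f"{key}: {value}")
```

If the reported worker Python is newer than what the documentation for the installed PySpark release lists, pointing `PYSPARK_PYTHON` at an older interpreter, or installing a PySpark release that does list it, would be the next thing to try.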
