本笔记基于清华大学《机器学习》的课程讲义中有关机器学习的此前未提到的部分,基本为笔者在考试前一两天所作的Cheat Sheet。内容较多,并不详细,主要作为复习和记忆的资料。

Robust Machine Learning

Attack: PGD

- max δ ∈ Δ L o s s ( f θ ( x + δ ) , y ) \max_{\delta\in \Delta}Loss(f_\theta(x+\delta),y) maxδ∈ΔLoss(fθ(x+δ),y): find δ \delta δ that maximizes the loss

- How to compute

δ

\delta

δ?

- Projected Gradient Descent: GD then project back to Δ \Delta Δ

- Fast Gradient Sign Method: For example, Δ = { δ : ∣ δ ∣ ∞ ≤ ϵ } \Delta =\{\delta:|\delta|_\infty\le \epsilon\} Δ={δ:∣δ∣∞≤ϵ}. Then as learning rate goes to ∞ \infty ∞, we always go to corner. Then δ = η ⋅ s i g n ( ∇ x L θ ( x + δ t , y ) ) \delta=\eta\cdot sign(\nabla_xL_\theta(x+\delta_t,y)) δ=η⋅sign(∇xLθ(x+δt,y)), we only take the sign.

- PGD: runs FGSM multiple times.

Defend: Adversarial Training

-

Daskin’s Theorem:

∂ ∂ θ max δ L ( f θ ( x + δ , y ) ) = ∂ ∂ θ L ( f θ ( x + δ ∗ , y ) ) \frac{\partial}{\partial \theta }\max_\delta L(f_\theta(x+\delta,y))=\frac{\partial }{\partial \theta}L(f_\theta(x+\delta^*,y)) ∂θ∂δmaxL(fθ(x+δ,y))=∂θ∂L(fθ(x+δ∗,y))

Only care about the worst attack samples. For a batch of samples, find δ ∗ \delta^* δ∗, then do GD on θ \theta θ based on δ ∗ \delta^* δ∗. -

Models become more semantically meaningful by adversarial training

Robust Feature Learning

- For a dog photo x x x, attack it to x ′ x' x′, so that f ( x ′ ) = c a t f(x')=cat f(x′)=cat. This ( x ′ , c a t ) (x',cat) (x′,cat) training set gives model the “non-robust” features.

- For train image x x x, generate from random initialization x τ x_\tau xτ such that g ( x ) ≈ g ( x τ ) g(x)\approx g(x_\tau) g(x)≈g(xτ). x τ x_\tau xτ is also a good training sample that gives similar robust feature to the model.

False Sense of Security

- Backward pass differentiable approximation(ignore the gradient that is hard to compute).

- Take multiple samples to solve the randomness.

Provable Robust Certificates

- Classification problem: use histogram, pick the largest color nearby.

- Compare the histogram centered at

x

x

x and

x

+

δ

x+\delta

x+δ:

- Worst case: Greedy filling(*)

Hyperparameter

Bayesian Optimization

- estimate the parameters as Gaussian.

- explore both the great-variance point and the low point.

Gradient descent

- Directly use GD to find hyperparameter: memory storage problem

- SGD with momentum:

v

t

+

1

=

γ

t

v

t

−

(

1

−

γ

t

)

∇

w

L

(

w

t

,

θ

,

t

)

v_{t+1}=\gamma_tv_t-(1-\gamma_t)\nabla _wL(w_t,\theta ,t)

vt+1=γtvt−(1−γt)∇wL(wt,θ,t)

- Store w t + 1 , v t + 1 w_{t+1},v_{t+1} wt+1,vt+1 and ∇ w L ( w t , θ , t ) \nabla _wL(w_t,\theta,t) ∇wL(wt,θ,t), we can recover the whole chain

- Need: Continuous

Random Search

- Work better than grid search

Best Arm identification

-

Successive Halving(SH) Algorithm(*)

- Per round use B / log 2 ( n ) B/\log_2(n) B/log2(n), log 2 ( n ) \log_2(n) log2(n) rounds totally.

- Each round, every survivor use the same budget. Only half of them survive in each round.

-

Assume v 1 > v 2 ≥ . . . ≥ v n , Δ i = v 1 − v i v_1>v_2\ge ...\ge v_n,\Delta_i=v_1-v_i v1>v2≥...≥vn,Δi=v1−vi. With probability 1 − δ 1-\delta 1−δ, the algorithm finds the best arm with B = O ( max i > 1 i Δ i 2 log n log ( log n / δ ) ) B=O(\max_{i>1}\frac{i}{\Delta_i^2}\log n\log(\log n/\delta)) B=O(maxi>1Δi2ilognlog(logn/δ)) assuming v i ∈ [ 0 , 1 ] v_i\in [0,1] vi∈[0,1]

- Proof. Concentration inequaility, probability that 1 3 \frac{1}{3} 31 of 3 4 N r \frac{3}{4}N_r 43Nr smaller ones are greater than best one is bounded, union bound for all round.

-

Hyperparameter tuning

- B ≥ 2 log 2 ( n ) ( n + ∑ i = 2... n γ ˉ − 1 ( v i − v 1 2 ) ) B\ge 2\log_2(n)\left(n+\sum_{i=2...n}\bar{\gamma}^{-1}(\frac{v_i-v_1}{2})\right) B≥2log2(n)(n+∑i=2...nγˉ−1(2vi−v1))

Neural Architecture Search

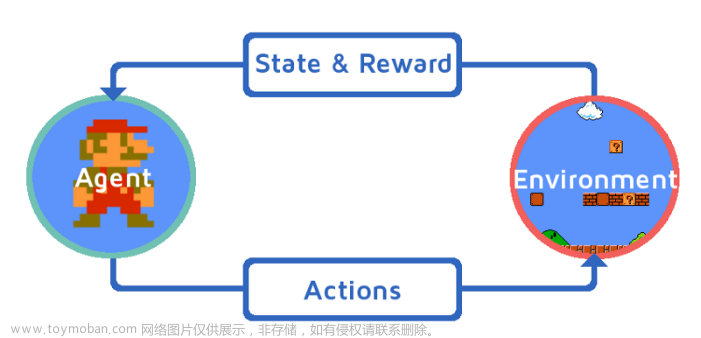

- Reinforcement learning

- ProxylessNAS: each architecture has weight α i \alpha_i αi to be chosen. Choose a binary variable, that is only one architecture exists, and the probability is about α i \alpha_i αi. ∂ L ∂ α i ≈ ∂ L ∂ g i ∂ p i ∂ α i \frac{\partial L}{\partial \alpha_i}\approx\frac{\partial L}{\partial g_i}\frac{\partial p_i}{\partial \alpha_i} ∂αi∂L≈∂gi∂L∂αi∂pi, g i = 0 / 1 g_i=0/1 gi=0/1, ∂ L / ∂ g j \partial L/\partial g_j ∂L/∂gj means the influence of architecture j j j to L L L.

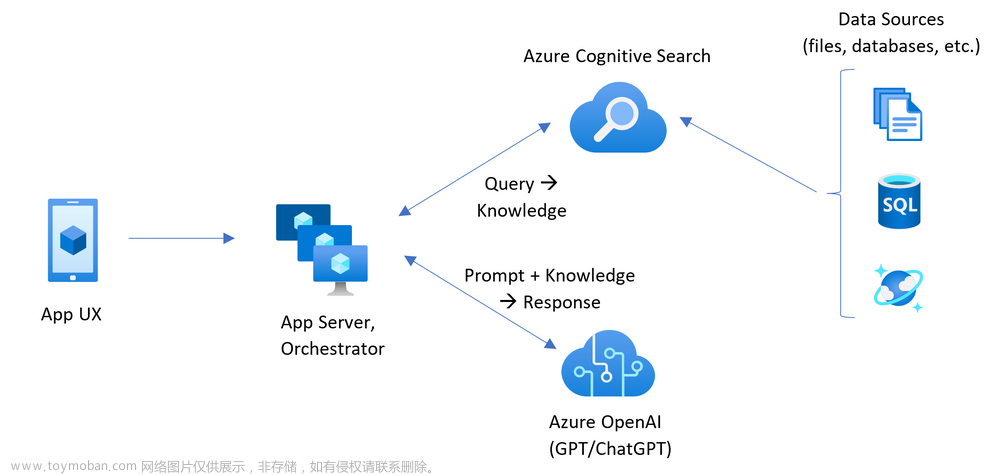

Machine Learning Augmented

Differencial Privacy

-

Randomness is essential.

- If we have a non-trivial deterministic algorithm, we can change data base A A A to B B B one by one until their output is the same. There exists a pair of databases differ by one row, then we know about that row.

-

Database: histogram N ∣ X ∣ N^{|X|} N∣X∣ for discrete set X X X(the categories).

-

M M M is ( ϵ , δ ) (\epsilon,\delta ) (ϵ,δ)-differentially privacy if ∀ x , y ∈ N ∣ X ∣ , ∣ x − y ∣ 1 ≤ 1 , S ⊆ M ( N ∣ X ∣ ) \forall x,y\in N^{|X|},|x-y|_1\le 1,S\subseteq M(N^{|X|}) ∀x,y∈N∣X∣,∣x−y∣1≤1,S⊆M(N∣X∣)

P ( M ( x ) ∈ S ) ≤ e ϵ P ( M ( y ) ∈ S ) + δ P(M(x)\in S)\le e^{\epsilon}P(M(y)\in S)+\delta P(M(x)∈S)≤eϵP(M(y)∈S)+δ -

Differencial privacy is immune to post-processing. Proof:

- For deterministic function: Proved easily by inverse function

- Random function is convex combination of deterministic function, that is, each deterministic function is chosen with some probability.

-

With ( ϵ , 0 ) (\epsilon,0) (ϵ,0)-differential privacy(DP mechanism), the voting result will not change too much by changing one’s vote.

E a ∼ f ( M ( x ) ) [ u i ( a ) ] = ∑ a ∈ A u i ( a ) Pr f ( M ( x ) ) [ a ] ≤ ∑ a ∈ A u i ( a ) exp ( ϵ ) Pr f ( M ( y ) ) [ a ] = exp ( ϵ ) E a ∼ f ( M ( y ) ) [ u i ( a ) ] \begin{align*} E_{a\sim f(M(x))}[u_i(a)]&=\sum_{a\in A} u_i(a)\Pr_{f(M(x))}[a]\\&\le \sum_{a\in A}u_i(a)\exp(\epsilon)\Pr_{f(M(y))}[a]\\&=\exp(\epsilon)E_{a\sim f(M(y))}[u_i(a)] \end{align*} Ea∼f(M(x))[ui(a)]=a∈A∑ui(a)f(M(x))Pr[a]≤a∈A∑ui(a)exp(ϵ)f(M(y))Pr[a]=exp(ϵ)Ea∼f(M(y))[ui(a)]

f f f is a map to the feature, and we only consider the expected voting utility u i ( a ) u_i(a) ui(a) about the feature a a a. -

Laplace Mechanism: reach ( ϵ , 0 ) (\epsilon,0) (ϵ,0)-DP by just adding a random gaussian noise to f ( x ) , x ∈ N ∣ X ∣ f(x),x\in N^{|X|} f(x),x∈N∣X∣

-

Assume f : N ∣ X ∣ → R k f:N^{|X|}\to \R^{k} f:N∣X∣→Rk

-

Definition: M L ( x , f , ϵ ) = f ( x ) + ( Y 1 , . . , Y k ) M_L(x,f,\epsilon)=f(x)+(Y_1,..,Y_k) ML(x,f,ϵ)=f(x)+(Y1,..,Yk)

- Y i Y_i Yi is iid random variable from L a p ( Δ f ϵ ) Lap(\frac{\Delta f }{\epsilon}) Lap(ϵΔf)

- l 1 l_1 l1 sensitive of f f f is Δ f = max x , y , ∣ x − y ∣ 1 ≤ 1 ∣ f ( x ) − f ( y ) ∣ 1 \Delta f=\max_{x,y,|x-y|_1\le 1}|f(x)-f(y)|_1 Δf=maxx,y,∣x−y∣1≤1∣f(x)−f(y)∣1. How sensitive f f f is by changing one entry a little.

- Probability density: L a p ( b ) = 1 2 b exp ( − ∣ x ∣ b ) Lap(b)=\frac{1}{2b}\exp(-\frac{|x|}{b}) Lap(b)=2b1exp(−b∣x∣). Variance σ 2 = 2 b 2 \sigma^2=2b^2 σ2=2b2.

-

Proof. The probability density of M L ( x , f , ϵ ) M_L(x,f,\epsilon) ML(x,f,ϵ) is p x ( z ) = ∏ i = 1 k exp ( − ϵ ∣ f ( x ) i − z i ∣ Δ f ) p_x(z)=\prod_{i=1}^k\exp\left(-\frac{\epsilon|f(x)_i-z_i|}{\Delta f}\right) px(z)=∏i=1kexp(−Δfϵ∣f(x)i−zi∣). Easily prove p x ( z ) / p y ( z ) ≤ exp ( ϵ ) p_x(z)/p_y(z)\le \exp(\epsilon) px(z)/py(z)≤exp(ϵ)文章来源:https://www.toymoban.com/news/detail-808456.html

-

Accuracy: for δ ∈ ( 0 , 1 ] \delta\in(0,1] δ∈(0,1],

Pr [ ∣ f ( x ) − M L ( x , f , ϵ ) ∣ ∞ ≥ ln ( k δ ) ⋅ ( Δ f ϵ ) ] ≤ δ \Pr\left[|f(x)-M_L(x,f,\epsilon)|_\infty\ge \ln\left(\frac{k}{\delta}\right)\cdot \left(\frac{\Delta f}{\epsilon}\right)\right]\le \delta Pr[∣f(x)−ML(x,f,ϵ)∣∞≥ln(δk)⋅(ϵΔf)]≤δ文章来源地址https://www.toymoban.com/news/detail-808456.html- Directly prove by Pr [ ∣ Y ∣ ≥ t ⋅ b ] = exp ( − t ) \Pr[|Y|\ge t\cdot b]=\exp(-t) Pr[∣Y∣≥t⋅b]=exp(−t) for Y ∼ L a p ( b ) Y\sim Lap(b) Y∼Lap(b)

-

Big data

- Idea: data distribution rarely changes.

- Replace B-tree with a model. Know err_min err_max because you only care about the data in your database

- advantage: less storage, faster lookups, more parallelism, hardware accelators

- use linear model(fast)

- Do exponential search since the prediction is good enough

- Bloom Filter: is the key in my set=>maybe yes/no

Low Rank Apporximation

- A ∈ R n × m A\in \mathbb{R}^{n\times m} A∈Rn×m, [ A ] k = arg min r a n k ( A ′ ) ≤ k ∣ A − A ′ ∣ F [A]_k=\arg \min_{rank(A')\le k} |A-A'|_F [A]k=argminrank(A′)≤k∣A−A′∣F

- Learn S ∈ R p × m S\in \mathbb{R}^{p\times m} S∈Rp×m and do low rank decomposition for S A SA SA, then do some recover.

Recified flow

- Given empirical observations about distribution

π

0

\pi_0

π0 and

π

1

\pi_1

π1. Find a transport map

T

T

T that

T

(

Z

)

∼

π

1

T(Z)\sim \pi_1

T(Z)∼π1 for

Z

∼

π

0

Z\sim \pi_0

Z∼π0.

- E.g. π 0 \pi_0 π0 is gaussian noise, π 1 \pi_1 π1 are data containing pictures. We want to find a map from noise to picture(diffusion) that has some features.

- If T ( X 0 ) = X 1 T(X_0)=X_1 T(X0)=X1, then we want v ( t X 0 + ( 1 − t ) X 1 , t ) = d X d t = X 1 − X 0 v(tX_0+(1-t)X_1,t)=\frac{dX}{dt}=X_1-X_0 v(tX0+(1−t)X1,t)=dtdX=X1−X0. The transformation is linear. So that one cluster will map to another clusters.

- Minimize the loss E [ ∥ ( X 1 − X 0 ) − v ( X t , t ) ∥ 2 ] \mathbb{E}[\|(X_1-X_0)-v(X_t,t)\|^2] E[∥(X1−X0)−v(Xt,t)∥2]

- Algorithm:

- randomly connect π 0 , π 1 \pi_0,\pi_1 π0,π1

- minimize loss of $\theta $ for v θ v_\theta vθ

- connect π 0 , π 1 \pi_0,\pi_1 π0,π1 by v θ v_\theta vθ again, and repeat minimization.

Rope Attention

- Embed position to

q

m

,

k

n

q_m,k_n

qm,kn for attention by rotation in complex field

- β \beta β-base system: focus on different accuracy level

- use inner product of q m , k n q_m,k_n qm,kn to represent their similarity

- Softmax attention Attention ( Q , K , V ) = softmax ( Q K ⊤ ) V \text{Attention}(Q,K,V)=\text{softmax}(QK^\top)V Attention(Q,K,V)=softmax(QK⊤)V

- Faster computation

- linear

- softmax separately

- design Q K ⊤ QK^\top QK⊤

到了这里,关于【Machine Learning】Other Stuff的文章就介绍完了。如果您还想了解更多内容,请在右上角搜索TOY模板网以前的文章或继续浏览下面的相关文章,希望大家以后多多支持TOY模板网!

![[machine Learning]强化学习](https://imgs.yssmx.com/Uploads/2024/02/706328-1.png)

![[Machine Learning][Part 8]神经网络的学习训练过程](https://imgs.yssmx.com/Uploads/2024/02/742943-1.png)