AGI之Agent:《Agent AI: Surveying the Horizons of Multimodal Interaction智能体AI:多模态交互视野的考察》翻译与解读

导读:这篇文章探讨了一种新的多模态智能代理体系结构,该体系结构可感知视觉刺激、语言输入和其他环境相关数据,并产生有意义的实体动作。

>> 文章提出,随着深度学习的发展,语言模型和视觉语言模型在某些任务上显示出超人水平的能力。然而,这些模型通常难以在物理环境中产生实体动作。为此,文章提出了一种多模态智能代理框架,将语言模型和视觉语言模型纳入一个统一的系统架构中,以产生实体动作。该框架主要包含以下要点:

>> 整合各种感知模块,例如视觉、语音和传感器输入,以满足环境交互的需求。

>> 基于深度学习算法训练智能代理,使其可以理解多种模态输入,并生成有意义的实体操作。

>> 提供一个统一的接口,支持语言、视觉和其他类型的输入,并产生相应的输出。

>> 引入记忆模块,记录环境交互的历史信息,支持长期规划。

>> 设计一个通用的智能代理转换器结构,直接使用语言和视觉协处理 Tokens 作为输入,支持端到端训练。

>> 将此框架应用于游戏、机器人以及医疗保健等不同领域,验证其广泛适用性。

总体来说,该框架的目标是建立一个全面性的多模态智能代理体系,允许基于深度学习技术整合不同感知模块,生成环境相符的动作,以实现良好的环境交互能力。未来还需要开展额外工作,弥补当前模型在解释能力、公平性和安全性等方面的不足。

目录

《Agent AI: Surveying the Horizons of Multimodal Interaction智能体AI:多模态交互视野的考察》翻译与解读

Abstract摘要

>> 多模态人工智能:多模态人工智能系统将无处不在

>> 具身智能体:当前系统以现有基础模型为创建具身智能体的基本构建模块。通过感知用户动作、人类行为、环境物体、音频表达和场景情感,系统可用于引导代理在给定环境中的响应。

>> 减轻幻觉:通过发展在有根基环境中的具体人工智能系统,可以减轻大型基础模型幻觉和生成环境不正确输出的倾向。

>> 虚拟现实:Agent工智能这一新兴领域涵盖了多模态交互的更广泛的具体和代理方面,未来人们可轻松创建虚拟现实或模拟场景,并与嵌入虚拟环境中的代理进行互动。

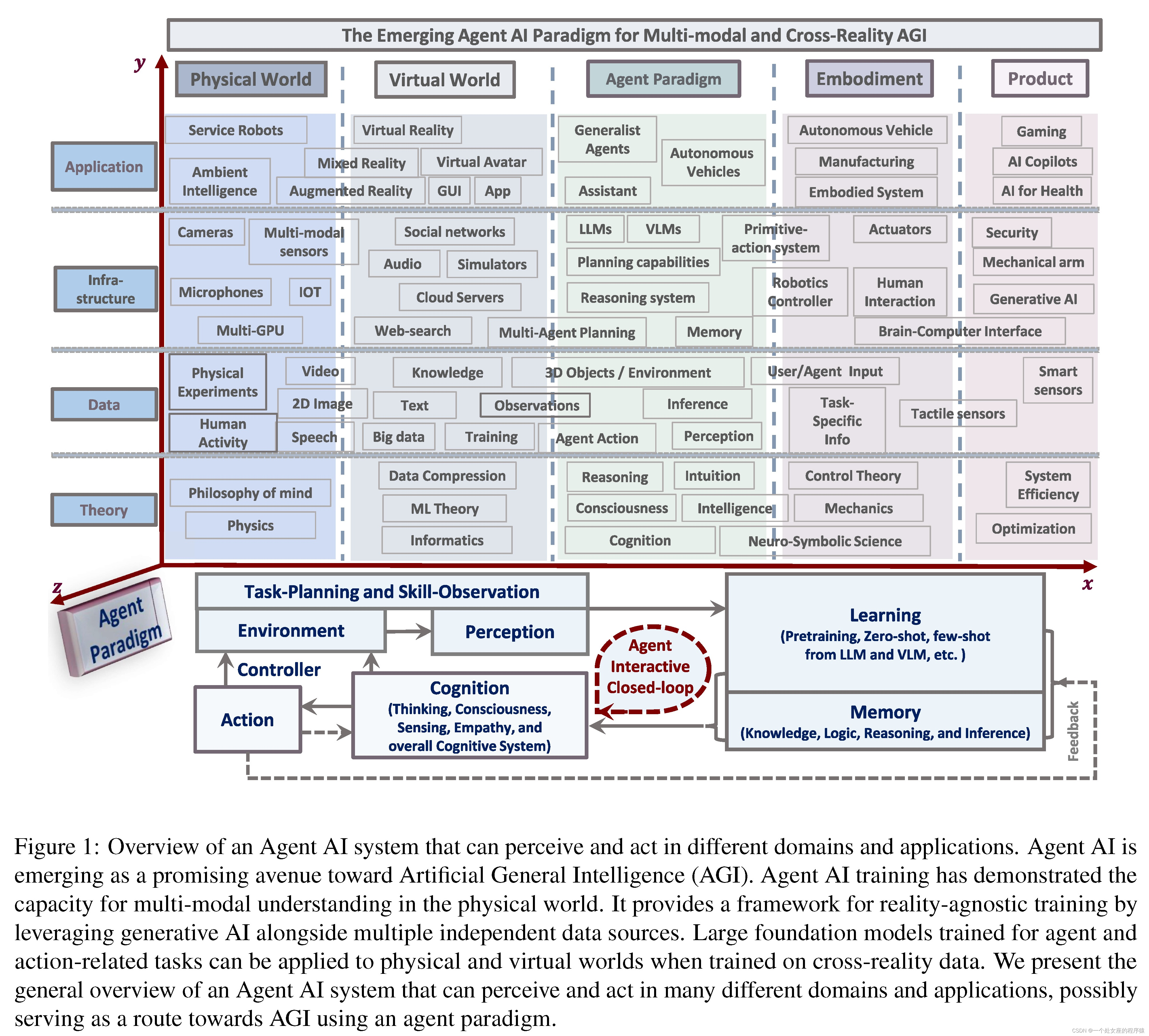

图1:基于不同领域和应用程序中感知和行动的Agent AI系统概述

1 Introduction

1.1 Motivation动机

人工智能历史演进:从1956年达特茅斯会议定义的AI系统为人工生命形式开始,经历分化和问题解决的过程,但过度简化模糊了AI研究的总体目标。

重返AI基础与整体主义:为了超越现状,需要回归亚里士多德整体主义的AI基本原理,而近年来大型语言模型(LLMs)和视觉语言模型(VLMs)的革命为此提供了可能性。

综合模型的探索与潜在综合:利用LLMs和VLMs,探索整合语言能力、视觉认知、上下文记忆、直觉推理和适应性的模型,并探讨使用这些模型进行整体综合的潜在可能性。

AI模型的巨大潜力与转变、代理中心AI的影响:利用LLMs和VLMs作为代理,特别是在游戏、机器人技术和医疗领域,不仅为AI系统提供了严格的评估平台,还预示了对社会和产业的转变影响。

技术与伦理的交织:AI的巨大进步将与技术和伦理层面相互交织,这需要综合考虑

1.2 Background背景

大型基础模型:LLM和VLM推动了建立通用智能机的工作

Embodied AI:一些研究利用LLM进行任务规划,利用LLM的强大知识和零打样本体现能力进行复杂任务规划(关键词:LLM、任务规划)

交互学习AI:AI同时利用机器学习和用户交互来学习,初期在大数据集上训练,然后通过用户反馈和观察学习来改进(关键词:机器学习、用户交互、学习改进)

1.3 Overview

模态代理性AI(MAA):根据多模态感知输入在给定环境下生成有效行为

研究方法:详细例子介绍如何利用LLM和VLM提升MAA,通过游戏、机器人和医疗案例阐述

性能评估:重点关注MAA在相关数据集上的有效性和泛化能力

伦理考量:讨论部署代理性AI对社会影响和倫理问题,强调负责任开发实践

2 Agent AI IntegrationAgentAI集成

2.1 Infinite AI agent无限人工智能代理

AI代理系统的四大能力:预测、决策、处理歧义、持续改进

无限智能体(Infinite Agent):可以不断地学习并适应新任务,而无需为每个新任务收集大量的训练数据,比如RoboGen

Figure 2: The multi-model agent AI for 2D/3D embodied generation and editing interaction in cross-reality.图2:跨现实中2D/3D嵌入生成和编辑交互的多模型agent AI。

2.2 Agent AI with Large Foundation Models具有大型基础模型的人工智能代理

LLM中的基准数据发挥重要作用:可以检测代理在不同环境下行为限制条件

越来越感兴趣:人们对产生受语言和环境因素影响的有条件的人体动作

2.2.1 Hallucinations幻觉

LLMs中的幻觉:两类幻觉=内在(与原始材料相矛盾)+外在(原始材料未包含的额外信息)

改进方法:RAG或其他方法,旨在通过检索额外的源材料并提供机制来检查生成的响应与源材料之间的矛盾来增强语言生成

VLMs(多模态)中的幻觉:仅依赖预训练模型的内部知识库,无法准确理解世界状态

2.2.2 Biases and Inclusivity偏见和包容性

Agent偏见和包容性的关键方面

训练数据:基础模型从互联网大数据源训练,数据可能包含社会偏见,包括与种族、性别、族裔、宗教等相关的刻板印象和偏见

历史文化偏见:训练数据可能包含历史偏见或不同文化立场,其中可能包含冒犯性或贬损性语言

语言环境限制:模型难以完全理解语言细微差别导致误导,如讽刺、幽默或文化引用

政策和准则: 要确保公平和包容,例如在生成图像时,有规定多样化描绘人物的规则,避免与种族、性别等相关的刻板印象。

不当泛化:模型倾向于根据训练数据模式产生回复,导致过度概括,可能形成立场

持续监测更新:用户反馈和伦理研究帮助解决新出现问题

强化主流观点: 模型可能更偏向主导文化或群体的观点,潜在地低估或误代表少数观点。

道德和包容性设计: 尊重文化差异,促进多样性,并确保AI不延续有害的刻板印象。

用户指南: 引导以促进包容和尊重的方式与AI进行互动,包括避免可能导致有偏见或不适当输出的请求,以减轻模型从用户互动中学到有害材料的可能性。

纠正偏见的努力:

多样和包容的训练数据、偏见检测和修正、道德准则和政策、多样性代表、偏见缓解、文化敏感性、可访问性、语言包容性、道德和尊重的互动、用户反馈和适应、符合包容性准则

Agent AI包容性的首要目标:创建一个尊重所有用户的Agent

2.2.3 Data Privacy and Usage数据隐私和使用

数据收集、使用和目的,存储和安全,数据删除和保留,数据可移植性和隐私政策,匿名化

2.2.4 Interpretability and Explainability可理解性和可解释性

模仿学习→解耦:无限记忆代理通过模仿学习从专家数据中学习策略,改善对未知环境的探索和利用。解耦通过上下文提示或隐式奖励函数学习代理,克服传统模仿学习的缺点,使代理更适应各种情况

解耦→泛化:分离学习过程和任务奖励函数,通过模仿专家行为学习策略,使模型能在不同任务和环境中适应和泛化

泛化→新兴行为:从简单规则或元素间的互动中产生复杂行为,模型在不同复杂层面上学习普适原则,促进跨领域知识迁移,导致涌现特性

Figure 3: Example of the Emergent Interactive Mechanism using an agent to identify text relevant to the image from candidates. The task involves using a multi-modal AI agent from the web and human-annotated knowledge interaction samples to incorporate external world information.图3:使用代理识别候选图像相关文本的紧急交互机制示例。该任务涉及使用来自网络的多模态AI代理和人类注释的知识交互样本来整合外部世界信息。

2.2.5 Inference Augmentation推理增强

数据增强:引入额外数据提供更多上下文,帮助AI在不熟悉领域做出更明智推理。

算法增强:改进AI基础算法以更精准推理,包括更先进的机器学习模型等。

人机协作:在关键领域如伦理和创造性任务中,人类指导可补充AI推理

实时反馈集成:利用实时用户或环境反馈提高推理性能,如AI根据用户反馈调整推荐

跨领域知识迁移:将一个领域的知识应用于另一个领域以改善推理

特定用例定制:针对特定应用或行业定制AI推理能力,如法律分析、医疗诊断。

伦理和偏见考虑:确保增强过程不引入新偏见或伦理问题,考虑数据源和算法对公平性的影响

持续学习和适应:定期更新AI能力,以跟上发展、数据变化和用户需求

2.2.6 Regulation监管

开发利用LLM或VLM的下一代AI驱动的人机交互管道:与机器的有效沟通和互动,对话能力来识别并满足人类需求,并采用提示工程、高层级验证来限制

Figure 4: A robot teaching system developed in (Wake et al., 2023c). (Left) The system workflow. The process involves three steps: Task planning, where ChatGPT plans robotic tasks from instructions and environmental information; Demonstration, where the user visually demonstrates the action sequence. All the steps are reviewed by the user, and if any step fails or shows deficiencies, the previous steps can be revisited as necessary. (Right) A web application that enables uploading of demonstration data and the interaction between the user and ChatGPT.图4:在(Wake et al., 2023c)开发的机器人教学系统。(左)系统工作流程。这个过程包括三个步骤:任务规划,ChatGPT根据指令和环境信息计划机器人的任务;演示,用户直观地演示操作顺序。所有步骤都由用户审查,如果任何步骤失败或显示缺陷,则可以根据需要重新访问前面的步骤。(右)一个网络应用程序,可以上传演示数据和用户与ChatGPT之间的交互。

2.3 Agent AI for Emergent Abilities涌现能力的人工智能代理

提出一种利用通用模型知识实现人机新交互场景的方法,探索混合现实下知识推断机制协助复杂环境任务解决

Figure 5: Our proposed new agent paradigm for a multi-modal generalist agent. There are 5 main modules as shown in the figures: 1) Environment and Perception with task-planning and skill observation; 2) Agent learning; 3) Memory; 4) Agent action; 5) Cognition.图5:我们为多模态多面手智能体提出的新智能体范式。如图所示,主要有5个模块:1)环境与感知,包含任务规划和技能观察;2) Agent学习;3)内存;4)代理行为;5)认知。

3 Agent AI Paradigm人工智能代理范式

提出训练Agent AI的新范式和框架:旨在利用预训练模型和策略,支持长期任务规划,包括记忆框架和环境反馈

3.1 LLMs and VLMs大语言模型 (LLMs) 和大视觉语言模型 (VLMs)

使用LLM和VLM模型引导Agent组件:提供任务规划、世界知识、逻辑推理等功能,利用LLM语言模型和VLM视觉模型为代理各组件提供初始化

3.2 Agent Transformer Definition—Agent Transformer 定义

Agent Transformer定义:是一种替代使用冻结LLMs和VLMs的方法,通过单个模型接受视觉、语言和Agent tokens作为输入

Agent Transformer优势:相对于大型专有LLMs的优势包括定制能力、解释性和潜在的成本优势

Figure 6: We show the current paradigm for creating multi-modal AI agents by incorporating a Large Language Model (LLM) with a Large Vision Model (LVM). Generally, these models take visual or language inputs and use pre-trained and frozen visual and language models, learning smaller sub-network that connect and bridge modalities. Examples include Flamingo (Alayrac et al., 2022), BLIP-2 (Li et al., 2023c), InstructBLIP (Dai et al., 2023), and LLaVA (Liu et al., 2023c).

Figure 7: The unified agent multi-modal transformer model. Instead of connecting frozen submodules and using existing foundation models as building blocks, we propose a unified and end-to-end training paradigm for agent systems. We can still initialize the submodules with LLMs and LVMs as in Figure 6 but also make use of agent tokens, specialized tokens for training the model to perform agentic behaviors in a specific domain (e.g., robotics). For more details about agent tokens, see Section 3.2

3.3 Agent Transformer CreationAgentTransformer 创建

创建过程:利用基础模型数据训练Agent Transformer,明确目标和行为空间。

持续改进:监控模型表现,收集反馈以进行微调和更新,确保无偏见和不道德结果

4 Agent AI Learning人工智能代理 学习

4.1 Strategy and Mechanism策略和机制

4.1.1 Reinforcement Learning (RL)强化学习

4.1.2 Imitation Learning (IL)模仿学习

4.1.3 Traditional RGB传统 RGB

利用图像强化智能代理行为的研究方向,增加数据或加入模型偏置等方法改进样本效率问题。

4.1.4 In-context Learning上下文学习

4.1.5 Optimization in the Agent SystemAgent系统中的优化

4.2 Agent Systems (zero-shot and few-shot level) Agent系统 (零样本和少样本水平)

4.2.1 Agent ModulesAgent模块

4.2.2 Agent InfrastructureAgent基础设施

4.3 Agentic Foundation Models (pretraining and finetune level)主观基础模型 (预训练和微调水平)

5 Agent AI Categorization人工智能代理分类

5.1 Generalist Agent Areas通用Agent领域

5.2 Embodied Agents具身Agent

5.2.1 Action Agents行动Agent

5.2.2 Interactive Agents互动Agent

5.3 Simulation and Environments Agents模拟和环境Agent

5.4 Generative Agents生成Agent

5.4.1 AR/VR/mixed-reality Agents基于AR/VR/混合现实Agent

5.5 Knowledge and Logical Inference Agents知识和逻辑推理Agent

5.5.1 Knowledge Agent

5.5.3 Agents for Emotional Reasoning情感推理Agent

5.5.4 Neuro-Symbolic Agents神经符号Agent

5.6 LLMs and VLMs Agent基于LLMs 和 VLMs Agent

6 Agent AI Application Tasks AgentAI应用任务

6.1 Agents for Gaming游戏Agent

6.1.1 NPC Behavior基于NPC 行为

Figure 8: The embodied agent for user interactive gaming action prediction and interactive editing with Minecraft Dungeons gaming sense simulation and generation via GPT-4V.

6.1.2 Human-NPC Interaction人-NPC 互动

6.1.3 Agent-based Analysis of Gaming游戏的基于Agent的分析

Figure 9: GPT-4V can effectively predict the high-level next actions when given the “action history" and a “gaming target" in the prompt. Furthermore, GPT-4V accurately recognized that the player is holding wooden logs in their hand and can incorporate this perceived information into its plan for future actions. Although GPT-4V appears to be capable of predicting some low-level actions (such as pressing ‘E‘ to open the inventory), the model’s outputs are not inherently suitable for raw low-level action prediction (including mouse movements) and likely requires supplemental modules for low-level action control.

6.1.4 Scene Synthesis for Gaming游戏场景合成

Figure 10: Masked video prediction on unseen Minecraft videos. From left to right: the original frame, the masked frame, the reconstructed frame, and the reconstructed frame with patches.

6.1.5 Experiments and Result实验和结果

Figure 11: The low-level next step action prediction with the small agent pretraining model in gaming Minecraft scene.

Figure 12: The MindAgent of in-context learning gaming Infrastructure. Planning Skill and Tool Use: The game environment requires diverse planning skills and tool use to complete tasks. It generates relevant game information and converts the game data into a structured text format that the LLMs can process. LLM: The main workhorse of our infrastructure makes decisions, thus serving as a dispatcher for the multi-agent system. Memory History: A storage utility for relevant information. Action Module: Extracts actions from text inputs and convertd them into domain-specific language and validates DSLs so that they cause no errors during execution.

Figure 13: Overview of the robot teaching system that integrates a ChatGPT-empowered task planner. The process involves two steps: Task planning, where the user employs the task planner to create an action sequence and adjusts the result through feedback as necessary, and Demonstration, where the user visually demonstrates the action sequence to provide information needed for robot operation. The vision system collects visual parameters that will be used for robot execution.

Figure 14: Example of adjusting an output sequence through auto-generated feedback. We use an open-sourced simulator, VirtualHome for the experiment. Given an instruction “Take the pie on the table and warm it using the stove.,” the task planner plans a sequence of functions that are provided in VirtualHome. If an error in execution is detected, the task planner correct its output based on the auto-generated error message.

6.2 Robotics机器人学

6.2.1 LLM/VLM Agent for Robotics用于机器人学的LLM/VLMAgent

6.2.2 Experiments and Results实验和结果

Figure 15: Overview of the multimodal task planner that leverages GPT-4V and GPT-4. The system processes video demonstrations and text instructions, generating task plans for robotic execution.

Figure 16: Examples of the output of the video analyzer. The five frames are extracted at regular intervals and fed into GPT-4V. We describe the entire pipeline in Section 6.2.2.

Figure 17: Examples of the outputs of the scene analyzer that leverages GPT-4V. We describe our entire pipeline in Section 6.2.2.

Figure 18: Demonstration of embodied agent for the VLN task (Wang et al., 2019). The instruction, the local visual scene, and the global trajectories in a top-down view is shown. The agent does not have access to the top-down view. Path A is the demonstration path following the instruction. Path B and C are two different paths executed by the agent.

6.3 Healthcare医疗保健

6.3.1 Current Healthcare Capabilities 当前医疗保健能力

6.4 Multimodal Agents多模态Agent

6.4.1 Image-Language Understanding and Generation图像-语言理解与生成

Figure 19: Example prompts and responses when using GPT-4V within the domain of healthcare image understanding. From left to right: (1) an image of a nurse and doctor conducting a CT scan, (2) a synthetic image of an irregular EKG scan, and (3) an image from the ISIC (Codella et al., 2018) skin lesion dataset. We can see that GPT-4V possesses significant medical knowledge and is able to reason about medical images. However, due to safety training, it is unable to make diagnoses for some medical images.

6.4.2 Video and Language Understanding and Generation视频和语言理解与生成

Figure 20: Example prompts and responses when using GPT-4V within the domain of healthcare video understanding. We input the example videos as 2x2 grids with overlaid text indicating the order of frames. In the first two examples, we prompt GPT-4V to examine the frames in the video to detect the clinical bedside activities performed on the volunteer patients. For the final example, we attempt to prompt GPT-4V to assess an echocardiogram video, however due to GPT-4V’s safety training, it does not provide a detailed response. For clarity, we bold text that describes the activity of interest, and abbreviate model responses that are unnecessary. We gray-out faces from the individuals to preserve their privacy.

Figure 21: Interactive multimodal agents include four main pillars: Interaction, Speech, Vision, and Language. Co-pilot agents are made up of different services. 1) Interaction services help make a unified platform for automated actions, cognition, and decision-making. 2) Audio services integrate audio and speech processing into apps and services. 3) Vision services identify and analyze content within images, videos, and digital ink. 4) Language services extract meaning from structured and unstructured text.

Figure 22: Example of Intensive Neural Knowledge (INK) (Park et al., 2022) task that uses knowledge to identify text relevant to the image from a set of text candidates. Our task involves leveraging visual and text knowledge retrieved from web and human-annotated knowledge.

Figure 23: The KAT model (Gui et al., 2022a) uses a contrastive-learning-based module to retrieve knowledge entries from an explicit knowledge base and uses GPT-3 to retrieve implicit knowledge with supporting evidence. The integration of knowledge is processed by the respective encoder transformer and jointly with reasoning module and the decoder transformer via end-to-end training for answer generation.

6.4.3 Experiments and Results实验和结果

Figure 24: The overall architecture of the VLC model (Gui et al., 2022b). Our model consists of three modules: (1) Modality-specific projection. We use a simple linear projection to embed patched images and a word embedding layer to embed tokenized text; (2) Multi-modal encoder. We use a 12-layer ViT (Dosovitskiy et al., 2021) initialized from MAE (He et al., 2022) (ImageNet-1K without labels) as our backbone; (3) Task-specific decoder. We learn our multi-modal representations by masked image/language modeling and image-text matching which are only used during pre-training. We use a 2-layer MLP to fine-tune our multi-modal encoder for downstream tasks. Importantly, we find that the masked image modeling objective is important throughout second-stage pre-training, not only for initialization of the visual transformer.

Figure 25: Example prompts and responses when using a video fine-tuned variant of InstructBLIP (method described in Section 6.5). Our model is able to produce long-form textual responses that describe scenes and is able to answer questions related to the temporality of events in the videos.

Figure 26: The audio-multimodal agent described in Section 6.5. Hallucinated content are highlighted in red. We use GPT-4V to generate 1) the videochat summary with video frames; 2) the video summary with the frame captions; 3) the video summary with frame captioning and audio information.

Figure 27: An interactive multimodal agent that incorporates visual, audio, and text modalities for video understanding. Our pipeline mines hard negative hallucinations to produce difficult queries for the VideoAnalytica challenge. More the related details of interactive audio-video-language agent dataset are described in Section 9.2.

6.5 Video-language Experiments视频语言实验

6.6 Agent for NLP基于 NLP Agent

6.6.1 LLM agent基于LLM Agent

工具使用:整合外部知识增强理解,利用外部整合外部知识库、网络搜索等工具提升AI代理的理解和响应准确性

提升推理规划能力:增强AI代理的推理和规划能力,理解复杂指令,预测未来情景。

反馈整合:融合系统和人类反馈,通过学习改进,自适应学习机制,优化代理策略

6.6.2 General LLM agent通用LLMAgent

深入理解生成对话:加入知识检索步骤

Figure 28: The training recipe used to train the Alpaca model (Taori et al., 2023). At a high level, existing LLMs are used to generate a large pool of instruction-following examples from a smaller set of seed tasks. The generated instruction-following examples are then used to instruction-tune an LLM where the underlying model weights are available.

Figure 29: The logic transformer agent model (Wang et al., 2023e). We integrate a logical reasoning module into the transformer-based abstractive summarization model in order to endow the logic agent the ability to reason over text and dialogue logic, so that it can generate better-quality abstractive summarizations and reduce factuality errors.

Figure 30: Architecture of one proposed NLP agent (Wang et al., 2023g) mutual learning framework. In each epoch, Phase 1 and Phase 2 are executed alternately. During Phase 1, the parameters of the reader model remain fixed, and only the weights of the knowledge selector are updated. Conversely, during Phase 2, the reader model’s parameters are adjusted, while the knowledge selector’s weights remain frozen.

6.6.3 Instruction-following LLM agents遵循指令的LLMAgent

指令遵循LLM:通过强化学习或指令调整训练AI代理有效遵循人类指令。

6.6.4 Experiments and Results实验和结果

逻辑感知模型:构建逻辑感知的输入嵌入,提升Transformer语言模型的逻辑理解能力。

知识选择器:提出互学习框架,通过知识选择器代理优化检索-阅读模型的性能。

7 Agent AI Across Modalities, Domains, and Realities 跨模态、领域和现实的人工智能代理

7.1 Agents for Cross-modal Understanding 用于跨模态理解的Agent

7.2 Agents for Cross-domain Understanding用于跨领域理解的Agent

7.3 Interactive agent for cross-modality and cross-reality用于跨模态和跨现实的互动Agent

7.4 Sim to Real Transfer从模拟到真实的转移

8 Continuous and Self-improvement for Agent 人工智能代理 AI 的持续和自我改进

8.1 Human-based Interaction Data基于人类交互数据

8.2 Foundation Model Generated Data 基础模型生成的数据

9 Agent Dataset and LeaderboardAgent数据集和排行榜

9.1 “CuisineWorld” Dataset for Multi-agent Gaming多Agent游戏数据集

9.1.1 Benchmark基准

9.1.2 Task任务

9.1.3 Metrics and Judging指标和评判

9.1.4 Evaluation评估

9.2 Audio-Video-Language Pre-training Dataset音频-视频-语言预训练数据集

10 Broader Impact Statement更广泛的影响声明

11 Ethical Considerations道德考虑

12 Diversity Statement多样性声明

《Agent AI: Surveying the Horizons of Multimodal Interaction智能体AI:多模态交互视野的考察》翻译与解读

| 地址 |

论文地址:https://arxiv.org/abs/2401.03568 |

| 时间 |

2024年1月7日 |

| 作者 |

Zane Durante, Qiuyuan Huang, Naoki Wake, Ran Gong, Jae Sung Park, Bidipta Sarkar, Rohan Taori, Yusuke Noda, Demetri Terzopoulos, Yejin Choi, Katsushi Ikeuchi, Hoi Vo, Li Fei-Fei, Jianfeng Gao 1斯坦福大学;2微软研究院,雷德蒙德; 3加州大学洛杉矶分校;4华盛顿大学;5微软游戏 |

Abstract摘要

>> 多模态人工智能:多模态人工智能系统将无处不在

>> 具身智能体:当前系统以现有基础模型为创建具身智能体的基本构建模块。通过感知用户动作、人类行为、环境物体、音频表达和场景情感,系统可用于引导代理在给定环境中的响应。

>> 减轻幻觉:通过发展在有根基环境中的具体人工智能系统,可以减轻大型基础模型幻觉和生成环境不正确输出的倾向。

>> 虚拟现实:Agent工智能这一新兴领域涵盖了多模态交互的更广泛的具体和代理方面,未来人们可轻松创建虚拟现实或模拟场景,并与嵌入虚拟环境中的代理进行互动。

| Multi-modal AI systems will likely become a ubiquitous presence in our everyday lives. A promising approach to making these systems more interactive is to embody them as agents within physical and virtual environments. At present, systems leverage existing foundation models as the basic building blocks for the creation of embodied agents. Embedding agents within such environments facilitates the ability of models to process and interpret visual and contextual data, which is critical for the creation of more sophisticated and context-aware AI systems. For example, a system that can perceive user actions, human behavior, environmental objects, audio expressions, and the collective sentiment of a scene can be used to inform and direct agent responses within the given environment. To accelerate research on agent-based multimodal intelligence, we define “Agent AI” as a class of interactive systems that can perceive visual stimuli, language inputs, and other environmentally-grounded data, and can produce meaningful embodied action with infinite agent. In particular, we explore systems that aim to improve agents based on next-embodied action prediction by incorporating external knowledge, multi-sensory inputs, and human feedback. We argue that by developing agentic AI systems in grounded environments, one can also mitigate the hallucinations of large foundation models and their tendency to generate environmentally incorrect outputs. The emerging field of Agent AI subsumes the broader embodied and agentic aspects of multimodal interactions. Beyond agents acting and interacting in the physical world, we envision a future where people can easily create any virtual reality or simulated scene and interact with agents embodied within the virtual environment. |

多模态人工智能系统可能会在我们的日常生活中无处不在。使这些系统更具互动性的一种有希望的方法是将它们作为物理和虚拟环境中的代理。目前,系统利用现有的基础模型作为创建具身智能体的基本构建块。在这样的环境中嵌入代理有助于模型处理和解释视觉和上下文数据的能力,这对于创建更复杂和上下文感知的人工智能系统至关重要。例如,可以感知用户动作、人类行为、环境对象、音频表达和场景的集体情感的系统,可以用于通知和指导给定环境中的代理响应。 为了加速基于Agent的多模态智能的研究,我们将“Agent AI”定义为一类能够感知视觉刺激、语言输入和其他基于环境的数据,并能产生有意义的具有无限Agent的具体动作的交互系统。 特别是,我们探索了旨在通过结合外部知识、多感官输入和人类反馈来改进基于下一体现动作预测的智能体的系统。我们认为,通过在基础环境中开发Agent工智能系统,还可以减轻大型基础模型的幻觉及其产生环境错误输出的倾向。Agent AI的新兴领域包含了多模态交互的更广泛的具体化和代理方面。 除了代理在物理世界中行动和互动之外,我们设想未来人们可以轻松地创建任何虚拟现实或模拟场景,并与嵌入虚拟环境中的代理进行交互。 |

图1:基于不同领域和应用程序中感知和行动的Agent AI系统概述

| Figure 1: Overview of an Agent AI system that can perceive and act in different domains and applications. Agent AI is emerging as a promising avenue toward Artificial General Intelligence (AGI). Agent AI training has demonstrated the capacity for multi-modal understanding in the physical world. It provides a framework for reality-agnostic training by leveraging generative AI alongside multiple independent data sources. Large foundation models trained for agent and action-related tasks can be applied to physical and virtual worlds when trained on cross-reality data. We present the general overview of an Agent AI system that can perceive and act in many different domains and applications, possibly serving as a route towards AGI using an agent paradigm. |

图1:可以在不同领域和应用程序中感知和行动的Agent AI系统概述。Agent工智能(Agent AI)正在成为通用人工智能(AGI)的一个有前途的途径。AI代理的训练已经证明了在物理世界中进行多模态理解的能力。它通过利用生成式人工智能以及多个独立数据源,为现实无关训练提供了一个框架。当在跨现实数据上训练时,为Agent和行动相关任务训练的大型基础模型可以应用于物理和虚拟世界。我们介绍了Agent AI系统的总体概述,该系统可以在许多不同的领域和应用中感知和行动,可能作为使用Agent范式实现AGI的途径。 |

1 Introduction

1.1 Motivation动机

人工智能历史演进:从1956年达特茅斯会议定义的AI系统为人工生命形式开始,经历分化和问题解决的过程,但过度简化模糊了AI研究的总体目标。

| Historically, AI systems were defined at the 1956 Dartmouth Conference as artificial life forms that could collect information from the environment and interact with it in useful ways. Motivated by this definition, Minsky’s MIT group built in 1970 a robotics system, called the “Copy Demo,” that observed “blocks world” scenes and successfully reconstructed the observed polyhedral block structures. The system, which comprised observation, planning, and manipulation modules, revealed that each of these subproblems is highly challenging and further research was necessary. The AI field fragmented into specialized subfields that have largely independently made great progress in tackling these and other problems, but over-reductionism has blurred the overarching goals of AI research. |

从历史上看,人工智能系统在1956年达特茅斯会议上被定义为能够从环境中收集信息并以有用的方式与之互动的人工生命形式。受这一定义的启发,明斯基在麻省理工学院的研究小组于1970年建立了一个名为“复制演示”的机器人系统,该系统可以观察“积木世界”场景,并成功地重建了观察到的多面体积木结构。该系统由观察、规划和操作模块组成,表明每个子问题都极具挑战性,需要进一步研究。人工智能领域被分割成专门的子领域,这些子领域在解决这些问题和其他问题方面取得了很大的进展,但过度简化主义模糊了人工智能研究的总体目标。 |

重返AI基础与整体主义:为了超越现状,需要回归亚里士多德整体主义的AI基本原理,而近年来大型语言模型(LLMs)和视觉语言模型(VLMs)的革命为此提供了可能性。

| To advance beyond the status quo, it is necessary to return to AI fundamentals motivated by Aristotelian Holism. Fortunately, the recent revolution in Large Language Models (LLMs) and Visual Language Models (VLMs) has made it possible to create novel AI agents consistent with the holistic ideal. Seizing upon this opportunity, this article explores models that integrate language proficiency, visual cognition, context memory, intuitive reasoning, and adaptability. It explores the potential completion of this holistic synthesis using LLMs and VLMs. In our exploration, we also revisit system design based on Aristotle’s Final Cause, the teleological “why the system exists”, which may have been overlooked in previous rounds of AI development. |

为了超越现状,有必要回到由亚里士多德整体主义推动的人工智能基础。幸运的是,最近大型语言模型(llm)和视觉语言模型(vlm)的革命使得创建符合整体理想的新型AI代理成为可能。抓住这个机会,本文探讨了整合语言能力、视觉认知、上下文记忆、直觉推理和适应性的模型。它探讨了使用llm和vlm完成这种整体合成的可能性。在我们的探索中,我们还重新审视了基于亚里士多德最终原因的系统设计,即目的论的“系统存在的原因”,这可能在前几轮人工智能开发中被忽视了。 |

综合模型的探索与潜在综合:利用LLMs和VLMs,探索整合语言能力、视觉认知、上下文记忆、直觉推理和适应性的模型,并探讨使用这些模型进行整体综合的潜在可能性。

| With the advent of powerful pretrained LLMs and VLMs, a renaissance in natural language processing and computer vision has been catalyzed. LLMs now demonstrate an impressive ability to decipher the nuances of real-world linguistic data, often achieving abilities that parallel or even surpass human expertise (OpenAI, 2023). Recently, researchers have shown that LLMs may be extended to act as agents within various environments, performing intricate actions and tasks when paired with domain-specific knowledge and modules (Xi et al., 2023). These scenarios, characterized by complex reasoning, understanding of the agent’s role and its environment, along with multi-step planning, test the agent’s ability to make highly nuanced and intricate decisions within its environmental constraints (Wu et al., 2023; Meta Fundamental AI Research (FAIR) Diplomacy Team et al., 2022). |

随着强大的预训练llm和vlm的出现,自然语言处理和计算机视觉的复兴已经被催化。法学硕士现在展示了一种令人印象深刻的能力,可以破译现实世界语言数据的细微差别,通常可以达到与人类专业知识相当甚至超越的能力(OpenAI, 2023)。最近,研究人员已经表明,llm可以扩展为各种环境中的代理,在与领域特定知识和模块配对时执行复杂的动作和任务(Xi et al., 2023)。这些场景的特点是复杂的推理,对智能体角色及其环境的理解,以及多步骤规划,测试智能体在环境约束下做出高度细微和复杂决策的能力(Wu et al., 2023;元基础人工智能研究(FAIR)外交团队等,2022)。 |

AI模型的巨大潜力与转变、代理中心AI的影响:利用LLMs和VLMs作为代理,特别是在游戏、机器人技术和医疗领域,不仅为AI系统提供了严格的评估平台,还预示了对社会和产业的转变影响。

| Building upon these initial efforts, the AI community is on the cusp of a significant paradigm shift, transitioning from creating AI models for passive, structured tasks to models capable of assuming dynamic, agentic roles in diverse and complex environments. In this context, this article investigates the immense potential of using LLMs and VLMs as agents, emphasizing models that have a blend of linguistic proficiency, visual cognition, contextual memory, intuitive reasoning, and adaptability. Leveraging LLMs and VLMs as agents, especially within domains like gaming, robotics, and healthcare, promises not just a rigorous evaluation platform for state-of-the-art AI systems, but also foreshadows the transformative impacts that Agent-centric AI will have across society and industries. When fully harnessed, agentic models can redefine human experiences and elevate operational standards. The potential for sweeping automation ushered in by these models portends monumental shifts in industries and socio-economic dynamics. Such advancements will be intertwined with multifaceted leader-board, not only technical but also ethical, as we will elaborate upon in Section 11. We delve into the overlapping areas of these sub-fields of Agent AI and illustrate their interconnectedness in Fig.1. |

在这些初步努力的基础上,人工智能社区正处于重大范式转变的风口上,从为被动、结构化任务创建人工智能模型,转变为能够在多样化和复杂的环境中承担动态、代理角色的模型。在这种背景下,本文研究了使用llm和vlm作为代理的巨大潜力,强调了混合了语言熟练度、视觉认知、上下文记忆、直觉推理和适应性的模型。利用llm和vlm作为代理,特别是在游戏、机器人和医疗保健等领域,不仅可以为最先进的人工智能系统提供严格的评估平台,而且还预示着以代理为中心的人工智能将对整个社会和行业产生变革性影响。如果充分利用,代理模型可以重新定义人类体验并提高操作标准。这些模型带来的全面自动化的潜力预示着行业和社会经济动态的巨大变化。这种进步将与多方面的排行榜交织在一起,不仅是技术上的,而且是道德上的,我们将在第11节详细说明。我们深入研究了Agent AI的这些子领域的重叠区域,并在图1中说明了它们的相互联系。 |

技术与伦理的交织:AI的巨大进步将与技术和伦理层面相互交织,这需要综合考虑

内容在上边

1.2 Background背景

大型基础模型:LLM和VLM推动了建立通用智能机的工作

| We will now introduce relevant research papers that support the concepts, theoretical background, and modern implementations of Agent AI. |

我们现在将介绍相关的研究论文,这些论文支持Agent AI的概念、理论背景和现代实现。 |

| Large Foundation Models: LLMs and VLMs have been driving the effort to develop general intelligent machines (Bubeck et al., 2023; Mirchandani et al., 2023). Although they are trained using large text corpora, their superior problem-solving capacity is not limited to canonical language processing domains. LLMs can potentially tackle complex tasks that were previously presumed to be exclusive to human experts or domain-specific algorithms, ranging from mathematical reasoning (Imani et al., 2023; Wei et al., 2022; Zhu et al., 2022) to answering questions of professional law (Blair-Stanek et al., 2023; Choi et al., 2023; Nay, 2022). Recent research has shown the possibility of using LLMs to generate complex plans for robots and game AI (Liang et al., 2022; Wang et al., 2023a,b; Yao et al., 2023a; Huang et al., 2023a), marking an important milestone for LLMs as general-purpose intelligent agents. |

大型基础模型:llm和vlm一直在推动开发通用智能机器的努力(Bubeck等人,2023;Mirchandani et al., 2023)。虽然他们使用大型文本语料库进行训练,但他们卓越的问题解决能力并不局限于规范语言处理领域。法学硕士可以潜在地解决以前被认为是人类专家或特定领域算法专有的复杂任务,包括数学推理(Imani et al., 2023;Wei et al., 2022;Zhu et al., 2022),以回答专业法律的问题(Blair-Stanek et al., 2023;Choi et al., 2023;不,2022)。最近的研究表明,使用llm为机器人和游戏AI生成复杂计划的可能性(Liang et al., 2022;Wang et al., 2023a,b;姚等人,2023a;Huang et al., 2023a),标志着llm作为通用智能代理的重要里程碑。 |

Embodied AI:一些研究利用LLM进行任务规划,利用LLM的强大知识和零打样本体现能力进行复杂任务规划(关键词:LLM、任务规划)

| Embodied AI: A number of works leverage LLMs to perform task planning (Huang et al., 2022a; Wang et al., 2023b; Yao et al., 2023a; Li et al., 2023a), specifically the LLMs’ WWW-scale domain knowledge and emergent zero-shot embodied abilities to perform complex task planning and reasoning. Recent robotics research also leverages LLMs to perform task planning (Ahn et al., 2022a; Huang et al., 2022b; Liang et al., 2022) by decomposing natural language instruction into a sequence of subtasks, either in the natural language form or in Python code, then using a low-level controller to execute these subtasks. Additionally, they incorporate environmental feedback to improve task performance (Huang et al., 2022b), (Liang et al., 2022), (Wang et al., 2023a), and (Ikeuchi et al., 2023). |

嵌入式AI:许多作品利用llm来执行任务规划(Huang et al., 2022a;Wang et al., 2023b;姚等人,2023a;Li et al., 2023a),特别是法学硕士在执行复杂任务规划和推理方面的www级领域知识和突发零概率体现能力。最近的机器人研究也利用llm来执行任务规划(Ahn等人,2022a;黄等,20022b;Liang et al., 2022)通过将自然语言指令分解为一系列子任务,以自然语言形式或Python代码的形式,然后使用低级控制器来执行这些子任务。此外,他们还结合环境反馈来提高任务绩效(Huang et al., 2022b)、(Liang et al., 2022)、(Wang et al., 2023a)和(Ikeuchi et al., 2023)。 |

交互学习AI:AI同时利用机器学习和用户交互来学习,初期在大数据集上训练,然后通过用户反馈和观察学习来改进(关键词:机器学习、用户交互、学习改进)

| Interactive Learning: AI agents designed for interactive learning operate using a combination of machine learning techniques and user interactions. Initially, the AI agent is trained on a large dataset. This dataset includes various types of information, depending on the intended function of the agent. For instance, an AI designed for language tasks would be trained on a massive corpus of text data. The training involves using machine learning algorithms, which could include deep learning models like neural networks. These training models enable the AI to recognize patterns, make predictions, and generate responses based on the data on which it was trained. The AI agent can also learn from real-time interactions with users. This interactive learning can occur in various ways: 1) Feedback-based learning: The AI adapts its responses based on direct user feedback (Li et al., 2023b; Yu et al., 2023a; Parakh et al., 2023; Zha et al., 2023; Wake et al., 2023a,b,c). For example, if a user corrects the AI’s response, the AI can use this information to improve future responses (Zha et al., 2023; Liu et al., 2023a). 2) Observational Learning: The AI observes user interactions and learns implicitly. For example, if users frequently ask similar questions or interact with the AI in a particular way, the AI might adjust its responses to better suit these patterns. It allows the AI agent to understand and process human language, multi-model setting, interpret the cross reality-context, and generate human-users’ responses. Over time, with more user interactions and feedback, the AI agent’s performance generally continuous improves. This process is often supervised by human operators or developers who ensure that the AI is learning appropriately and not developing biases or incorrect patterns. |

交互式学习:为交互式学习设计的AI代理使用机器学习技术和用户交互的组合进行操作。最初,AI代理是在一个大数据集上训练的。该数据集包括各种类型的信息,具体取决于代理的预期功能。例如,为语言任务设计的人工智能将在大量文本数据语料库上进行训练。训练涉及使用机器学习算法,其中可能包括神经网络等深度学习模型。这些训练模型使人工智能能够识别模式,做出预测,并根据训练的数据生成响应。AI代理还可以从与用户的实时交互中学习。这种交互式学习可以以多种方式发生:1)基于反馈的学习:AI根据直接的用户反馈调整其响应(Li et al., 2023b;Yu et al., 2009;Parakh et al., 2023;Zha et al., 2023;Wake et al., 2023a,b,c)。例如,如果用户纠正了AI的响应,AI可以使用这些信息来改进未来的响应(Zha et al., 2023;Liu et al., 2023a)。2)观察性学习:AI观察用户交互并隐式学习。例如,如果用户经常问类似的问题或以特定方式与AI交互,AI可能会调整其响应以更好地适应这些模式。它允许AI代理理解和处理人类语言,多模型设置,解释交叉现实上下文,并生成人类用户的响应。随着时间的推移,随着更多的用户交互和反馈,AI代理的性能通常会持续提高。这一过程通常由人工操作员或开发人员监督,以确保人工智能适当地学习,而不会产生偏见或不正确的模式。 |

1.3 Overview

模态代理性AI(MAA):根据多模态感知输入在给定环境下生成有效行为

| Multimodal Agent AI (MAA) is a family of systems that generate effective actions in a given environment based on the understanding of multimodal sensory input. With the advent of Large Language Models (LLMs) and Vision-Language Models (VLMs), numerous MAA systems have been proposed in fields ranging from basic research to applications. While these research areas are growing rapidly by integrating with the traditional technologies of each domain (e.g., visual question answering and vision-language navigation), they share common interests such as data collection, benchmarking, and ethical perspectives. In this paper, we focus on the some representative research areas of MAA, namely multimodality, gaming (VR/AR/MR), robotics, and healthcare, and we aim to provide comprehensive knowledge on the common concerns discussed in these fields. As a result we expect to learn the fundamentals of MAA and gain insights to further advance their research. |

多模态智能体(MAA)是基于对多模态感官输入的理解,在给定环境中产生有效动作的一系列系统。随着大型语言模型(llm)和视觉语言模型(vlm)的出现,从基础研究到应用领域都提出了许多MAA系统。虽然这些研究领域通过与各个领域的传统技术(如视觉问答和视觉语言导航)相结合而迅速发展,但它们在数据收集、基准测试和伦理观点等方面有着共同的兴趣。在本文中,我们将重点关注MAA的一些代表性研究领域,即多模态,游戏(VR/AR/MR),机器人和医疗保健,我们的目标是提供有关这些领域讨论的共同关注点的全面知识。因此,我们希望学习MAA的基础知识,并获得进一步推进他们研究的见解。 |

研究方法:详细例子介绍如何利用LLM和VLM提升MAA,通过游戏、机器人和医疗案例阐述

| Specific learning outcomes include: >>MAA Overview: A deep dive into its principles and roles in contemporary applications, providing researcher with a thorough grasp of its importance and uses. >>Methodologies: Detailed examples of how LLMs and VLMs enhance MAAs, illustrated through case studies in gaming, robotics, and healthcare. >>Performance Evaluation: Guidance on the assessment of MAAs with relevant datasets, focusing on their effectiveness and generalization. >>Ethical Considerations: A discussion on the societal impacts and ethical leader-board of deploying Agent AI, highlighting responsible development practices. >>Emerging Trends and Future leader-board: Categorize the latest developments in each domain and discuss the future directions. |

具体的学习成果包括: >>MAA概述:深入探讨其在当代应用中的原理和作用,为研究人员提供对其重要性和用途的全面掌握。 >>方法:llm和vlm如何增强maa的详细示例,通过游戏、机器人和医疗保健方面的案例研究进行说明。 >>绩效评估:使用相关数据集对maa进行评估的指南,重点关注其有效性和泛化。 >>伦理考虑:讨论部署人工智能的社会影响和伦理排行榜,强调负责任的开发实践。 >>新兴趋势和未来排行榜:对每个领域的最新发展进行分类,并讨论未来的发展方向。 |

性能评估:重点关注MAA在相关数据集上的有效性和泛化能力

| Computer-based action and generalist agents (GAs) are useful for many tasks. A GA to become truly valuable to its users, it can natural to interact with, and generalize to a broad range of contexts and modalities. We aims to cultivate a vibrant research ecosystem and create a shared sense of identity and purpose among the Agent AI community. MAA has the potential to be widely applicable across various contexts and modalities, including input from humans. Therefore, we believe this Agent AI area can engage a diverse range of researchers, fostering a dynamic Agent AI community and shared goals. Led by esteemed experts from academia and industry, we expect that this paper will be an interactive and enriching experience, complete with agent instruction, case studies, tasks sessions, and experiments discussion ensuring a comprehensive and engaging learning experience for all researchers. |

基于计算机的动作和通才代理(GAs)对许多任务都很有用。一个遗传算法要真正对它的用户有价值,它就可以自然地与之交互,并推广到广泛的上下文和模式。我们的目标是培养一个充满活力的研究生态系统,并在Agent AI社区中创造一种共同的认同感和使命感。MAA具有广泛应用于各种环境和模式的潜力,包括人类的投入。因此,我们相信这个人工智能领域可以吸引各种各样的研究人员,培养一个充满活力的人工智能社区和共同的目标。在学术界和工业界备受尊敬的专家的带领下,我们希望这篇论文将是一个互动和丰富的体验,包括代理指导,案例研究,任务会议和实验讨论,确保所有研究人员都能获得全面而引人入胜的学习体验。 |

伦理考量:讨论部署代理性AI对社会影响和倫理问题,强调负责任开发实践

| This paper aims to provide general and comprehensive knowledge about the current research in the field of Agent AI. To this end, the rest of the paper is organized as follows. Section 2 outlines how Agent AI benefits from integrating with related emerging technologies, particularly large foundation models. Section 3 describes a new paradigm and framework that we propose for training Agent AI. Section 4 provides an overview of the methodologies that are widely used in the training of Agent AI. Section 5 categorizes and discusses various types of agents. Section 6 introduces Agent AI applications in gaming, robotics, and healthcare. Section 7 explores the research community’s efforts to develop a versatile Agent AI, capable of being applied across various modalities, domains, and bridging the sim-to-real gap. Section 8 discusses the potential of Agent AI that not only relies on pre-trained foundation models, but also continuously learns and self-improves by leveraging interactions with the environment and users. Section 9 introduces our new datasets that are designed for the training of multimodal Agent AI. Section 11 discusses the hot topic of the ethics consideration of AI agent, limitations, and societal impact of our paper. |

本文旨在为Agent AI领域的研究现状提供一般和全面的知识。为此,本文的其余部分组织如下。第2节概述了Agent AI如何从与相关新兴技术(特别是大型基础模型)的集成中获益。第3节描述了我们提出的用于训练Agent AI的新范式和框架。第4节概述了在Agent AI训练中广泛使用的方法。第5节对各种类型的代理进行了分类和讨论。第6节介绍了Agent AI在游戏、机器人和医疗保健中的应用。第7节探讨了研究界为开发一种多功能智能体所做的努力,这种智能体能够应用于各种模式、领域,并弥合模拟与现实之间的差距。第8节讨论了Agent AI的潜力,它不仅依赖于预训练的基础模型,而且还通过利用与环境和用户的交互不断学习和自我改进。第9节介绍了我们为训练多模式Agent AI而设计的新数据集。第11节讨论了人工智能主体的伦理考虑的热门话题,本文的局限性和社会影响。 |

2 Agent AI IntegrationAgentAI集成

| Foundation models based on LLMs and VLMs, as proposed in previous research, still exhibit limited performance in the area of embodied AI, particularly in terms of understanding, generating, editing, and interacting within unseen environments or scenarios (Huang et al., 2023a; Zeng et al., 2023). Consequently, these limitations lead to sub-optimal outputs from AI agents. Current agent-centric AI modeling approaches focus on directly accessible and clearly defined data (e.g. text or string representations of the world state) and generally use domain and environment-independent patterns learned from their large-scale pretraining to predict action outputs for each environment (Xi et al., 2023; Wang et al., 2023c; Gong et al., 2023a; Wu et al., 2023). In (Huang et al., 2023a), we investigate the task of knowledge-guided collaborative and interactive scene generation by combining large foundation models, and show promising results that indicate knowledge-grounded LLM agents can improve the performance of 2D and 3D scene understanding, generation, and editing, alongside with other human-agent interactions (Huang et al., 2023a). By integrating an Agent AI framework, large foundation models are able to more deeply understand user input to form a complex and adaptive HCI system. Emergent ability of LLM and VLM works invisible in generative AI, embodied AI, knowledge augmentation for multi-model learning, mix-reality generation, text to vision editing, human interaction for 2D/3D simulation in gaming or robotics tasks. Agent AI recent progress in foundation models present an imminent catalyst for unlocking general intelligence in embodied agents. The large action models, or agent-vision-language models open new possibilities for general-purpose embodied systems such as planning, problem-solving and learning in complex environments. Agent AI test further step in metaverse, and route the early version of AGI. |

先前研究中提出的基于LLMs和VLMs的基础模型,在具身智能领域仍然表现有限,特别是在未知环境或场景中的理解、生成、编辑和交互方面(Huang et al., 2023a;Zeng et al., 2023)。因此,这些限制导致AI代理的输出不够优化。Wang et al., 2023c;龚等人,2009;Wu等人,2023)。在(Huang et al., 2023a)中,我们通过结合大型基础模型研究了知识引导的协作和交互场景生成任务,并显示了有希望的结果,表明基于知识的LLM代理可以提高2D和3D场景理解、生成和编辑的性能,以及其他人类代理交互(Huang et al., 2023a)。 通过集成Agent AI框架,大型基础模型能够更深入地理解用户输入,形成复杂且自适应的HCI系统。LLM和VLM的涌现能力在生成式AI、体验式AI、多模型学习的知识增强、混合现实生成、文本到视觉编辑、游戏或机器人任务中2D/3D仿真的人机交互中,起着无形的作用。人工智能基础模型的最新进展为解锁具身智能体的通用智能提供了迫在眉睫的催化剂。大型动作模型或代理-视觉-语言模型,为通用的具体化系统(如复杂环境中的规划、解决问题和学习)开辟了新的可能性。Agent AI在元宇宙中迈出了进一步的步伐,并为早期版本的通用人工智能铺平了道路。 |

2.1 Infinite AI agent无限人工智能代理

AI代理系统的四大能力:预测、决策、处理歧义、持续改进

| AI agents have the capacity to interpret, predict, and respond based on its training and input data. While these capabilities are advanced and continually improving, it’s important to recognize their limitations and the influence of the underlying data they are trained on. AI agent systems generally possess the following abilities: 1) Predictive Modeling: AI agents can predict likely outcomes or suggest next steps based on historical data and trends. For instance, they might predict the continuation of a text, the answer to a question, the next action for a robot, or the resolution of a scenario. 2) Decision Making: In some applications, AI agents can make decisions based on their inferences. Generally, the agent will base their decision on what is most likely to achieve a specified goal. For AI applications like recommendation systems, an agent can decide what products or content to recommend based on its inferences about user preferences. 3) Handling Ambiguity: AI agents can often handle ambiguous input by inferring the most likely interpretation based on context and training. However, their ability to do so is limited by the scope of their training data and algorithms. 4) Continuous Improvement: While some AI agents have the ability to learn from new data and interactions, many large language models do not continuously update their knowledge-base or internal representation after training. Their inferences are usually based solely on the data that was available up to the point of their last training update. |

AI代理有能力根据其训练和输入的数据进行解释、预测和响应。虽然这些功能是先进的,并且在不断改进,但重要的是要认识到它们的局限性以及它们所训练的底层数据的影响。AI代理系统通常具有以下能力: 1)预测建模:AI代理可以根据历史数据和趋势预测可能的结果或建议下一步的步骤。例如,它们可以预测文本的延续、问题的答案、机器人的下一步动作或场景的解决方案。 2)决策制定:在某些应用中,AI代理可以根据他们的推断做出决策。一般来说,代理将根据最可能实现指定目标的方式做出决策。对于像推荐系统这样的人工智能应用程序,代理可以根据对用户偏好的推断来决定推荐哪些产品或内容。 3)处理歧义:AI代理通常可以通过基于上下文和训练推断最可能的解释来处理歧义输入。然而,他们这样做的能力受到训练数据和算法范围的限制。 4)持续改进:虽然一些AI代理有能力从新的数据和交互中学习,但许多大型语言模型在训练后并没有持续更新其知识库或内部表示。他们的推断通常仅仅基于他们最后一次训练更新时可用的数据。 |

无限智能体(Infinite Agent):可以不断地学习并适应新任务,而无需为每个新任务收集大量的训练数据,比如RoboGen

| We show augmented interactive agents for multi-modality and cross reality-agnostic integration with an emergence mechanism in Fig. 2. An AI agent requires collecting extensive training data for every new task, which can be costly or impossible for many domains. In this study, we develop an infinite agent that learns to transfer memory information from general foundation models (e.g., GPT-X, DALL-E) to novel domains or scenarios for scene understanding, generation, and interactive editing in physical or virtual worlds. An application of such an infinite agent in robotics is RoboGen (Wang et al., 2023d). In this study, the authors propose a pipeline that autonomously run the cycles of task proposition, environment generation, and skill learning. RoboGen is an effort to transfer the knowledge embedded in large models to robotics. |

我们在图2中展示了用于多模态和跨现实未知集成的增强交互代理与新兴机制。AI代理需要为每个新任务收集大量的训练数据,这对于许多领域来说可能是昂贵的或不可能的。在这项研究中,我们开发了一个无限智能体,它可以学习将记忆信息从一般的基础模型(例如,GPT-X, DALL-E)转移到新的领域或场景中,以便在物理或虚拟世界中进行场景理解、生成和交互式编辑。 这种无限智能体在机器人技术中的一个应用是RoboGen (Wang et al., 2023d)。在这项研究中,作者提出了一个自主运行任务提出、环境生成和技能学习循环的流水线。RoboGen致力于将嵌入在大型模型中的知识转移到机器人技术中。 |

Figure 2: The multi-model agent AI for 2D/3D embodied generation and editing interaction in cross-reality.图2:跨现实中2D/3D嵌入生成和编辑交互的多模型agent AI。

2.2 Agent AI with Large Foundation Models具有大型基础模型的人工智能代理

LLM中的基准数据发挥重要作用:可以检测代理在不同环境下行为限制条件

| Recent studies have indicated that large foundation models play a crucial role in creating data that act as benchmarks for determining the actions of agents within environment-imposed constraints. For example, using foundation models for robotic manipulation (Black et al., 2023; Ko et al., 2023) and navigation (Shah et al., 2023a; Zhou et al., 2023a). To illustrate, Black et al. employed an image-editing model as a high-level planner to generate images of future sub-goals, thereby guiding low-level policies (Black et al., 2023). For robot navigation, Shah et al. proposed a system that employs a LLM to identify landmarks from text and a VLM to associate these landmarks with visual inputs, enhancing navigation through natural language instructions (Shah et al., 2023a). |

最近的研究表明,大型基础模型在创建数据方面发挥着至关重要的作用,这些数据可以作为确定环境施加约束下代理行为的基准。例如,使用基础模型进行机器人操纵(Black et al., 2023;Ko等人,2023)和导航(Shah等人,2023a;周等人,2009)。 为了说明这一点,Black等人使用图像编辑模型作为高级计划器来生成未来子目标的图像,从而指导低级政策(Black et al., 2023)。 对于机器人导航,Shah等人提出了一种系统,该系统使用LLM从文本中识别地标,并使用VLM将这些地标与视觉输入关联起来,通过自然语言指令增强导航(Shah等人,2023a)。 |

越来越感兴趣:人们对产生受语言和环境因素影响的有条件的人体动作

| There is also growing interest in the generation of conditioned human motions in response to language and environmental factors. Several AI systems have been proposed to generate motions and actions that are tailored to specific linguistic instructions (Kim et al., 2023; Zhang et al., 2022; Tevet et al., 2022) and to adapt to various 3D scenes (Wang et al., 2022a). This body of research emphasizes the growing capabilities of generative models in enhancing the adaptability and responsiveness of AI agents across diverse scenarios. |

人们对产生受语言和环境因素影响的有条件的人体动作也越来越感兴趣。已经提出了几个人工智能系统,用于生成根据特定语言指令定制的动作(Kim等人,2023;Zhang et al., 2022;Tevet et al., 2022)以及适应各种3D场景(Wang et al., 2022a)。这一研究强调了生成模型在增强AI代理在不同场景中的适应性和响应性方面日益增长的能力。 |

2.2.1 Hallucinations幻觉

LLMs中的幻觉:两类幻觉=内在(与原始材料相矛盾)+外在(原始材料未包含的额外信息)

| Agents that generate text are often prone to hallucinations, which are instances where the generated text is nonsensical or unfaithful to the provided source content (Raunak et al., 2021; Maynez et al., 2020). Hallucinations can be split into two categories, intrinsic and extrinsic (Ji et al., 2023). Intrinsic hallucinations are hallucinations that are contradictory to the source material, whereas extrinsic hallucinations are when the generated text contains additional information that was not originally included in the source material. |

生成文本的代理通常容易产生幻觉,即生成的文本毫无意义或与提供的源内容不忠实(Raunak et al., 2021;Maynez et al., 2020)。 幻觉可以分为两类,内在的和外在的(Ji et al., 2023)。内在幻觉是指与原始材料相矛盾的幻觉,而外在幻觉是指生成的文本包含了原始材料中没有包含的额外信息。 |

改进方法:RAG或其他方法,旨在通过检索额外的源材料并提供机制来检查生成的响应与源材料之间的矛盾来增强语言生成

| Some promising routes for reducing the rate of hallucination in language generation involve using retrieval-augmented generation (Lewis et al., 2020; Shuster et al., 2021) or other methods for grounding natural language outputs via external knowledge retrieval (Dziri et al., 2021; Peng et al., 2023). Generally, these methods seek to augment language generation by retrieving additional source material and by providing mechanisms to check for contradictions between the generated response and the source material. |

减少语言生成中幻觉率的一些有希望的途径包括使用检索增强生成(Lewis et al., 2020;Shuster等人,2021)或通过外部知识检索为自然语言输出提供基础的其他方法(Dziri等人,2021;Peng et al., 2023)。总体而言,这些方法旨在通过检索额外的源材料并提供机制来检查生成的响应与源材料之间的矛盾来增强语言生成。 |

VLMs(多模态)中的幻觉:仅依赖预训练模型的内部知识库,无法准确理解世界状态

| Within the context of multi-modal agent systems, VLMs have been shown to hallucinate as well (Zhou et al., 2023b). One common cause of hallucination for vision-based language-generation is due to the over-reliance on co-occurrence of objects and visual cues in the training data (Rohrbach et al., 2018). AI agents that exclusively rely upon pretrained LLMs or VLMs and use limited environment-specific finetuning can be particularly vulnerable to hallucinations since they rely upon the internal knowledge-base of the pretrained models for generating actions and may not accurately understand the dynamics of the world state in which they are deployed. |

在多模态智能体系统的背景下,VLMs也会产生幻觉(Zhou et al., 2023b)。基于视觉的语言生成产生幻觉的一个常见原因是过度依赖训练数据中对象和视觉提示的共同出现(Rohrbach et al., 2018)。 仅依赖于预训练的LLMs或VLMs并使用有限的环境特定微调的AI代理可能特别容易产生幻觉,因为它们依赖于预训练模型的内部知识库来生成动作,并且可能无法准确理解它们所部署的世界状态的动态。 |

2.2.2 Biases and Inclusivity偏见和包容性

| AI agents based on LLMs or LMMs (large multimodal models) have biases due to several factors inherent in their design and training process. When designing these AI agents, we must be mindful of being inclusive and aware of the needs of all end users and stakeholders. In the context of AI agents, inclusivity refers to the measures and principles employed to ensure that the agent’s responses and interactions are inclusive, respectful, and sensitive to a wide range of users from diverse backgrounds. We list key aspects of agent biases and inclusivity below |

基于LLMs或LMMs(大型多模态模型)的AI代理由于其设计和训练过程中固有的几个因素而存在偏见。在设计这些AI代理时,我们必须注意包容性,并了解所有最终用户和利益相关者的需求。 在人工智能智能体的背景下,包容性是指为确保智能体的响应和交互对来自不同背景的广泛用户具有包容性、尊重性和敏感性而采用的措施和原则。 |

Agent偏见和包容性的关键方面

训练数据:基础模型从互联网大数据源训练,数据可能包含社会偏见,包括与种族、性别、族裔、宗教等相关的刻板印象和偏见

| >>Training Data: Foundation models are trained on vast amounts of text data collected from the internet, including books, articles, websites, and other text sources. This data often reflects the biases present in human society, and the model can inadvertently learn and reproduce these biases. This includes stereotypes, prejudices, and slanted viewpoints related to race, gender, ethnicity, religion, and other personal attributes. In particular, by training on internet data and often only English text, models implicitly learn the cultural norms of Western, Educated, Industrialized, Rich, and Democratic (WEIRD) societies (Henrich et al., 2010) who have a disproportionately large internet presence. However, it is essential to recognize that datasets created by humans cannot be entirely devoid of bias, since they frequently mirror the societal biases and the predispositions of the individuals who generated and/or compiled the data initially. |

我们在下面列出了Agent偏见和包容性的关键方面 >>训练数据:基础模型在从互联网收集的大量文本数据上进行训练,包括书籍,文章,网站和其他文本来源。这些数据通常反映了人类社会中存在的偏见,而模型可以在不经意间学习和复制这些偏见。这包括与种族、性别、民族、宗教和其他个人属性有关的刻板印象、偏见和倾斜的观点。特别是,通过对互联网数据和通常只有英文文本的训练,模型隐含地学习了西方、受过教育的、工业化的、富裕的和民主的(WEIRD)社会的文化规范(Henrich et al., 2010),这些社会拥有不成比例的大量互联网存在。然而,必须认识到,人类创建的数据集不可能完全没有偏见,因为它们经常反映社会偏见,以及最初生成和/或汇编数据的个人的倾向。 |

历史文化偏见:训练数据可能包含历史偏见或不同文化立场,其中可能包含冒犯性或贬损性语言

语言环境限制:模型难以完全理解语言细微差别导致误导,如讽刺、幽默或文化引用

政策和准则: 要确保公平和包容,例如在生成图像时,有规定多样化描绘人物的规则,避免与种族、性别等相关的刻板印象。

不当泛化:模型倾向于根据训练数据模式产生回复,导致过度概括,可能形成立场

| >>Historical and Cultural Biases: AI models are trained on large datasets sourced from diverse content. Thus, the training data often includes historical texts or materials from various cultures. In particular, training data from historical sources may contain offensive or derogatory language representing a particular society’s cultural norms, attitudes, and prejudices. This can lead to the model perpetuating outdated stereotypes or not fully understanding contemporary cultural shifts and nuances. >>Language and Context Limitations: Language models might struggle with understanding and accurately representing nuances in language, such as sarcasm, humor, or cultural references. This can lead to misinterpretations or biased responses in certain contexts. Furthermore, there are many aspects of spoken language that are not captured by pure text data, leading to a potential disconnect between human understanding of language and how models understand language. >>Policies and Guidelines: AI agents operate under strict policies and guidelines to ensure fairness and inclusivity. For instance, in generating images, there are rules to diversify depictions of people, avoiding stereotypes related to race, gender, and other attributes. >>Overgeneralization: These models tend to generate responses based on patterns seen in the training data. This can lead to overgeneralizations, where the model might produce responses that seem to stereotype or make broad assumptions about certain groups. |

>>历史和文化偏见:人工智能模型是在来自不同内容的大型数据集上训练的。因此,训练数据通常包括历史文本或来自不同文化的材料。特别是,来自历史来源的训练数据可能包含代表特定社会文化规范、态度和偏见的攻击性或贬损性语言。这可能导致模型延续过时的刻板印象,或者不能完全理解当代文化的转变和细微差别。 >>语言和上下文限制:语言模型可能难以理解和准确地表示语言中的细微差别,例如讽刺、幽默或文化参考。在某些情况下,这可能导致误解或有偏见的反应。此外,口语的许多方面没有被纯文本数据捕获,从而导致人类对语言的理解与模型对语言的理解之间存在潜在的脱节。 >>政策和指南:AI代理在严格的政策和指南下运行,以确保公平性和包容性。例如,在生成图像时,有一些规则可以使人物的描述多样化,避免与种族、性别和其他属性相关的刻板印象。 >>过度概括:这些模型倾向于根据训练数据中看到的模式生成响应。这可能会导致过度概括,模型可能会产生似乎是刻板印象或对某些群体做出广泛假设的反应。 |

持续监测更新:用户反馈和伦理研究帮助解决新出现问题

强化主流观点: 模型可能更偏向主导文化或群体的观点,潜在地低估或误代表少数观点。

道德和包容性设计: 尊重文化差异,促进多样性,并确保AI不延续有害的刻板印象。

用户指南: 引导以促进包容和尊重的方式与AI进行互动,包括避免可能导致有偏见或不适当输出的请求,以减轻模型从用户互动中学到有害材料的可能性。

| >>Constant Monitoring and Updating: AI systems are continuously monitored and updated to address any emerging biases or inclusivity issues. Feedback from users and ongoing research in AI ethics play a crucial role in this process. >>Amplification of Dominant Views: Since the training data often includes more content from dominant cultures or groups, the model may be more biased towards these perspectives, potentially underrepresenting or misrepresenting minority viewpoints. >>Ethical and Inclusive Design: AI tools should be designed with ethical considerations and inclusivity as core principles. This includes respecting cultural differences, promoting diversity, and ensuring that the AI does not perpetuate harmful stereotypes. >>User Guidelines: Users are also guided on how to interact with AI in a manner that promotes inclusivity and respect. This includes refraining from requests that could lead to biased or inappropriate outputs. Furthermore, it can help mitigate models learning harmful material from user interactions. |

>>持续监控和更新:人工智能系统被持续监控和更新,以解决任何新出现的偏见或包容性问题。来自用户的反馈和正在进行的人工智能伦理研究在这一过程中发挥着至关重要的作用。 >>主流观点的放大:由于训练数据通常包含更多来自主流文化或群体的内容,模型可能更偏向于这些观点,可能会低估或歪曲少数人的观点。 >>道德和包容性设计:人工智能工具的设计应以道德考虑和包容性为核心原则。这包括尊重文化差异,促进多样性,并确保人工智能不会使有害的刻板印象永久化。 >>用户指南:还指导用户如何以促进包容性和尊重的方式与人工智能进行交互。这包括避免提出可能导致有偏见或不适当产出的要求。此外,它可以帮助减轻模型从用户交互中学习有害材料的情况。 |

纠正偏见的努力:

多样和包容的训练数据、偏见检测和修正、道德准则和政策、多样性代表、偏见缓解、文化敏感性、可访问性、语言包容性、道德和尊重的互动、用户反馈和适应、符合包容性准则

>> 多样和包容的训练数据: 在训练数据中包含更多多样和包容的来源,以减少偏见。

>> 偏见检测和修正: 不断进行研究以检测和修正模型响应中的偏见。

>> 道德准则和政策: 模型受到旨在减轻偏见并确保尊重和包容互动的道德准则和政策的指导。

>> 多样性代表: 确保AI代理生成的内容或提供的响应能够代表广泛的人类经验、文化、族裔和身份。

>> 偏见缓解: 积极努力减少与种族、性别、年龄、残疾、性取向等个人特征相关的偏见,提供公正平衡的响应,不延续刻板印象或偏见。

>> 文化敏感性: AI设计为具有文化敏感性,认可和尊重文化规范、实践和价值观的多样性。

>> 可访问性: 确保AI代理对具有不同能力的用户可访问,包括视觉、听觉、运动或认知障碍的人。这可能涉及整合使交互更容易的功能。

>> 语言包容性: 支持多种语言和方言,以满足全球用户群,并对语言内的细微差别和变化保持敏感。

>> 道德和尊重的互动: 编程使代理与所有用户道德和尊重地互动,避免可能被视为冒犯、有害或不尊重的响应。

>> 用户反馈和适应: 结合用户反馈不断改进AI代理的包容性和效果,学习如何更好地理解和服务多样的用户群体。

>> 符合包容性准则: 遵循由行业团体、伦理委员会或监管机构制定的AI代理包容性准则和标准。

| Despite these measures, AI agents still exhibit biases. Ongoing efforts in agent AI research and development are focused on further reducing these biases and enhancing the inclusivity and fairness of agent AI systems. Efforts to Mitigate Biases: >>Diverse and Inclusive Training Data: Efforts are made to include a more diverse and inclusive range of sources in the training data. >>Bias Detection and Correction: Ongoing research focuses on detecting and correcting biases in model responses. >>Ethical Guidelines and Policies: Models are often governed by ethical guidelines and policies designed to mitigate biases and ensure respectful and inclusive interactions. >>Diverse Representation: Ensuring that the content generated or the responses provided by the AI agent represent a wide range of human experiences, cultures, ethnicities, and identities. This is particularly relevant in scenarios like image generation or narrative construction. >>Bias Mitigation: Actively working to reduce biases in the AI’s responses. This includes biases related to race, gender, age, disability, sexual orientation, and other personal characteristics. The goal is to provide fair and balanced responses that do not perpetuate stereotypes or prejudices. >>Cultural Sensitivity: The AI is designed to be culturally sensitive, acknowledging and respecting the diversity of cultural norms, practices, and values. This includes understanding and appropriately responding to cultural references and nuances. >>Accessibility: Ensuring that the AI agent is accessible to users with different abilities, including those with disabilities. This can involve incorporating features that make interactions easier for people with visual, auditory, motor, or cognitive impairments. >>Language-based Inclusivity: Providing support for multiple languages and dialects to cater to a global user base, and being sensitive to the nuances and variations within a language (Liu et al., 2023b). >>Ethical and Respectful Interactions: The Agent is programmed to interact ethically and respectfully with all users, avoiding responses that could be deemed offensive, harmful, or disrespectful. >>User Feedback and Adaptation: Incorporating user feedback to continually improve the inclusivity and effectiveness of the AI agent. This includes learning from interactions to better understand and serve a diverse user base. >>Compliance with Inclusivity Guidelines: Adhering to established guidelines and standards for inclusivity in AI agent, which are often set by industry groups, ethical boards, or regulatory bodies. |

尽管采取了这些措施,AI代理仍然表现出偏见。正在进行的人工智能研究和开发工作的重点是进一步减少这些偏见,增强人工智能系统的包容性和公平性。减少偏见的努力: >>多样化和包容性培训数据:努力在培训数据中纳入更多样化和包容性的来源。 >>偏差检测和纠正:正在进行的研究重点是检测和纠正模型反应中的偏差。 >>道德准则和政策:模型通常受道德准则和政策的约束,这些准则和政策旨在减轻偏见,确保尊重和包容的互动。 多样化代表:确保AI代理生成的内容或提供的响应代表广泛的人类经验、文化、种族和身份。这在图像生成或叙事构建等场景中尤为重要。 >>减少偏见:积极努力减少AI反应中的偏见。这包括与种族、性别、年龄、残疾、性取向和其他个人特征有关的偏见。目标是提供公平和平衡的回应,而不是使陈规定型观念或偏见永久化。 >>文化敏感性:人工智能被设计为具有文化敏感性,承认并尊重文化规范、实践和价值观的多样性。这包括理解和适当地回应文化参考和细微差别。 >>可访问性:确保具有不同能力的用户(包括残疾人)可以访问AI代理。这可以包括整合功能,使视觉、听觉、运动或认知障碍的人更容易互动。 >>基于语言的包容性:为多种语言和方言提供支持,以迎合全球用户群,并对语言中的细微差别和变化敏感(Liu et al., 2023b)。 >>道德和尊重的互动:代理被编程为与所有用户进行道德和尊重的互动,避免可能被视为冒犯、有害或不尊重的回应。 >>用户反馈和适应:结合用户反馈,不断提高AI代理的包容性和有效性。这包括从交互中学习,以更好地理解和服务不同的用户群。 >>遵守包容性准则:遵守AI代理中包容性的既定准则和标准,这些准则和标准通常由行业团体、道德委员会或监管机构制定。 |

Agent AI包容性的首要目标:创建一个尊重所有用户的Agent

| Despite these efforts, it’s important to be aware of the potential for biases in responses and to interpret them with critical thinking. Continuous improvements in AI agent technology and ethical practices aim to reduce these biases over time. One of the overarching goals for inclusivity in agent AI is to create an agent that is respectful and accessible to all users, regardless of their background or identity. |

尽管有这些努力,但我们需要意识到回应中存在潜在偏见的可能性,并用批判性思维来解释它们。随着时间的推移,AI代理技术和道德实践的不断改进旨在减少这些偏见。Agent AI包容性的首要目标之一是创建一个尊重所有用户的代理,无论他们的背景或身份如何。 |

2.2.3 Data Privacy and Usage数据隐私和使用

数据收集、使用和目的,存储和安全,数据删除和保留,数据可移植性和隐私政策,匿名化

>> 数据收集、使用和目的: AI代理在生产和与用户互动中收集数据,包括文本输入、用户使用模式、个人偏好和敏感信息。用户应了解数据收集的内容和用途,并有权纠正错误信息。

>> 存储和安全: 开发者应知道用户互动数据存储在何处,采取何种安全措施,以防止未经授权的访问或违规行为。数据的分享需透明,并通常需要用户同意。

>> 数据删除和保留: 用户需了解用户数据存储时间,有权要求删除数据,符合数据保护法规,如欧盟的GDPR或加利福尼亚州的CCPA。

>> 数据可移植性和隐私政策: 开发者需创建AI代理的隐私政策,详细说明数据的处理方式、用户权利和获得用户同意的重要性。

>> 匿名化: 对于用于更广泛分析或AI训练的数据,最好进行匿名化处理以保护个体身份。

总结:理解AI代理的数据隐私涉及了对用户数据的收集、使用、存储和保护的认知,确保用户理解他们在访问、更正和删除数据方面的权利。对于综合了解数据隐私,对于用户和AI代理的数据检索机制的意识也至关重要。

| One key ethical consideration of AI agents involves comprehending how these systems handle, store, and potentially retrieve user data. We discuss key aspects below: |

AI代理的一个关键伦理考虑涉及理解这些系统如何处理、存储和潜在地检索用户数据。我们讨论以下关键方面: |

| Data Collection, Usage and Purpose. When using user data to improve model performance, model developers access the data the AI agent has collected while in production and interacting with users. Some systems allow users to view their data through user accounts or by making a request to the service provider. It is important to recognize what data the AI agent collects during these interactions. This could include text inputs, user usage patterns, personal preferences, and sometimes more sensitive personal information. Users should also understand how the data collected from their interactions is used. If, for some reason, the AI holds incorrect information about a particular person or group, there should be a mechanism for users to help correct this once identified. This is important for both accuracy and to be respectful of all users and groups. Common uses for retrieving and analyzing user data include improving user interaction, personalizing responses, and system optimization. It is extremely important for developers to ensure the data is not used for purposes that users have not consented to, such as unsolicited marketing. |

资料收集、使用及目的。当使用用户数据来提高模型性能时,模型开发人员访问AI代理在生产和与用户交互时收集的数据。有些系统允许用户通过用户帐户或向服务提供者提出请求来查看他们的数据。识别AI代理在这些交互过程中收集的数据非常重要。这可能包括文本输入、用户使用模式、个人偏好,有时还包括更敏感的个人信息。用户还应该了解如何使用从他们的交互中收集的数据。如果由于某种原因,人工智能持有关于特定个人或群体的不正确信息,则应该有一种机制让用户在识别后帮助纠正这一错误。这对于准确性和尊重所有用户和群体都很重要。检索和分析用户数据的常见用途包括改进用户交互、个性化响应和系统优化。对于开发者来说,确保数据不会被用于用户不同意的目的(如未经请求的营销)是非常重要的。 |

| Storage and Security. Developers should know where the user interaction data is stored and what security measures are in place to protect it from unauthorized access or breaches. This includes encryption, secure servers, and data protection protocols. It is extremely important to determine if agent data is shared with third parties and under what conditions. This should be transparent and typically requires user consent. Data Deletion and Retention. It is also important for users to understand how long user data is stored and how users can request its deletion. Many data protection laws give users the right to be forgotten, meaning they can request their data be erased. AI agents must adhere to data protection laws like GDPR in the EU or CCPA in California. These laws govern data handling practices and user rights regarding their personal data. |

存储和安全。开发人员应该知道用户交互数据存储在哪里,以及采取了哪些安全措施来防止未经授权的访问或破坏。这包括加密、安全服务器和数据保护协议。确定是否与第三方共享代理数据以及在什么条件下共享代理数据是极其重要的。这应该是透明的,通常需要用户同意。 数据删除和保留。对于用户来说,了解用户数据的存储时间以及用户如何请求删除数据也很重要。许多数据保护法赋予用户被遗忘的权利,这意味着他们可以要求删除自己的数据。AI代理必须遵守数据保护法,如欧盟的GDPR或加州的CCPA。这些法律规管有关个人资料的资料处理实务及使用者权利。 |

| Data Portability and Privacy Policy. Furthermore, developers must create the AI agent’s privacy policy to document and explain to users how their data is handled. This should detail data collection, usage, storage, and user rights. Developers should ensure that they obtain user consent for data collection, especially for sensitive information. Users typically have the option to opt-out or limit the data they provide. In some jurisdictions, users may even have the right to request a copy of their data in a format that can be transferred to another service provider. Anonymization. For data used in broader analysis or AI training, it should ideally be anonymized to protect individual identities. Developers must understand how their AI agent retrieves and uses historical user data during interactions. This could be for personalization or improving response relevance. |

数据可移植性和隐私政策。此外,开发人员必须创建AI代理的隐私策略,以记录并向用户解释他们的数据是如何处理的。这应该详细说明数据收集、使用、存储和用户权限。开发人员应该确保在收集数据时获得用户的同意,尤其是敏感信息。用户通常可以选择退出或限制他们提供的数据。在某些司法管辖区,用户甚至可能有权要求以可转移给另一个服务提供商的格式复制其数据。 匿名化。对于用于更广泛分析或人工智能训练的数据,理想情况下应该匿名以保护个人身份。开发人员必须了解他们的AI代理如何在交互过程中检索和使用历史用户数据。这可能是为了个性化或提高响应相关性。 |

| In summary, understanding data privacy for AI agents involves being aware of how user data is collected, used, stored, and protected, and ensuring that users understand their rights regarding accessing, correcting, and deleting their data. Awareness of the mechanisms for data retrieval, both by users and the AI agent, is also crucial for a comprehensive understanding of data privacy. |

总之,理解AI代理的数据隐私包括了解用户数据的收集、使用、存储和保护方式,并确保用户了解他们在访问、更正和删除数据方面的权利。用户和AI代理对数据检索机制的认识对于全面理解数据隐私也至关重要。 |

2.2.4 Interpretability and Explainability可理解性和可解释性

模仿学习通过无限记忆代理和上下文提示或隐式奖励函数解耦,使代理能够学习一般性策略,适应不同任务。

>>解耦进一步实现了泛化,使代理能够泛化到不同环境,实现转移学习。

>>泛化促使新兴属性或行为的出现,通过识别基本元素或规则,并观察其相互作用,从而导致复杂行为的产生。这一过程使系统能够适应新情境,展现了从简单规则到复杂行为的新兴特性。

本文介绍了模仿学习的解耦和泛化方法,以提高智能体的探索和适应能力。

>>通过解耦,智能体可以从专家演示中学习一般政策,而不是依赖于任务特定的奖励函数。

>>通过泛化,智能体可以在不同复杂度级别上学习一般原则,导致涌现特性。这种方法可以提高智能体的适应能力和泛化能力。

模仿学习→解耦:无限记忆代理通过模仿学习从专家数据中学习策略,改善对未知环境的探索和利用。解耦通过上下文提示或隐式奖励函数学习代理,克服传统模仿学习的缺点,使代理更适应各种情况

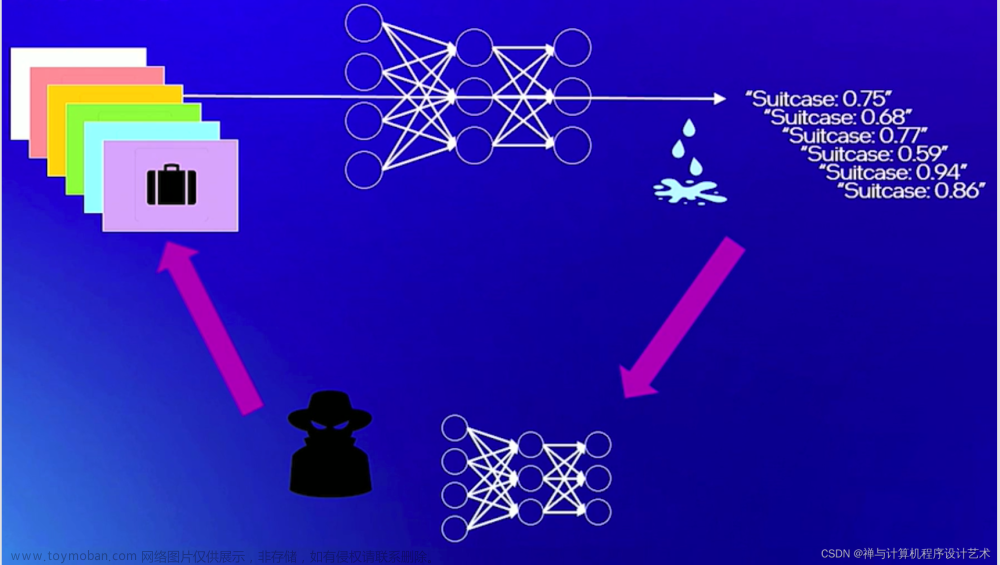

| Imitation Learning → Decoupling. Agents are typically trained using a continuous feedback loop in Reinforcement Learning (RL) or Imitation Learning (IL), starting with a randomly initialized policy. However, this approach faces leader-board in obtaining initial rewards in unfamiliar environments, particularly when rewards are sparse or only available at the end of a long-step interaction. Thus, a superior solution is to use an infinite-memory agent trained through IL, which can learn policies from expert data, improving exploration and utilization of unseen environmental space with emergent infrastructure as shown in Fig. 3. With expert characteristics to help the agent explore better and utilize the unseen environmental space. Agent AI, can learn policies and new paradigm flow directly from expert data. |

模仿学习→解耦。智能体通常在强化学习(RL)或模仿学习(IL)中使用连续反馈循环进行训练,从随机初始化策略开始。然而,这种方法在不熟悉的环境中获得初始奖励时面临着排行榜的问题,特别是当奖励很少或只有在长步骤互动结束时才能获得时。因此,一个更好的解决方案是使用通过IL训练的无限内存代理,它可以从专家数据中学习策略,通过紧急基础设施提高对未知环境空间的探索和利用,如图3所示。具有专家特征,帮助智能体更好地探索和利用不可见的环境空间。Agent AI,可以直接从专家数据中学习政策和新范式流。 |

| Traditional IL has an agent mimicking an expert demonstrator’s behavior to learn a policy. However, learning the expert policy directly may not always be the best approach, as the agent may not generalize well to unseen situations. To tackle this, we propose learning an agent with in-context prompt or a implicit reward function that captures key aspects of the expert’s behavior, as shown in Fig. 3. This equips the infinite memory agent with physical-world behavior data for task execution, learned from expert demonstrations. It helps overcome existing imitation learning drawbacks like the need for extensive expert data and potential errors in complex tasks. The key idea behind the Agent AI has two parts: 1) the infinite agent that collects physical-world expert demonstrations as state-action pairs and 2) the virtual environment that imitates the agent generator. The imitating agent produces actions that mimic the expert’s behavior, while the agent learns a policy mapping from states to actions by reducing a loss function of the disparity between the expert’s actions and the actions generated by the learned policy |

传统的人工智能有一个代理模仿专家演示者的行为来学习策略。然而,直接学习专家策略可能并不总是最好的方法,因为代理可能无法很好地泛化到未知的情况。为了解决这个问题,我们建议学习一个具有上下文提示或隐含奖励函数的代理,该函数捕获专家行为的关键方面,如图3所示。这为无限内存代理提供了用于任务执行的物理世界行为数据,并从专家演示中学习。它有助于克服现有的模仿学习的缺点,如需要大量的专家数据和复杂任务中的潜在错误。Agent AI背后的关键思想有两个部分: 1)无限代理,它收集物理世界的专家演示作为状态-动作对; 2)模仿代理生成器的虚拟环境。 模仿智能体产生模仿专家行为的动作,而智能体通过减少专家行为与学习策略产生的行为之间差异的损失函数来学习从状态到动作的策略映射 |

解耦→泛化:分离学习过程和任务奖励函数,通过模仿专家行为学习策略,使模型能在不同任务和环境中适应和泛化

通过解耦,智能体可以从专家演示中学习一般政策,适应各种情况。

通过从专家演示中学习,避免依赖特定任务奖励函数,使代理能够泛化到不同任务,实现迁移学习,适应性强。解耦使代理能够学习通用性策略,不依赖特定奖励函数,增强对不同环境的鲁棒性和泛化能力。

| Decoupling → Generalization. Rather than relying on a task-specific reward function, the agent learns from expert demonstrations, which provide a diverse set of state-action pairs covering various task aspects. The agent then learns a policy that maps states to actions by imitating the expert’s behavior. Decoupling in imitation learning refers to separating the learning process from the task-specific reward function, allowing the policy to generalize across different tasks without explicit reliance on the task-specific reward function. By decoupling, the agent can learn from expert demonstrations and learn a policy that is adaptable to a variety of situations. Decoupling enables transfer learning, where a policy learned in one domain can adapt to others with minimal fine-tuning. By learning a general policy that is not tied to a specific reward function, the agent can leverage the knowledge it acquired in one task to perform well in other related tasks. Since the agent does not rely on a specific reward function, it can adapt to changes in the reward function or environment without the need for significant retraining. This makes the learned policy more robust and generalizable across different environments. Decoupling in this context refers to the separation of two tasks in the learning process: learning the reward function and learning the optimal policy. |

解耦→泛化。智能体不是依赖于特定于任务的奖励函数,而是从专家演示中学习,专家演示提供了涵盖不同任务方面的各种状态-动作对。然后,代理通过模仿专家的行为来学习将状态映射到动作的策略。模仿学习中的解耦是指将学习过程从特定任务的奖励函数中分离出来,允许策略在不同任务之间进行推广,而无需明确依赖特定任务的奖励函数。通过解耦,代理可以从专家演示中学习,并学习适应各种情况的策略。解耦支持迁移学习,在一个领域中学习的策略可以通过最小的微调适应其他领域。通过学习与特定奖励函数无关的一般策略,代理可以利用它在一个任务中获得的知识在其他相关任务中表现良好。由于智能体不依赖于特定的奖励函数,它可以适应奖励函数或环境的变化,而无需进行重大的再培训。这使得学到的策略在不同的环境中更加健壮和一般化。这里的解耦是指学习过程中两个任务的分离:学习奖励函数和学习最优策略。 |

泛化→新兴行为:从简单规则或元素间的互动中产生复杂行为,模型在不同复杂层面上学习普适原则,促进跨领域知识迁移,导致涌现特性

泛化解释了如何从简单组件或规则中产生新兴属性或行为。通过识别系统行为的基本元素或规则,并观察这些简单组件或规则如何相互作用,从而导致复杂行为的产生。

泛化跨越不同复杂性水平,使系统能够学习适用于这些水平的一般原则,从而实现新兴属性。这有助于系统在新情境中适应,展现了从简单规则到复杂行为的新兴过程。

| Generalization → Emergent Behavior. Generalization explains how emergent properties or behaviors can arise from simpler components or rules. The key idea lies in identifying the basic elements or rules that govern the behavior of the system, such as individual neurons or basic algorithms. Consequently, by observing how these simple components or rules interact with one another. These interactions of these components often lead to the emergence of complex behaviors, which are not predictable by examining individual components alone. Generalization across different levels of complexity allows a system to learn general principles applicable across these levels, leading to emergent properties. This enables the system to adapt to new situations, demonstrating the emergence of more complex behaviors from simpler rules. Furthermore, the ability to generalize across different complexity levels facilitates knowledge transfer from one domain to another, which contributes to the emergence of complex behaviors in new contexts as the system adapts |

概括→涌现行为。泛化解释了如何从更简单的组件或规则中产生紧急属性或行为。关键思想在于识别控制系统行为的基本元素或规则,例如单个神经元或基本算法。因此,通过观察这些简单的组件或规则如何相互作用。这些组件之间的相互作用经常导致复杂行为的出现,这些行为是无法通过单独检查单个组件来预测的。跨越不同复杂级别的泛化允许系统学习适用于这些级别的一般原则,从而产生紧急属性。这使系统能够适应新的情况,证明从更简单的规则中出现更复杂的行为。此外,跨越不同复杂程度的泛化能力促进了知识从一个领域到另一个领域的转移,这有助于在系统适应的新环境中出现复杂行为 |

Figure 3: Example of the Emergent Interactive Mechanism using an agent to identify text relevant to the image from candidates. The task involves using a multi-modal AI agent from the web and human-annotated knowledge interaction samples to incorporate external world information.图3:使用代理识别候选图像相关文本的紧急交互机制示例。该任务涉及使用来自网络的多模态AI代理和人类注释的知识交互样本来整合外部世界信息。

2.2.5 Inference Augmentation推理增强

推理增强是提升AI代理商推理能力的关键,通过数据增强、算法增强、人机协作、实时反馈集成、跨领域知识迁移、特定用例定制、伦理和偏见考虑以及持续学习和适应等多种方法实现。这些方法有助于AI在不熟悉或复杂的决策场景中提高性能和准确性,确保其输出结果的可靠性。

| The inference ability of an AI agent lies in its capacity to interpret, predict, and respond based on its training and input data. While these capabilities are advanced and continually improving, it’s important to recognize their limitations and the influence of the underlying data they are trained on. Particularly, in the context of large language models, it refers to its capacity to draw conclusions, make predictions, and generate responses based on the data it has been trained on and the input it receives. Inference augmentation in AI agents refers to enhancing the AI’s natural inference abilities with additional tools, techniques, or data to improve its performance, accuracy, and utility. This can be particularly important in complex decision-making scenarios or when dealing with nuanced or specialized content. We denote particularly important sources for inference augmentation below: |

AI代理的推理能力在于其基于训练和输入数据的解释、预测和响应能力。虽然这些功能是先进的,并且在不断改进,但重要的是要认识到它们的局限性以及它们所训练的底层数据的影响。特别是,在大型语言模型的上下文中,它指的是它根据训练过的数据和接收到的输入得出结论、做出预测和生成响应的能力。AI代理中的推理增强是指通过额外的工具、技术或数据来增强人工智能的自然推理能力,以提高其性能、准确性和实用性。这在复杂的决策场景或处理微妙或专门的内容时尤为重要。我们在下面列出了特别重要的推理增强源: |

数据增强:引入额外数据提供更多上下文,帮助AI在不熟悉领域做出更明智推理。

算法增强:改进AI基础算法以更精准推理,包括更先进的机器学习模型等。

| Data Enrichment. Incorporating additional, often external, data sources to provide more context or background can help the AI agent make more informed inferences, especially in areas where its training data may be limited. For example, AI agents can infer meaning from the context of a conversation or text. They analyze the given information and use it to understand the intent and relevant details of user queries. These models are proficient at recognizing patterns in data. They use this ability to make inferences about language, user behavior, or other relevant phenomena based on the patterns they’ve learned during training. Algorithm Enhancement. Improving the AI’s underlying algorithms to make better inferences. This could involve using more advanced machine learning models, integrating different types of AI (like combining NLP with image recognition), or updating algorithms to better handle complex tasks. Inference in language models involves understanding and generating human language. This includes grasping nuances like tone, intent, and the subtleties of different linguistic constructions. |

数据浓缩。结合额外的(通常是外部的)数据源来提供更多的上下文或背景,可以帮助AI代理做出更明智的推断,特别是在其训练数据可能有限的领域。例如,AI代理可以从对话或文本的上下文中推断出意义。他们分析给定的信息,并使用它来理解用户查询的意图和相关细节。这些模型擅长识别数据中的模式。他们利用这种能力对语言、用户行为或其他相关现象进行推断,这些推断是基于他们在训练中所学到的模式。 算法改进。改进人工智能的底层算法,以做出更好的推断。这可能涉及使用更先进的机器学习模型,集成不同类型的人工智能(如将NLP与图像识别相结合),或更新算法以更好地处理复杂任务。语言模型中的推理涉及理解和生成人类语言。这包括把握语气、意图等细微差别,以及不同语言结构的微妙之处。 |

人机协作:在关键领域如伦理和创造性任务中,人类指导可补充AI推理

实时反馈集成:利用实时用户或环境反馈提高推理性能,如AI根据用户反馈调整推荐

跨领域知识迁移:将一个领域的知识应用于另一个领域以改善推理

特定用例定制:针对特定应用或行业定制AI推理能力,如法律分析、医疗诊断。

| Human-in-the-Loop (HITL). Involving human input to augment the AI’s inferences can be particularly useful in areas where human judgment is crucial, such as ethical considerations, creative tasks, or ambiguous scenarios. Humans can provide guidance, correct errors, or offer insights that the agent would not be able to infer on its own. Real-Time Feedback Integration. Using real-time feedback from users or the environment to enhance inferences is another promising method for improving performance during inference. For example, an AI might adjust its recommendations based on live user responses or changing conditions in a dynamic system. Or, if the agent is taking actions in a simulated environment that break certain rules, the agent can be dynamically given feedback to help correct itself. Cross-Domain Knowledge Transfer. Leveraging knowledge or models from one domain to improve inferences in another can be particularly helpful when producing outputs within a specialized discipline. For instance, techniques developed for language translation might be applied to code generation, or insights from medical diagnostics could enhance predictive maintenance in machinery. Customization for Specific Use Cases. Tailoring the AI’s inference capabilities for particular applications or industries can involve training the AI on specialized datasets or fine-tuning its models to better suit specific tasks, such as legal analysis, medical diagnosis, or financial forecasting. Since the particular language or information within one domain can greatly contrast with the language from other domains, it can be beneficial to finetune the agent on domain-specific information. |

Human-in-the-Loop (HITL)。在人类判断至关重要的领域,比如道德考虑、创造性任务或模棱两可的场景,让人类输入来增强人工智能的推理能力尤其有用。人类可以提供指导,纠正错误,或者提供智能体自己无法推断的见解。 实时反馈集成。使用来自用户或环境的实时反馈来增强推理是另一种在推理过程中提高性能的有前途的方法。例如,人工智能可能会根据实时用户响应或动态系统中不断变化的条件调整其建议。或者,如果代理在模拟环境中采取了违反某些规则的行动,代理可以动态地获得反馈以帮助纠正自己。 跨领域知识转移。利用一个领域的知识或模型来改进另一个领域的推论,在产生专业学科的输出时特别有用。例如,为语言翻译开发的技术可以应用于代码生成,或者来自医疗诊断的见解可以增强机器的预测性维护。 针对特定用例的定制。为特定的应用程序或行业定制人工智能的推理能力,包括在专门的数据集上训练人工智能,或微调其模型,以更好地适应特定的任务,如法律分析、医疗诊断或财务预测。由于一个领域内的特定语言或信息可能与其他领域的语言有很大的差异,因此根据特定于领域的信息对代理进行微调是有益的。 |

伦理和偏见考虑:确保增强过程不引入新偏见或伦理问题,考虑数据源和算法对公平性的影响

持续学习和适应:定期更新AI能力,以跟上发展、数据变化和用户需求

| Ethical and Bias Considerations. It is important to ensure that the augmentation process does not introduce new biases or ethical issues. This involves careful consideration of the sources of additional data or the impact of the new inference augmentation algorithms on fairness and transparency. When making inferences, especially about sensitive topics, AI agents must sometimes navigate ethical considerations. This involves avoiding harmful stereotypes, respecting privacy, and ensuring fairness. Continuous Learning and Adaptation. Regularly updating and refining the AI’s capabilities to keep up with new developments, changing data landscapes, and evolving user needs. |

道德和偏见考虑。重要的是要确保扩增过程不会引入新的偏见或伦理问题。这包括仔细考虑额外数据的来源或新的推理增强算法对公平性和透明度的影响。在进行推理时,特别是在敏感话题上,AI代理有时必须考虑道德因素。这包括避免有害的刻板印象、尊重隐私和确保公平。 持续学习和适应。定期更新和完善人工智能的能力,以跟上新的发展、不断变化的数据环境和不断发展的用户需求。 |

| In summmary, winference augmentation in AI agents involves methods in which their natural inference abilities can be enhanced through additional data, improved algorithms, human input, and other techniques. Depending on the use-case, this augmentation is often essential for dealing with complex tasks and ensuring accuracy in the agent’s outputs. |

综上所述,人工智能智能体的推理增强包括通过额外的数据、改进的算法、人工输入和其他技术来增强其自然推理能力的方法。根据用例的不同,这种增强对于处理复杂任务和确保代理输出的准确性通常是必不可少的。 |

2.2.6 Regulation监管

开发利用LLM或VLM的下一代AI驱动的人机交互管道:与机器的有效沟通和互动,对话能力来识别并满足人类需求,并采用提示工程、高层级验证来限制

文章提出了一个利用LLM或VLM的下一代AI驱动的人机交互管道,旨在与人类玩家有意义地交流和互动。为了应对LLM/VLM的不确定性问题,文章提出了提示工程和高层级验证等方法,以确保系统的稳定运行。

为了加快代理AI的开发并简化工作流程,提出了开发新一代AI赋能的代理交互管道。该系统将实现人与机器的有效沟通和互动,利用LLM或VLM的对话能力来识别并满足人类需求,并在请求时执行适当动作。在物理机器人设置中,由于LLM/VLM输出的不可预测性,采用了提示工程来限制其关注点,并包含解释性文本以提高输出的稳定性和可理解性。此外,通过实施预执行验证和修改功能,可在人类指导下更有效地操作系统。