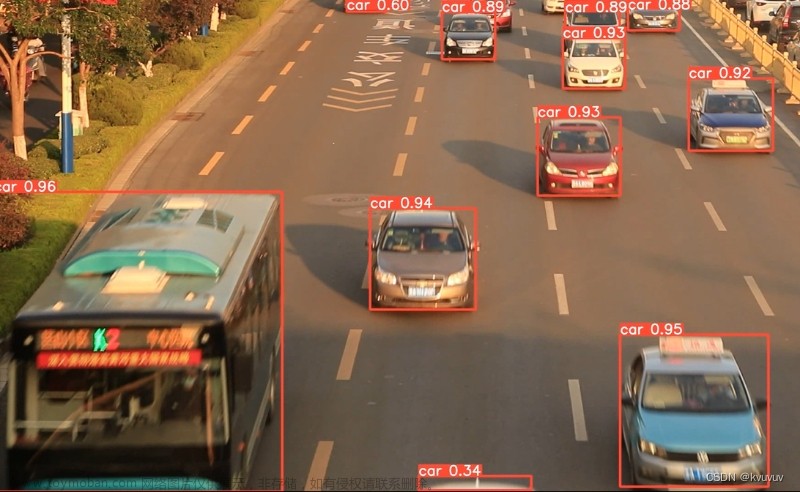

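This series documents my self-study of the YOLO family of object detection algorithms, and their code implementation, during my master's studies. The implementation borrows from the ultralytics YOLO source code on GitHub, with parts of the source removed to fit my own research needs.

The series mainly uses YOLOv5 as the example; other YOLO versions can then be implemented by modifying or adding the relevant modules.

Articles in this series:

YOLOv5 Algorithm Implementation (1): Framework Overview

YOLOv5 Algorithm Implementation (2): Model Construction

YOLOv5 Algorithm Implementation (3): Dataset Loading

YOLOv5 Algorithm Implementation (4): Positive Sample Matching and Loss Computation

YOLOv5 Algorithm Implementation (5): Post-processing of Predictions

YOLOv5 Algorithm Implementation (6): Evaluation Metrics and Their Implementation

YOLOv5 Algorithm Implementation (7): Model Training

YOLOv5 Algorithm Implementation (8): Model Validation

YOLOv5 Algorithm Implementation (9): Model Prediction

0 Introduction

This article combines the functionality built in the previous articles to implement model training. The training logic is shown in Figure 1.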


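1 Hyperparameter file

The YOLOv5 hyperparameters mainly cover the learning rate, optimizer, warmup, loss gains, positive-sample matching thresholds, and data augmentation. One of the hyperparameter files from the source code, with the meaning of each parameter, is shown below: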

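# YOLOv5 🚀 by Ultralytics, GPL-3.0 license
# Hyperparameters for VOC training

# Learning rate
lr0: 0.00334  # initial learning rate
lrf: 0.15135  # final learning rate ratio (final LR = lr0 * lrf)

# Optimizer (SGD, Adam, AdamW)
momentum: 0.74832  # SGD momentum/Adam beta1
weight_decay: 0.00025  # optimizer weight decay 5e-4 (regularization coefficient, guards against overfitting)

# Warmup
warmup_epochs: 3.3835  # number of warmup epochs
warmup_momentum: 0.59462  # initial momentum during warmup
warmup_bias_lr: 0.18657  # initial bias learning rate during warmup

# Loss gains
box: 0.02  # box loss gain
cls: 0.21638  # cls loss gain
cls_pw: 0.5  # cls BCELoss positive_weight
obj: 0.51728  # obj loss gain (scale with pixels)
obj_pw: 0.67198  # obj BCELoss positive_weight
fl_gamma: 0.0  # focal loss gamma (efficientDet default gamma=1.5)

# Positive-sample matching thresholds
iou_t: 0.2  # IoU training threshold
anchor_t: 3.3744  # anchor-multiple threshold

# Data augmentation
## HSV color-space augmentation
hsv_h: 0.01041  # hue augmentation (fraction)
hsv_s: 0.54703  # saturation augmentation (fraction)
hsv_v: 0.27739  # value augmentation (fraction)
## Affine transforms
degrees: 0.0  # image rotation (+/- deg)
translate: 0.04591  # image translation (+/- fraction)
scale: 0.75544  # image scale for the affine transform (+/- gain)
shear: 0.0  # image shear coefficient of the affine matrix (+/- deg)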
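To make `lr0` and `lrf` concrete, here is a minimal sketch of how they shape the learning rate over training. The linear multiplier is the one used in train.py in the next section. The cosine formula is an assumption about what `one_cycle(1, lrf, epochs)` computes, and the helper names `linear_lf` and `cosine_lf` are hypothetical, introduced only for this illustration.

```python
import math


def linear_lf(lrf: float, epochs: int):
    """LR multiplier that decays linearly from 1.0 to lrf over `epochs`."""
    return lambda x: (1 - x / epochs) * (1.0 - lrf) + lrf


def cosine_lf(lrf: float, epochs: int):
    """Assumed cosine variant: multiplier decays from 1.0 to lrf along a half cosine."""
    return lambda x: ((1 - math.cos(x * math.pi / epochs)) / 2) * (lrf - 1.0) + 1.0


lr0, lrf, epochs = 0.00334, 0.15135, 300  # values from the hyperparameter file above

for name, lf in (("linear", linear_lf(lrf, epochs)), ("cosine", cosine_lf(lrf, epochs))):
    # Learning rate at the first epoch, the midpoint, and the end of the schedule
    print(name, [round(lr0 * lf(e), 6) for e in (0, epochs // 2, epochs)])
```

Both schedules start at `lr0` and end at `lr0 * lrf`; they differ only in how quickly the rate falls in between.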

2 Model training (train.py)

import argparse
import datetime
import glob
import math
import os

import numpy as np
import torch
import yaml
from torch.optim import lr_scheduler

# Project-specific helpers (Model, LoadImagesAndLabels, ComputeLoss, ComputeAP, smart_optimizer,
# one_cycle, colorstr, check_file, parse_data_cfg, utils) come from the earlier articles in this
# series; import them from wherever they live in your project.


def train(hyp):
    # ----------------------------------------------------------------------------------
    # Parse options and hyperparameters
    # ----------------------------------------------------------------------------------
    # Training device
    device = torch.device(opt.device if torch.cuda.is_available() else "cpu")
    print("Using {} device training.".format(device.type))
    # Directory for saved weights
    wdir = "weights" + os.sep  # weights dir
    # Results txt (loss and learning rate log), loss/LR curve figure, weight save path
    results_file, save_file_path, save_path = opt.results_file, opt.save_file_path, opt.save_path
    # Model structure file
    cfg = opt.yaml
    # Path to the data file, which stores the training and validation dataset paths
    data = opt.data
    # Number of epochs and batch size
    epochs = opt.epochs
    batch_size = opt.batch_size
    # Path of the initial weights
    weights = opt.weights  # initial training weights
    # Image size for training and testing
    imgsz_train = opt.img_size
    imgsz_test = opt.img_size
    # The image size must be a multiple of 32
    gs = 32  # (pixels) grid size
    assert math.fmod(imgsz_test, gs) == 0, "--img-size %g must be a %g-multiple" % (imgsz_test, gs)

    # Data dictionary: "classes": number of classes, "train": training set path,
    # "valid": validation set path, "names": class names
    data_dict = parse_data_cfg(data)
    train_path = data_dict['train']
    val_path = data_dict['valid']
    # Number of classes
    nc = opt.nc
    # Remove previous results
    for f in glob.glob(results_file):
        os.remove(f)

    # ----------------------------------------------------------------------------------
    # Model initialization (load pretrained weights)
    # ----------------------------------------------------------------------------------
    # Build the model
    model = Model(cfg=cfg, ch=3, nc=nc).to(device)
    # Epoch at which training starts
    start_epoch = 0
    best_map = 0
    pretrain = False
    if weights.endswith(".pt") or weights.endswith(".pth"):
        pretrain = True
        ckpt = torch.load(weights, map_location=device)
        # load model: keep only the tensors whose element count matches the current model
        try:
            ckpt["model"] = {k: v for k, v in ckpt["model"].items() if model.state_dict()[k].numel() == v.numel()}
            model.load_state_dict(ckpt["model"], strict=False)
        except KeyError as e:
            s = "%s is not compatible with %s. Specify --weights '' or specify a --cfg compatible with %s. " \
                "See https://github.com/ultralytics/yolov3/issues/657" % (opt.weights, opt.cfg, opt.weights)
            raise KeyError(s) from e
        # load results
        if ckpt.get("training_results") is not None:
            with open(results_file, "w") as file:
                file.write(ckpt["training_results"])  # write results.txt
        # Restore the best mAP
        if "best_map" in ckpt.keys():
            best_map = ckpt['best_map']
        # epochs
        start_epoch = ckpt["epoch"] + 1
        if epochs < start_epoch:
            print('%s has been trained for %g epochs. Fine-tuning for %g additional epochs.' %
                  (opt.weights, ckpt['epoch'], epochs))
            epochs += ckpt['epoch']  # finetune additional epochs
        del ckpt
        # Start from a lower learning rate when pretrained weights are loaded
        hyp['lr0'] = hyp['lr0'] * hyp['lrf']
        print(colorstr('Pretrain') + ': Successful load pretrained weights.')

    # ----------------------------------------------------------------------------------
    # Optimizer and learning-rate schedule
    # ----------------------------------------------------------------------------------
    nbs = 64  # nominal batch size: number of images accumulated before one optimizer update
    accumulate = max(round(nbs / batch_size), 1)  # accumulate n times before optimizer update (bs 64)
    hyp['weight_decay'] *= batch_size * accumulate / nbs  # scale weight_decay
    # Build the optimizer
    optimizer = smart_optimizer(model, opt.optimizer, hyp['lr0'], hyp['momentum'], hyp['weight_decay'])
    # Scheduler
    if opt.cos_lr:
        lf = one_cycle(1, hyp['lrf'], epochs)  # cosine 1->hyp['lrf']
    else:
        lf = lambda x: (1 - x / epochs) * (1.0 - hyp['lrf']) + hyp['lrf']  # linear
    scheduler = lr_scheduler.LambdaLR(optimizer, lr_lambda=lf)
    scheduler.last_epoch = start_epoch  # epoch from which the schedule resumes

    # ----------------------------------------------------------------------------------
    # Load the datasets
    # ----------------------------------------------------------------------------------
    train_dataset = LoadImagesAndLabels(train_path, imgsz_train, batch_size,
                                        augment=True,
                                        hyp=hyp,  # augmentation hyperparameters
                                        rect=False,  # rectangular training
                                        cache_images=opt.cache_images)
    # dataloader
    num_workers = 0  # number of workers
    train_dataloader = torch.utils.data.DataLoader(train_dataset,
                                                   batch_size=batch_size,
                                                   num_workers=num_workers,
                                                   # Shuffle=True unless rectangular training is used
                                                   shuffle=not opt.rect,
                                                   pin_memory=True,
                                                   collate_fn=train_dataset.collate_fn)
    # Validation dataset, used to compute mAP and to pick the best weights by mAP
    val_dataset = LoadImagesAndLabels(val_path, imgsz_test, batch_size,
                                      hyp=hyp,
                                      rect=True,
                                      cache_images=opt.cache_images)
    val_dataloader = torch.utils.data.DataLoader(val_dataset,
                                                 batch_size=batch_size,
                                                 num_workers=num_workers,
                                                 pin_memory=True,
                                                 collate_fn=val_dataset.collate_fn)
    # train_dataset.labels has shape (1203,): one (nt, 5) label array for each of the 1203 images
    # np.concatenate joins all labels along dimension 0 -> (1880, 5)
    labels = np.concatenate(train_dataset.labels, 0)
    mlc = int(labels[:, 0].max())  # largest class label
    assert mlc < nc, f'Label class {mlc} exceeds nc={nc} in {data}. Possible class labels are 0-{nc - 1}'

    # ----------------------------------------------------------------------------------
    # Model parameter definitions
    # ----------------------------------------------------------------------------------
    nl = model.model[-1].nl  # number of output feature maps
    hyp['box'] *= 3 / nl  # scale to layers
    hyp['cls'] *= nc / 80 * 3 / nl  # scale to classes and layers
    hyp['obj'] *= (imgsz_test / 640) ** 2 * 3 / nl  # scale to image size and layers
    hyp['label_smoothing'] = opt.label_smoothing
    model.nc = nc  # attach number of classes to model
    model.hyp = hyp  # attach hyperparameters to model
    compute_loss = ComputeLoss(model)

    # ----------------------------------------------------------------------------------
    # Start training
    # ----------------------------------------------------------------------------------
    nb = len(train_dataloader)  # number of batches; the dataloader has already grouped the data by batch_size
    nw = max(round(hyp['warmup_epochs'] * nb), 100)  # number of warmup iterations, max(3 epochs, 100 iterations)
    last_opt_step = -1  # iteration index of the most recent parameter update
    train_loss, train_box_loss, train_obj_loss, train_cls_loss = [], [], [], []
    learning_rate = []
    if opt.savebest:
        # ComputeAP returns several metrics; index 1 is mAP@0.50, the same entry used after each epoch
        best_map = ComputeAP(model, val_dataloader, device=device)[1]
        print(f"{colorstr('Initialize best_map')}: mAP@0.50 = {best_map: .5f}")
    print(f'Image sizes {imgsz_train} train, {imgsz_test} val\n'
          f'Using {num_workers} dataloader workers\n'
          f'Starting training for {epochs} epochs...')

    for epoch in range(start_epoch, epochs):
        model.train()
        # Console logging during training
        metric_logger = utils.MetricLogger(delimiter=" ")
        metric_logger.add_meter('lr', utils.SmoothedValue(window_size=1, fmt='{value:.6f}'))
        header = 'Epoch: [{}]'.format(epoch)
        # Running mean of the losses over the current epoch
        mloss = torch.zeros(4, device=device)
        now_lr = 0.
        optimizer.zero_grad()
        # imgs: [batch_size, 3, img_size, img_size]
        # targets: [num_obj, 6], where the 6 values are (img_index, class_index, x, y, w, h)
        # (x, y, w, h) are relative coordinates, normalized after the image has been resized
        # paths: list of image file paths
        for i, (imgs, targets, paths, _, _) in enumerate(metric_logger.log_every(train_dataloader, 50, header)):
            ni = i + nb * epoch  # number integrated batches (since train start)
            imgs = imgs.to(device).float() / 255.0  # uint8 to float32, 0 - 255 to 0.0 - 1.0
            # ----------------------------------------------------------------------------------
            # Warmup
            # ----------------------------------------------------------------------------------
            if ni <= nw:
                xi = [0, nw]  # x interp
                # compute_loss.gr = np.interp(ni, xi, [0.0, 1.0])  # iou loss ratio (obj_loss = 1.0 or iou)
                accumulate = max(1, np.interp(ni, xi, [1, nbs / batch_size]).round())
                for j, x in enumerate(optimizer.param_groups):
                    # bias lr falls from warmup_bias_lr to lr0, all other lrs rise from 0.0 to lr0
                    x['lr'] = np.interp(ni, xi, [hyp['warmup_bias_lr'] if j == 0 else 0.0, x['initial_lr'] * lf(epoch)])
                    if 'momentum' in x:
                        x['momentum'] = np.interp(ni, xi, [hyp['warmup_momentum'], hyp['momentum']])
            # Forward pass
            pred = model(imgs)
            # Loss computation
            loss_dict = compute_loss(pred, targets.to(device))
            losses = sum(loss for loss in loss_dict.values())
            loss_items = torch.cat((loss_dict["box_loss"],
                                    loss_dict["obj_loss"],
                                    loss_dict["class_loss"],
                                    losses)).detach()
            mloss = (mloss * i + loss_items) / (i + 1)  # update mean losses
            # Backward pass
            losses *= batch_size
            losses.backward()
            # Update the parameters once every `accumulate` iterations
            if ni - last_opt_step >= accumulate:
                optimizer.step()
                optimizer.zero_grad()
                last_opt_step = ni  # record this update; otherwise every later iteration would step
            metric_logger.update(loss=losses, **loss_dict)
            now_lr = optimizer.param_groups[0]["lr"]
            metric_logger.update(lr=now_lr)
            # end batch ----------------------------------------------------------------------

        scheduler.step()
        train_loss.append(mloss.tolist()[-1])
        learning_rate.append(now_lr)
        result_mAP = ComputeAP(model, val_dataloader, device=device)
        voc_mAP = result_mAP[1]  # @0.50
        # write into txt
        with open(results_file, "a") as f:
            # box_loss, obj_loss, cls_loss, train_loss, lr, mAP@0.50
            result_info = [str(round(v, 4)) for v in mloss.tolist()] + \
                          [str(round(now_lr, 6)), str(round(voc_mAP, 6))]
            txt = "epoch:{} {}".format(epoch, ' '.join(result_info))
            f.write(txt + "\n")
        if opt.savebest:
            # Save only when the mAP@0.50 improves
            if voc_mAP > best_map:
                print(f"{colorstr('Save best_map Weight')}: update mAP@0.50 from {best_map: .5f} to {voc_mAP: .5f}")
                best_map = voc_mAP
                with open(results_file, 'r') as f:
                    save_files = {
                        'model': model.state_dict(),
                        'optimizer': optimizer.state_dict(),
                        'training_results': f.read(),
                        'epoch': epoch,
                        'best_map': best_map
                    }
                    torch.save(save_files, save_path.format('best_map'))
        else:
            # Otherwise save every 20 epochs and at the final epoch
            if (epoch + 1) % 20 == 0 or epoch == epochs - 1:
                with open(results_file, 'r') as f:
                    save_files = {
                        'model': model.state_dict(),
                        'training_results': f.read(),
                        'epoch': epoch}
                    torch.save(save_files, save_path.format(epoch))
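The gradient-accumulation bookkeeping in the batch loop (`nbs`, `accumulate`, `last_opt_step`) can be checked without a model. The sketch below is a torch-free illustration that only records the iterations at which `optimizer.step()` would fire. The function name `optimizer_step_iterations` is hypothetical, warmup's gradual ramp of `accumulate` is ignored, and it assumes `last_opt_step` is advanced after every update, as in the upstream YOLOv5 loop.

```python
def optimizer_step_iterations(num_iters: int, batch_size: int, nbs: int = 64) -> list:
    """Return the iteration indices at which a parameter update would happen."""
    accumulate = max(round(nbs / batch_size), 1)  # batches to accumulate per update
    last_opt_step = -1
    steps = []
    for ni in range(num_iters):
        # The forward and backward passes would run here on every iteration,
        # so gradients keep adding up between updates.
        if ni - last_opt_step >= accumulate:
            steps.append(ni)    # stands in for optimizer.step(); optimizer.zero_grad()
            last_opt_step = ni  # without this, every later iteration would trigger a step
    return steps


# With batch_size=16 the gradients of 4 batches (64 images) feed each update
print(optimizer_step_iterations(num_iters=50, batch_size=16))
```

With the default `--batch-size 4` used in this article, `accumulate` becomes 16, so the effective batch size stays at the nominal 64 images regardless of what fits in GPU memory.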

if __name__ == '__main__':
    parser = argparse.ArgumentParser()
    # -----------------------------------------
    file = "yolov5s"
    weight_file = f"weights/{file}"  # directory where weights are stored
    result_file = f'results/{file}'  # directory where training loss, mAP, etc. are saved
    os.makedirs(weight_file, exist_ok=True)
    os.makedirs(result_file, exist_ok=True)
    # -----------------------------------------
    parser.add_argument('--epochs', type=int, default=300)
    parser.add_argument('--batch-size', type=int, default=4)
    parser.add_argument('--nc', type=int, default=3)
    yaml_path = f'cfg/models/{file}.yaml'
    parser.add_argument('--yaml', type=str, default=yaml_path, help="model.yaml path")
    parser.add_argument('--data', type=str, default='data/my_data.data', help='*.data path')
    parser.add_argument('--hyp', type=str, default='cfg/hyps/hyp.scratch-med.yaml', help='hyperparameters path')
    parser.add_argument('--optimizer', type=str, choices=['SGD', 'Adam', 'AdamW'], default='SGD', help='optimizer')
    # type=bool would turn any non-empty string (even "False") into True, so flag actions are used instead.
    # BooleanOptionalAction needs Python 3.9+ and also provides --no-cos-lr.
    parser.add_argument('--cos-lr', action=argparse.BooleanOptionalAction, default=True, help='cosine LR scheduler')
    parser.add_argument('--label-smoothing', type=float, default=0.0, help='Label smoothing epsilon')
    parser.add_argument('--img-size', type=int, default=640, help='test size')
    parser.add_argument('--rect', action='store_true', help='rectangular training')
    parser.add_argument('--savebest', action='store_true', help='only save best checkpoint')
    # When memory allows, enable this to load the dataset into memory so that images are not
    # read from disk during training, which speeds training up
    parser.add_argument('--cache-images', action='store_true', help='cache images for faster training')
    # Pretrained weights
    weight = f'weights/{file}/{file}.pt'
    parser.add_argument('--weights', type=str, default=weight if os.path.exists(weight) else "", help='initial weights path')
    parser.add_argument('--device', default='cuda:0', help='device id (i.e. 0 or 0,1 or cpu)')
    # Paths for saved results
    timestamp = datetime.datetime.now().strftime("%Y%m%d-%H%M%S")
    results_file = f"./results/{file}/results{timestamp}.txt"
    parser.add_argument("--results_file", default=results_file, help="save results files")
    save_file_path = f'./results/{file}/loss_and_lr{timestamp}.png'
    parser.add_argument('--save_file_path', default=save_file_path, help="save loss and lr fig")
    save_path = f"./weights/{file}/{file}-" + "{}.pt"
    parser.add_argument('--save_path', default=save_path, help="weight save path")
    opt = parser.parse_args()
    # Check that the files exist
    opt.cfg = check_file(opt.yaml)
    opt.data = check_file(opt.data)
    opt.hyp = check_file(opt.hyp)
    print(opt)
    with open(opt.hyp) as f:
        hyp = yaml.safe_load(f)
    train(hyp)
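The warmup branch of the training loop (`if ni <= nw`) is a pair of linear interpolations over the iteration counter. A small standalone sketch makes the direction of each ramp visible. It uses a hand-written two-point `lerp` as a stand-in for `np.interp`, and `warmup_step` is a hypothetical helper, not part of train.py. The target learning rate, which in the loop is `x['initial_lr'] * lf(epoch)`, is simplified here to a constant.

```python
def lerp(x, x0, x1, y0, y1):
    """Two-point linear interpolation, clamped outside [x0, x1] like np.interp."""
    t = min(max((x - x0) / (x1 - x0), 0.0), 1.0)
    return y0 + t * (y1 - y0)


def warmup_step(ni, nw, hyp, lr_target, is_bias_group):
    """Learning rate and momentum at iteration ni of an nw-iteration warmup."""
    # The bias group starts high and falls; all other groups start at 0 and rise
    lr_start = hyp["warmup_bias_lr"] if is_bias_group else 0.0
    lr = lerp(ni, 0, nw, lr_start, lr_target)
    momentum = lerp(ni, 0, nw, hyp["warmup_momentum"], hyp["momentum"])
    return lr, momentum


# Values taken from the hyperparameter file in section 1
hyp = {"warmup_bias_lr": 0.18657, "warmup_momentum": 0.59462, "momentum": 0.74832}
lr_target = 0.00334  # simplification: lr0 with a schedule multiplier of 1.0

for ni in (0, 50, 100):
    bias_lr, momentum = warmup_step(ni, 100, hyp, lr_target, is_bias_group=True)
    weight_lr, _ = warmup_step(ni, 100, hyp, lr_target, is_bias_group=False)
    print(f"ni={ni:3d} bias_lr={bias_lr:.5f} weight_lr={weight_lr:.5f} momentum={momentum:.5f}")
```

All parameter groups meet at the target learning rate once `ni` reaches `nw`, after which the scheduler alone controls the rate.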
