ReLU

```python
import torch
from torch import nn

# The -0.5 entry makes this a float tensor
input = torch.tensor([[1, -0.5],
                      [-1, 3]])

# Reshape to (batch, channels, height, width) = (1, 1, 2, 2)
input = torch.reshape(input, (-1, 1, 2, 2))
print(input.shape)  # torch.Size([1, 1, 2, 2]); .shape is equivalent to .size()


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.relu1 = nn.ReLU()

    def forward(self, input):
        output = self.relu1(input)  # negative entries become 0
        return output


tudui = Tudui()
output = tudui(input)
print(output)  # tensor([[[[1., 0.], [0., 3.]]]])
```

Result

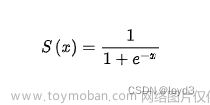
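
ReLU is applied element-wise as ReLU(x) = max(0, x). As a minimal sketch (the helper `manual_relu` is hypothetical and not part of the original code), the same result can be reproduced with `torch.clamp` and compared against `nn.ReLU`. The sketch also shows the `inplace` argument of `nn.ReLU`, which writes the result back into the input tensor instead of allocating a new one.

```python
import torch
from torch import nn


def manual_relu(x: torch.Tensor) -> torch.Tensor:
    # Hypothetical helper: ReLU(x) = max(0, x), element-wise
    return torch.clamp(x, min=0)


x = torch.tensor([[1, -0.5],
                  [-1, 3]]).reshape(-1, 1, 2, 2)

relu_out = nn.ReLU()(x)
manual_out = manual_relu(x)
print(torch.equal(relu_out, manual_out))  # True

# inplace=True overwrites its input, so work on a copy to keep x intact
y = x.clone()
nn.ReLU(inplace=True)(y)
print(y.flatten().tolist())  # [1.0, 0.0, 0.0, 3.0]
```

With the default `inplace=False`, the original tensor is left untouched, which is the safer choice when the input is needed later.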

Sigmoid

```python
import torch
import torchvision
from torch import nn
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

# The 2x2 tensor from the ReLU example is no longer used; CIFAR10 images are used instead.

# CIFAR10 test split; ToTensor() converts each image to a float tensor in [0, 1]
dataset = torchvision.datasets.CIFAR10(root='./data', train=False, download=True,
                                       transform=torchvision.transforms.ToTensor())
dataloader = DataLoader(dataset, batch_size=64, shuffle=True)


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.relu1 = nn.ReLU()  # kept from the ReLU example; not used in forward()
        self.sigmoid1 = nn.Sigmoid()

    def forward(self, input):
        output = self.sigmoid1(input)  # sigmoid applied element-wise
        return output


tudui = Tudui()
writer = SummaryWriter('./logs_relu')

step = 0
for data in dataloader:
    imgs, targets = data
    writer.add_images('input', imgs, step)  # imgs has shape (N, 3, 32, 32)
    output = tudui(imgs)
    writer.add_images('output', output, step)
    step += 1

writer.close()
```
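
Sigmoid is defined as σ(x) = 1 / (1 + e^(−x)), which maps every real number into the open interval (0, 1). That range is why the sigmoid output can be passed straight to `writer.add_images`, since float image tensors are expected to hold values in [0, 1]. A minimal check, assuming a hypothetical `manual_sigmoid` helper and a random tensor standing in for one CIFAR10 batch:

```python
import torch
from torch import nn


def manual_sigmoid(x: torch.Tensor) -> torch.Tensor:
    # Hypothetical helper: sigmoid(x) = 1 / (1 + exp(-x)), element-wise
    return 1 / (1 + torch.exp(-x))


x = torch.tensor([[1, -0.5],
                  [-1, 3]]).reshape(-1, 1, 2, 2)

sig_out = nn.Sigmoid()(x)
print(torch.allclose(sig_out, manual_sigmoid(x)))  # True
print(sig_out)  # every entry lies strictly between 0 and 1

# ToTensor() images lie in [0, 1], so sigmoid squeezes them into
# [sigmoid(0), sigmoid(1)] = [0.5, ~0.731]
torch.manual_seed(0)
imgs = torch.rand(4, 3, 32, 32)  # stand-in for a CIFAR10 batch
out = nn.Sigmoid()(imgs)
print(out.min().item(), out.max().item())
```

Because the inputs are already non-negative, the sigmoid output images would look lower in contrast than the originals.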

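Purpose: to introduce nonlinearity into the network. A stack of purely linear layers collapses into a single linear map, so it can only model linear relationships. The more nonlinearity the network has, the wider the range of curves and features it can fit, which is what allows the model to generalize well.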
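
The collapse of linear layers can be checked numerically: two `nn.Linear` layers applied back to back with no activation equal a single linear map with weight W2·W1 and bias W2·b1 + b2. A minimal sketch (layer sizes and the seed are arbitrary choices, not from the original):

```python
import torch
from torch import nn

torch.manual_seed(0)
lin1 = nn.Linear(4, 8)
lin2 = nn.Linear(8, 3)
x = torch.randn(5, 4)

with torch.no_grad():
    # Two linear layers with nothing in between
    stacked = lin2(lin1(x))

    # One linear map with merged weight and bias
    W = lin2.weight @ lin1.weight            # shape (3, 4)
    b = lin2.weight @ lin1.bias + lin2.bias  # shape (3,)
    merged = x @ W.T + b
    print(torch.allclose(stacked, merged, atol=1e-6))  # True

    # A ReLU between the layers breaks this equivalence
    # (unless every hidden value happens to be positive)
    with_relu = lin2(torch.relu(lin1(x)))
    print(torch.allclose(with_relu, merged, atol=1e-6))
```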

Below are the ReLU results.

Article source: https://www.toymoban.com/news/detail-816180.html