1. 需要的类库

import requests

from bs4 import BeautifulSoup

import pandas as pd

2. 请求地址

def fetch_data():

url = "https://bbs.xxx.com/" # Replace with the actual base URL

response = requests.get(url)

if response.status_code == 200:

return response.content

else:

print(f"Error fetching data. Status code: {response.status_code}")

return None

3. 编码

def parse_html(html_content, base_url):

soup = BeautifulSoup(html_content, 'html.parser')

items = soup.find_all('div', class_='text-list-model')

first_item = items[0]

contents = first_item.contents

data = []

for item in contents:

if item.select_one('.t-title') == None:

continue

title = item.select_one('.t-title').text.strip()

relative_url = item.select_one('a')['href']

full_url = base_url + relative_url

lights = item.select_one('.t-lights').text.strip()

replies = item.select_one('.t-replies').text.strip()

data.append({

'Title': title,

'URL': full_url,

'Lights': lights,

'Replies': replies

})

return data

注意:分析标签,这里加了非意向标签的跳过处理

4. 导出表格

def create_excel(data):

df = pd.DataFrame(data)

df.to_excel('hupu-top.xlsx', index=False)

print("Excel file created successfully.")

测试文章来源:https://www.toymoban.com/news/detail-818677.html

base_url = "https://bbs.xx.com" #替换成虎pu首页地址

html_content = fetch_data()

if html_content:

forum_data = parse_html(html_content, base_url)

create_excel(forum_data)

else:

print("Failed to create Excel file.")

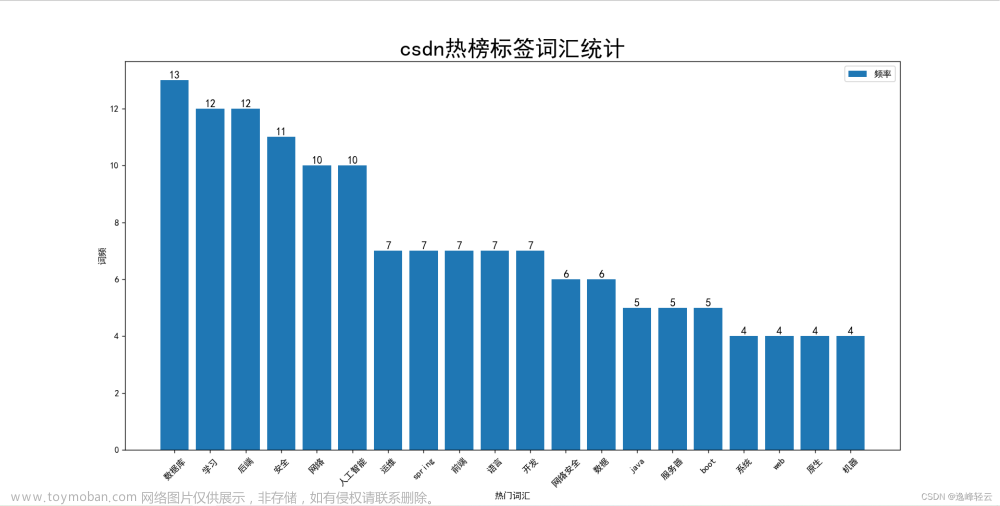

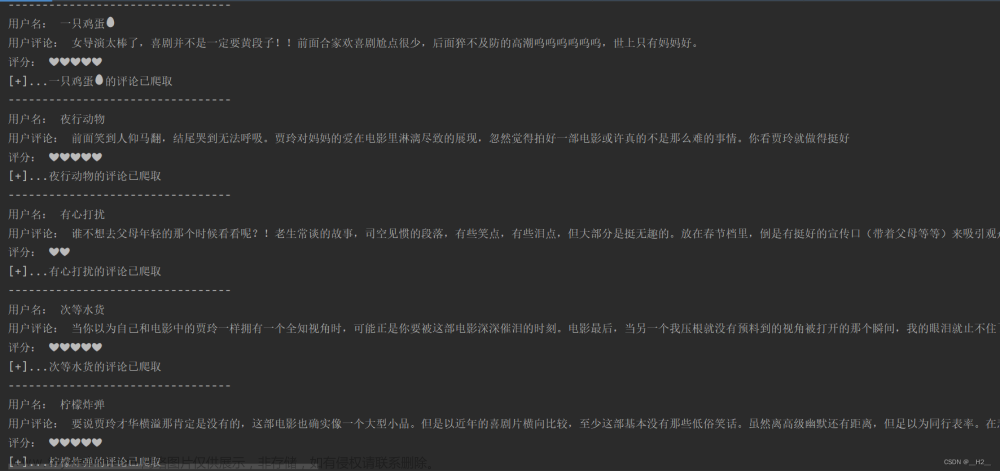

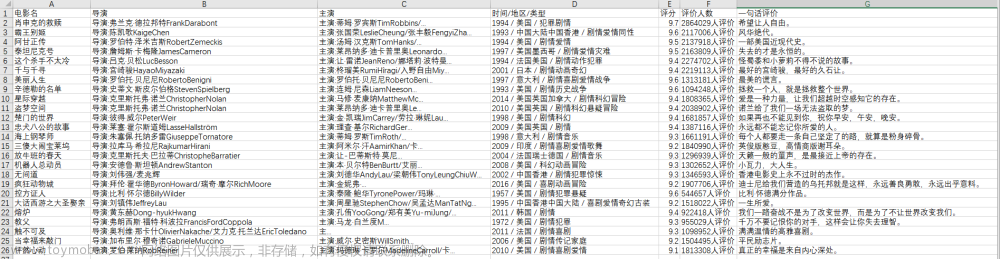

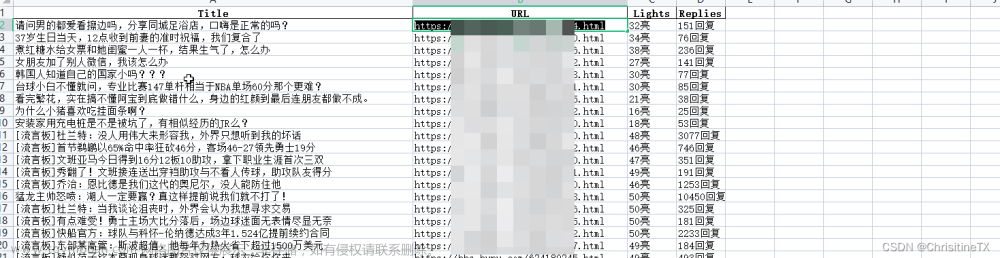

5. 成果展示

文章来源地址https://www.toymoban.com/news/detail-818677.html

文章来源地址https://www.toymoban.com/news/detail-818677.html

到了这里,关于python爬虫实战(8)--获取虎pu热榜的文章就介绍完了。如果您还想了解更多内容,请在右上角搜索TOY模板网以前的文章或继续浏览下面的相关文章,希望大家以后多多支持TOY模板网!