EMS5730 Spring 2024 Homework #0

Release date: Jan 10, 2024

Due date: Jan 21, 2024 (Sunday) 23:59 pm

(Note: The course add-drop period ends at 5:30 pm on Jan 22.)

No late homework will be accepted!

Every Student MUST include the following statement, together with his/her signature in the

submitted homework.

I declare that the assignment submitted on the Elearning system is

original except for source material explicitly acknowledged, and that the

same or related material has not been previously submitted for another

course. I also acknowledge that I am aware of University policy and

regulations on honesty in academic work, and of the disciplinary

guidelines and procedures applicable to breaches of such policy and

regulations, as contained in the website

Submission notice:

● Submit your homework via the elearning system

General homework policies:

A student may discuss the problems with others. However, the work a student turns in must

be created COMPLETELY by oneself ALONE. A student may not share ANY written work or

pictures, nor may one copy answers from any source other than one’s own brain.

Each student MUST LIST on the homework paper the name of every person he/she has

discussed or worked with. If the answer includes content from any other source, the

student MUST STATE THE SOURCE. Failure to do so is cheating and will result in

sanctions. Copying answers from someone else is cheating even if one lists their name(s) on

the homework.

If there is information you need to solve a problem but the information is not stated in the

problem, try to find the data somewhere. If you cannot find it, state what data you need,

make a reasonable estimate of its value and justify any assumptions you make. You will be

graded not only on whether your answer is correct, but also on whether you have done an

intelligent analysis.

Q0 [10 marks]: Secure Virtual Machines Setup on the Cloud

In this task, you are required to set up virtual machines (VMs) on a cloud computing

platform. While you are free to choose any cloud platform, Google Cloud is recommended.

References [1] and [2] provide the tutorial for Google Cloud and Amazon AWS, respectively.

The default network settings in each cloud platform are insecure. Your VM can be hacked

by external users, resulting in resource overuse which may charge your credit card a

big bill of up to $5,000 USD. To protect your VMs from being hacked and prevent any

financial losses, you should set up secure network configurations for all your VMs.

In this part, you need to set up a whitelist for your VMs. You can choose one of the options

from the following choices to set up your whitelist: 1. only the IP corresponding to your

current device can access your VMs via SSH. Traffic from other sources should be blocked.

2. only users in the CUHK network can access your VMs via SSH. Traffic outside CUHK

should be blocked. You can connect to CUHK VPN to ensure you are in the CUHK network

(IP Range: 137.189.0.0/16). Reference [3] provides the CUHK VPN setup information from

ITSC.

a. [10 marks] Secure Virtual Machine Setup

Reference [4] and [5] are the user guides for the network security configuration of

AWS and Google Cloud, respectively. You can go through the document with respect

to the cloud platform you use. Then follow the listed steps to configure your VM’s

network:

i. locate or create the security group/ firewall of your VM;

ii. remove all rules of inbound/ ingress and outbound/ egress, except for the

default rule(s) responsible for internal access within the cloud platform;

iii. add a new rule to the inbound/ ingress, with the SSH port(s) of VMs (default:

22) and source specified, e.g., ‘137.189.0.0/16’ for CUHK users only;

iv. (Optional) more ports may be further permitted based on your needs (e.g.,

when completing Q1 below).

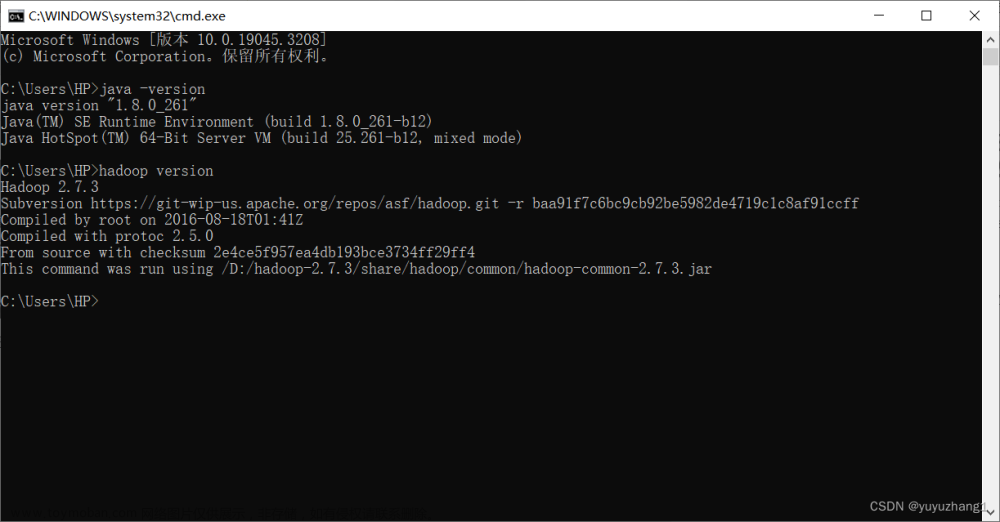

Q1 [90 marks + 20 bonus marks]: Hadoop Cluster Setup

Hadoop is an open-source software framework for distributed storage and processing. In this

problem, you are required to set up a Hadoop cluster using the VMs you instantiated in Q0.

In order to set up a Hadoop cluster with multiple virtual machines (VM), you can set up a

single-node Hadoop cluster for each VM first [6]. Then modify the configuration file in each

node to set up a Hadoop cluster with multiple nodes. References [7], [9], [10], [11] provide

the setup instructions for a Hadoop cluster. Some important notes/ tips on instantiating VMs

are given at the end of this section.

a. [20 marks] Single-node Hadoop Setup

In this part, you need to set up a single-node Hadoop cluster in a pseudo-distributed

mode and run the Terasort example on your Hadoop cluster.

i. Set up a single-node Hadoop cluster (recommended Hadoop version: 2.9.x,

all versions available in [16]). Attach the screenshot of http://localhost:50070

(or http://:50070 if opened in the browser of your local machine) to

verify that your installation is successful.

ii. After installing a single-node Hadoop cluster, you need to run the Terasort

example [8] on it. You need to record all your key steps, including your

commands and output. The following commands may be useful:

$ ./bin/hadoop jar \

./share/hadoop/mapreduce/hadoop-mapreduce-examples-2.9.2.jar \

teragen 120000 terasort/input

//generate the data for sorting

$ ./bin/hadoop jar \

./share/hadoop/mapreduce/hadoop-mapreduce-examples-2.9.2.jar \

terasort terasort/input terasort/output

//terasort the generated data

$ ./bin/hadoop jar \

./share/hadoop/mapreduce/hadoop-mapreduce-examples-2.9.2.jar \

teravalidate terasort/output terasort/check

//validate the output is sorted

Notes: To monitor the Hadoop service via Hadoop NameNode WebUI (http://ip>:50070) on your local browser, based on steps in Q0, you may further allow traffic

from CUHK network to access port 50070 of VMs.

b. [40 marks] Multi-node Hadoop Cluster Setup

After the setup of a single-node Hadoop cluster in each VM, you can modify the

configuration files in each node to set up the multi-node Hadoop cluster.

i. Install and set up a multi-node Hadoop cluster with 4 VMs (1 Master and 3

Slaves). Use the ‘jps’ command to verify all the processes are running.

ii. In this part, you need to use the ‘teragen’ command to generate 2 different

datasets to serve as the input for the Terasort program. You should use the

following two rules to determine the size of the two datasets of your own:

■ Size of dataset 1: (Your student ID % 3 + 1) GB

■ Size of dataset 2: (Your student ID % 20 + 10) GB

Then, run the Terasort code again for these two different datasets and

compare their running time.

Hints: Keep an image for your Hadoop cluster. You would need to use the Hadoop

cluster again for subsequent homework assignments.

Notes:

1. You may need to add each VM to the whitelist of your security group/ firewall

and further allow traffic towards more ports needed by Hadoop/YARN

services (reference [17] [18]).

2. For step i, the resulting cluster should consist of 1 namenode and 4

datanodes. More precisely, 1 namenode and 1 datanode would be running on

the master machine, and each slave machine runs one datanode.

3. Please ensure that after the cluster setup, the number of “Live Nodes” shown

on Hadoop NameNode WebUI (port 50070) is 4.

c. [30 marks] Running Python Code on Hadoop

Hadoop streaming is a utility that comes with the Hadoop distribution. This utility

allows you to create and run MapReduce jobs with any executable or script as the

mapper and/or the reducer. In this part, you need to run the Python wordcount script

to handle the Shakespeare dataset [12] via Hadoop streaming.

i. Reference [13] introduces the method to run a Python wordcount script via

Hadoop streaming. You can also download the script from the reference [14].

ii. Run the Python wordcount script and record the running time. The following

command may be useful:

$ ./bin/hadoop jar \

./share/hadoop/tools/lib/hadoop-streaming-2.9.2.jar \

-file mapper.py -mapper mapper.py \

-file reducer.py -reducer reducer.py \

-input input/* \

-output output

//submit a Python program via Hadoop streaming

d. [Bonus 20 marks] Compiling the Java WordCount program for MapReduce

The Hadoop framework is written in Java. You can easily compile and submit a Java

MapReduce job. In this part, you need to compile and run your own Java wordcount

program to process the Shakespeare dataset [12].

i. In order to compile the Java MapReduce program, you may need to use

“hadoop classpath” command to fetch the list of all Hadoop jars. Or you can

simply copy all dependency jars in a directory and use them for compilation.

Reference [15] introduces the method to compile and run a Java wordcount

program in the Hadoop cluster. You can also download the Java wordcount

program from reference [14].

ii. Run the Java wordcount program and compare the running time with part c.

Part (d) is a bonus question for IERG 4300 but required for ESTR 4300.

IMPORTANT NOTES:

1. Since AWS will not provide free credits anymore, we recommend you to use Google

Cloud (which offers a 90-day, $300 free trial) for this homework.

2. If you use Putty for SSH client, please download from the website

https://www.putty.org/ and avoid using the default private key. Failure to do so will

subject your AWS account/ Hadoop cluster to hijacking.

3. Launching instances with Ubuntu (version >= 18.04 LTS) is recommended. Hadoop

version 2.9.x is recommended. Older versions of Hadoop may have vulnerabilities

that can be exploited by hackers to launch DoS attacks.

4. (AWS) For each VM, you are recommended to use the t2.large instance type with

100GB hard disk, which consists of 2 CPU cores and 8GB RAM.

5. (Google) For each VM, you are recommended to use the n2-standard-2 instance

type with 100GB hard disk, which consists of 2 CPU cores and 8GB RAM.

6. When following the given references, you may need to modify the commands

according to your own environment, e.g., file location, etc.

7. After installing a single-node Hadoop, you can save the system image and launch

multiple copies of the VM with that image. This can simplify your process of installing

the single-node Hadoop cluster on each VM.

8. Keep an image for your Hadoop cluster. You will need to use the Hadoop cluster

again for subsequent homework assignments.

9. Always refer to the logs for debugging single/multi-node Hadoop setup, which

contains more details than CLI outputs.

10. Please shut down (not to terminate) your VMs when you are not using them. This can

save you some credits and avoid being attacked when your VMs are idle.

Submission Requirements:

1. Include all the key steps/ commands, your cluster configuration details, source codes

of your programs, your compiling steps (if any), etc., together with screenshots, into a

SINGLE PDF report. Your report should also include the signed declaration (the first

page of this homework file).

2. Package all the source codes (as you included in step 1) into a zip file individually.

3. You should submit two individual files: your homework report (in PDF format) and a

zip file packaged all the codes of your homework.

4. Please submit your homework report and code zip file through the Blackboard

WX:codehelp文章来源地址https://www.toymoban.com/news/detail-825675.html

文章来源:https://www.toymoban.com/news/detail-825675.html

到了这里,关于EMS5730 MapReduce program的文章就介绍完了。如果您还想了解更多内容,请在右上角搜索TOY模板网以前的文章或继续浏览下面的相关文章,希望大家以后多多支持TOY模板网!