安装k8s集群相关系统及组件的详细版本号

Ubuntu 22.04.3 LTS

k8s: v1.27.5

containerd: 1.6.23

etcd: v3.5.9

coredns: 1.11.1

calico: v3.24.6

安装步骤清单:

1.deploy机器做好对所有k8s node节点的免密登陆操作

2.deploy机器安装好python2版本以及pip,然后安装ansible

3.对k8s集群配置做一些定制化配置并开始部署

# 需要注意的在线安装因为会从github及dockerhub上下载文件及镜像,有时候访问这些国外网络会非常慢,这里我也会大家准备好了完整离线安装包,下载地址如下,和上面的安装脚本放在同一目录下,再执行上面的安装命令即可

# 此离线安装包里面的k8s版本为v1.27.5

https://cloud.189.cn/web/share?code=6bayie3MNfIj(访问码:6trb)

1.添加主机名

sudo hostnamectl set-hostname node1

cat >> /etc/hosts <<EOF

10.0.0.220 node1

10.0.0.221 node2

10.0.0.222 node3

10.0.0.223 node4

10.0.0.224 node5

EOF

2.优化系统参数

#开启粘贴模式 set paste

#root的密码 xuexi123

#!/bin/bash

# http://releases.ubuntu.com/jammy/

# Control switch

#[[ "$1" != "" ]] && iptables_yn="$1" || iptables_yn='n'

iptables_yn="${1:-n}"

# install ssh and configure

apt-get install openssh-server -y

echo 'PermitRootLogin yes' >> /etc/ssh/sshd_config

echo 'root:xuexi123'|chpasswd

systemctl restart sshd && systemctl status ssh -l --no-pager

# Change apt-get source list

# https://opsx.alibaba.com/mirror

ubuntuCodename=$(lsb_release -a 2>/dev/null|awk 'END{print $NF}')

\cp /etc/apt/sources.list{,_bak}

#sed -ri "s+archive.ubuntu.com+mirrors.aliyun.com+g" /etc/apt/sources.list

# https://developer.aliyun.com/mirror/ubuntu?spm=a2c6h.13651102.0.0.3e221b11ev6YG5

# ubuntu 22.04: jammy

# ubuntu 20.04: focal

# ubuntu 18.04: bionic

# ubuntu 16.04: xenial

echo "

deb https://mirrors.aliyun.com/ubuntu/ ${ubuntuCodename} main restricted universe multiverse

deb-src https://mirrors.aliyun.com/ubuntu/ ${ubuntuCodename} main restricted universe multiverse

deb https://mirrors.aliyun.com/ubuntu/ ${ubuntuCodename}-security main restricted universe multiverse

deb-src https://mirrors.aliyun.com/ubuntu/ ${ubuntuCodename}-security main restricted universe multiverse

deb https://mirrors.aliyun.com/ubuntu/ ${ubuntuCodename}-updates main restricted universe multiverse

deb-src https://mirrors.aliyun.com/ubuntu/ ${ubuntuCodename}-updates main restricted universe multiverse

deb https://mirrors.aliyun.com/ubuntu/ ${ubuntuCodename}-backports main restricted universe multiverse

deb-src https://mirrors.aliyun.com/ubuntu/ ${ubuntuCodename}-backports main restricted universe multiverse

" > /etc/apt/sources.list

apt-get update

# Install package

pkgList="curl wget unzip gcc swig automake make perl cpio git libmbedtls-dev libudns-dev libev-dev python-pip python3-pip lrzsz iftop nethogs nload htop ifstat iotop iostat vim" &&\

for Package in ${pkgList}; do apt-get -y install $Package;done

apt-get clean all

# Custom profile

cat > /etc/profile.d/boge.sh << EOF

HISTSIZE=10000

HISTTIMEFORMAT="%F %T \$(whoami) "

alias l='ls -AFhlt --color=auto'

alias lh='l | head'

alias ll='ls -l --color=auto'

alias ls='ls --color=auto'

alias vi=vim

GREP_OPTIONS="--color=auto"

alias grep='grep --color'

alias egrep='egrep --color'

alias fgrep='fgrep --color'

EOF

sed -i 's@^"syntax on@syntax on@' /etc/vim/vimrc

# PS1

[ -z "$(grep ^PS1 ~/.bashrc)" ] && echo "PS1='\${debian_chroot:+(\$debian_chroot)}\\[\\e[1;32m\\]\\u@\\h\\[\\033[00m\\]:\\[\\033[01;34m\\]\\w\\[\\033[00m\\]\\$ '" >> ~/.bashrc

# history

[ -z "$(grep history-timestamp ~/.bashrc)" ] && echo "PROMPT_COMMAND='{ msg=\$(history 1 | { read x y; echo \$y; });user=\$(whoami); echo \$(date \"+%Y-%m-%d %H:%M:%S\"):\$user:\`pwd\`/:\$msg ---- \$(who am i); } >> /tmp/\`hostname\`.\`whoami\`.history-timestamp'" >> ~/.bashrc

# /etc/security/limits.conf

[ -e /etc/security/limits.d/*nproc.conf ] && rename nproc.conf nproc.conf_bk /etc/security/limits.d/*nproc.conf

[ -z "$(grep 'session required pam_limits.so' /etc/pam.d/common-session)" ] && echo "session required pam_limits.so" >> /etc/pam.d/common-session

sed -i '/^# End of file/,$d' /etc/security/limits.conf

cat >> /etc/security/limits.conf <<EOF

# End of file

* soft nproc 1000000

* hard nproc 1000000

* soft nofile 1000000

* hard nofile 1000000

root soft nproc 1000000

root hard nproc 1000000

root soft nofile 1000000

root hard nofile 1000000

EOF

ulimit -SHn 1000000

# /etc/hosts

[ "$(hostname -i | awk '{print $1}')" != "127.0.0.1" ] && sed -i "s@127.0.0.1.*localhost@&\n127.0.0.1 $(hostname)@g" /etc/hosts

# Set timezone

rm -rf /etc/localtime

ln -s /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

# /etc/sysctl.conf

:<<BOGE

fs.file-max = 1000000

这个参数定义了系统中最大的文件句柄数。文件句柄是用于访问文件的数据结构。增加这个值可以提高系统同时打开文件的能力。

fs.inotify.max_user_instances = 8192

inotify是Linux内核中的一个机制,用于监视文件系统事件。这个参数定义了每个用户可以创建的inotify实例的最大数量。

net.ipv4.tcp_syncookies = 1

当系统遭受SYN洪水攻击时,启用syncookies可以防止系统资源被耗尽。SYN cookies是一种机制,用于在TCP三次握手中保护服务器端资源。

net.ipv4.tcp_fin_timeout = 30

这个参数定义了TCP连接中,等待关闭的时间。当一端发送FIN信号后,等待对端关闭连接的超时时间。

net.ipv4.tcp_tw_reuse = 1

启用该参数后,可以允许将TIME-WAIT状态的TCP连接重新用于新的连接。这可以减少系统中TIME-WAIT连接的数量。

net.ipv4.ip_local_port_range = 1024 65000

这个参数定义了本地端口的范围,用于分配给发送请求的应用程序。它限制了可用于客户端连接的本地端口范围。

net.ipv4.tcp_max_syn_backlog = 16384

这个参数定义了TCP连接请求的队列长度。当系统处理不及时时,超过该队列长度的连接请求将被拒绝。

net.ipv4.tcp_max_tw_buckets = 6000

这个参数定义了系统同时保持TIME-WAIT状态的最大数量。超过这个数量的连接将被立即关闭。

net.ipv4.route.gc_timeout = 100

这个参数定义了内核路由表清理的时间间隔,单位是秒。它影响路由缓存的生命周期。

net.ipv4.tcp_syn_retries = 1

这个参数定义了在发送SYN请求后,等待对端回应的次数。超过指定次数后仍未响应,连接将被认为失败。

net.ipv4.tcp_synack_retries = 1

这个参数定义了在发送SYN+ACK回应后,等待对端发送ACK的次数。超过指定次数后仍未收到ACK,连接将被认为失败。

net.core.somaxconn = 32768

这个参数定义了监听队列的最大长度。当服务器正在处理的连接数超过此值时,新的连接请求将被拒绝。

net.core.netdev_max_backlog = 32768

这个参数定义了网络设备接收队列的最大长度。当接收队列已满时,新的数据包将被丢弃。

net.core.netdev_budget = 5000

这个参数定义了每个网络设备接收队列在每个时间间隔中可以处理的数据包数量。

net.ipv4.tcp_timestamps = 0

禁用TCP时间戳。时间戳可以用于解决网络中的数据包乱序问题,但在高负载环境下可能会增加开销。

net.ipv4.tcp_max_orphans = 32768

这个参数定义了系统中允许存在的最大孤立(没有关联的父连接)TCP连接数量。超过这个数量的孤立连接将被立即关闭。

BOGE

[ -z "$(grep 'fs.file-max' /etc/sysctl.conf)" ] && cat >> /etc/sysctl.conf << EOF

fs.file-max = 1000000

fs.inotify.max_user_instances = 8192

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_fin_timeout = 30

net.ipv4.tcp_tw_reuse = 1

net.ipv4.ip_local_port_range = 1024 65000

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.tcp_max_tw_buckets = 6000

net.ipv4.route.gc_timeout = 100

net.ipv4.tcp_syn_retries = 1

net.ipv4.tcp_synack_retries = 1

net.core.somaxconn = 32768

net.core.netdev_max_backlog = 32768

net.core.netdev_budget = 5000

net.ipv4.tcp_timestamps = 0

net.ipv4.tcp_max_orphans = 32768

EOF

sysctl -p

# Normal display of Chinese in the text

apt-get -y install locales

echo 'export LANG=en_US.UTF-8'|tee -a /etc/profile && source /etc/profile

sed -i 's@^ACTIVE_CONSOLES.*@ACTIVE_CONSOLES="/dev/tty[1-2]"@' /etc/default/console-setup

#sed -i 's@^@#@g' /etc/init/tty[3-6].conf

locale-gen en_US.UTF-8

echo "en_US.UTF-8 UTF-8" > /var/lib/locales/supported.d/local

cat > /etc/default/locale << EOF

LANG=en_US.UTF-8

LANGUAGE=en_US:en

EOF

#sed -i 's@^@#@g' /etc/init/control-alt-delete.conf

# Update time

which ntpdate || apt-get update;apt install ntpdate

ntpdate pool.ntp.org

[ ! -e "/var/spool/cron/crontabs/root" -o -z "$(grep ntpdate /var/spool/cron/crontabs/root 2>/dev/null)" ] && { echo "*/20 * * * * $(which ntpdate) pool.ntp.org > /dev/null 2>&1" >> /var/spool/cron/crontabs/root;chmod 600 /var/spool/cron/crontabs/root; }

# iptables

if [ "${iptables_yn}" == 'y' ]; then

apt-get -y install debconf-utils

echo iptables-persistent iptables-persistent/autosave_v4 boolean true | sudo debconf-set-selections

echo iptables-persistent iptables-persistent/autosave_v6 boolean true | sudo debconf-set-selections

apt-get -y install iptables-persistent

if [ -e "/etc/iptables/rules.v4" ] && [ -n "$(grep '^:INPUT DROP' /etc/iptables/rules.v4)" -a -n "$(grep 'NEW -m tcp --dport 22 -j ACCEPT' /etc/iptables/rules.v4)" -a -n "$(grep 'NEW -m tcp --dport 80 -j ACCEPT' /etc/iptables/rules.v4)" ]; then

IPTABLES_STATUS=yes

else

IPTABLES_STATUS=no

fi

if [ "${IPTABLES_STATUS}" == "no" ]; then

cat > /etc/iptables/rules.v4 << EOF

# Firewall configuration written by system-config-securitylevel

# Manual customization of this file is not recommended.

*filter

:INPUT DROP [0:0]

:FORWARD ACCEPT [0:0]

:OUTPUT ACCEPT [0:0]

:syn-flood - [0:0]

-A INPUT -i lo -j ACCEPT

-A INPUT -m state --state RELATED,ESTABLISHED -j ACCEPT

-A INPUT -p tcp -m state --state NEW -m tcp --dport 22 -j ACCEPT

-A INPUT -p tcp -m state --state NEW -m tcp --dport 80 -j ACCEPT

-A INPUT -p tcp -m state --state NEW -m tcp --dport 443 -j ACCEPT

-A INPUT -p icmp -m icmp --icmp-type 8 -j ACCEPT

COMMIT

EOF

fi

FW_PORT_FLAG=$(grep -ow "dport ${ssh_port}" /etc/iptables/rules.v4)

[ -z "${FW_PORT_FLAG}" -a "${ssh_port}" != "22" ] && sed -i "s@dport 22 -j ACCEPT@&\n-A INPUT -p tcp -m state --state NEW -m tcp --dport ${ssh_port} -j ACCEPT@" /etc/iptables/rules.v4

iptables-restore < /etc/iptables/rules.v4

/bin/cp /etc/iptables/rules.v{4,6}

sed -i 's@icmp@icmpv6@g' /etc/iptables/rules.v6

ip6tables-restore < /etc/iptables/rules.v6

ip6tables-save > /etc/iptables/rules.v6

fi

service rsyslog restart

service ssh restart

. /etc/profile

. ~/.bashrc

# set ip and dns

validate_ip() {

local ip_var_name=$1

while true; do

read -p "Input IP address($ip_var_name): " $ip_var_name

# 检测是否为空

if [ -z "${!ip_var_name}" ]; then

echo "Input is empty. Please try again."

continue

fi

# 检测是否符合IP地址的格式

if ! [[ ${!ip_var_name} =~ ^([0-9]{1,3}\.){3}[0-9]{1,3}$ ]]; then

echo "Invalid IP address format. Please try again."

continue

fi

# 输入符合要求,跳出循环

break

done

}

# 调用函数并传递变量名作为参数

validate_ip "ip_address"

echo "IP address: $ip_address"

validate_ip "ip_gateway"

echo "IP gateway: $ip_gateway"

validate_ip "dns1_ip"

echo "Dns1 ip: $dns1_ip"

validate_ip "dns2_ip"

echo "Dns2 ip: $dns2_ip"

cat > /etc/netplan/00-installer-config.yaml << EOF

network:

version: 2

renderer: networkd

ethernets:

ens32:

dhcp4: false

dhcp6: false

addresses:

- ${ip_address}/24

routes:

- to: default

via: ${ip_gateway}

nameservers:

addresses: [${dns1_ip}, ${dns2_ip}]

EOF

apt install resolvconf -y

cat > /etc/resolvconf/resolv.conf.d/head << EOF

nameserver ${dns1_ip}

nameserver ${dns2_ip}

EOF

systemctl restart resolvconf

echo "过个10秒左右的样子可以关闭终端,然后换成刚才输入的主机IP进行ssh登陆即可."

netplan apply

自建k8s集群部署

挂载数据盘

注意: 如无需独立数据盘可忽略此步骤

# 创建下面4个目录

/var/lib/container/{kubelet,docker,nfs_dir}

/nfs_dir

# 不分区直接格式化数据盘,假设数据盘是/dev/vdb

mkfs.ext4 /dev/vdb

# 然后编辑 /etc/fstab,添加如下内容:

/dev/vdb /var/lib/container/ ext4 defaults 0 0

/var/lib/container/kubelet /var/lib/kubelet none defaults,bind 0 0

/var/lib/container/docker /var/lib/docker none defaults,bind 0 0

/var/lib/container/nfs_dir /nfs_dir none defaults,bind 0 0

# 刷新生效挂载

mount -a

k8s安装脚本说明

部署脚本调用核心项目github: https://github.com/easzlab/kubeasz , 此脚本是这个项目的上一层简化二进制部署k8s实施的封装

此脚本安装过的操作系统 CentOS 7, Ubuntu 16.04/18.04/20.04/22.04

注意: k8s 版本 >= 1.24 时,CRI仅支持 containerd

# 安装命令示例(假设我这里root的密码是rootPassword,如已做免密这里的密码可以任意填写;10.0.1为内网网段;后面的依次是主机位;CRI容器运行时;CNI网络插件;我们自己的域名是boge.com;要设定k8s集群名称为test):

# 单台节点部署

bash k8s_install_new.sh rootPassword 10.0.1 201 containerd calico boge.com test-cn

# 多台节点部署

bash k8s_install_new.sh rootPassword 10.0.1 201\ 202\ 203\ 204 containerd calico boge.com test-cn

# 注意:如果是在海外部署,而集群名称又不带aws的话,可以把安装脚本内此部分代码注释掉,避免pip安装过慢

if ! `echo $clustername |grep -iwE aws &>/dev/null`; then

mkdir ~/.pip

cat > ~/.pip/pip.conf <<CB

[global]

index-url = https://mirrors.aliyun.com/pypi/simple

[install]

trusted-host=mirrors.aliyun.com

CB

fi

# 直接执行上面的命令为在线安装,如需在离线环境部署,可自己在本地虚拟机安装一遍,然后将/etc/kubeasz目录打包成kubeasz.tar.gz,在无网络的机器上安装,把脚本和这个压缩包放一起再执行上面这行命令即是离线安装了

3.完整部署脚本k8s_install_new.sh

#!/bin/bash

# auther: boge

# descriptions: the shell scripts will use ansible to deploy K8S at binary for siample

# docker-tag

# curl -s -S "https://registry.hub.docker.com/v2/repositories/easzlab/kubeasz-k8s-bin/tags/" | jq '."results"[]["name"]' |sort -rn

# github: https://github.com/easzlab/kubeasz

#########################################################################

# 此脚本安装过的操作系统 CentOS/RedHat 7, Ubuntu 16.04/18.04/20.04/22.04

#########################################################################

echo "记得先把数据盘挂载弄好,已经弄好直接回车,否则ctrl+c终止脚本.(Remember to mount the data disk first, and press Enter directly, otherwise ctrl+c terminates the script.)"

read -p "" xxxxxx

# 传参检测

[ $# -ne 7 ] && echo -e "Usage: $0 rootpasswd netnum nethosts cri cni k8s-cluster-name\nExample: bash $0 rootPassword 10.0.1 201\ 202\ 203\ 204 [containerd|docker] [calico|flannel|cilium] boge.com test-cn\n" && exit 11

# 变量定义

export release=3.6.2 # 支持k8s多版本使用,定义下面k8s_ver变量版本范围: 1.28.1 v1.27.5 v1.26.8 v1.25.13 v1.24.17

export k8s_ver=v1.27.5 # | docker-tag tags easzlab/kubeasz-k8s-bin 注意: k8s 版本 >= 1.24 时,仅支持 containerd

rootpasswd=$1

netnum=$2

nethosts=$3

cri=$4

cni=$5

domainName=$6

clustername=$7

if ls -1v ./kubeasz*.tar.gz &>/dev/null;then software_packet="$(ls -1v ./kubeasz*.tar.gz )";else software_packet="";fi

pwd="/etc/kubeasz"

# deploy机器升级软件库

if cat /etc/redhat-release &>/dev/null;then

yum update -y

else

apt-get update && apt-get upgrade -y && apt-get dist-upgrade -y

[ $? -ne 0 ] && apt-get -yf install

fi

# deploy机器检测python环境

python2 -V &>/dev/null

if [ $? -ne 0 ];then

if cat /etc/redhat-release &>/dev/null;then

yum install gcc openssl-devel bzip2-devel

wget https://www.python.org/ftp/python/2.7.16/Python-2.7.16.tgz

tar xzf Python-2.7.16.tgz

cd Python-2.7.16

./configure --enable-optimizations

make altinstall

ln -s /usr/bin/python2.7 /usr/bin/python

cd -

else

apt-get install -y python2.7 && ln -s /usr/bin/python2.7 /usr/bin/python

fi

fi

python3 -V &>/dev/null

if [ $? -ne 0 ];then

if cat /etc/redhat-release &>/dev/null;then

yum install python3 -y

else

apt-get install -y python3

fi

fi

# deploy机器设置pip安装加速源

if `echo $clustername |grep -iwE cn &>/dev/null`; then

mkdir ~/.pip

cat > ~/.pip/pip.conf <<CB

[global]

index-url = https://mirrors.aliyun.com/pypi/simple

[install]

trusted-host=mirrors.aliyun.com

CB

fi

# deploy机器安装相应软件包

which python || ln -svf `which python2.7` /usr/bin/python

if cat /etc/redhat-release &>/dev/null;then

yum install git epel-release python-pip sshpass -y

[ -f ./get-pip.py ] && python ./get-pip.py || {

wget https://bootstrap.pypa.io/pip/2.7/get-pip.py && python get-pip.py

}

else

if grep -Ew '20.04|22.04' /etc/issue &>/dev/null;then apt-get install sshpass -y;else apt-get install python-pip sshpass -y;fi

[ -f ./get-pip.py ] && python ./get-pip.py || {

wget https://bootstrap.pypa.io/pip/2.7/get-pip.py && python get-pip.py

}

fi

python -m pip install --upgrade "pip < 21.0"

which pip || ln -svf `which pip` /usr/bin/pip

pip -V

pip install setuptools -U

pip install --no-cache-dir ansible netaddr

# 在deploy机器做其他node的ssh免密操作

for host in `echo "${nethosts}"`

do

echo "============ ${netnum}.${host} ===========";

if [[ ${USER} == 'root' ]];then

[ ! -f /${USER}/.ssh/id_rsa ] &&\

ssh-keygen -t rsa -P '' -f /${USER}/.ssh/id_rsa

else

[ ! -f /home/${USER}/.ssh/id_rsa ] &&\

ssh-keygen -t rsa -P '' -f /home/${USER}/.ssh/id_rsa

fi

sshpass -p ${rootpasswd} ssh-copy-id -o StrictHostKeyChecking=no ${USER}@${netnum}.${host}

if cat /etc/redhat-release &>/dev/null;then

ssh -o StrictHostKeyChecking=no ${USER}@${netnum}.${host} "yum update -y"

else

ssh -o StrictHostKeyChecking=no ${USER}@${netnum}.${host} "apt-get update && apt-get upgrade -y && apt-get dist-upgrade -y"

[ $? -ne 0 ] && ssh -o StrictHostKeyChecking=no ${USER}@${netnum}.${host} "apt-get -yf install"

fi

done

# deploy机器下载k8s二进制安装脚本(注:这里下载可能会因网络原因失败,可以多尝试运行该脚本几次)

if [[ ${software_packet} == '' ]];then

if [[ ! -f ./ezdown ]];then

curl -C- -fLO --retry 3 https://github.com/easzlab/kubeasz/releases/download/${release}/ezdown

fi

# 使用工具脚本下载

sed -ri "s+^(K8S_BIN_VER=).*$+\1${k8s_ver}+g" ezdown

chmod +x ./ezdown

# ubuntu_22 to download package of Ubuntu 22.04

./ezdown -D && ./ezdown -P ubuntu_22 && ./ezdown -X

else

tar xvf ${software_packet} -C /etc/

sed -ri "s+^(K8S_BIN_VER=).*$+\1${k8s_ver}+g" ${pwd}/ezdown

chmod +x ${pwd}/{ezctl,ezdown}

chmod +x ./ezdown

./ezdown -D # 离线安装 docker,检查本地文件,正常会提示所有文件已经下载完成,并上传到本地私有镜像仓库

./ezdown -S # 启动 kubeasz 容器

fi

# 初始化一个名为$clustername的k8s集群配置

CLUSTER_NAME="$clustername"

${pwd}/ezctl new ${CLUSTER_NAME}

if [[ $? -ne 0 ]];then

echo "cluster name [${CLUSTER_NAME}] was exist in ${pwd}/clusters/${CLUSTER_NAME}."

exit 1

fi

if [[ ${software_packet} != '' ]];then

# 设置参数,启用离线安装

# 离线安装文档:https://github.com/easzlab/kubeasz/blob/3.6.2/docs/setup/offline_install.md

sed -i 's/^INSTALL_SOURCE.*$/INSTALL_SOURCE: "offline"/g' ${pwd}/clusters/${CLUSTER_NAME}/config.yml

fi

# to check ansible service

ansible all -m ping

#---------------------------------------------------------------------------------------------------

#修改二进制安装脚本配置 config.yml

sed -ri "s+^(CLUSTER_NAME:).*$+\1 \"${CLUSTER_NAME}\"+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

## k8s上日志及容器数据存独立磁盘步骤(参考阿里云的)

mkdir -p /var/lib/container/{kubelet,docker,nfs_dir} /var/lib/{kubelet,docker} /nfs_dir

## 不用fdisk分区,直接格式化数据盘 mkfs.ext4 /dev/vdb,按下面添加到fstab后,再mount -a刷新挂载(blkid /dev/sdx)

## cat /etc/fstab

# UUID=105fa8ff-bacd-491f-a6d0-f99865afc3d6 / ext4 defaults 1 1

# /dev/vdb /var/lib/container/ ext4 defaults 0 0

# /var/lib/container/kubelet /var/lib/kubelet none defaults,bind 0 0

# /var/lib/container/docker /var/lib/docker none defaults,bind 0 0

# /var/lib/container/nfs_dir /nfs_dir none defaults,bind 0 0

## tree -L 1 /var/lib/container

# /var/lib/container

# ├── docker

# ├── kubelet

# └── lost+found

# docker data dir

DOCKER_STORAGE_DIR="/var/lib/container/docker"

sed -ri "s+^(STORAGE_DIR:).*$+STORAGE_DIR: \"${DOCKER_STORAGE_DIR}\"+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

# containerd data dir

CONTAINERD_STORAGE_DIR="/var/lib/container/containerd"

sed -ri "s+^(STORAGE_DIR:).*$+STORAGE_DIR: \"${CONTAINERD_STORAGE_DIR}\"+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

# kubelet logs dir

KUBELET_ROOT_DIR="/var/lib/container/kubelet"

sed -ri "s+^(KUBELET_ROOT_DIR:).*$+KUBELET_ROOT_DIR: \"${KUBELET_ROOT_DIR}\"+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

if [[ $clustername != 'aws' ]]; then

# docker aliyun repo

REG_MIRRORS="https://pqbap4ya.mirror.aliyuncs.com"

sed -ri "s+^REG_MIRRORS:.*$+REG_MIRRORS: \'[\"${REG_MIRRORS}\"]\'+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

fi

# [docker]信任的HTTP仓库

sed -ri "s+127.0.0.1/8+${netnum}.0/24+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

# disable dashboard auto install

sed -ri "s+^(dashboard_install:).*$+\1 \"no\"+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

# 融合配置准备(按示例部署命令这里会生成testk8s.boge.com这个域名,部署脚本会基于这个域名签证书,优势是后面访问kube-apiserver,可以基于此域名解析任意IP来访问,灵活性更高)

CLUSEER_WEBSITE="${CLUSTER_NAME}k8s.${domainName}"

lb_num=$(grep -wn '^MASTER_CERT_HOSTS:' ${pwd}/clusters/${CLUSTER_NAME}/config.yml |awk -F: '{print $1}')

lb_num1=$(expr ${lb_num} + 1)

lb_num2=$(expr ${lb_num} + 2)

sed -ri "${lb_num1}s+.*$+ - "${CLUSEER_WEBSITE}"+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

sed -ri "${lb_num2}s+(.*)$+#\1+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

# node节点最大pod 数

MAX_PODS="120"

sed -ri "s+^(MAX_PODS:).*$+\1 ${MAX_PODS}+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

# calico 自建机房都在二层网络可以设置 CALICO_IPV4POOL_IPIP=“off”,以提高网络性能; 公有云上VPC在三层网络,需设置CALICO_IPV4POOL_IPIP: "Always"开启ipip隧道

#sed -ri "s+^(CALICO_IPV4POOL_IPIP:).*$+\1 \"off\"+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

# 修改二进制安装脚本配置 hosts

# clean old ip

sed -ri '/192.168.1.1/d' ${pwd}/clusters/${CLUSTER_NAME}/hosts

sed -ri '/192.168.1.2/d' ${pwd}/clusters/${CLUSTER_NAME}/hosts

sed -ri '/192.168.1.3/d' ${pwd}/clusters/${CLUSTER_NAME}/hosts

sed -ri '/192.168.1.4/d' ${pwd}/clusters/${CLUSTER_NAME}/hosts

sed -ri '/192.168.1.5/d' ${pwd}/clusters/${CLUSTER_NAME}/hosts

# 输入准备创建ETCD集群的主机位

echo "enter etcd hosts here (example: 203 202 201) ↓"

read -p "" ipnums

for ipnum in `echo ${ipnums}`

do

echo $netnum.$ipnum

sed -i "/\[etcd/a $netnum.$ipnum" ${pwd}/clusters/${CLUSTER_NAME}/hosts

done

# 输入准备创建KUBE-MASTER集群的主机位

echo "enter kube-master hosts here (example: 202 201) ↓"

read -p "" ipnums

for ipnum in `echo ${ipnums}`

do

echo $netnum.$ipnum

sed -i "/\[kube_master/a $netnum.$ipnum" ${pwd}/clusters/${CLUSTER_NAME}/hosts

done

# 输入准备创建KUBE-NODE集群的主机位

echo "enter kube-node hosts here (example: 204 203) ↓"

read -p "" ipnums

for ipnum in `echo ${ipnums}`

do

echo $netnum.$ipnum

sed -i "/\[kube_node/a $netnum.$ipnum" ${pwd}/clusters/${CLUSTER_NAME}/hosts

done

# 配置容器运行时CNI

case ${cni} in

flannel)

sed -ri "s+^CLUSTER_NETWORK=.*$+CLUSTER_NETWORK=\"${cni}\"+g" ${pwd}/clusters/${CLUSTER_NAME}/hosts

;;

calico)

sed -ri "s+^CLUSTER_NETWORK=.*$+CLUSTER_NETWORK=\"${cni}\"+g" ${pwd}/clusters/${CLUSTER_NAME}/hosts

;;

cilium)

sed -ri "s+^CLUSTER_NETWORK=.*$+CLUSTER_NETWORK=\"${cni}\"+g" ${pwd}/clusters/${CLUSTER_NAME}/hosts

;;

*)

echo "cni need be flannel or calico or cilium."

exit 11

esac

# 配置K8S的ETCD数据备份的定时任务

# https://github.com/easzlab/kubeasz/blob/master/docs/op/cluster_restore.md

if cat /etc/redhat-release &>/dev/null;then

if ! grep -w '94.backup.yml' /var/spool/cron/root &>/dev/null;then echo "00 00 * * * /usr/local/bin/ansible-playbook -i /etc/kubeasz/clusters/${CLUSTER_NAME}/hosts -e @/etc/kubeasz/clusters/${CLUSTER_NAME}/config.yml /etc/kubeasz/playbooks/94.backup.yml &> /dev/null; find /etc/kubeasz/clusters/${CLUSTER_NAME}/backup/ -type f -name '*.db' -mtime +3|xargs rm -f" >> /var/spool/cron/root;else echo exists ;fi

chown root.crontab /var/spool/cron/root

chmod 600 /var/spool/cron/root

rm -f /var/run/cron.reboot

service crond restart

else

if ! grep -w '94.backup.yml' /var/spool/cron/crontabs/root &>/dev/null;then echo "00 00 * * * /usr/local/bin/ansible-playbook -i /etc/kubeasz/clusters/${CLUSTER_NAME}/hosts -e @/etc/kubeasz/clusters/${CLUSTER_NAME}/config.yml /etc/kubeasz/playbooks/94.backup.yml &> /dev/null; find /etc/kubeasz/clusters/${CLUSTER_NAME}/backup/ -type f -name '*.db' -mtime +3|xargs rm -f" >> /var/spool/cron/crontabs/root;else echo exists ;fi

chown root.crontab /var/spool/cron/crontabs/root

chmod 600 /var/spool/cron/crontabs/root

rm -f /var/run/crond.reboot

service cron restart

fi

#---------------------------------------------------------------------------------------------------

# 准备开始安装了

rm -rf ${pwd}/{dockerfiles,docs,.gitignore,pics,dockerfiles} &&\

find ${pwd}/ -name '*.md'|xargs rm -f

read -p "Enter to continue deploy k8s to all nodes >>>" YesNobbb

# now start deploy k8s cluster

cd ${pwd}/

# to prepare CA/certs & kubeconfig & other system settings

${pwd}/ezctl setup ${CLUSTER_NAME} 01

sleep 1

# to setup the etcd cluster

${pwd}/ezctl setup ${CLUSTER_NAME} 02

sleep 1

# to setup the container runtime(docker or containerd)

case ${cri} in

containerd)

sed -ri "s+^CONTAINER_RUNTIME=.*$+CONTAINER_RUNTIME=\"${cri}\"+g" ${pwd}/clusters/${CLUSTER_NAME}/hosts

${pwd}/ezctl setup ${CLUSTER_NAME} 03

;;

docker)

sed -ri "s+^CONTAINER_RUNTIME=.*$+CONTAINER_RUNTIME=\"${cri}\"+g" ${pwd}/clusters/${CLUSTER_NAME}/hosts

${pwd}/ezctl setup ${CLUSTER_NAME} 03

;;

*)

echo "cri need be containerd or docker."

exit 11

esac

sleep 1

# to setup the master nodes

${pwd}/ezctl setup ${CLUSTER_NAME} 04

sleep 1

# to setup the worker nodes

${pwd}/ezctl setup ${CLUSTER_NAME} 05

sleep 1

# to setup the network plugin(flannel、calico...)

${pwd}/ezctl setup ${CLUSTER_NAME} 06

sleep 1

# to setup other useful plugins(metrics-server、coredns...)

${pwd}/ezctl setup ${CLUSTER_NAME} 07

sleep 1

# [可选]对集群所有节点进行操作系统层面的安全加固 https://github.com/dev-sec/ansible-os-hardening

#ansible-playbook roles/os-harden/os-harden.yml

#sleep 1

#cd `dirname ${software_packet:-/tmp}`

k8s_bin_path='/opt/kube/bin'

echo "------------------------- k8s version list ---------------------------"

${k8s_bin_path}/kubectl version

echo

echo "------------------------- All Healthy status check -------------------"

${k8s_bin_path}/kubectl get componentstatus

echo

echo "------------------------- k8s cluster info list ----------------------"

${k8s_bin_path}/kubectl cluster-info

echo

echo "------------------------- k8s all nodes list -------------------------"

${k8s_bin_path}/kubectl get node -o wide

echo

echo "------------------------- k8s all-namespaces's pods list ------------"

${k8s_bin_path}/kubectl get pod --all-namespaces

echo

echo "------------------------- k8s all-namespaces's service network ------"

${k8s_bin_path}/kubectl get svc --all-namespaces

echo

echo "------------------------- k8s welcome for you -----------------------"

echo

# you can use k alias kubectl to siample

echo "alias k=kubectl && complete -F __start_kubectl k" >> ~/.bashrc

# get dashboard url

${k8s_bin_path}/kubectl cluster-info|grep dashboard|awk '{print $NF}'|tee -a /root/k8s_results

# get login token

${k8s_bin_path}/kubectl -n kube-system describe secret $(${k8s_bin_path}/kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')|grep 'token:'|awk '{print $NF}'|tee -a /root/k8s_results

echo

echo "you can look again dashboard and token info at >>> /root/k8s_results <<<"

echo ">>>>>>>>>>>>>>>>> You need to excute command [ reboot ] to restart all nodes <<<<<<<<<<<<<<<<<<<<"

#find / -type f -name "kubeasz*.tar.gz" -o -name "k8s_install_new.sh"|xargs rm -f

4.检查集群etcd

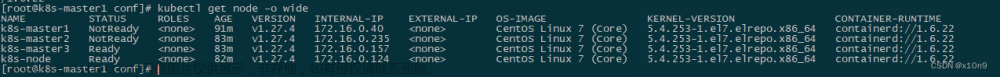

kubectl get node

kubectl get po -A

#获取节点的状态信息。输出结果会以表格方式展示每个节点的状态

etcdctl --endpoints=https://10.0.0.220:2379,\

https://10.0.0.221:2379,\

https://10.0.0.222:2379 \

--cacert=/etc/kubernetes/ssl/ca.pem \

--cert=/etc/kubernetes/ssl/etcd.pem \

--key=/etc/kubernetes/ssl/etcd-key.pem \

--write-out=table endpoint status

#加上endpoint health选项,表示要检查etcd集群的健康状态

etcdctl --endpoints=https://10.0.0.220:2379,\

https://10.0.0.221:2379,\

https://10.0.0.222:2379 \

--cacert=/etc/kubernetes/ssl/ca.pem \

--cert=/etc/kubernetes/ssl/etcd.pem \

--key=/etc/kubernetes/ssl/etcd-key.pem \

endpoint health --write-out=table

5.扩容集群

#帮助

root@node1:/etc/kubeasz# /etc/kubeasz/ezctl -h

Usage: ezctl COMMAND [args]

-------------------------------------------------------------------------------------

Cluster setups:

list to list all of the managed clusters

checkout <cluster> to switch default kubeconfig of the cluster

new <cluster> to start a new k8s deploy with name 'cluster'

setup <cluster> <step> to setup a cluster, also supporting a step-by-step way

start <cluster> to start all of the k8s services stopped by 'ezctl stop'

stop <cluster> to stop all of the k8s services temporarily

upgrade <cluster> to upgrade the k8s cluster

destroy <cluster> to destroy the k8s cluster

backup <cluster> to backup the cluster state (etcd snapshot)

restore <cluster> to restore the cluster state from backups

start-aio to quickly setup an all-in-one cluster with default settings

Cluster ops:

add-etcd <cluster> <ip> to add a etcd-node to the etcd cluster

add-master <cluster> <ip> to add a master node to the k8s cluster

add-node <cluster> <ip> to add a work node to the k8s cluster

del-etcd <cluster> <ip> to delete a etcd-node from the etcd cluster

del-master <cluster> <ip> to delete a master node from the k8s cluster

del-node <cluster> <ip> to delete a work node from the k8s cluster

Extra operation:

kca-renew <cluster> to force renew CA certs and all the other certs (with caution)

kcfg-adm <cluster> <args> to manage client kubeconfig of the k8s cluster

Use "ezctl help <command>" for more information about a given command.

######################################################################################################################

#添加节点

/etc/kubeasz/ezctl add-node test-cn 10.0.0.224

#删除节点

/etc/kubeasz/ezctl del-node test-cn 10.0.0.223

#在添加节点

/etc/kubeasz/ezctl del-node test-cn 10.0.0.223

#报错(解决:删除/etc/kubeasz/clusters/test-cn/hosts 文件中的 [kube_node]下面的10.0.0.223)

root@node1:/etc/kubeasz/clusters/test-cn# /etc/kubeasz/ezctl add-node test-cn 10.0.0.223

10.0.0.223

2024-02-18 16:02:46 ERROR node 10.0.0.223 already existed in /etc/kubeasz/clusters/test-cn/hosts

站在巨人的肩膀上,少走弯路

参考博客:https://blog.csdn.net/weixin_46887489?type=blog文章来源:https://www.toymoban.com/news/detail-827092.html

采用开源项目https://github.com/easzlab/kubeasz文章来源地址https://www.toymoban.com/news/detail-827092.html

到了这里,关于kubeasz部署k8s:v1.27.5集群的文章就介绍完了。如果您还想了解更多内容,请在右上角搜索TOY模板网以前的文章或继续浏览下面的相关文章,希望大家以后多多支持TOY模板网!