Contents

1 Submitting Applications

2 Bundling Your Application’s Dependencies

3 Launching Applications with spark-submit

4 Master URLs

5 Loading Configuration from a File

6 Advanced Dependency Management

8 More Information

1 Submitting Applications

The spark-submit script in Spark’s bin directory is used to launch applications on a cluster. It can use all of Spark’s supported cluster managers through a uniform interface, so you don’t have to configure your application specially for each one.

2 Bundling Your Application’s Dependencies

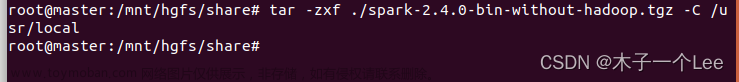

If your code depends on other projects, you will need to package them alongside your application in order to distribute the code to a Spark cluster. To do this, create an assembly jar (or “uber” jar) containing your code and its dependencies. Both sbt and Maven have assembly plugins. When creating assembly jars, list Spark and Hadoop as provided dependencies; these need not be bundled since they are provided by the cluster manager at runtime. Once you have an assembled jar, you can launch it by calling the bin/spark-submit script and passing the jar as an argument, as described in section 3.
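
A jar is an ordinary ZIP archive, so one way to check that Spark and Hadoop really were left out of an assembly jar (that is, declared as provided) is to scan its entries. The sketch below is a hypothetical helper, not part of Spark or of any build plugin; it assumes the standard package paths org/apache/spark/ and org/apache/hadoop/ and flags any class files found under them.

```python
import zipfile

# Package path prefixes of dependencies the cluster supplies at runtime.
# Assumption: Spark and Hadoop classes live under these standard paths.
PROVIDED_PREFIXES = ("org/apache/spark/", "org/apache/hadoop/")


def find_provided_classes(jar_path: str) -> list[str]:
    """Return the class files in the jar that belong to provided dependencies.

    A non-empty result suggests Spark or Hadoop was bundled by mistake
    instead of being marked as a provided dependency in the build.
    """
    with zipfile.ZipFile(jar_path) as jar:
        return sorted(
            name
            for name in jar.namelist()
            # Only compiled classes count; resources such as READMEs are ignored.
            if name.endswith(".class") and name.startswith(PROVIDED_PREFIXES)
        )
```

For instance, an empty list from find_provided_classes("target/my-app-assembly.jar") (the path is only an example) is consistent with Spark and Hadoop having been marked as provided.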

For Python, you can use the --py-files argument of spark-submit to add .py, .zip or .egg files to be distributed with your application. If you depend on multiple Python files, we recommend packaging them into a .zip or .egg. For third-party Python dependencies, see Python Package Management.
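
When an application is split across several local modules, the recommended .zip can be built with Python’s standard zipfile module. This is a minimal sketch under assumptions: the helper names and the layout (a package directory such as mylib/ containing an __init__.py) are hypothetical; the only Spark-specific part is the --py-files option, which takes a comma-separated list of files.

```python
import os
import zipfile


def zip_package(package_dir: str, zip_path: str) -> str:
    """Zip the .py files of a local package so it can be shipped with --py-files.

    Entries are stored relative to the package's parent directory, so
    mylib/util.py inside the archive stays importable as mylib.util once
    spark-submit places the archive on the PYTHONPATH.
    """
    parent = os.path.dirname(os.path.abspath(package_dir))
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as zf:
        for root, _dirs, files in os.walk(package_dir):
            for name in files:
                if name.endswith(".py"):
                    full = os.path.join(root, name)
                    zf.write(full, os.path.relpath(full, parent))
    return zip_path


def py_files_args(*paths: str) -> list[str]:
    """Build the --py-files option from one or more .py/.zip/.egg paths."""
    return ["--py-files", ",".join(paths)]
```

The resulting archive would then be passed as, for example, --py-files deps.zip alongside the application’s main .py script.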

3 Launching Applications with spark-submit

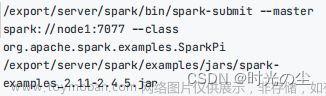

Once a user application is bundled, it can be launched using the bin/spark-submit script. This script takes care of setting up the classpath with Spark and its dependencies, and it works with all of the cluster managers and deploy modes that Spark supports.
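
The general form of the command is ./bin/spark-submit --class <main-class> --master <master-url> --deploy-mode <deploy-mode> --conf <key>=<value> ... <application-jar> [application-arguments]. The sketch below assembles that argument list in Python so it can be handed to subprocess.run. The helper names, the example class, jar path and master URL are illustrative assumptions; only the flags come from spark-submit itself. Options must precede the application jar, because everything after the jar is passed to the application’s main method.

```python
import os
import subprocess
from typing import Mapping, Optional, Sequence


def build_spark_submit(
    app_jar: str,
    main_class: str,
    master: str,
    deploy_mode: str = "client",
    conf: Optional[Mapping[str, str]] = None,
    app_args: Sequence[str] = (),
    spark_home: str = ".",
) -> list[str]:
    """Return the argv for bin/spark-submit (hypothetical helper)."""
    cmd = [
        os.path.join(spark_home, "bin", "spark-submit"),
        "--class", main_class,
        "--master", master,
        "--deploy-mode", deploy_mode,
    ]
    # Each Spark property becomes its own --conf key=value pair.
    for key, value in (conf or {}).items():
        cmd += ["--conf", f"{key}={value}"]
    # spark-submit options must come before the jar; anything after the
    # jar is forwarded unchanged to the application's main method.
    cmd.append(app_jar)
    cmd.extend(app_args)
    return cmd


def submit(cmd: Sequence[str]) -> int:
    """Run spark-submit and return its exit code (requires a Spark install)."""
    return subprocess.run(list(cmd), check=False).returncode
```

For example, build_spark_submit("my-app-assembly.jar", "org.example.Main", "yarn", deploy_mode="cluster") targets YARN in cluster mode; moving the same jar to another cluster manager only changes the master URL, which is the uniform interface described in section 1.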