一、前言

我创作这篇博客的初衷是因为我在ubuntu20.04环境下跑Colmap+OpenMVS这个算法框架的时候踩了很多坑,一方面是网上现在很多教程都是基于Windows环境下的,而Windows环境和Linux环境相比还是有很大的差异的;二是现在网上的很多教程基本很多步骤一带而过了,而往往这些一带而过的步骤也非常容易出问题。所以我希望我的这篇分享能够帮助到大家,不想再让后面的创作者再踩这些坑了。

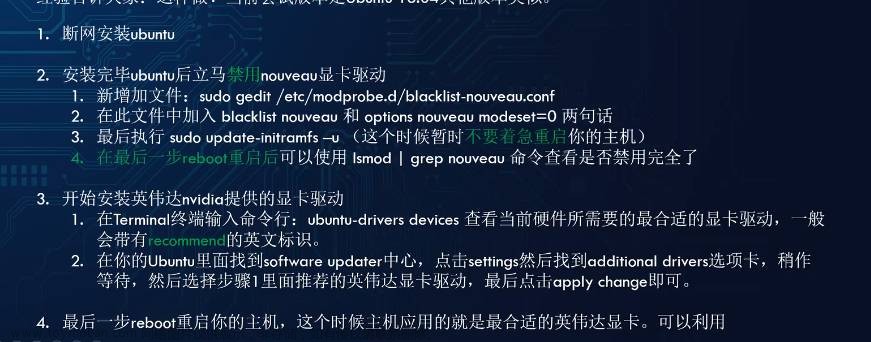

特别说明:如果大家在编译中遇到任何问题,建议先直接跳转到第七章:问题合集里面看是否有相应的问题,如果有任何问题,欢迎评论区留言交流。

在这里也有一些在这个方向上的一些优秀参考:

1、Windows环境下colmap+openmvs进行三维重建(选看):Windows环境下colmap+openmvs进行三维重建_colmap二次开发-CSDN博客

2、三维重建公开数据集(选看)

三维重建公开数据集整理(MVS篇)_三维重建数据集-CSDN博客

3、三维重建的一些方法总结(选看)

三维重建方法总结-CSDN博客

二、环境配置

下面是我所使用的一些环境配置,谨供大家参考:

Ubuntu: 20.04

cmake : 3.24.2(无太大版本要求)

g++/gcc : 7.5.0(无太大版本要求)

git : 2.25.1(无太大版本要求)

CUDA: 11.0 (建议就用这个版本)

Ubuntu 20.04安装CUDA & CUDNN 手把手带你撸_ubuntu20.04 cuda安装-CSDN博客

OpenCV: 3.4.5(建议就用这个版本)

Ubuntu20.04安装OpenCV3.4.5(两种方法&&图文详解)-云社区-华为云

Eigen: 3.4.1(建议就用这个版本)

ubuntu20.04安装eigen3.4.0(两种方式)和ceres-solver2.0.0_ceres-solver-2.0.0-CSDN博客

boost:

sudo apt-get -y install libboost-iostreams-dev libboost-program-options-dev libboost-system-dev libboost-serialization-dev

CGAL:

sudo apt-get -y install libcgal-dev libcgal-qt5-devceres-solver(这个前期在配置环境的时候不太建议大家装,在装的过程中很容易遇到版本冲突的问题,大家可以到具体要用的时候再装,建议装1.10版本及以上的)

Ubuntu20.04安装Ceres和g2o库_ubuntu20.04安装g2o-CSDN博客

vcglib: (这个库需要放在和OpenMVS同级目录,并且下载下来之后不用编译,在后面编译OpenMVS的时候可以直接链接到这个库)

git clone https://github.com/cdcseacave/VCG.git vcglib

下面附上一个查看电脑上相应库版本的链接:

查看Ubuntu中的OpenCV、Eigen、Ceres版本_查看ceres版本-CSDN博客

三、安装Colmap

建议把环境配置好之后再开始安装

以下这个博主的colmap安装教程写的很好,这里我就不再赘述,但是我建议先不要安装ceres-solver

Ubuntu20.04安装Colmap_colmap ubuntu20..04-CSDN博客

四、安装Meshlab

https://www.cnblogs.com/yibeimingyue/p/14690096.html

五、安装OpenMVS

以下这个博主的OpenMVS安装教程写的很好:OpenMVS详细安装教程(ubuntu18.04)_openmvs安装-CSDN博客

但是因为它这里面所用的是ubuntu18.04版本,所以建议从它的

第一章:安装以及运行OpenMVS

开始看起,一直看到

OpenMVS测试那里,

但是后面的数据集测试部分不建议继续看下去,根据我的测试,在这篇博客中使用的数据集并不适用于Colmap。

后面我会单独出一部分讲完整的Colmap+OpenMVS测试流程。

OpenMVS详细安装教程(ubuntu18.04)_openmvs安装-CSDN博客

六、运行测试

在安装好Colmap 和 OpenMVS之后,就可以开始进行下面的测试了

这里我们用的日本东北大学Tohoku University提供的公开数据集:

THU-MVS: Multi-View 3D Reconstruction Datasets

选择的是彩色小狗作为测试对象,选择DOG_RGB.zip文件夹下载

6.1colmap稀疏重建

1、新建项目

新建一个测试文件夹,比如我们这里新建的文件夹命名为project;

把解压好的DOG_RGB数据集放到project目录里面;

再在project文件夹里面新建一个文件夹dense;

在project目录下鼠标右击选择在终端中打开,在终端中输入:touch dog_test.db 新建一个文件dog_test.db;

ctrl+alt+t ,打开终端,输入colmap gui , 打开colmap

Fiel -> New project

点击Open,选择我们原来在project目录下创建的dog_test.db文件

点击Select, 选择我们存放照片的DOG_RGB文件夹

然后点击Save, 保存我们所新建的项目

2、特征提取:Processing -> Feature extraction (因为OpenMVS重建需要针孔相机模型,所以这里我们选择PINHOLE,其他的直接用系统默认)

点击Extract(这里如果CUDA版本不对的话可能会出现软件界面闪退的情况) ,特征提取完成之后在Colmap界面右侧会有下面这样的提示:

3、特征匹配 Processing -> Feature matching -> Run

4、Reconstruction -> Start recpnstruction

5、Reconstruction -> Dense reconstruction

点击Select, 选择我们前面在project目录里面新建的dense文件夹

然后依次点击Undistortion, Stereo, Fusion

该过程结束之后可以看到在dense文件夹下面有以下这些文件

可以双击fused.ply在Meshlab里面查看重建效果(这里的话要先安装Meshlab)

6.2 OpenMVS稠密重建

因为我们Colmap重建之后的点云数据没办法输入进OpenMVS直接使用,所以这里要进行一个数据格式的转换。

1、.bin 格式转换成 .txt格式

这里我有必要说明一下,因为OpenMVS使用Colmap里面的数据进行重建的话,需要camera.txt images.txt point3D.txt这三个文件,而我在实际操作的过程中发现一个问题,就是在Colmap软件界面里面Fiel -> export model as txt 导出来的camera.txt images.txt point3D.txt这三个文件里面相机参数和图片位姿参数有可能会是空的,这里可能是Colmap软件的一个BUG。

所以我这里着重讲一下怎么将Colmap软件输出的.bin文件格式转换成OpenMVS所需要的含有相机参数的.txt文件格式。

首先project -> dense -> sparse , 查看是否有这三个文件:camera.bin images.bin point3D.bin

然后这里附上一个Colmap官方给的 bin2txt 的python代码(不用细读,会用就行):

import os

import collections

import numpy as np

import struct

import argparse

CameraModel = collections.namedtuple(

"CameraModel", ["model_id", "model_name", "num_params"])

Camera = collections.namedtuple(

"Camera", ["id", "model", "width", "height", "params"])

BaseImage = collections.namedtuple(

"Image", ["id", "qvec", "tvec", "camera_id", "name", "xys", "point3D_ids"])

Point3D = collections.namedtuple(

"Point3D", ["id", "xyz", "rgb", "error", "image_ids", "point2D_idxs"])

class Image(BaseImage):

def qvec2rotmat(self):

return qvec2rotmat(self.qvec)

CAMERA_MODELS = {

CameraModel(model_id=0, model_name="SIMPLE_PINHOLE", num_params=3),

CameraModel(model_id=1, model_name="PINHOLE", num_params=4),

CameraModel(model_id=2, model_name="SIMPLE_RADIAL", num_params=4),

CameraModel(model_id=3, model_name="RADIAL", num_params=5),

CameraModel(model_id=4, model_name="OPENCV", num_params=8),

CameraModel(model_id=5, model_name="OPENCV_FISHEYE", num_params=8),

CameraModel(model_id=6, model_name="FULL_OPENCV", num_params=12),

CameraModel(model_id=7, model_name="FOV", num_params=5),

CameraModel(model_id=8, model_name="SIMPLE_RADIAL_FISHEYE", num_params=4),

CameraModel(model_id=9, model_name="RADIAL_FISHEYE", num_params=5),

CameraModel(model_id=10, model_name="THIN_PRISM_FISHEYE", num_params=12)

}

CAMERA_MODEL_IDS = dict([(camera_model.model_id, camera_model)

for camera_model in CAMERA_MODELS])

CAMERA_MODEL_NAMES = dict([(camera_model.model_name, camera_model)

for camera_model in CAMERA_MODELS])

def read_next_bytes(fid, num_bytes, format_char_sequence, endian_character="<"):

"""Read and unpack the next bytes from a binary file.

:param fid:

:param num_bytes: Sum of combination of {2, 4, 8}, e.g. 2, 6, 16, 30, etc.

:param format_char_sequence: List of {c, e, f, d, h, H, i, I, l, L, q, Q}.

:param endian_character: Any of {@, =, <, >, !}

:return: Tuple of read and unpacked values.

"""

data = fid.read(num_bytes)

return struct.unpack(endian_character + format_char_sequence, data)

def write_next_bytes(fid, data, format_char_sequence, endian_character="<"):

"""pack and write to a binary file.

:param fid:

:param data: data to send, if multiple elements are sent at the same time,

they should be encapsuled either in a list or a tuple

:param format_char_sequence: List of {c, e, f, d, h, H, i, I, l, L, q, Q}.

should be the same length as the data list or tuple

:param endian_character: Any of {@, =, <, >, !}

"""

if isinstance(data, (list, tuple)):

bytes = struct.pack(endian_character + format_char_sequence, *data)

else:

bytes = struct.pack(endian_character + format_char_sequence, data)

fid.write(bytes)

def read_cameras_text(path):

"""

see: src/base/reconstruction.cc

void Reconstruction::WriteCamerasText(const std::string& path)

void Reconstruction::ReadCamerasText(const std::string& path)

"""

cameras = {}

with open(path, "r") as fid:

while True:

line = fid.readline()

if not line:

break

line = line.strip()

if len(line) > 0 and line[0] != "#":

elems = line.split()

camera_id = int(elems[0])

model = elems[1]

width = int(elems[2])

height = int(elems[3])

params = np.array(tuple(map(float, elems[4:])))

cameras[camera_id] = Camera(id=camera_id, model=model,

width=width, height=height,

params=params)

return cameras

def read_cameras_binary(path_to_model_file):

"""

see: src/base/reconstruction.cc

void Reconstruction::WriteCamerasBinary(const std::string& path)

void Reconstruction::ReadCamerasBinary(const std::string& path)

"""

cameras = {}

with open(path_to_model_file, "rb") as fid:

num_cameras = read_next_bytes(fid, 8, "Q")[0]

for _ in range(num_cameras):

camera_properties = read_next_bytes(

fid, num_bytes=24, format_char_sequence="iiQQ")

camera_id = camera_properties[0]

model_id = camera_properties[1]

model_name = CAMERA_MODEL_IDS[camera_properties[1]].model_name

width = camera_properties[2]

height = camera_properties[3]

num_params = CAMERA_MODEL_IDS[model_id].num_params

params = read_next_bytes(fid, num_bytes=8*num_params,

format_char_sequence="d"*num_params)

cameras[camera_id] = Camera(id=camera_id,

model=model_name,

width=width,

height=height,

params=np.array(params))

assert len(cameras) == num_cameras

return cameras

def write_cameras_text(cameras, path):

"""

see: src/base/reconstruction.cc

void Reconstruction::WriteCamerasText(const std::string& path)

void Reconstruction::ReadCamerasText(const std::string& path)

"""

HEADER = "# Camera list with one line of data per camera:\n" + \

"# CAMERA_ID, MODEL, WIDTH, HEIGHT, PARAMS[]\n" + \

"# Number of cameras: {}\n".format(len(cameras))

with open(path, "w") as fid:

fid.write(HEADER)

for _, cam in cameras.items():

to_write = [cam.id, cam.model, cam.width, cam.height, *cam.params]

line = " ".join([str(elem) for elem in to_write])

fid.write(line + "\n")

def write_cameras_binary(cameras, path_to_model_file):

"""

see: src/base/reconstruction.cc

void Reconstruction::WriteCamerasBinary(const std::string& path)

void Reconstruction::ReadCamerasBinary(const std::string& path)

"""

with open(path_to_model_file, "wb") as fid:

write_next_bytes(fid, len(cameras), "Q")

for _, cam in cameras.items():

model_id = CAMERA_MODEL_NAMES[cam.model].model_id

camera_properties = [cam.id,

model_id,

cam.width,

cam.height]

write_next_bytes(fid, camera_properties, "iiQQ")

for p in cam.params:

write_next_bytes(fid, float(p), "d")

return cameras

def read_images_text(path):

"""

see: src/base/reconstruction.cc

void Reconstruction::ReadImagesText(const std::string& path)

void Reconstruction::WriteImagesText(const std::string& path)

"""

images = {}

with open(path, "r") as fid:

while True:

line = fid.readline()

if not line:

break

line = line.strip()

if len(line) > 0 and line[0] != "#":

elems = line.split()

image_id = int(elems[0])

qvec = np.array(tuple(map(float, elems[1:5])))

tvec = np.array(tuple(map(float, elems[5:8])))

camera_id = int(elems[8])

image_name = elems[9]

elems = fid.readline().split()

xys = np.column_stack([tuple(map(float, elems[0::3])),

tuple(map(float, elems[1::3]))])

point3D_ids = np.array(tuple(map(int, elems[2::3])))

images[image_id] = Image(

id=image_id, qvec=qvec, tvec=tvec,

camera_id=camera_id, name=image_name,

xys=xys, point3D_ids=point3D_ids)

return images

def read_images_binary(path_to_model_file):

"""

see: src/base/reconstruction.cc

void Reconstruction::ReadImagesBinary(const std::string& path)

void Reconstruction::WriteImagesBinary(const std::string& path)

"""

images = {}

with open(path_to_model_file, "rb") as fid:

num_reg_images = read_next_bytes(fid, 8, "Q")[0]

for _ in range(num_reg_images):

binary_image_properties = read_next_bytes(

fid, num_bytes=64, format_char_sequence="idddddddi")

image_id = binary_image_properties[0]

qvec = np.array(binary_image_properties[1:5])

tvec = np.array(binary_image_properties[5:8])

camera_id = binary_image_properties[8]

image_name = ""

current_char = read_next_bytes(fid, 1, "c")[0]

while current_char != b"\x00": # look for the ASCII 0 entry

image_name += current_char.decode("utf-8")

current_char = read_next_bytes(fid, 1, "c")[0]

num_points2D = read_next_bytes(fid, num_bytes=8,

format_char_sequence="Q")[0]

x_y_id_s = read_next_bytes(fid, num_bytes=24*num_points2D,

format_char_sequence="ddq"*num_points2D)

xys = np.column_stack([tuple(map(float, x_y_id_s[0::3])),

tuple(map(float, x_y_id_s[1::3]))])

point3D_ids = np.array(tuple(map(int, x_y_id_s[2::3])))

images[image_id] = Image(

id=image_id, qvec=qvec, tvec=tvec,

camera_id=camera_id, name=image_name,

xys=xys, point3D_ids=point3D_ids)

return images

def write_images_text(images, path):

"""

see: src/base/reconstruction.cc

void Reconstruction::ReadImagesText(const std::string& path)

void Reconstruction::WriteImagesText(const std::string& path)

"""

if len(images) == 0:

mean_observations = 0

else:

mean_observations = sum((len(img.point3D_ids) for _, img in images.items()))/len(images)

HEADER = "# Image list with two lines of data per image:\n" + \

"# IMAGE_ID, QW, QX, QY, QZ, TX, TY, TZ, CAMERA_ID, NAME\n" + \

"# POINTS2D[] as (X, Y, POINT3D_ID)\n" + \

"# Number of images: {}, mean observations per image: {}\n".format(len(images), mean_observations)

with open(path, "w") as fid:

fid.write(HEADER)

for _, img in images.items():

image_header = [img.id, *img.qvec, *img.tvec, img.camera_id, img.name]

first_line = " ".join(map(str, image_header))

fid.write(first_line + "\n")

points_strings = []

for xy, point3D_id in zip(img.xys, img.point3D_ids):

points_strings.append(" ".join(map(str, [*xy, point3D_id])))

fid.write(" ".join(points_strings) + "\n")

def write_images_binary(images, path_to_model_file):

"""

see: src/base/reconstruction.cc

void Reconstruction::ReadImagesBinary(const std::string& path)

void Reconstruction::WriteImagesBinary(const std::string& path)

"""

with open(path_to_model_file, "wb") as fid:

write_next_bytes(fid, len(images), "Q")

for _, img in images.items():

write_next_bytes(fid, img.id, "i")

write_next_bytes(fid, img.qvec.tolist(), "dddd")

write_next_bytes(fid, img.tvec.tolist(), "ddd")

write_next_bytes(fid, img.camera_id, "i")

for char in img.name:

write_next_bytes(fid, char.encode("utf-8"), "c")

write_next_bytes(fid, b"\x00", "c")

write_next_bytes(fid, len(img.point3D_ids), "Q")

for xy, p3d_id in zip(img.xys, img.point3D_ids):

write_next_bytes(fid, [*xy, p3d_id], "ddq")

def read_points3D_text(path):

"""

see: src/base/reconstruction.cc

void Reconstruction::ReadPoints3DText(const std::string& path)

void Reconstruction::WritePoints3DText(const std::string& path)

"""

points3D = {}

with open(path, "r") as fid:

while True:

line = fid.readline()

if not line:

break

line = line.strip()

if len(line) > 0 and line[0] != "#":

elems = line.split()

point3D_id = int(elems[0])

xyz = np.array(tuple(map(float, elems[1:4])))

rgb = np.array(tuple(map(int, elems[4:7])))

error = float(elems[7])

image_ids = np.array(tuple(map(int, elems[8::2])))

point2D_idxs = np.array(tuple(map(int, elems[9::2])))

points3D[point3D_id] = Point3D(id=point3D_id, xyz=xyz, rgb=rgb,

error=error, image_ids=image_ids,

point2D_idxs=point2D_idxs)

return points3D

def read_points3D_binary(path_to_model_file):

"""

see: src/base/reconstruction.cc

void Reconstruction::ReadPoints3DBinary(const std::string& path)

void Reconstruction::WritePoints3DBinary(const std::string& path)

"""

points3D = {}

with open(path_to_model_file, "rb") as fid:

num_points = read_next_bytes(fid, 8, "Q")[0]

for _ in range(num_points):

binary_point_line_properties = read_next_bytes(

fid, num_bytes=43, format_char_sequence="QdddBBBd")

point3D_id = binary_point_line_properties[0]

xyz = np.array(binary_point_line_properties[1:4])

rgb = np.array(binary_point_line_properties[4:7])

error = np.array(binary_point_line_properties[7])

track_length = read_next_bytes(

fid, num_bytes=8, format_char_sequence="Q")[0]

track_elems = read_next_bytes(

fid, num_bytes=8*track_length,

format_char_sequence="ii"*track_length)

image_ids = np.array(tuple(map(int, track_elems[0::2])))

point2D_idxs = np.array(tuple(map(int, track_elems[1::2])))

points3D[point3D_id] = Point3D(

id=point3D_id, xyz=xyz, rgb=rgb,

error=error, image_ids=image_ids,

point2D_idxs=point2D_idxs)

return points3D

def write_points3D_text(points3D, path):

"""

see: src/base/reconstruction.cc

void Reconstruction::ReadPoints3DText(const std::string& path)

void Reconstruction::WritePoints3DText(const std::string& path)

"""

if len(points3D) == 0:

mean_track_length = 0

else:

mean_track_length = sum((len(pt.image_ids) for _, pt in points3D.items()))/len(points3D)

HEADER = "# 3D point list with one line of data per point:\n" + \

"# POINT3D_ID, X, Y, Z, R, G, B, ERROR, TRACK[] as (IMAGE_ID, POINT2D_IDX)\n" + \

"# Number of points: {}, mean track length: {}\n".format(len(points3D), mean_track_length)

with open(path, "w") as fid:

fid.write(HEADER)

for _, pt in points3D.items():

point_header = [pt.id, *pt.xyz, *pt.rgb, pt.error]

fid.write(" ".join(map(str, point_header)) + " ")

track_strings = []

for image_id, point2D in zip(pt.image_ids, pt.point2D_idxs):

track_strings.append(" ".join(map(str, [image_id, point2D])))

fid.write(" ".join(track_strings) + "\n")

def write_points3D_binary(points3D, path_to_model_file):

"""

see: src/base/reconstruction.cc

void Reconstruction::ReadPoints3DBinary(const std::string& path)

void Reconstruction::WritePoints3DBinary(const std::string& path)

"""

with open(path_to_model_file, "wb") as fid:

write_next_bytes(fid, len(points3D), "Q")

for _, pt in points3D.items():

write_next_bytes(fid, pt.id, "Q")

write_next_bytes(fid, pt.xyz.tolist(), "ddd")

write_next_bytes(fid, pt.rgb.tolist(), "BBB")

write_next_bytes(fid, pt.error, "d")

track_length = pt.image_ids.shape[0]

write_next_bytes(fid, track_length, "Q")

for image_id, point2D_id in zip(pt.image_ids, pt.point2D_idxs):

write_next_bytes(fid, [image_id, point2D_id], "ii")

def detect_model_format(path, ext):

if os.path.isfile(os.path.join(path, "cameras" + ext)) and \

os.path.isfile(os.path.join(path, "images" + ext)) and \

os.path.isfile(os.path.join(path, "points3D" + ext)):

print("Detected model format: '" + ext + "'")

return True

return False

def read_model(path, ext=""):

# try to detect the extension automatically

if ext == "":

if detect_model_format(path, ".bin"):

ext = ".bin"

elif detect_model_format(path, ".txt"):

ext = ".txt"

else:

print("Provide model format: '.bin' or '.txt'")

return

if ext == ".txt":

cameras = read_cameras_text(os.path.join(path, "cameras" + ext))

images = read_images_text(os.path.join(path, "images" + ext))

points3D = read_points3D_text(os.path.join(path, "points3D") + ext)

else:

cameras = read_cameras_binary(os.path.join(path, "cameras" + ext))

images = read_images_binary(os.path.join(path, "images" + ext))

points3D = read_points3D_binary(os.path.join(path, "points3D") + ext)

return cameras, images, points3D

def write_model(cameras, images, points3D, path, ext=".bin"):

if ext == ".txt":

write_cameras_text(cameras, os.path.join(path, "cameras" + ext))

write_images_text(images, os.path.join(path, "images" + ext))

write_points3D_text(points3D, os.path.join(path, "points3D") + ext)

else:

write_cameras_binary(cameras, os.path.join(path, "cameras" + ext))

write_images_binary(images, os.path.join(path, "images" + ext))

write_points3D_binary(points3D, os.path.join(path, "points3D") + ext)

return cameras, images, points3D

def qvec2rotmat(qvec):

return np.array([

[1 - 2 * qvec[2]**2 - 2 * qvec[3]**2,

2 * qvec[1] * qvec[2] - 2 * qvec[0] * qvec[3],

2 * qvec[3] * qvec[1] + 2 * qvec[0] * qvec[2]],

[2 * qvec[1] * qvec[2] + 2 * qvec[0] * qvec[3],

1 - 2 * qvec[1]**2 - 2 * qvec[3]**2,

2 * qvec[2] * qvec[3] - 2 * qvec[0] * qvec[1]],

[2 * qvec[3] * qvec[1] - 2 * qvec[0] * qvec[2],

2 * qvec[2] * qvec[3] + 2 * qvec[0] * qvec[1],

1 - 2 * qvec[1]**2 - 2 * qvec[2]**2]])

def rotmat2qvec(R):

Rxx, Ryx, Rzx, Rxy, Ryy, Rzy, Rxz, Ryz, Rzz = R.flat

K = np.array([

[Rxx - Ryy - Rzz, 0, 0, 0],

[Ryx + Rxy, Ryy - Rxx - Rzz, 0, 0],

[Rzx + Rxz, Rzy + Ryz, Rzz - Rxx - Ryy, 0],

[Ryz - Rzy, Rzx - Rxz, Rxy - Ryx, Rxx + Ryy + Rzz]]) / 3.0

eigvals, eigvecs = np.linalg.eigh(K)

qvec = eigvecs[[3, 0, 1, 2], np.argmax(eigvals)]

if qvec[0] < 0:

qvec *= -1

return qvec

def main():

parser = argparse.ArgumentParser(description="Read and write COLMAP binary and text models")

parser.add_argument("--input_model", help="path to input model folder")

parser.add_argument("--input_format", choices=[".bin", ".txt"],

help="input model format", default="")

parser.add_argument("--output_model",

help="path to output model folder")

parser.add_argument("--output_format", choices=[".bin", ".txt"],

help="outut model format", default=".txt")

args = parser.parse_args()

cameras, images, points3D = read_model(path=args.input_model, ext=args.input_format)

print("num_cameras:", len(cameras))

print("num_images:", len(images))

print("num_points3D:", len(points3D))

if args.output_model is not None:

write_model(cameras, images, points3D, path=args.output_model, ext=args.output_format)

if __name__ == "__main__":

main()这个代码的使用方法如下:

在project目录下新建一个python文件,命名为bin_txt.py

创建好之后双击打开,将前面的python代码复制进去,点击保存

然后在终端中打开,

输入以下命令:

python3 bin_txt.py --input_model /home/merry/project/dense/sparse --input_format .bin --output_model /home/merry/project/dense/sparse --output_format .txt

解读:python3是因为里面的一些依赖可能需要最新版本的python,--input_model 后面跟的/home/merry/project/dense/sparse是.bin文件所在的绝对路径(当然也可以使用相对路径),--input_format.bin 是用来说明被转换的格式是.bin, 后面跟的/home/merry/project/dense/sparse是转换完成后的.txt文件存放的绝对路径, --output_format .txt是说明转换完成后的是.txt格式。

运行之后可以看到在/home/merry/project/dense/sparse目录下看到多出的几个含有相机参数的文件:

2、将前面Colmap导出的.txt 文件转换成OpenMVS能用的.mvs文件

首先进入到OpenMVS的安装目录:cd /usr/local/bin/OpenMVS(一般都是默认安装到这个目录)

在终端中打开, 输入以下命令:

./InterfaceCOLMAP -i /home/merry/project/dense -o /home/merry/project/dog.mvs

这个时候转换好的dog.mvs文件就可以在OpenMVS中打开了。

(但是切记这里输出的dog.mvs文件和dense文件有依赖关系,所以如果要移动的话建议要将这两个文件夹一起移动)

在终端中输入: ./Viewer /home/merry/project/dog.mvs

3、稠密重建

在进行这一步之前,可以把原始的DOG_RGB文件夹移到其他路径下

然后在终端中输入并运行:

./DensifyPointCloud -w /home/merry/project -i dog.mvs -o dog_dense.mvs

-w 设置的是图像数据的路径 -i 是输入的文件名 -o是输出的文件名

之后安静的等待几分钟

可以看到重建之后的界面如下:

虽然现在看起来很奇怪,但是我们可以一起期待一下后面完整流程走过之后的效果。

4、点云网格化

在终端输入并运行:

./ReconstructMesh -w /home/merry/project -i dog_dense.mvs -o dog_dense_recon.mvs

这里注意这个-w 后面的工作目录这里有可能会报错,不用进去/home/merry/project/dense目录里面,直接到 /home/merry/project 目录就可以

可以看到这里在project目录下生成了dog_dense_recon.mvs文件

可以看到,网格化之后的模型是这样的:

5、网格优化

在终端中输入:

./RefineMesh -w /home/merry/project -i dog_dense_recon.mvs -o dog_refine.mvs

因为这一步需要比较多的内存,所以有可能会出现进程被杀的情况

可以把电脑上其他进程先关闭,通过使用--resolution-level 1缩小处理图像的比例来解决,从而限制内存使用

终端输入:

./RefineMesh -w /home/merry/project -i dog_dense_recon.mvs -o dog_refine.mvs --resolution-level 1

现在就可以了,下面是进行网格优化之后的效果:

6、纹理贴图

在终端输入:

./TextureMesh -w /home/merry/project -i dog_refine.mvs -o dog_tex.mvs

下面是纹理贴图之后的效果,还是非常不错的

七、问题合集

下面是我在实际操作中所遇到的一些问题,大家可以对应着看

1、因为很容易就出现CUDA版本冲突的问题,下面是一个ubuntu20.04卸载CUDA的教程

Ubuntu20.04 卸载cuda 11.0_nvidia/cuda:11.0.3-base-ubuntu20.04-CSDN博客

2、编译过程中与eigen版本冲突

fatal error: Eigen/Core: No such file or directory · Issue #14868 · opencv/opencv · GitHub

3、编译过程中报错, CUDA找不到C++17的版本号

c++ - CMake cannot set CUDA standard c++17 - Stack Overflow

4、ceres-solver版本冲突问题

Found Eigen dependency, but the version of Eigen……编译问题解决_failed to find ceres - found eigen dependency, but-CSDN博客

5、The CMAKE_C_COMPILER: /usr/local/bin/cc is not a full path to an existing compiler tool

ros - The CMAKE_C_COMPILER: /usr/local/bin/cc is not a full path to an existing compiler tool - Ask Ubuntu

6、ERROR: cannot launch node of type [image_view/image_view]: image_viewROS path [0]=/opt/ros/noetic/s

ERROR: cannot launch node of type [image_view/image_view]: image_viewROS path [0]=/opt/ros/noetic/s-CSDN博客

这一步报错其实是在安装OpenMVS时,这一步没弄好:“# /path to vcglib/vcglib"改为自己的vcglib的路径:Vcglib的父级目录/vcglib”,

7、在OpenMVS的网格优化RefineMesh环节进程被杀

RefineMesh Killed · Issue #662 · cdcseacave/openMVS · GitHub

8、gcc编译警告关于(warning: this ‘if’ clause does not guard... [-Wmisleading-indentation] if(err)之类的问题)

gcc编译警告关于(warning: this ‘if’ clause does not guard... [-Wmisleading-indentation] if(err)之类的问题)_[warning] this 'for' clause does not guard... [-wm-CSDN博客

9、openMVS执行Reconstructmesh.exe只生成ply文件,不生成mvs文件

openMVS执行Reconstructmesh.exe只生成ply文件,不生成mvs文件-CSDN博客

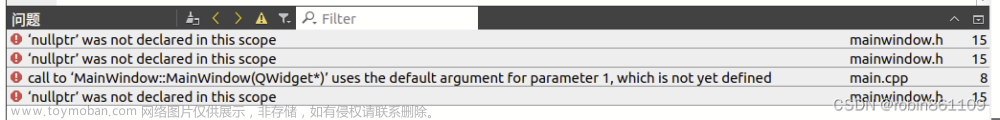

10、error: ‘BasicVertexPair’ does not name a type

error: ‘BasicVertexPair’ does not name a type_error: ‘basicvertexpair’ does not name a type type-CSDN博客

这一步报错主要是因为vcglib版本太高了,要使用git退回到低版本

11、Cannot set "VCG_INCLUDE_DIRS": current scope has no parent. #223

Cannot set "VCG_INCLUDE_DIRS": current scope has no parent. · Issue #223 · cnr-isti-vclab/vcglib · GitHub12、collect2: error: ld returned 1 exit status(解决方案大总结)

collect2: error: ld returned 1 exit status(解决方案大总结)-CSDN博客

如果出现这个报错,很有可能是eigen版本的问题文章来源:https://www.toymoban.com/news/detail-837492.html

以上就是本篇分享的全部内容了,感谢大家的观看,新人创作不易,如果大家喜欢的话,欢迎大家点赞、收藏+关注,后面我将会有更多三维重建相关的内容分享在这里。文章来源地址https://www.toymoban.com/news/detail-837492.html

到了这里,关于ubuntu20.04环境下安装运行Colmap+OpenMVS的文章就介绍完了。如果您还想了解更多内容,请在右上角搜索TOY模板网以前的文章或继续浏览下面的相关文章,希望大家以后多多支持TOY模板网!