1. Discovering the problem

[root@k8s-master 09:30:54 ~]# kubectl get pod

The connection to the server 10.75.78.212:6443 was refused - did you specify the right host or port?

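Every kubectl command fails with the same message: the connection to port 6443 on 10.75.78.212 was refused.

6443 is the default kube-apiserver port, so the first suspicion is that the kube-apiserver service itself is down.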
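To back up that suspicion, it can help to confirm on the master node that nothing is listening on port 6443. A minimal sketch, assuming `ss` (from iproute2) is installed; the helper name `is_listening` is hypothetical:

```shell
# Return 0 if a TCP socket is listening on the given port, 1 otherwise.
# is_listening is a hypothetical helper name, not part of any tool.
is_listening() {
  # Column 4 of "ss -lnt" output is the local address:port.
  ss -lnt | awk -v port="$1" '
    $4 ~ (":" port "$") { found = 1 }
    END { exit !found }
  '
}

# Example:
#   is_listening 6443 && echo "6443 is open" || echo "nothing listens on 6443"
```

If nothing is listening, the problem is on the server side rather than in the kubectl configuration.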

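Here is a brief summary of the kube-apiserver component:

kube-apiserver is the hub of the Kubernetes control plane. It handles all API calls, including cluster management, application deployment and maintenance, and user interaction, and it is the intermediary through which the other cluster components read and write cluster data. Because it is so critical, kube-apiserver must be highly available, and production environments usually run multiple replicas.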

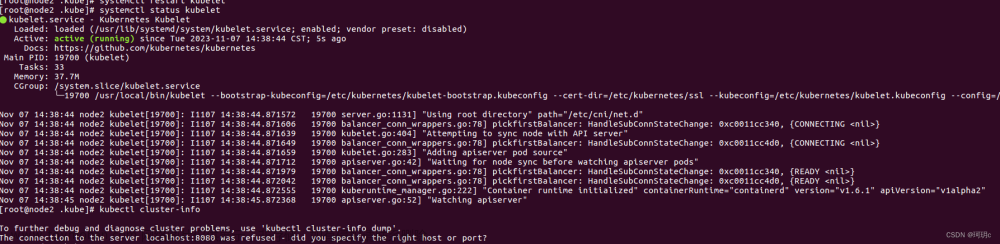

2. Checking the apiserver component status

[root@k8s-master 09:49:54 ~]# systemctl status kube-apiserver

Unit kube-apiserver.service could not be found.

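Note that this cluster was deployed with kubeadm, so kube-apiserver is not a systemd service. It runs as a static Pod managed by the kubelet, and you would normally inspect it with kubectl. As shown above, however, every kubectl command fails because the apiserver is unreachable. So what now? Don't panic!

In this situation you can check the kube-apiserver container directly with docker ps.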

[root@k8s-master 18:44:07 manifests]# docker ps -a |grep apiserver

c27dccf09b6f ca9843d3b545 "kube-apiserver --ad…" 2 minutes ago Exited (1) About a minute ago k8s_kube-apiserver_kube-apiserver-k8s-master_kube-system_fab85d8ba6972312b4dc0da409806a7e_891

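The output of docker ps -a | grep apiserver shows a kube-apiserver container that was created 2 minutes ago and exited about a minute ago with exit code 1, which usually means the process hit an error and terminated abnormally. The trailing 891 in the container name is the restart count, so the kubelet has been restarting it over and over.

At this point it is fairly clear that the apiserver itself is failing.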

3. Troubleshooting

To investigate further, look at the logs of that kube-apiserver container. Run the following command:

[root@k8s-master 18:44:22 manifests]# docker logs c27dccf09b6f

Flag --insecure-port has been deprecated, This flag has no effect now and will be removed in v1.24.

I0318 10:42:08.331025 1 server.go:632] external host was not specified, using 10.75.78.212

I0318 10:42:08.331610 1 server.go:182] Version: v1.20.0

I0318 10:42:08.533284 1 shared_informer.go:240] Waiting for caches to sync for node_authorizer

I0318 10:42:08.534164 1 plugins.go:158] Loaded 12 mutating admission controller(s) successfully in the following order: NamespaceLifecycle,LimitRanger,ServiceAccount,NodeRestriction,TaintNodesByCondition,Priority,DefaultTolerationSeconds,DefaultStorageClass,StorageObjectInUseProtection,RuntimeClass,DefaultIngressClass,MutatingAdmissionWebhook.

I0318 10:42:08.534179 1 plugins.go:161] Loaded 10 validating admission controller(s) successfully in the following order: LimitRanger,ServiceAccount,Priority,PersistentVolumeClaimResize,RuntimeClass,CertificateApproval,CertificateSigning,CertificateSubjectRestriction,ValidatingAdmissionWebhook,ResourceQuota.

I0318 10:42:08.534989 1 plugins.go:158] Loaded 12 mutating admission controller(s) successfully in the following order: NamespaceLifecycle,LimitRanger,ServiceAccount,NodeRestriction,TaintNodesByCondition,Priority,DefaultTolerationSeconds,DefaultStorageClass,StorageObjectInUseProtection,RuntimeClass,DefaultIngressClass,MutatingAdmissionWebhook.

I0318 10:42:08.534999 1 plugins.go:161] Loaded 10 validating admission controller(s) successfully in the following order: LimitRanger,ServiceAccount,Priority,PersistentVolumeClaimResize,RuntimeClass,CertificateApproval,CertificateSigning,CertificateSubjectRestriction,ValidatingAdmissionWebhook,ResourceQuota.

I0318 10:42:08.536967 1 client.go:360] parsed scheme: "endpoint"

I0318 10:42:08.537010 1 endpoint.go:68] ccResolverWrapper: sending new addresses to cc: [{https://127.0.0.1:2379 <nil> 0 <nil>}]

W0318 10:42:08.547521 1 clientconn.go:1223] grpc: addrConn.createTransport failed to connect to {https://127.0.0.1:2379 <nil> 0 <nil>}. Err :connection error: desc = "transport: authentication handshake failed: x509: certificate is valid for 10.75.78.212, not 127.0.0.1". Reconnecting...

I0318 10:42:09.532937 1 client.go:360] parsed scheme: "endpoint"

I0318 10:42:09.532967 1 endpoint.go:68] ccResolverWrapper: sending new addresses to cc: [{https://127.0.0.1:2379 <nil> 0 <nil>}]

W0318 10:42:09.541561 1 clientconn.go:1223] grpc: addrConn.createTransport failed to connect to {https://127.0.0.1:2379 <nil> 0 <nil>}. Err :connection error: desc = "transport: authentication handshake failed: x509: certificate is valid for 10.75.78.212, not 127.0.0.1". Reconnecting...

W0318 10:42:09.556086 1 clientconn.go:1223] grpc: addrConn.createTransport failed to connect to {https://127.0.0.1:2379 <nil> 0 <nil>}. Err :connection error: desc = "transport: authentication handshake failed: x509: certificate is valid for 10.75.78.212, not 127.0.0.1". Reconnecting...

W0318 10:42:10.550560 1 clientconn.go:1223] grpc: addrConn.createTransport failed to connect to {https://127.0.0.1:2379 <nil> 0 <nil>}. Err :connection error: desc = "transport: authentication handshake failed: x509: certificate is valid for 10.75.78.212, not 127.0.0.1". Reconnecting...

W0318 10:42:11.382747 1 clientconn.go:1223] grpc: addrConn.createTransport failed to connect to {https://127.0.0.1:2379 <nil> 0 <nil>}. Err :connection error: desc = "transport: authentication handshake failed: x509: certificate is valid for 10.75.78.212, not 127.0.0.1". Reconnecting...

W0318 10:42:12.307347 1 clientconn.go:1223] grpc: addrConn.createTransport failed to connect to {https://127.0.0.1:2379 <nil> 0 <nil>}. Err :connection error: desc = "transport: authentication handshake failed: x509: certificate is valid for 10.75.78.212, not 127.0.0.1". Reconnecting...

W0318 10:42:13.486728 1 clientconn.go:1223] grpc: addrConn.createTransport failed to connect to {https://127.0.0.1:2379 <nil> 0 <nil>}. Err :connection error: desc = "transport: authentication handshake failed: x509: certificate is valid for 10.75.78.212, not 127.0.0.1". Reconnecting...

W0318 10:42:14.739642 1 clientconn.go:1223] grpc: addrConn.createTransport failed to connect to {https://127.0.0.1:2379 <nil> 0 <nil>}. Err :connection error: desc = "transport: authentication handshake failed: x509: certificate is valid for 10.75.78.212, not 127.0.0.1". Reconnecting...

W0318 10:42:17.835021 1 clientconn.go:1223] grpc: addrConn.createTransport failed to connect to {https://127.0.0.1:2379 <nil> 0 <nil>}. Err :connection error: desc = "transport: authentication handshake failed: x509: certificate is valid for 10.75.78.212, not 127.0.0.1". Reconnecting...

W0318 10:42:18.449721 1 clientconn.go:1223] grpc: addrConn.createTransport failed to connect to {https://127.0.0.1:2379 <nil> 0 <nil>}. Err :connection error: desc = "transport: authentication handshake failed: x509: certificate is valid for 10.75.78.212, not 127.0.0.1". Reconnecting...

W0318 10:42:24.705585 1 clientconn.go:1223] grpc: addrConn.createTransport failed to connect to {https://127.0.0.1:2379 <nil> 0 <nil>}. Err :connection error: desc = "transport: authentication handshake failed: x509: certificate is valid for 10.75.78.212, not 127.0.0.1". Reconnecting...

W0318 10:42:25.289047 1 clientconn.go:1223] grpc: addrConn.createTransport failed to connect to {https://127.0.0.1:2379 <nil> 0 <nil>}. Err :connection error: desc = "transport: authentication handshake failed: x509: certificate is valid for 10.75.78.212, not 127.0.0.1". Reconnecting...

Error: context deadline exceeded

One error message stands out:

transport: authentication handshake failed: x509: certificate is valid for 10.75.78.212, not 127.0.0.1

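According to the log, the kube-apiserver container cannot start because it tries to connect to the local etcd service at 127.0.0.1:2379 and the TLS handshake fails certificate verification. The error says that the etcd certificate is valid for the IP address 10.75.78.212 but not for 127.0.0.1. After retrying for about 20 seconds, the apiserver gives up with "context deadline exceeded" and exits.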
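With this many repeated lines, it can be easier to filter the log down to the certificate error. A small sketch; `first_x509_error` is a hypothetical helper name, and it only reads log text from standard input:

```shell
# Print the first x509 error found in log text read from standard input.
# first_x509_error is a hypothetical helper name.
first_x509_error() {
  # -m1 stops at the first matching line; -o prints only the matched part,
  # from "x509:" up to (not including) the closing double quote.
  grep -m1 -o 'x509: [^"]*'
}

# Example (container ID from the docker ps output; 2>&1 is used because
# the apiserver writes these log lines to stderr):
#   docker logs c27dccf09b6f 2>&1 | first_x509_error
```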

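This is a configuration problem, usually caused by a mistake made while renewing certificates. The etcd certificate has to list every address that clients use to reach etcd.

Normally, when kubeadm initializes a cluster it generates all the required certificates automatically, and the etcd server certificate should include the loopback address (127.0.0.1) as well as the IP address of the control-plane node.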
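To see which addresses a certificate actually covers, its Subject Alternative Name (SAN) entries can be printed with openssl. A minimal sketch, assuming openssl is installed and that the etcd server certificate sits at the kubeadm default path `/etc/kubernetes/pki/etcd/server.crt` (an assumption; the path is not shown in this article). The helper names `cert_sans` and `cert_has_ip` are hypothetical:

```shell
# Print the Subject Alternative Name section of a PEM certificate.
cert_sans() {
  openssl x509 -in "$1" -noout -text | grep -A1 'Subject Alternative Name'
}

# Succeed only if the certificate lists the given IP address as a SAN.
# -F treats the pattern as a literal string, -w requires a whole-word match
# so that 10.75.78.21 does not match 10.75.78.212.
cert_has_ip() {
  cert_sans "$1" | grep -qwF "IP Address:$2"
}

# Example (path is the assumed kubeadm default):
#   cert_sans /etc/kubernetes/pki/etcd/server.crt
#   cert_has_ip /etc/kubernetes/pki/etcd/server.crt 127.0.0.1 || echo "127.0.0.1 missing"
```

In the situation described here, one would expect 10.75.78.212 to be listed and 127.0.0.1 to be missing.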

4. Fixing the problem

There are several ways to fix this. I chose method 2.

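4.1 Method 1: regenerate the etcd certificate

Regenerate the etcd server certificate so that its SAN includes 127.0.0.1 and localhost. Connections from the local host address to the etcd server can then authenticate successfully.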
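A rough sketch of method 1, assuming the kubeadm default certificate directory (`/etc/kubernetes/pki`, with the etcd pair in `etcd/server.crt` and `etcd/server.key`) and a kubeadm version that provides `kubeadm init phase certs etcd-server`. kubeadm reuses an existing certificate pair, so the old files are moved aside first. The helper name `regen_etcd_server_cert` is hypothetical, and since this method was not the one used here, treat it as untested:

```shell
# Move the current etcd server certificate pair aside and ask kubeadm to
# issue a new one. regen_etcd_server_cert is a hypothetical helper name.
# $1: certificate directory (assumed kubeadm default: /etc/kubernetes/pki)
regen_etcd_server_cert() {
  cert_dir="${1:-/etc/kubernetes/pki}"
  backup_dir="$cert_dir/etcd/backup-$(date +%Y%m%d%H%M%S)"

  mkdir -p "$backup_dir" || return 1
  # kubeadm reuses an existing pair, so the old files must not be in place.
  mv "$cert_dir/etcd/server.crt" "$cert_dir/etcd/server.key" "$backup_dir/" || return 1

  kubeadm init phase certs etcd-server --cert-dir "$cert_dir"
}

# Example:
#   regen_etcd_server_cert /etc/kubernetes/pki
```

If the cluster was created from a kubeadm configuration file, the same file probably needs to be passed with `--config` so that any custom SANs are kept. Depending on the etcd version, the etcd container may also need a restart before it serves the new certificate.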

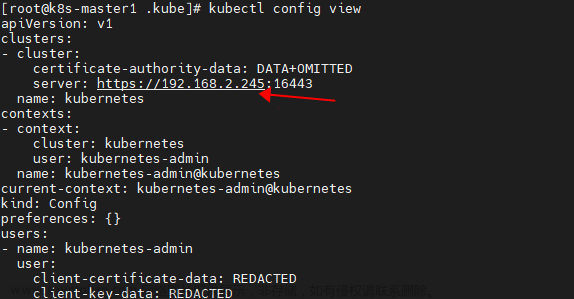

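4.2 Method 2: change the IP

Connect using the IP that the certificate already contains: change the configuration of the Kubernetes component (most likely kube-apiserver) so that it connects to etcd at 10.75.78.212 instead of 127.0.0.1. This means changing the --etcd-servers flag to an IP that matches the certificate.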

[root@k8s-master 09:59:11 ~]# cat /etc/kubernetes/manifests/kube-apiserver.yaml

apiVersion: v1
kind: Pod
metadata:
  annotations:
    kubeadm.kubernetes.io/kube-apiserver.advertise-address.endpoint: 10.75.78.212:6443
  creationTimestamp: null
  labels:
    component: kube-apiserver
    tier: control-plane
  name: kube-apiserver
  namespace: kube-system
spec:
  containers:
  - command:
    - kube-apiserver
    - --advertise-address=10.75.78.212
    - --allow-privileged=true
    - --authorization-mode=Node,RBAC
    - --client-ca-file=/etc/kubernetes/pki/ca.crt
    - --enable-admission-plugins=NodeRestriction
    - --enable-bootstrap-token-auth=true
    - --etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt
    - --etcd-certfile=/etc/kubernetes/pki/apiserver-etcd-client.crt
    - --etcd-keyfile=/etc/kubernetes/pki/apiserver-etcd-client.key
    - --etcd-servers=https://127.0.0.1:2379
    - --insecure-port=0
    - --kubelet-client-certificate=/etc/kubernetes/pki/apiserver-kubelet-client.crt
    - --kubelet-client-key=/etc/kubernetes/pki/apiserver-kubelet-client.key
    - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
    - --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.crt
    - --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client.key
    - --requestheader-allowed-names=front-proxy-client
    - --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt
    - --requestheader-extra-headers-prefix=X-Remote-Extra-
    - --requestheader-group-headers=X-Remote-Group
    - --requestheader-username-headers=X-Remote-User
    - --secure-port=6443
    - --service-account-issuer=https://kubernetes.default.svc.cluster.local
    - --service-account-key-file=/etc/kubernetes/pki/sa.pub
    - --service-account-signing-key-file=/etc/kubernetes/pki/sa.key
    - --service-cluster-ip-range=10.96.0.0/12
    - --tls-cert-file=/etc/kubernetes/pki/apiserver.crt
    - --tls-private-key-file=/etc/kubernetes/pki/apiserver.key
    - --enable-aggregator-routing=true
    image: registry.aliyuncs.com/google_containers/kube-apiserver:v1.20.0
    imagePullPolicy: IfNotPresent
    livenessProbe:
      failureThreshold: 8
      httpGet:
        host: 10.75.78.212
        path: /livez
        port: 6443
        scheme: HTTPS
      initialDelaySeconds: 10
      periodSeconds: 10
      timeoutSeconds: 15
    name: kube-apiserver
    readinessProbe:
      failureThreshold: 3
      httpGet:
        host: 10.75.78.212
        path: /readyz
        port: 6443
        scheme: HTTPS
      periodSeconds: 1
      timeoutSeconds: 15
    resources:
      requests:
        cpu: 250m
    startupProbe:
      failureThreshold: 24
      httpGet:
        host: 10.75.78.212
        path: /livez
        port: 6443
        scheme: HTTPS
      initialDelaySeconds: 10
      periodSeconds: 10
      timeoutSeconds: 15
    volumeMounts:
    - mountPath: /etc/ssl/certs
      name: ca-certs
      readOnly: true
    - mountPath: /etc/pki
      name: etc-pki
      readOnly: true
    - mountPath: /etc/kubernetes/pki
      name: k8s-certs
      readOnly: true
  hostNetwork: true
  priorityClassName: system-node-critical
  volumes:
  - hostPath:
      path: /etc/ssl/certs
      type: DirectoryOrCreate
    name: ca-certs
  - hostPath:
      path: /etc/pki
      type: DirectoryOrCreate
    name: etc-pki
  - hostPath:
      path: /etc/kubernetes/pki
      type: DirectoryOrCreate
    name: k8s-certs
status: {}

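In kube-apiserver.yaml, change --etcd-servers=https://127.0.0.1:2379

to --etcd-servers=https://10.75.78.212:2379

Note that the kubelet watches /etc/kubernetes/manifests/kube-apiserver.yaml and recreates the Pod automatically when the file changes, so no manual restart is needed. Back up the file before editing it.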
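The backup and the edit can be done in one step. A minimal sketch, assuming GNU sed; the helper name `switch_etcd_endpoint` and the backup directory are hypothetical. The backup is deliberately written outside the manifests directory, because the kubelet may treat any extra file there as another static Pod manifest:

```shell
# Back up a kube-apiserver manifest, then point --etcd-servers at a new IP.
# switch_etcd_endpoint is a hypothetical helper name.
# $1: manifest path   $2: new etcd IP   $3: backup directory (outside manifests/)
switch_etcd_endpoint() {
  manifest="$1"
  new_ip="$2"
  backup_dir="$3"

  # Keep a copy of the original file, preserving mode and timestamps.
  cp -p "$manifest" "$backup_dir/$(basename "$manifest").bak" || return 1

  # Replace only the loopback etcd endpoint; every other flag is untouched.
  sed -i "s#--etcd-servers=https://127\.0\.0\.1:2379#--etcd-servers=https://${new_ip}:2379#" "$manifest"
}

# Example (the backup directory /root/k8s-backup is an assumption):
#   mkdir -p /root/k8s-backup
#   switch_etcd_endpoint /etc/kubernetes/manifests/kube-apiserver.yaml 10.75.78.212 /root/k8s-backup
```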

Check the apiserver logs:

[root@k8s-master 10:01:26 ~]# docker logs 782b9f7303dc

Flag --insecure-port has been deprecated, This flag has no effect now and will be removed in v1.24.

I0319 02:01:13.628856 1 server.go:632] external host was not specified, using 10.75.78.212

I0319 02:01:13.629206 1 server.go:182] Version: v1.20.0

I0319 02:01:13.801987 1 shared_informer.go:240] Waiting for caches to sync for node_authorizer

I0319 02:01:13.803149 1 plugins.go:158] Loaded 12 mutating admission controller(s) successfully in the following order: NamespaceLifecycle,LimitRanger,ServiceAccount,NodeRestriction,TaintNodesByCondition,Priority,DefaultTolerationSeconds,DefaultStorageClass,StorageObjectInUseProtection,RuntimeClass,DefaultIngressClass,MutatingAdmissionWebhook.

I0319 02:01:13.803164 1 plugins.go:161] Loaded 10 validating admission controller(s) successfully in the following order: LimitRanger,ServiceAccount,Priority,PersistentVolumeClaimResize,RuntimeClass,CertificateApproval,CertificateSigning,CertificateSubjectRestriction,ValidatingAdmissionWebhook,ResourceQuota.

I0319 02:01:13.804247 1 plugins.go:158] Loaded 12 mutating admission controller(s) successfully in the following order: NamespaceLifecycle,LimitRanger,ServiceAccount,NodeRestriction,TaintNodesByCondition,Priority,DefaultTolerationSeconds,DefaultStorageClass,StorageObjectInUseProtection,RuntimeClass,DefaultIngressClass,MutatingAdmissionWebhook.

I0319 02:01:13.804258 1 plugins.go:161] Loaded 10 validating admission controller(s) successfully in the following order: LimitRanger,ServiceAccount,Priority,PersistentVolumeClaimResize,RuntimeClass,CertificateApproval,CertificateSigning,CertificateSubjectRestriction,ValidatingAdmissionWebhook,ResourceQuota.

I0319 02:01:13.806645 1 client.go:360] parsed scheme: "endpoint"

I0319 02:01:13.806678 1 endpoint.go:68] ccResolverWrapper: sending new addresses to cc: [{https://10.75.78.212:2379 <nil> 0 <nil>}]

I0319 02:01:13.834158 1 client.go:360] parsed scheme: "endpoint"

I0319 02:01:13.834180 1 endpoint.go:68] ccResolverWrapper: sending new addresses to cc: [{https://10.75.78.212:2379 <nil> 0 <nil>}]

I0319 02:01:13.851129 1 client.go:360] parsed scheme: "passthrough"

I0319 02:01:13.862553 1 passthrough.go:48] ccResolverWrapper: sending update to cc: {[{https://10.75.78.212:2379 <nil> 0 <nil>}] <nil> <nil>}

I0319 02:01:13.862569 1 clientconn.go:948] ClientConn switching balancer to "pick_first"

I0319 02:01:13.894346 1 client.go:360] parsed scheme: "endpoint"

I0319 02:01:13.894372 1 endpoint.go:68] ccResolverWrapper: sending new addresses to cc: [{https://10.75.78.212:2379 <nil> 0 <nil>}]

I0319 02:01:13.957102 1 instance.go:289] Using reconciler: lease

I0319 02:01:13.957423 1 client.go:360] parsed scheme: "endpoint"

I0319 02:01:13.957442 1 endpoint.go:68] ccResolverWrapper: sending new addresses to cc: [{https://10.75.78.212:2379 <nil> 0 <nil>}]

I0319 02:01:13.974413 1 client.go:360] parsed scheme: "endpoint"

I0319 02:01:13.974445 1 endpoint.go:68] ccResolverWrapper: sending new addresses to cc: [{https://10.75.78.212:2379 <nil> 0 <nil>}]

I0319 02:01:13.986759 1 client.go:360] parsed scheme: "endpoint"

I0319 02:01:13.986780 1 endpoint.go:68] ccResolverWrapper: sending new addresses to cc: [{https://10.75.78.212:2379 <nil> 0 <nil>}]

I0319 02:01:13.998254 1 client.go:360] parsed scheme: "endpoint"

I0319 02:01:13.998275 1 endpoint.go:68] ccResolverWrapper: sending new addresses to cc: [{https://10.75.78.212:2379 <nil> 0 <nil>}]

I0319 02:01:14.009269 1 client.go:360] parsed scheme: "endpoint"

I0319 02:01:14.009290 1 endpoint.go:68] ccResolverWrapper: sending new addresses to cc: [{https://10.75.78.212:2379 <nil> 0 <nil>}]

I0319 02:01:14.022671 1 client.go:360] parsed scheme: "endpoint"

I0319 02:01:14.022693 1 endpoint.go:68] ccResolverWrapper: sending new addresses to cc: [{https://10.75.78.212:2379 <nil> 0 <nil>}]

I0319 02:01:14.033878 1 client.go:360] parsed scheme: "endpoint"

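The new apiserver log shows that it now uses the etcd address that matches the certificate, and the connection looks healthy. Next, check whether kube-apiserver itself can be reached.

kubectl commands work again: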

[root@k8s-master 10:04:54 ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-97769f7c7-njqcv 1/1 Running 6 452d

calico-node-8wmhh 1/1 Running 107 452d

coredns-7f89b7bc75-527qj 1/1 Running 23 452d

coredns-7f89b7bc75-5qkbr 1/1 Running 24 452d

etcd-k8s-master 1/1 Running 18 53d

Check the cluster status:

[root@k8s-master 10:28:01 ~]# kubectl cluster-info

Kubernetes control plane is running at https://10.75.78.212:6443

KubeDNS is running at https://10.75.78.212:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

Metrics-server is running at https://10.75.78.212:6443/api/v1/namespaces/kube-system/services/https:metrics-server:/proxy

Check the node status:

[root@k8s-master 10:28:07 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane,master 452d v1.20.0

Check the component status with kubectl get componentstatuses (or kubectl get cs):

[root@k8s-master 10:29:37 ~]# kubectl get componentstatuses

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

etcd-0 Healthy {"health":"true"}

4.3 Method 3: use an etcd proxy

If necessary, you can set up an etcd proxy that listens on 127.0.0.1 and forwards requests to 10.75.78.212. This requires additional setup and configuration.

Summary: when something breaks, first check the status of the affected service, then read its logs. Most problems are answered in the logs.
