Note: a Kubernetes cluster (master, node01, node02, ...) must already be deployed.

Contents

1. Deploy kube-state-metrics

2. Deploy node-exporter

3. Deploy nfs-pv

4. Deploy alertmanager

4.1 vim alertmanager-configmap.yml

4.2 vim alertmanager-deployment.yml

4.3 vim alertmanager-pvc.yml

4.4 vim alertmanager-service.yml

5. Deploy prometheus-server

6. Deploy grafana

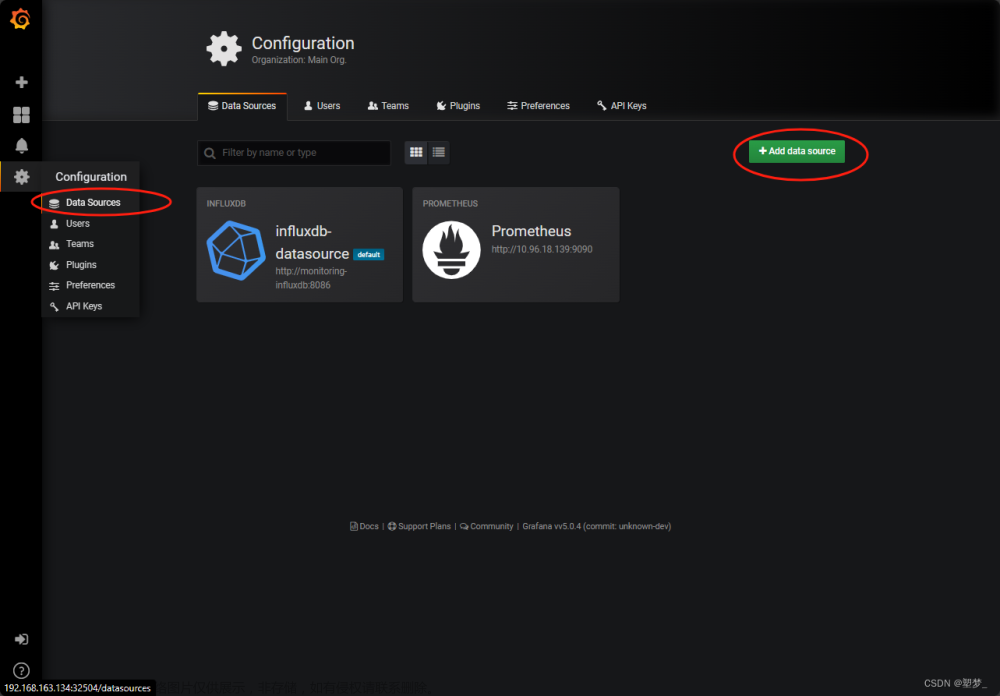

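6.2 Configure Prometheus as the Grafana data source

6.3 Enter the data source name and select Prometheus as the type

6.3.1 Enter the prometheus IP (10.96.18.139) and port (9090) reported by kubectl get svc -n kube-system, then save and go back

6.4 Create a monitoring dashboard

6.4.1 Search for "cluster monitoring"

6.4.2 Copy the ID and download

6.4.3 Enter the ID and import the file

6.4.4 Import complete

7. Test alert delivery by email

7.1 Healthy state

7.2 Stop the kubelet on the k8s-master node

7.3 After a few minutes, an alert reports that k8s-master has stopped working

7.4 Check the inbox (the alert mailbox is configured in 4.1 vim alertmanager-configmap.yml)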

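1. Deploy kube-state-metrics

Purpose: kube-state-metrics exposes the state of the resources in a Kubernetes cluster. It collects information about objects such as nodes, Pods, and Services and exposes it in Prometheus metric format, so that the Prometheus server can scrape and process it.

mkdir kube-state-metrics

cd kube-state-metrics

1.1 vim kube-state-metrics-deployment.yml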

vim kube-state-metrics-deployment.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: kube-state-metrics
  namespace: kube-system
  labels:
    k8s-app: kube-state-metrics
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    version: v2.0.0-beta
spec:
  selector:
    matchLabels:
      k8s-app: kube-state-metrics
      version: v2.0.0-beta
  replicas: 1
  template:
    metadata:
      labels:
        k8s-app: kube-state-metrics
        version: v2.0.0-beta
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: kube-state-metrics
      containers:
      - name: kube-state-metrics
        image: quay.io/coreos/kube-state-metrics:v2.0.0-beta
        ports:
        - name: http-metrics
          containerPort: 8080
        - name: telemetry
          containerPort: 8081
        readinessProbe:
          httpGet:
            path: /healthz
            port: 8080
          initialDelaySeconds: 5
          timeoutSeconds: 5
      - name: addon-resizer
        image: registry.cn-beijing.aliyuncs.com/minminmsn/addon-resizer:1.8.4
        resources:
          limits:
            cpu: 100m
            memory: 30Mi
          requests:
            cpu: 100m
            memory: 30Mi
        env:
        - name: MY_POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: MY_POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: config-volume
          mountPath: /etc/config
        command:
        - /pod_nanny
        - --config-dir=/etc/config
        - --container=kube-state-metrics
        - --cpu=100m
        - --extra-cpu=1m
        - --memory=100Mi
        - --extra-memory=2Mi
        - --threshold=5
        - --deployment=kube-state-metrics
      volumes:
      - name: config-volume
        configMap:
          name: kube-state-metrics-config
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: kube-state-metrics-config
  namespace: kube-system
  labels:
    k8s-app: kube-state-metrics
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
data:
  NannyConfiguration: |-
    apiVersion: nannyconfig/v1alpha1
    kind: NannyConfiguration

1.2 vim kube-state-metrics-rbac.yml

vim kube-state-metrics-rbac.yml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: kube-state-metrics
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: kube-state-metrics
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
rules:
- apiGroups: [""]
  resources:
  - configmaps
  - secrets
  - nodes
  - pods
  - services
  - resourcequotas
  - replicationcontrollers
  - limitranges
  - persistentvolumeclaims
  - persistentvolumes
  - namespaces
  - endpoints
  verbs: ["list", "watch"]
- apiGroups: ["extensions","apps"]
  resources:
  - daemonsets
  - deployments
  - replicasets
  verbs: ["list", "watch"]
- apiGroups: ["apps"]
  resources:
  - statefulsets
  verbs: ["list", "watch"]
- apiGroups: ["batch"]
  resources:
  - cronjobs
  - jobs
  verbs: ["list", "watch"]
- apiGroups: ["autoscaling"]
  resources:
  - horizontalpodautoscalers
  verbs: ["list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: kube-state-metrics-resizer
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
rules:
- apiGroups: [""]
  resources:
  - pods
  verbs: ["get"]
- apiGroups: ["extensions","apps"]
  resources:
  - deployments
  resourceNames: ["kube-state-metrics"]
  verbs: ["get", "update"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kube-state-metrics
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kube-state-metrics
subjects:
- kind: ServiceAccount
  name: kube-state-metrics
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: kube-state-metrics
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kube-state-metrics-resizer
subjects:
- kind: ServiceAccount
  name: kube-state-metrics
  namespace: kube-system

1.3 vim kube-state-metrics-service.yml

vim kube-state-metrics-service.yml

apiVersion: v1
kind: Service
metadata:
  name: kube-state-metrics
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "kube-state-metrics"
  annotations:
    prometheus.io/scrape: 'true'
spec:
  ports:
  - name: http-metrics
    port: 8080
    targetPort: http-metrics
    protocol: TCP
  - name: telemetry
    port: 8081
    targetPort: telemetry
    protocol: TCP
  selector:
    k8s-app: kube-state-metrics
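The Service above carries the annotation prometheus.io/scrape: 'true'. The kubernetes-service-endpoints job in the Prometheus configuration of section 5.1 keeps only endpoints whose Service has that annotation, and it also reads prometheus.io/scheme, prometheus.io/path, and prometheus.io/port. As a minimal sketch, assuming a hypothetical application named my-app in the default namespace that serves metrics on port 8000 under /stats/metrics, another Service could opt in to scraping like this:

```yaml
# Hypothetical Service: the name, namespace, port, and path are illustrative assumptions.
apiVersion: v1
kind: Service
metadata:
  name: my-app
  namespace: default
  annotations:
    prometheus.io/scrape: "true"           # matched by the "keep" relabel rule
    prometheus.io/port: "8000"             # rewrites __address__ to <endpoint-ip>:8000
    prometheus.io/path: "/stats/metrics"   # rewrites __metrics_path__
spec:
  ports:
  - name: metrics
    port: 8000
    targetPort: 8000
  selector:
    app: my-app
```

Without the port and path annotations, Prometheus scrapes each endpoint port of the Service at /metrics, which is what happens for kube-state-metrics here.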

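Apply all YAML manifests in the current directory:

kubectl apply -f .

2. Deploy node-exporter

Purpose: this directory holds the node-exporter DaemonSet and its Service. node-exporter is an agent that exposes node-level system metrics such as CPU, memory, and disk usage through an HTTP server running on each node. The Prometheus server scrapes these metrics for monitoring.

mkdir node-export

cd node-export

2.1 vim node-exporter-ds.yml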

vim node-exporter-ds.yml

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-exporter
  namespace: kube-system
  labels:
    k8s-app: node-exporter
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    version: v1.0.1
spec:
  selector:
    matchLabels:
      k8s-app: node-exporter
      version: v1.0.1
  updateStrategy:
    type: OnDelete
  template:
    metadata:
      labels:
        k8s-app: node-exporter
        version: v1.0.1
    spec:
      priorityClassName: system-node-critical
      containers:
      - name: prometheus-node-exporter
        image: "prom/node-exporter:v1.0.1"
        imagePullPolicy: "IfNotPresent"
        args:
        - --path.procfs=/host/proc
        - --path.sysfs=/host/sys
        ports:
        - name: metrics
          containerPort: 9100
          hostPort: 9100
        volumeMounts:
        - name: proc
          mountPath: /host/proc
          readOnly: true
        - name: sys
          mountPath: /host/sys
          readOnly: true
        resources:
          limits:
            memory: 50Mi
          requests:
            cpu: 100m
            memory: 50Mi
      hostNetwork: true
      hostPID: true
      volumes:
      - name: proc
        hostPath:
          path: /proc
      - name: sys
        hostPath:
          path: /sys
      tolerations:
      - key: node-role.kubernetes.io/master
        operator: Exists
        effect: NoSchedule
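The toleration above matches the node-role.kubernetes.io/master taint. On newer clusters set up with kubeadm (roughly Kubernetes 1.24 and later) the control-plane node is tainted with node-role.kubernetes.io/control-plane instead, so the DaemonSet may not be scheduled there. The following is a hedged sketch of a tolerations list that covers both cases; it belongs under spec.template.spec, and it is worth checking the actual taints with kubectl describe node before relying on it:

```yaml
# Fragment for spec.template.spec of the node-exporter DaemonSet.
tolerations:
- key: node-role.kubernetes.io/master          # taint used by older clusters
  operator: Exists
  effect: NoSchedule
- key: node-role.kubernetes.io/control-plane   # taint used by newer kubeadm clusters
  operator: Exists
  effect: NoSchedule
```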

2.2 vim node-exporter-service.yml

vim node-exporter-service.yml

apiVersion: v1
kind: Service
metadata:
  name: node-exporter
  namespace: kube-system
  annotations:
    prometheus.io/scrape: "true"
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "NodeExporter"
spec:
  clusterIP: None
  ports:
  - name: metrics
    port: 9100
    protocol: TCP
    targetPort: 9100
  selector:
    k8s-app: node-exporter

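kubectl apply -f .

3. Deploy nfs-pv

Purpose: create NFS-backed PersistentVolumes (PVs). NFS lets cluster nodes mount a directory exported by a remote server as if it were a local file system, and the PVs defined here are what the PersistentVolumeClaims in the later sections can bind to.

mkdir nfs-pv

cd nfs-pv

3.1 vim pv-demo.yml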

vim pv-demo.yml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv-v1
spec:
  capacity:
    storage: 5Gi
  volumeMode: Filesystem
  accessModes: ["ReadWriteOnce","ReadWriteMany","ReadOnlyMany"]
  persistentVolumeReclaimPolicy: Retain
  mountOptions:
  - hard
  - nfsvers=4.1
  nfs:
    path: /data/v1
    server: 192.168.163.134
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv-v2
spec:
  capacity:
    storage: 5Gi
  volumeMode: Filesystem
  accessModes: ["ReadWriteOnce","ReadWriteMany","ReadOnlyMany"]
  persistentVolumeReclaimPolicy: Retain
  mountOptions:
  - hard
  - nfsvers=4.1
  nfs:
    path: /data/v2
    server: 192.168.163.134
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv-v3
spec:
  capacity:
    storage: 5Gi
  volumeMode: Filesystem
  accessModes: ["ReadWriteOnce","ReadWriteMany","ReadOnlyMany"]
  persistentVolumeReclaimPolicy: Retain
  mountOptions:
  - hard
  - nfsvers=4.1
  nfs:
    path: /data/v3
    server: 192.168.163.134

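kubectl apply -f .

4. Deploy alertmanager

Purpose: Alertmanager receives the alerts sent by Prometheus, processes them according to predefined rules, and sends the resulting notifications.

mkdir alertmanager

cd alertmanager

4.1 vim alertmanager-configmap.yml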

vim alertmanager-configmap.yml

apiVersion: v1
kind: ConfigMap
metadata:
  name: alertmanager-config
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: EnsureExists
data:
  alertmanager.yml: |
    # Global settings
    global:
      resolve_timeout: 1m                    # how long Alertmanager waits without updates before marking an alert as resolved
      smtp_from: "123456@163.com"            # sender address
      smtp_smarthost: "smtp.163.com:25"      # SMTP server and port (163 mail in this example)
      smtp_auth_username: "123456@163.com"   # SMTP account
      smtp_auth_password: "123456abcdef"     # mailbox security/authorization code
      smtp_require_tls: false                # do not require TLS
    # Routing
    route:
      group_by: ["alertname"]                # labels used to group alerts
      group_wait: 5s                         # wait before sending the first notification for a new group, so more alerts go out in one batch
      group_interval: 5s                     # wait before sending a further batch for the same group
      repeat_interval: 5m                    # wait before re-sending an alert that is still firing
      receiver: "email"                      # route alerts to the "email" receiver
    # Receivers
    receivers:                               # who receives the routed alerts
    - name: "email"                          # must match the receiver named in route
      email_configs:                         # deliver by email
      - to: "<123456@qq.com>"                # recipient address
        send_resolved: true                  # also send a mail when the alert is resolved
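The route above sends every alert to the single email receiver. Alertmanager routes can also be nested so that alerts carrying certain labels are handled differently. The fragment below is a sketch only: the receiver name email-oncall and its address are hypothetical, and it uses the match syntax accepted by the v0.14.0 image deployed in 4.2. It sends alerts labelled severity: error (as set by the rules in section 5.3) to a second mailbox and repeats them more often.

```yaml
# Fragment of alertmanager.yml; "email-oncall" and its address are placeholders.
route:
  group_by: ["alertname"]
  group_wait: 5s
  group_interval: 5s
  repeat_interval: 5m
  receiver: "email"              # default receiver for everything else
  routes:
  - match:
      severity: error            # label set by the alert rules
    receiver: "email-oncall"
    repeat_interval: 1m
receivers:
- name: "email"
  email_configs:
  - to: "<123456@qq.com>"
    send_resolved: true
- name: "email-oncall"
  email_configs:
  - to: "<oncall@example.com>"
    send_resolved: true
```

Alerts that match no child route fall back to the top-level receiver, so the existing behaviour for warning-level alerts is unchanged.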

4.2 vim alertmanager-deployment.yml

vim alertmanager-deployment.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: alertmanager
  namespace: kube-system
  labels:
    k8s-app: alertmanager
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    version: v0.14.0
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: alertmanager
      version: v0.14.0
  template:
    metadata:
      labels:
        k8s-app: alertmanager
        version: v0.14.0
    spec:
      priorityClassName: system-cluster-critical
      containers:
      - name: prometheus-alertmanager
        image: "prom/alertmanager:v0.14.0"
        imagePullPolicy: "IfNotPresent"
        args:
        - --config.file=/etc/config/alertmanager.yml
        - --storage.path=/data
        - --web.external-url=/
        ports:
        - containerPort: 9093
        readinessProbe:
          httpGet:
            path: /#/status
            port: 9093
          initialDelaySeconds: 30
          timeoutSeconds: 30
        volumeMounts:
        - name: config-volume
          mountPath: /etc/config
        - name: storage-volume
          mountPath: "/data"
          subPath: ""
        resources:
          limits:
            cpu: 10m
            memory: 50Mi
          requests:
            cpu: 10m
            memory: 50Mi
      volumes:
      - name: config-volume
        configMap:
          name: alertmanager-config
      - name: storage-volume
        persistentVolumeClaim:
          claimName: alertmanager

4.3 vim alertmanager-pvc.yml

vim alertmanager-pvc.yml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: alertmanager
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: EnsureExists
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: "2Gi"
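The claim above does not set storageClassName. If the cluster has a default StorageClass, such a claim may be handed to the dynamic provisioner instead of binding to one of the NFS PVs from section 3. One way to force static binding, shown here as a sketch and assuming the PVs were created without a class as in pv-demo.yml, is to set an empty class name:

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: alertmanager
  namespace: kube-system
spec:
  storageClassName: ""     # empty string: bind only to PVs that have no class
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: "2Gi"       # a 5Gi NFS PV from section 3 satisfies this request
```

On a cluster without a default StorageClass the original claim behaves the same way, so this change only matters where dynamic provisioning is enabled.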

4.4 vim alertmanager-service.yml

vim alertmanager-service.yml

apiVersion: v1
kind: Service
metadata:
  name: alertmanager
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "Alertmanager"
spec:
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 9093
    nodePort: 30093
  selector:
    k8s-app: alertmanager
  type: "NodePort"

Apply all YAML manifests in the current directory:

kubectl apply -f .

5. Deploy prometheus-server

mkdir promethus-server

cd promethus-server

5.1 vim promethus-configmap.yml

vim promethus-configmap.yml

apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: EnsureExists
data:
  prometheus.yml: |
    rule_files:
    - /etc/config/rules/*.rules
    scrape_configs:
    - job_name: prometheus
      static_configs:
      - targets:
        - localhost:9090
    - job_name: kubernetes-nodes
      scrape_interval: 30s
      static_configs:
      - targets:
        # - 192.168.163.134:9100
        - 192.168.163.135:9100
        - 192.168.163.128:9100
    - job_name: kubernetes-apiservers
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      - action: keep
        regex: default;kubernetes;https
        source_labels:
        - __meta_kubernetes_namespace
        - __meta_kubernetes_service_name
        - __meta_kubernetes_endpoint_port_name
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        insecure_skip_verify: true
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
    - job_name: kubernetes-nodes-kubelet
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        insecure_skip_verify: true
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
    - job_name: kubernetes-nodes-cadvisor
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __metrics_path__
        replacement: /metrics/cadvisor
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        insecure_skip_verify: true
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
    - job_name: kubernetes-service-endpoints
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      - action: keep
        regex: true
        source_labels:
        - __meta_kubernetes_service_annotation_prometheus_io_scrape
      - action: replace
        regex: (https?)
        source_labels:
        - __meta_kubernetes_service_annotation_prometheus_io_scheme
        target_label: __scheme__
      - action: replace
        regex: (.+)
        source_labels:
        - __meta_kubernetes_service_annotation_prometheus_io_path
        target_label: __metrics_path__
      - action: replace
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
        source_labels:
        - __address__
        - __meta_kubernetes_service_annotation_prometheus_io_port
        target_label: __address__
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - action: replace
        source_labels:
        - __meta_kubernetes_namespace
        target_label: kubernetes_namespace
      - action: replace
        source_labels:
        - __meta_kubernetes_service_name
        target_label: kubernetes_name
    - job_name: kubernetes-services
      kubernetes_sd_configs:
      - role: service
      metrics_path: /probe
      params:
        module:
        - http_2xx
      relabel_configs:
      - action: keep
        regex: true
        source_labels:
        - __meta_kubernetes_service_annotation_prometheus_io_probe
      - source_labels:
        - __address__
        target_label: __param_target
      - replacement: blackbox
        target_label: __address__
      - source_labels:
        - __param_target
        target_label: instance
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels:
        - __meta_kubernetes_namespace
        target_label: kubernetes_namespace
      - source_labels:
        - __meta_kubernetes_service_name
        target_label: kubernetes_name
    - job_name: kubernetes-pods
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - action: keep
        regex: true
        source_labels:
        - __meta_kubernetes_pod_annotation_prometheus_io_scrape
      - action: replace
        regex: (.+)
        source_labels:
        - __meta_kubernetes_pod_annotation_prometheus_io_path
        target_label: __metrics_path__
      - action: replace
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
        source_labels:
        - __address__
        - __meta_kubernetes_pod_annotation_prometheus_io_port
        target_label: __address__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - action: replace
        source_labels:
        - __meta_kubernetes_namespace
        target_label: kubernetes_namespace
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_name
        target_label: kubernetes_pod_name
    alerting:
      alertmanagers:
      - kubernetes_sd_configs:
        - role: pod
        tls_config:
          ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        relabel_configs:
        - source_labels: [__meta_kubernetes_namespace]
          regex: kube-system
          action: keep
        - source_labels: [__meta_kubernetes_pod_label_k8s_app]
          regex: alertmanager
          action: keep
        - source_labels: [__meta_kubernetes_pod_container_port_number]
          regex:
          action: drop
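The kubernetes-nodes job above lists the node-exporter targets as static IPs, which have to be edited whenever a node is added or removed. Because node-exporter runs on every node with hostPort 9100, the same targets could be discovered through the node role instead. The job below is a sketch, not part of the original configuration: the job name is an assumption, and it assumes the nodes are discovered at the default kubelet port 10250. It would sit under scrape_configs in place of the static job.

```yaml
- job_name: kubernetes-node-exporter     # hypothetical job name
  scrape_interval: 30s
  kubernetes_sd_configs:
  - role: node                           # one target per node, addressed at the kubelet port
  relabel_configs:
  - source_labels: [__address__]
    regex: (.+):10250
    replacement: $1:9100                 # point the target at node-exporter instead
    target_label: __address__
  - action: labelmap
    regex: __meta_kubernetes_node_label_(.+)
```

The prometheus ClusterRole in 5.2 already grants get, list, and watch on nodes, which is what this discovery role needs.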

5.2 vim promethus-rbac.yml

vim promethus-rbac.yml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: prometheus
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
rules:
- apiGroups:
  - ""
  resources:
  - nodes
  - nodes/metrics
  - services
  - endpoints
  - pods
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - configmaps
  verbs:
  - get
- nonResourceURLs:
  - "/metrics"
  verbs:
  - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
- kind: ServiceAccount
  name: prometheus
  namespace: kube-system

5.3 vim promethus-rules.yml

vim promethus-rules.yml

apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-rules
  namespace: kube-system
data:
  general.rules: |
    groups:
    - name: general.rules
      rules:
      - alert: InstanceDown
        expr: up == 0
        for: 1m
        labels:
          severity: error
        annotations:
          summary: "Instance {{ $labels.instance }} has stopped working"
          description: "{{ $labels.instance }} of job {{ $labels.job }} has been down for more than 1 minute."
  node.rules: |
    groups:
    - name: node.rules
      rules:
      - alert: NodeFilesystemUsage
        expr: 100 - (node_filesystem_free_bytes{fstype=~"ext4|xfs"} / node_filesystem_size_bytes{fstype=~"ext4|xfs"} * 100) > 80
        for: 1m
        labels:
          severity: warning
        annotations:
          summary: "Instance {{ $labels.instance }} : {{ $labels.mountpoint }} partition usage is too high"
          description: "{{ $labels.instance }}: {{ $labels.mountpoint }} partition usage is above 80% (current value: {{ $value }})"
      - alert: NodeMemoryUsage
        expr: 100 - (node_memory_MemFree_bytes+node_memory_Cached_bytes+node_memory_Buffers_bytes) / node_memory_MemTotal_bytes * 100 > 80
        for: 1m
        labels:
          severity: warning
        annotations:
          summary: "Instance {{ $labels.instance }} memory usage is too high"
          description: "{{ $labels.instance }} memory usage is above 80% (current value: {{ $value }})"
      - alert: NodeCPUUsage
        expr: 100 - (avg(irate(node_cpu_seconds_total{mode="idle"}[5m])) by (instance) * 100) > 60
        for: 1m
        labels:
          severity: warning
        annotations:
          summary: "Instance {{ $labels.instance }} CPU usage is too high"
          description: "{{ $labels.instance }} CPU usage is above 60% (current value: {{ $value }})"
  pod.rules: |
    groups:
    - name: pod.rules
      rules:
      - alert: PodRestartNumber
        expr: floor(delta(kube_pod_container_status_restarts_total{namespace="uat"}[3m]) != 0)
        for: 1m
        labels:
          severity: warning
        annotations:
          #summary: "pod {{ $labels.pod }} has restarted"
          description: "Pod: {{ $labels.pod }} has restarted"
      - alert: InstanceDown
        expr: up == 0
        for: 2m
        labels:
          severity: error
        annotations:
          summary: "Metrics collector {{ $labels.instance }} has stopped working"
          value: "{{ $value }}"
      - alert: PodSvcDown
        expr: probe_success == 0
        for: 1m
        labels:
          severity: error
        annotations:
          summary: "Container proxy service {{ $labels.instance }} has stopped working"
          value: "{{ $value }}"
      - alert: MysqlCon
        expr: MysqlCon_metric > 40
        for: 1m
        labels:
          severity: warning
        annotations:
          summary: "MySQL connection count is too high"
          value: "{{ $value }}"
      - alert: PodCpuUsage
        expr: sum by(pod_name, namespace) (rate(container_cpu_usage_seconds_total{image!=""}[1m])) * 100 > 80
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "Container ns: {{ $labels.namespace }} | pod: {{ $labels.pod_name }} CPU usage is above 80%"
          value: "{{ $value }}"
      - alert: PodMemoryUsage
        expr: sum(container_memory_rss{image!=""}) by(pod_name, namespace) / sum(container_spec_memory_limit_bytes{image!=""}) by(pod_name, namespace) * 100 != +inf > 80
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "Container ns: {{ $labels.namespace }} | pod: {{ $labels.pod_name }} memory usage is above 80%"
          value: "{{ $value }}"
      - alert: PodFailed
        expr: sum (kube_pod_status_phase{phase="Failed"}) by (pod,namespace) > 0
        for: 1m
        labels:
          severity: error
        annotations:
          summary: "Container ns: {{ $labels.namespace }} | pod: {{ $labels.pod }} pod status is Failed"
          value: "{{ $value }}"
      - alert: PodPending
        expr: sum (kube_pod_status_phase{phase="Pending"}) by (pod,namespace) > 0
        for: 1m
        labels:
          severity: error
        annotations:
          summary: "Container ns: {{ $labels.namespace }} | pod: {{ $labels.pod }} status is Pending"
          value: "{{ $value }}"
      - alert: PodNetworkReceive
        expr: sum (rate (container_network_receive_bytes_total{image!="",name=~"^k8s_.*"}[5m]) /1000) by (pod_name,namespace) > 30000
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "Container ns: {{ $labels.namespace }} | pod: {{ $labels.pod_name }} inbound network traffic is above 30MB/s"
          value: "{{ $value }}K/s"
      - alert: PodNetworkTransmit
        expr: sum (rate (container_network_transmit_bytes_total{image!="",name=~"^k8s_.*"}[5m]) /1000) by (pod_name,namespace) > 30000
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "Container ns: {{ $labels.namespace }} | pod: {{ $labels.pod_name }} outbound network traffic is above 30MB/s"
          value: "{{ $value }}K/s"
      - alert: PodRestart
        expr: sum (changes (kube_pod_container_status_restarts_total[1m])) by (pod,namespace) > 0
        for: 5s
        labels:
          severity: warning
        annotations:
          summary: "Container ns: {{ $labels.namespace }} | pod: {{ $labels.pod }} pod has restarted"
          value: "{{ $value }}"
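The test in section 7 stops the kubelet on a node and relies on up == 0 to raise the alert. kube-state-metrics (section 1) also exposes each node's Ready condition, which could be alerted on directly. The rule file below is a sketch that could be added as one more key under data in this ConfigMap; the key name and alert name are assumptions, and the key ends in .rules so that the rule_files glob from 5.1 picks it up.

```yaml
  kube-node.rules: |
    groups:
    - name: kube-node.rules
      rules:
      - alert: NodeNotReady               # hypothetical alert name
        # The series for status="true" drops to 0 when the node is no longer Ready.
        expr: kube_node_status_condition{condition="Ready",status="true"} == 0
        for: 2m
        labels:
          severity: error
        annotations:
          summary: "Node {{ $labels.node }} is not Ready"
          value: "{{ $value }}"
```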

5.4 vim promethus-service.yml

vim promethus-service.yml

kind: Service
apiVersion: v1
metadata:
  name: prometheus
  namespace: kube-system
  labels:
    kubernetes.io/name: "Prometheus"
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  ports:
  - name: http
    port: 9090
    protocol: TCP
    targetPort: 9090
    nodePort: 30090
  selector:
    k8s-app: prometheus
  type: NodePort

5.5 vim promethus-statefulset.yml

vim promethus-statefulset.yml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: prometheus
  namespace: kube-system
  labels:
    k8s-app: prometheus
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    version: v2.24.0
spec:
  serviceName: "prometheus"
  replicas: 1
  podManagementPolicy: "Parallel"
  updateStrategy:
    type: "RollingUpdate"
  selector:
    matchLabels:
      k8s-app: prometheus
  template:
    metadata:
      labels:
        k8s-app: prometheus
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: prometheus
      initContainers:
      - name: "init-chown-data"
        image: "busybox:latest"
        imagePullPolicy: "IfNotPresent"
        command: ["chown", "-R", "65534:65534", "/data"]
        volumeMounts:
        - name: prometheus-data
          mountPath: /data
          subPath: ""
      containers:
      - name: prometheus-server
        image: "prom/prometheus:v2.24.0"
        imagePullPolicy: "IfNotPresent"
        args:
        - --config.file=/etc/config/prometheus.yml
        - --storage.tsdb.path=/data
        - --web.console.libraries=/etc/prometheus/console_libraries
        - --web.console.templates=/etc/prometheus/consoles
        - --web.enable-lifecycle
        ports:
        - containerPort: 9090
        readinessProbe:
          httpGet:
            path: /-/ready
            port: 9090
          initialDelaySeconds: 30
          timeoutSeconds: 30
        livenessProbe:
          httpGet:
            path: /-/healthy
            port: 9090
          initialDelaySeconds: 30
          timeoutSeconds: 30
        resources:
          limits:
            cpu: 200m
            memory: 1000Mi
          requests:
            cpu: 200m
            memory: 1000Mi
        volumeMounts:
        - name: config-volume
          mountPath: /etc/config
        - name: prometheus-data
          mountPath: /data
          subPath: ""
        # Added: mount the rules ConfigMap
        - name: prometheus-rules
          mountPath: /etc/config/rules
          subPath: ""
      terminationGracePeriodSeconds: 300
      volumes:
      - name: config-volume
        configMap:
          name: prometheus-config
      # Added: volume for the rules
      - name: prometheus-rules
        # Added: backed by a ConfigMap
        configMap:
          # Added: name of the rules ConfigMap
          name: prometheus-rules
  volumeClaimTemplates:
  - metadata:
      name: prometheus-data
    spec:
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: "5Gi"

kubectl apply -f .

6. Deploy grafana

mkdir grafana

cd grafana

6.1 vim grafana.yml

vim grafana.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: monitoring-grafana
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      task: monitoring
      k8s-app: grafana
  template:
    metadata:
      labels:
        task: monitoring
        k8s-app: grafana
    spec:
      containers:
      - name: grafana
        image: registry.cn-hangzhou.aliyuncs.com/mirror_googlecontainers/heapster-grafana-amd64:v5.0.4
        ports:
        - containerPort: 3000
          protocol: TCP
        volumeMounts:
        - mountPath: /etc/ssl/certs
          name: ca-certificates
          readOnly: true
        - mountPath: /var
          name: grafana-storage
        env:
        - name: GF_SERVER_HTTP_PORT
          value: "3000"
        - name: GF_AUTH_BASIC_ENABLED
          value: "false"
        - name: GF_AUTH_ANONYMOUS_ENABLED
          value: "true"
        - name: GF_AUTH_ANONYMOUS_ORG_ROLE
          value: Admin
        - name: GF_SERVER_ROOT_URL
          value: /
      volumes:
      - name: ca-certificates
        hostPath:
          path: /etc/ssl/certs
      - name: grafana-storage
        emptyDir: {}
---
apiVersion: v1
kind: Service
metadata:
  labels:
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: monitoring-grafana
  name: monitoring-grafana
  namespace: kube-system
spec:
  ports:
  - port: 80
    targetPort: 3000
  selector:
    k8s-app: grafana
  type: "NodePort"

kubectl apply -f .

6.2 Configure Prometheus as the Grafana data source

In a browser, open the IP of any node in the k8s cluster followed by the port exposed by monitoring-grafana (32504 in this example):

[root@k8s-master grafana]# kubectl get svc -n kube-system
NAME                 TYPE       CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
monitoring-grafana   NodePort   10.96.176.39   <none>        80:32504/TCP   27h

Open the data source configuration:

6.3 Enter the data source name and select Prometheus as the type

6.3.1 Enter the prometheus IP (10.96.18.139) and port (9090) reported by kubectl get svc -n kube-system, then save and go back

[root@k8s-master grafana]# kubectl get svc -n kube-system
NAME         TYPE       CLUSTER-IP     EXTERNAL-IP   PORT(S)          AGE
prometheus   NodePort   10.96.18.139   <none>        9090:30090/TCP   6d10h
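The ClusterIP entered above changes if the prometheus Service is deleted and recreated. Inside the cluster the Service is also reachable by its DNS name, http://prometheus.kube-system.svc:9090, which stays stable. Grafana 5.0 and later can additionally load data sources from provisioning files instead of the web form. The following is a sketch of such a file, assuming it is mounted under /etc/grafana/provisioning/datasources/ and that the image used in 6.1 honors that path, which has not been verified here:

```yaml
# Grafana data source provisioning file (sketch; the mount path is an assumption).
apiVersion: 1
datasources:
- name: Prometheus
  type: prometheus
  access: proxy                                  # Grafana's backend makes the requests
  url: http://prometheus.kube-system.svc:9090    # Service DNS name instead of the ClusterIP
  isDefault: true
```

The same DNS-based URL can simply be typed into the form from step 6.3.1 if provisioning is not used.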

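6.4 Create a monitoring dashboard

6.4.1 Search for "cluster monitoring"

6.4.2 Copy the ID and download

6.4.3 Enter the ID and import the file

6.4.4 Import complete

7. Test alert delivery by email

7.1 Healthy state

7.2 Stop the kubelet on the k8s-master node

systemctl stop kubelet

7.3 After stopping it, wait a few minutes; an alert reports that k8s-master has stopped working

7.4 Check the inbox (the alert mailbox is configured in 4.1 vim alertmanager-configmap.yml)

The email shows the alert reporting that the k8s-master node has stopped working.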
