目录

参考文献

了解kubekey 英文和中文

前提条件

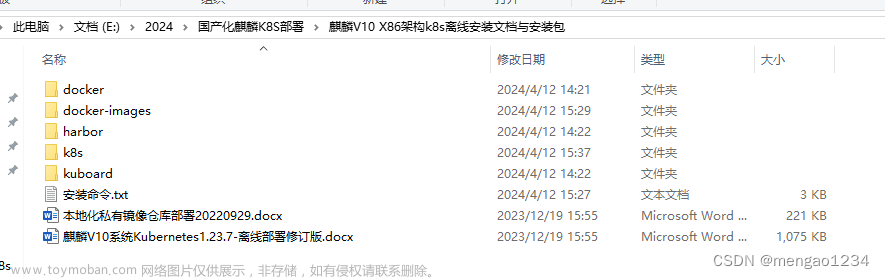

部署准备

下载kubukey

离线包配置和制作

配置离线包

制作离线包

离线安装集群

复制KubeKey 和制品 artifact到离线机器

创建初始换、安装配置文件

安装镜像仓库harbor

初始化harbor 项目

修改配置文件

安装k8s集群和kubesphere

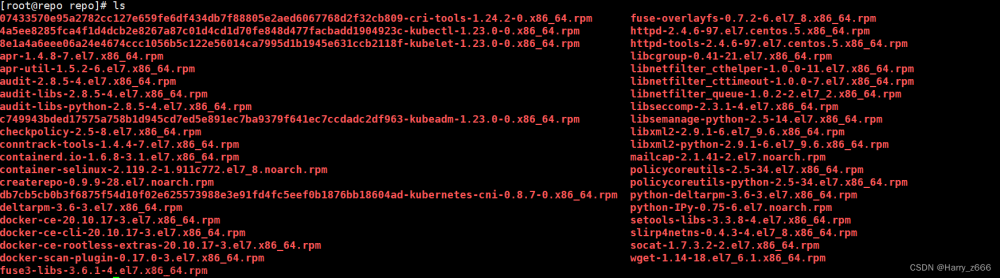

手动安装依赖包

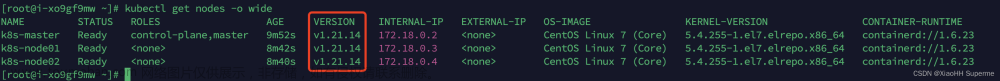

检查环境是否满足要求

检查防火墙端口是否满足要求

安装完成并登录

安装过程中遇到的问题

制作制品,不能下载github上的操作系统iso

初始化安装harbor报错must specify a CommonName

初始化harbor后,发现harbor的一些模块容器没有正常启动

麒麟系统安装,安装包没有 Fkylin-v10-amd64.iso

离线安装却在线下载calicoctl

References

Official offline installation documentation

About KubeKey

./kk --help

Deploy a Kubernetes or KubeSphere cluster efficiently, flexibly and easily. There are three scenarios to use KubeKey.

1. Install Kubernetes only
2. Install Kubernetes and KubeSphere together in one command
3. Install Kubernetes first, then deploy KubeSphere on it using https://github.com/kubesphere/ks-installer

Usage:
  kk [command]

Available Commands:
  add         Add nodes to kubernetes cluster
  alpha       Commands for features in alpha
  artifact    Manage a KubeKey offline installation package
  certs       cluster certs
  completion  Generate shell completion scripts
  create      Create a cluster or a cluster configuration file
  delete      Delete node or cluster
  help        Help about any command
  init        Initializes the installation environment
  plugin      Provides utilities for interacting with plugins
  upgrade     Upgrade your cluster smoothly to a newer version with this command
  version     print the client version information

Flags:
  -h, --help   help for kk


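Prerequisites

To start a multi-node installation, prepare at least three hosts, following the example below.

| Host IP | Hostname | Role |
|---|---|---|
| 192.168.0.2 | node1 | Internet-connected host used to build the offline package |
| 192.168.0.3 | node2 | Control-plane node in the offline environment |
| 192.168.0.4 | node3 | Image registry node in the offline environment |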

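Disable the firewall, SELinux, swap, and dnsmasq (on all nodes)

Disable the firewall

systemctl stop firewalld
systemctl disable firewalld

Disable SELinux

sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config # permanent
setenforce 0 # temporary

Disable swap (Kubernetes expects swap to be off, for performance reasons)

sed -ri 's/.*swap.*/#&/' /etc/fstab # permanent
swapoff -a # temporary

Disable dnsmasq (otherwise Docker containers may fail to resolve domain names)

systemctl stop dnsmasq
systemctl disable dnsmasq

If the firewall on a machine may not be disabled, see the list of ports that must be opened later in this article.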
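The settings above can be verified before continuing. The following is a minimal sketch, not part of the original procedure: the helper names `swap_active` and `selinux_config_mode` are made up for illustration, and the script assumes a Linux host that exposes /proc/swaps and /etc/selinux/config.

```shell
#!/usr/bin/env bash
# Sketch: check that swap and SELinux are in the state this guide expects.
# swap_active and selinux_config_mode are hypothetical helper names.

# swap_active [file]: succeeds if the swaps table lists an active swap area.
# /proc/swaps has one header line; every further non-empty line is a swap area.
swap_active() {
  [ "$(awk 'NR > 1 && NF > 0' "${1:-/proc/swaps}" | wc -l)" -gt 0 ]
}

# selinux_config_mode [file]: prints the value of SELINUX= in the config file.
selinux_config_mode() {
  sed -n 's/^SELINUX=//p' "${1:-/etc/selinux/config}"
}

if swap_active 2>/dev/null; then
  echo "swap: still enabled"
else
  echo "swap: off"
fi

if [ -r /etc/selinux/config ]; then
  echo "selinux (config file): $(selinux_config_mode)"
fi
```

Both helpers take an optional file argument, which makes it possible to try them on a copy of the files from another node.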

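Deployment preparation

Download KubeKey

Run the following commands to download and extract KubeKey.

Option 1 (GitHub is reachable):

Download KubeKey from the GitHub Release Page, or run the following command directly.

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -

Option 2:

First run the following command to make sure KubeKey is downloaded from the correct region.

export KKZONE=cn

Then run the following command to download KubeKey:

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -

Configuring and building the offline package

Configure the offline package

On the Internet-connected host, run the following command and paste in the manifest content from the example.

vim manifest-sample.yaml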

---
apiVersion: kubekey.kubesphere.io/v1alpha2
kind: Manifest
metadata:
  name: sample
spec:
  arches:
  - amd64
  operatingSystems:
  - arch: amd64
    type: linux
    id: centos
    version: "7"
    repository:
      iso:
        localPath:
        url: https://github.com/kubesphere/kubekey/releases/download/v3.0.10/centos7-rpms-amd64.iso
  - arch: amd64
    type: linux
    id: ubuntu
    version: "20.04"
    repository:
      iso:
        localPath:
        url: https://github.com/kubesphere/kubekey/releases/download/v3.0.10/ubuntu-20.04-debs-amd64.iso
  kubernetesDistributions:
  - type: kubernetes
    version: v1.23.15
  components:
    helm:
      version: v3.9.0
    cni:
      version: v1.2.0
    etcd:
      version: v3.4.13
    calicoctl:
      version: v3.23.2
    ## For now, if your cluster container runtime is containerd, KubeKey will add a docker 20.10.8 container runtime in the below list.
    ## The reason is KubeKey creates a cluster with containerd by installing a docker first and making kubelet connect the socket file of containerd which docker contained.
    containerRuntimes:
    - type: docker
      version: 20.10.8
    - type: containerd
      version: 1.6.4
    crictl:
      version: v1.24.0
    docker-registry:
      version: "2"
    harbor:
      version: v2.5.3
    docker-compose:
      version: v2.2.2
  images:
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-apiserver:v1.23.15
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controller-manager:v1.23.15
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-proxy:v1.23.15
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-scheduler:v1.23.15
  - registry.cn-beijing.aliyuncs.com/kubesphereio/pause:3.6
  - registry.cn-beijing.aliyuncs.com/kubesphereio/coredns:1.8.6
  - registry.cn-beijing.aliyuncs.com/kubesphereio/cni:v3.23.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controllers:v3.23.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/node:v3.23.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/pod2daemon-flexvol:v3.23.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/typha:v3.23.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/flannel:v0.12.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/provisioner-localpv:3.3.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/linux-utils:3.3.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/haproxy:2.3
  - registry.cn-beijing.aliyuncs.com/kubesphereio/nfs-subdir-external-provisioner:v4.0.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/k8s-dns-node-cache:1.15.12
  - registry.cn-beijing.aliyuncs.com/kubesphereio/ks-installer:v3.4.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/ks-apiserver:v3.4.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/ks-console:v3.4.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/ks-controller-manager:v3.4.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kubectl:v1.22.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kubectl:v1.21.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kubectl:v1.20.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kubefed:v0.8.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/tower:v0.2.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/minio:RELEASE.2019-08-07T01-59-21Z
  - registry.cn-beijing.aliyuncs.com/kubesphereio/mc:RELEASE.2019-08-07T23-14-43Z
  - registry.cn-beijing.aliyuncs.com/kubesphereio/snapshot-controller:v4.0.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/nginx-ingress-controller:v1.1.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/defaultbackend-amd64:1.4
  - registry.cn-beijing.aliyuncs.com/kubesphereio/metrics-server:v0.4.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/redis:5.0.14-alpine
  - registry.cn-beijing.aliyuncs.com/kubesphereio/haproxy:2.0.25-alpine
  - registry.cn-beijing.aliyuncs.com/kubesphereio/alpine:3.14
  - registry.cn-beijing.aliyuncs.com/kubesphereio/openldap:1.3.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/netshoot:v1.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/cloudcore:v1.13.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/iptables-manager:v1.13.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/edgeservice:v0.3.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/gatekeeper:v3.5.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/openpitrix-jobs:v3.3.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/devops-apiserver:ks-v3.4.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/devops-controller:ks-v3.4.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/devops-tools:ks-v3.4.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/ks-jenkins:v3.4.0-2.319.3-1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/inbound-agent:4.10-2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-base:v3.2.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-nodejs:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-maven:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-maven:v3.2.1-jdk11
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-python:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-go:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-go:v3.2.2-1.16
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-go:v3.2.2-1.17
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-go:v3.2.2-1.18
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-base:v3.2.2-podman
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-nodejs:v3.2.0-podman
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-maven:v3.2.0-podman
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-maven:v3.2.1-jdk11-podman
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-python:v3.2.0-podman
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-go:v3.2.0-podman
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-go:v3.2.2-1.16-podman
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-go:v3.2.2-1.17-podman
  - registry.cn-beijing.aliyuncs.com/kubesphereio/builder-go:v3.2.2-1.18-podman
  - registry.cn-beijing.aliyuncs.com/kubesphereio/s2ioperator:v3.2.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/s2irun:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/s2i-binary:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/tomcat85-java11-centos7:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/tomcat85-java11-runtime:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/tomcat85-java8-centos7:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/tomcat85-java8-runtime:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/java-11-centos7:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/java-8-centos7:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/java-8-runtime:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/java-11-runtime:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/nodejs-8-centos7:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/nodejs-6-centos7:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/nodejs-4-centos7:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/python-36-centos7:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/python-35-centos7:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/python-34-centos7:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/python-27-centos7:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/argocd:v2.3.3
  - registry.cn-beijing.aliyuncs.com/kubesphereio/argocd-applicationset:v0.4.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/dex:v2.30.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/redis:6.2.6-alpine
  - registry.cn-beijing.aliyuncs.com/kubesphereio/configmap-reload:v0.7.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/prometheus:v2.39.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/prometheus-config-reloader:v0.55.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/prometheus-operator:v0.55.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-rbac-proxy:v0.11.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-state-metrics:v2.6.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/node-exporter:v1.3.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/alertmanager:v0.23.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/thanos:v0.31.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/grafana:8.3.3
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-rbac-proxy:v0.11.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/notification-manager-operator:v2.3.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/notification-manager:v2.3.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/notification-tenant-sidecar:v3.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/elasticsearch-curator:v5.7.6
  - registry.cn-beijing.aliyuncs.com/kubesphereio/elasticsearch-oss:6.8.22
  - registry.cn-beijing.aliyuncs.com/kubesphereio/opensearch:2.6.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/opensearch-dashboards:2.6.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/opensearch-curator:v0.0.5
  - registry.cn-beijing.aliyuncs.com/kubesphereio/fluentbit-operator:v0.14.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/docker:19.03
  - registry.cn-beijing.aliyuncs.com/kubesphereio/fluent-bit:v1.9.4
  - registry.cn-beijing.aliyuncs.com/kubesphereio/log-sidecar-injector:v1.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/filebeat:6.7.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-events-operator:v0.6.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-events-exporter:v0.6.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-events-ruler:v0.6.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-auditing-operator:v0.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-auditing-webhook:v0.2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/pilot:1.14.6
  - registry.cn-beijing.aliyuncs.com/kubesphereio/proxyv2:1.14.6
  - registry.cn-beijing.aliyuncs.com/kubesphereio/jaeger-operator:1.29
  - registry.cn-beijing.aliyuncs.com/kubesphereio/jaeger-agent:1.29
  - registry.cn-beijing.aliyuncs.com/kubesphereio/jaeger-collector:1.29
  - registry.cn-beijing.aliyuncs.com/kubesphereio/jaeger-query:1.29
  - registry.cn-beijing.aliyuncs.com/kubesphereio/jaeger-es-index-cleaner:1.29
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kiali-operator:v1.50.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kiali:v1.50
  - registry.cn-beijing.aliyuncs.com/kubesphereio/busybox:1.31.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/nginx:1.14-alpine
  - registry.cn-beijing.aliyuncs.com/kubesphereio/wget:1.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/hello:plain-text
  - registry.cn-beijing.aliyuncs.com/kubesphereio/wordpress:4.8-apache
  - registry.cn-beijing.aliyuncs.com/kubesphereio/hpa-example:latest
  - registry.cn-beijing.aliyuncs.com/kubesphereio/fluentd:v1.4.2-2.0
  - registry.cn-beijing.aliyuncs.com/kubesphereio/perl:latest
  - registry.cn-beijing.aliyuncs.com/kubesphereio/examples-bookinfo-productpage-v1:1.16.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/examples-bookinfo-reviews-v1:1.16.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/examples-bookinfo-reviews-v2:1.16.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/examples-bookinfo-details-v1:1.16.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/examples-bookinfo-ratings-v1:1.16.3
  - registry.cn-beijing.aliyuncs.com/kubesphereio/scope:1.13.0

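Notes

- If the exported artifact needs to include operating system dependency files (such as conntrack and chrony), set the download URL of the corresponding ISO dependency file in .repository.iso.url under the operatingSystems element. Alternatively, download the ISO in advance, put its local path in localPath, and delete the url entry.
- Enable the harbor and docker-compose entries; they are needed later, when KubeKey sets up its own Harbor registry and pushes images to it.
- By default, the image list in a generated manifest is pulled from docker.io.
- Edit manifest-sample.yaml to suit your environment; it is used afterwards to export the artifact you want.
- The ISO files can be downloaded from the kubesphere/kubekey releases on GitHub (for example, Release v3.0.7).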

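Personal note:

At this step, the operating system ISOs referenced in the manifest are hosted on GitHub, and in my case they could not be downloaded.

I downloaded them manually from GitHub to the local disk and then changed the following part of the configuration:

  operatingSystems:
  - arch: amd64
    type: linux
    id: centos
    version: "7"
    repository:
      iso:
        localPath: <local path of the downloaded ISO>
        url:
  - arch: amd64
    type: linux
    id: ubuntu
    version: "20.04"
    repository:
      iso:
        localPath: <local path of the downloaded ISO>
        url:

Download page: https://github.com/kubesphere/kubekey/releases/tag/v3.0.10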
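The manual download can also be scripted on any machine that can reach GitHub. This is only a sketch: `iso_url` and `fetch_iso` are hypothetical helper names, and the URL layout is assumed to match the two ISO URLs used in the manifest of this article.

```shell
#!/usr/bin/env bash
# Sketch: build the release URL of an OS dependency ISO and optionally fetch it.
# iso_url and fetch_iso are hypothetical helpers, not part of KubeKey.

# iso_url <release-tag> <iso-file-name>: prints the download URL.
iso_url() {
  printf 'https://github.com/kubesphere/kubekey/releases/download/%s/%s\n' "$1" "$2"
}

# fetch_iso <release-tag> <iso-file-name> <target-dir>:
# downloads the ISO and prints the absolute path to put into localPath.
fetch_iso() {
  tag=$1
  name=$2
  dir=$3
  mkdir -p "$dir"
  curl -fL --retry 3 -o "$dir/$name" "$(iso_url "$tag" "$name")" || return 1
  printf '%s/%s\n' "$(cd "$dir" && pwd)" "$name"
}

# Only print the URLs here; nothing is downloaded until fetch_iso is called.
iso_url v3.0.10 centos7-rpms-amd64.iso
iso_url v3.0.10 ubuntu-20.04-debs-amd64.iso
```

For example, `fetch_iso v3.0.10 centos7-rpms-amd64.iso ./iso` prints the path to enter as localPath once the download succeeds.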

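Build the offline package

Export the artifact.

Option 1 (GitHub is reachable):

Run the following command:

./kk artifact export -m manifest-sample.yaml -o kubesphere.tar.gz

Option 2:

Run the following commands in order:

export KKZONE=cn
./kk artifact export -m manifest-sample.yaml -o kubesphere.tar.gz

Notes

An artifact is a tgz package, exported according to the specified manifest file, that contains the image tar packages and the related binaries. An artifact can be passed to the KubeKey commands that initialize the image registry, create a cluster, add nodes, and upgrade a cluster. KubeKey unpacks the artifact automatically and uses the unpacked files directly while running the command.

- Make sure the network connection is working during the export.
- KubeKey parses the image names in the image list. If the registry in an image name requires credentials, configure them in the .registry.auths field of the manifest file.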

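Installing the cluster offline

Copy KubeKey and the artifact to the offline machine

Copy the downloaded KubeKey binary and the artifact to the installation node in the offline environment, using a USB drive or similar media.

Create the initialization and installation configuration file

Run the following command to create the configuration file for the offline cluster:

./kk create config --with-kubesphere v3.4.1 --with-kubernetes v1.23.15 -f config-sample.yaml

Run the following command to edit the configuration file:

vim config-sample.yaml

Notes

- Change the node information to match your actual offline environment.
- You must specify the node on which the registry is deployed (KubeKey uses it to deploy the self-hosted Harbor registry).
- In the registry section, type must be set to harbor; otherwise a Docker registry is installed by default.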
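The two registry requirements in the notes above can be checked before running KubeKey. The sketch below is a rough text check, not a YAML parser. It assumes that the registry node is listed under a `registry:` key in roleGroups (as in the official sample configuration) and that the registry section contains a `type: harbor` line; `check_registry_config` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: rough text check of config-sample.yaml for the two registry settings.
# Assumption: a registry role looks like "registry:" followed by "- <host>".

# check_registry_config <config-file>
# Prints what is missing to stderr and returns non-zero if anything is missing.
check_registry_config() {
  file=$1
  rc=0
  # Look for a "registry:" line whose next line is a list item (a host name).
  if ! awk '/^ *registry: *$/ { if ((getline line) > 0 && line ~ /^ *- /) found = 1 }
            END { exit found ? 0 : 1 }' "$file"; then
    echo "missing: a registry role that lists at least one host" >&2
    rc=1
  fi
  if ! grep -Eq '^ *type: *harbor *$' "$file"; then
    echo "missing: registry type is not set to harbor" >&2
    rc=1
  fi
  return "$rc"
}

# Usage: check_registry_config config-sample.yaml && echo "registry settings look complete"
```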

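Install the Harbor image registry

Run the following command to install the image registry:

./kk init registry -f config-sample.yaml -a kubesphere.tar.gz

Notes

The parameters in the command are:

- config-sample.yaml is the configuration file of the cluster in the offline environment.
- kubesphere.tar.gz is the tar package of images exported from the source cluster.

Personal note

Running the registry initialization to install Harbor failed with the following error: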

11:16:46 UTC success: [rs-node-178-02]

11:16:46 UTC success: [rs-node-177-01]

11:16:46 UTC success: [rs-master-174-01]

11:16:46 UTC success: [rs-node-179-03]

11:16:46 UTC success: [rs-master-175-02]

11:16:46 UTC success: [rs-master-176-03]

11:16:46 UTC success: [devops-180]

11:16:46 UTC [ConfigureOSModule] configure the ntp server for each node

11:16:46 UTC skipped: [rs-node-179-03]

11:16:46 UTC skipped: [rs-master-174-01]

11:16:46 UTC skipped: [rs-master-175-02]

11:16:46 UTC skipped: [rs-master-176-03]

11:16:46 UTC skipped: [devops-180]

11:16:46 UTC skipped: [rs-node-177-01]

11:16:46 UTC skipped: [rs-node-178-02]

11:16:46 UTC [InitRegistryModule] Fetch registry certs

11:16:46 UTC success: [devops-180]

11:16:46 UTC [InitRegistryModule] Generate registry Certs

[certs] Using existing ca certificate authority

11:16:46 UTC message: [LocalHost]

unable to sign certificate: must specify a CommonName

11:16:46 UTC failed: [LocalHost]

error: Pipeline[InitRegistryPipeline] execute failed: Module[InitRegistryModule] exec failed:

failed: [LocalHost] [GenerateRegistryCerts] exec failed after 1 retries: unable to sign certificate: must specify a CommonName

Solution:

https://ask.kubesphere.io/forum/d/22879-kubesphere34-unable-to-sign-certificate-must-specify-a-commonname

Modify the registry section of the configuration file as follows:

...
  registry:
    type: harbor
    auths:
      "dockerhub.kubekey.local":
        username: admin
        password: Harbor12345
    privateRegistry: "dockerhub.kubekey.local"
    namespaceOverride: ""
    registryMirrors: []
    insecureRegistries: []
  addons: []

Then run the command again.

After the installation, check on the Harbor server whether Harbor has started properly. If some modules failed to start, go to the /opt/harbor directory and run:

chmod 777 -R ./common

Then restart Harbor:

docker-compose down -v
docker-compose up -d

Once Harbor is up, it can be opened in a browser.
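To see which Harbor containers failed to start, the status column of docker ps can be filtered. The following is a small sketch; `not_running` is a hypothetical helper, and the container names in the sample data are only illustrative.

```shell
#!/usr/bin/env bash
# Sketch: list containers that are not up or that report an unhealthy state.
# not_running is a hypothetical helper name.

# not_running: reads "name<TAB>status" lines on stdin and prints the names whose
# status does not start with "Up " or that contains "unhealthy".
not_running() {
  awk -F '\t' '$2 !~ /^Up / || $2 ~ /unhealthy/ { print $1 }'
}

# Sample data in the same shape as the docker ps output (illustrative names only).
printf 'harbor-core\tUp 2 minutes (healthy)\nharbor-db\tRestarting (1) 3 seconds ago\n' | not_running
```

On the Harbor node the same filter can be fed with `docker ps -a --format '{{.Names}}\t{{.Status}}' | not_running`; an empty result means every container is up.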

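Initialize the Harbor projects

Notes

Harbor projects are subject to role-based access control (RBAC): only users with the appropriate role can perform certain operations. If no project has been created, images cannot be pushed to Harbor. Harbor has two types of projects:

- Public: any user can pull images from the project.
- Private: only users who are members of the project can pull images.

The Harbor administrator account is admin and the password is Harbor12345. The Harbor installation files are in /opt/harbor; go to that directory to operate and maintain Harbor.

Option 1:

Create the Harbor projects with a script.

a. Run the following command to download the script that initializes the Harbor registry:

curl -O https://raw.githubusercontent.com/kubesphere/ks-installer/master/scripts/create_project_harbor.sh

b. Run the following command to edit the script:

vim create_project_harbor.sh

Change it to: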

#!/usr/bin/env bash

# Copyright 2018 The KubeSphere Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

url="https://dockerhub.kubekey.local"  # set url to https://dockerhub.kubekey.local
user="admin"
passwd="Harbor12345"

harbor_projects=(library
    kubesphereio
    kubesphere
    argoproj
    calico
    coredns
    openebs
    csiplugin
    minio
    mirrorgooglecontainers
    osixia
    prom
    thanosio
    jimmidyson
    grafana
    elastic
    istio
    jaegertracing
    jenkins
    weaveworks
    openpitrix
    joosthofman
    nginxdemos
    fluent
    kubeedge
    openpolicyagent
)

for project in "${harbor_projects[@]}"; do
    echo "creating $project"
    curl -u "${user}:${passwd}" -X POST -H "Content-Type: application/json" "${url}/api/v2.0/projects" -d "{ \"project_name\": \"${project}\", \"public\": true}" -k  # -k appended to the curl command
done

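Notes

- Set url to https://dockerhub.kubekey.local.
- The project names in the registry must match the project names in the image list.
- Append -k to the curl command at the end of the script.

c. Run the following commands to create the Harbor projects:

chmod +x create_project_harbor.sh
./create_project_harbor.sh

Option 2:

Log in to the Harbor registry and create the projects there. Make the projects public so that all users can pull the images. For how to create a project, see the Harbor documentation on creating projects.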
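Because the Harbor project names have to match the project part of the image names, it can help to list the projects that a manifest actually uses. The sketch below assumes image entries of the form `- <registry>/<project>/<name>:<tag>`; `image_projects` is a hypothetical helper, and the sample lines written to the temporary file are illustrative only.

```shell
#!/usr/bin/env bash
# Sketch: list the distinct project names used by the images in a manifest.
# image_projects is a hypothetical helper name.

# image_projects <manifest-file>
# Splits each line on "/" and prints the second part of list items that look like
# "- <registry>/<project>/<name>:<tag>", without duplicates.
image_projects() {
  awk -F/ 'NF >= 3 && $1 ~ /^ *- +[^ ]+$/ && $NF ~ /:/ { print $2 }' "$1" | sort -u
}

# Demonstration on a small sample file (the calico entry is a made-up example).
sample=$(mktemp)
cat > "$sample" <<'EOF'
  images:
  - registry.cn-beijing.aliyuncs.com/kubesphereio/pause:3.6
  - registry.cn-beijing.aliyuncs.com/kubesphereio/coredns:1.8.6
  - docker.io/calico/cni:v3.26.1
EOF
image_projects "$sample"
rm -f "$sample"
```

Run against manifest-sample.yaml, the output shows which projects need to exist in Harbor before the images are pushed.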

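Modify the configuration file

Run the following command again to edit the cluster configuration file:

vim config-sample.yaml

...
  registry:
    type: harbor
    auths:
      "dockerhub.kubekey.local":
        username: admin
        password: Harbor12345
    privateRegistry: "dockerhub.kubekey.local"
    namespaceOverride: "kubesphereio"
    registryMirrors: []
    insecureRegistries: []
  addons: []

Notes

- Add the auths section with dockerhub.kubekey.local and the account credentials.
- Set privateRegistry to dockerhub.kubekey.local.
- Set namespaceOverride to kubesphereio.

Install the Kubernetes cluster and KubeSphere

Run the following command to install the KubeSphere cluster:

./kk create cluster -f config-sample.yaml -a kubesphere.tar.gz --with-packages

The parameters are:

- config-sample.yaml: the configuration file of the cluster in the offline environment.
- kubesphere.tar.gz: the tar package of images exported from the source cluster.
- --with-packages: required if the operating system dependencies need to be installed.

Run the following command to check the cluster status:

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l 'app in (ks-install, ks-installer)' -o jsonpath='{.items[0].metadata.name}') -f

Running the installation command shows the following prompt: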

[root@k8s-master kubekey]# ./kk create cluster -f config-sample.yaml -a kubesphere.tar.gz --with-packages

 _   __      _          _   __
| | / /     | |        | | / /
| |/ / _   _| |__   ___| |/ /  ___ _   _
|    \| | | | '_ \ / _ \    \ / _ \ | | |
| |\  \ |_| | |_) |  __/ |\  \  __/ |_| |
\_| \_/\__,_|_.__/ \___\_| \_/\___|\__, |
                                    __/ |
                                   |___/

11:07:36 CST [GreetingsModule] Greetings

11:07:37 CST message: [k8s-master]

Greetings, KubeKey!

11:07:37 CST message: [k8s-node]

Greetings, KubeKey!

11:07:37 CST success: [k8s-master]

11:07:37 CST success: [k8s-node]

11:07:37 CST [NodePreCheckModule] A pre-check on nodes

11:07:44 CST success: [k8s-master]

11:07:44 CST success: [k8s-node]

11:07:44 CST [ConfirmModule] Display confirmation form

+------------+------+------+---------+----------+-------+-------+---------+-----------+--------+--------+------------+------------+-------------+------------------+--------------+

| name | sudo | curl | openssl | ebtables | socat | ipset | ipvsadm | conntrack | chrony | docker | containerd | nfs client | ceph client | glusterfs client | time |

+------------+------+------+---------+----------+-------+-------+---------+-----------+--------+--------+------------+------------+-------------+------------------+--------------+

| k8s-node | y | y | y | y | | y | | | y | 24.0.6 | v1.7.3 | y | | | CST 11:07:44 |

| k8s-master | y | y | y | y | | y | | | y | | y | y | | | CST 11:07:43 |

+------------+------+------+---------+----------+-------+-------+---------+-----------+--------+--------+------------+------------+-------------+------------------+--------------+

This is a simple check of your environment.

Before installation, ensure that your machines meet all requirements specified at

https://github.com/kubesphere/kubekey#requirements-and-recommendations

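Continue this installation? [yes/no]: no

Manually install dependency packages

The missing packages then have to be installed manually, offline:

socat ipvsadm conntrack ceph client glusterfs client

For how to install dependency packages offline, see: offline installation with yum and yumdownloader.

Also make sure the requirements listed at https://github.com/kubesphere/kubekey#requirements-and-recommendations are met.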
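Before downloading anything, it is useful to know which tools are actually missing on a node. The sketch below checks the PATH for a list of commands; `missing_tools` is a hypothetical helper. The yumdownloader lines in the comments assume a CentOS 7 machine with Internet access and yum-utils installed, and that the conntrack command comes from the conntrack-tools package.

```shell
#!/usr/bin/env bash
# Sketch: report which required commands are not installed on this node.
# missing_tools is a hypothetical helper name.

# missing_tools <command>...: prints every command that is not found in PATH.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

missing_tools socat ipvsadm conntrack

# On an Internet-connected machine with the same OS release (assumption: CentOS 7):
#   yumdownloader --resolve --destdir=./rpms socat ipvsadm conntrack-tools
# On the offline node, after copying ./rpms over:
#   yum localinstall -y ./rpms/*.rpm
```

The Ceph and GlusterFS clients are only needed when that storage is used, so they are left out of the check.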

Check that the environment meets the requirements

- Minimum resource requirements (for a minimal installation of KubeSphere only):
  - 2 vCPUs
  - 4 GB RAM
  - 20 GB storage

/var/lib/docker is mainly used to store container data and grows gradually during use and operation. In a production environment, it is recommended to mount a separate drive at /var/lib/docker.

- OS requirements:
  - SSH access to all nodes.
  - Time synchronization on all nodes.
  - sudo, curl, and openssl available on all nodes.
  - docker can be installed by yourself or by KubeKey.
  - Red Hat includes SELinux in its Linux release. It is recommended to disable SELinux or switch it to Permissive mode.

- It is recommended that the OS is clean (without any other software installed); otherwise there may be conflicts.
- A container image mirror (accelerator) is recommended if you have trouble downloading images from dockerhub.io. Configure registry-mirrors for the Docker daemon.
- By default, KubeKey installs OpenEBS to provision LocalPV for development and testing environments, which is convenient for new users. For production, use NFS, Ceph, GlusterFS, or a commercial product as persistent storage, and install the relevant client on all nodes.
- If you encounter Permission denied when copying, check SELinux and turn it off first.

- Dependency requirements:

KubeKey can install Kubernetes and KubeSphere together. For Kubernetes 1.18 and later, some dependencies need to be installed beforehand. Refer to the list below to check and install the relevant dependencies on your nodes in advance.

| Dependency | Kubernetes version ≥ 1.18 |
|---|---|
| socat | Required |
| conntrack | Required |
| ebtables | Optional but recommended |
| ipset | Optional but recommended |
| ipvsadm | Optional but recommended |

- Networking and DNS requirements:
  - Make sure the DNS address in /etc/resolv.conf is available. Otherwise, it may cause DNS issues in the cluster.
  - If your network configuration uses a firewall or security group, you must ensure that infrastructure components can communicate with each other through specific ports. It is recommended to turn off the firewall or follow the port configuration in NetworkAccess.

Check that the firewall ports meet the requirements

The open ports must satisfy https://github.com/kubesphere/kubekey/blob/master/docs/network-access.md

If your network configuration uses a firewall, you must ensure infrastructure components can communicate with each other through specific ports that act as communication endpoints for certain processes or services.

| services | protocol | action | start port | end port | comment |
|---|---|---|---|---|---|
| ssh | TCP | allow | 22 | | |
| etcd | TCP | allow | 2379 | 2380 | |
| apiserver | TCP | allow | 6443 | | |
| calico | TCP | allow | 9099 | 9100 | |
| bgp | TCP | allow | 179 | | |
| nodeport | TCP | allow | 30000 | 32767 | |
| master | TCP | allow | 10250 | 10258 | |
| dns | TCP | allow | 53 | | |
| dns | UDP | allow | 53 | | |
| local-registry | TCP | allow | 5000 | | offline environment |
| local-apt | TCP | allow | 5080 | | offline environment |
| rpcbind | TCP | allow | 111 | | use NFS |
| ipip | IPENCAP / IPIP | allow | | | calico needs to allow the ipip protocol |
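When firewalld has to stay enabled, the TCP and UDP rows of the table can be turned into firewall-cmd commands. The sketch below only prints the commands so they can be reviewed before being run as root. `open_port_cmds` is a hypothetical helper, and the input format (start port, end port or `-`, protocol) is an assumption of this example. The ipip protocol row and the optional offline and NFS ports are not covered.

```shell
#!/usr/bin/env bash
# Sketch: generate firewall-cmd commands for the port table (dry run, prints only).
# open_port_cmds is a hypothetical helper name.

# open_port_cmds: reads "<start> <end|-> <protocol>" lines on stdin.
open_port_cmds() {
  while read -r start end proto; do
    [ -n "$start" ] || continue
    if [ "$end" = "-" ]; then
      range=$start
    else
      range="$start-$end"
    fi
    printf 'firewall-cmd --permanent --add-port=%s/%s\n' "$range" "$proto"
  done
  printf 'firewall-cmd --reload\n'
}

open_port_cmds <<'EOF'
22 - tcp
2379 2380 tcp
6443 - tcp
9099 9100 tcp
179 - tcp
30000 32767 tcp
10250 10258 tcp
53 - tcp
53 - udp
EOF
```

Printing instead of executing keeps the sketch safe to run anywhere; pipe the output to `sh` on a node only after checking it.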

After the installation completes, you will see the following output:

**************************************************
#####################################################
###              Welcome to KubeSphere!           ###
#####################################################

Console: http://192.168.0.3:30880
Account: admin
Password: P@88w0rd

NOTES:
  1. After you log into the console, please check the
     monitoring status of service components in
     the "Cluster Management". If any service is not
     ready, please wait patiently until all components
     are up and running.
  2. Please change the default password after login.

#####################################################
https://kubesphere.io             2022-02-28 23:30:06
#####################################################

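Finish the installation and log in

Open the KubeSphere web console at http://{IP}:30880 and log in with the default account and password admin/P@88w0rd.

Problems encountered during installation

Building the artifact: the OS ISO cannot be downloaded from GitHub

At this step, the operating system ISOs referenced in the manifest are hosted on GitHub, and in my case they could not be downloaded.

I downloaded them manually from GitHub to the local disk and then changed the following part of the configuration:

  operatingSystems:
  - arch: amd64
    type: linux
    id: centos
    version: "7"
    repository:
      iso:
        localPath: <local path of the downloaded ISO>
        url:
  - arch: amd64
    type: linux
    id: ubuntu
    version: "20.04"
    repository:
      iso:
        localPath: <local path of the downloaded ISO>
        url:

Download page: https://github.com/kubesphere/kubekey/releases/tag/v3.0.10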

Harbor initialization fails with must specify a CommonName

11:16:46 UTC success: [rs-node-178-02]

11:16:46 UTC success: [rs-node-177-01]

11:16:46 UTC success: [rs-master-174-01]

11:16:46 UTC success: [rs-node-179-03]

11:16:46 UTC success: [rs-master-175-02]

11:16:46 UTC success: [rs-master-176-03]

11:16:46 UTC success: [devops-180]

11:16:46 UTC [ConfigureOSModule] configure the ntp server for each node

11:16:46 UTC skipped: [rs-node-179-03]

11:16:46 UTC skipped: [rs-master-174-01]

11:16:46 UTC skipped: [rs-master-175-02]

11:16:46 UTC skipped: [rs-master-176-03]

11:16:46 UTC skipped: [devops-180]

11:16:46 UTC skipped: [rs-node-177-01]

11:16:46 UTC skipped: [rs-node-178-02]

11:16:46 UTC [InitRegistryModule] Fetch registry certs

11:16:46 UTC success: [devops-180]

11:16:46 UTC [InitRegistryModule] Generate registry Certs

[certs] Using existing ca certificate authority

11:16:46 UTC message: [LocalHost]

unable to sign certificate: must specify a CommonName

11:16:46 UTC failed: [LocalHost]

error: Pipeline[InitRegistryPipeline] execute failed: Module[InitRegistryModule] exec failed:

failed: [LocalHost] [GenerateRegistryCerts] exec failed after 1 retries: unable to sign certificate: must specify a CommonName

Solution:

https://ask.kubesphere.io/forum/d/22879-kubesphere34-unable-to-sign-certificate-must-specify-a-commonname

Modify the registry section of the configuration file as follows:

...
  registry:
    type: harbor
    auths:
      "dockerhub.kubekey.local":
        username: admin
        password: Harbor12345
    privateRegistry: "dockerhub.kubekey.local"
    namespaceOverride: ""
    registryMirrors: []
    insecureRegistries: []
  addons: []

Then run the command again.

After initializing Harbor, some Harbor module containers fail to start

After the installation, check on the Harbor server whether Harbor has started properly. If some module containers failed to start, go to the /opt/harbor directory and run:

chmod 777 -R ./common

Then restart Harbor:

docker-compose down -v
docker-compose up -d

Once Harbor is up, it can be opened in a browser.

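Installing on Kylin: the release has no Fkylin-v10-amd64.iso

See the KubeSphere developer community thread on how to resolve problems installing KubeSphere on Kylin.

Offline installation still tries to download calicoctl online

See the KubeSphere developer community thread "Offline installation of KubeSphere v3.4.1 fails with Failed to download calicoctl binary".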

Several images are also pulled at version v3.26.1. In the manifest, change

  - registry.cn-beijing.aliyuncs.com/kubesphereio/cni:v3.23.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controllers:v3.23.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/node:v3.23.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/pod2daemon-flexvol:v3.23.2
  - registry.cn-beijing.aliyuncs.com/kubesphereio/typha:v3.23.2

to

  - registry.cn-beijing.aliyuncs.com/kubesphereio/cni:v3.26.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controllers:v3.26.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/node:v3.26.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/pod2daemon-flexvol:v3.26.1
  - registry.cn-beijing.aliyuncs.com/kubesphereio/typha:v3.26.1

and rebuild the artifact.

Alternatively, transfer the images by hand from a server with Internet access:

Use docker pull to download these images.

Use docker tag to rename the images.

Use docker save to write the images to local files, and copy the files to the offline server.

Use docker load to load the files on the offline server.

Use docker push to upload the images to the Harbor registry.
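The manual transfer can be written out as a command list. The sketch below only prints the docker commands for review. `transfer_cmds` is a hypothetical helper, the target `dockerhub.kubekey.local/kubesphereio` is assumed from the privateRegistry and namespaceOverride values used earlier, and the tar file naming is made up for this example.

```shell
#!/usr/bin/env bash
# Sketch: print the docker commands that move images into the offline Harbor registry.
# transfer_cmds is a hypothetical helper; nothing is pulled or pushed by this script.

SRC_REPO="registry.cn-beijing.aliyuncs.com/kubesphereio"
DST_REPO="dockerhub.kubekey.local/kubesphereio"  # assumed: privateRegistry/namespaceOverride

# transfer_cmds <name:tag>...: prints the command sequence for each image.
transfer_cmds() {
  for img in "$@"; do
    # File name for the saved image, e.g. cni:v3.26.1 becomes cni_v3.26.1.tar
    file="$(printf '%s' "$img" | tr ':/' '__').tar"
    echo "# on the Internet-connected host"
    echo "docker pull $SRC_REPO/$img"
    echo "docker tag $SRC_REPO/$img $DST_REPO/$img"
    echo "docker save -o $file $DST_REPO/$img"
    echo "# on the offline host, after copying $file"
    echo "docker load -i $file"
    echo "docker push $DST_REPO/$img"
  done
}

transfer_cmds cni:v3.26.1 kube-controllers:v3.26.1 node:v3.26.1 pod2daemon-flexvol:v3.26.1 typha:v3.26.1
```

The push step presumes that `docker login dockerhub.kubekey.local` has succeeded on the offline host and that the kubesphereio project exists in Harbor.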
