Preface:

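Kinect is a motion-sensing peripheral for the Xbox 360 that Microsoft announced in 2009. It is a 3D motion-sensing camera that also provides real-time motion capture, image recognition, microphone input, speech recognition and social-interaction features. Compared with the Kinect V1, the Kinect V2 has a larger infrared sensor and, relative to other depth cameras, a wider field of view, and its depth maps are of relatively high quality. The Kinect V2 SDK is also very capable. It offers skeleton tracking for up to six people at once, basic gesture recognition and face tracking, supports Cinder and openFrameworks, and ships with a built-in Unity 3D plugin. The table below compares the Kinect V1 and the Kinect V2.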

| Item | V1 | V2 |
| --- | --- | --- |
| Color resolution | 640*480 | 1920*1080 |
| Depth resolution | 320*240 | 512*424 |
| Infrared resolution | -- | 512*424 |
| Depth range | 0.4m~4m | 0.4m~4.5m |
| Horizontal field of view | 57° | 70° |
| Vertical field of view | 43° | 60° |
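The resolution and field-of-view figures are linked. Under an ideal pinhole model, the focal length in pixels is f = (W/2) / tan(FOV/2). This is an assumption because it ignores lens distortion. The short sketch below applies the formula to the V2 depth camera (512 x 424 pixels, 70° horizontal by 60° vertical). `pinhole_focal_px` is a hypothetical helper name.

```python
import math


def pinhole_focal_px(size_px, fov_deg):
    """Approximate focal length in pixels of an ideal pinhole camera along one axis."""
    return (size_px / 2) / math.tan(math.radians(fov_deg) / 2)


# Kinect V2 depth camera: 512 px wide with a 70° FOV, 424 px tall with a 60° FOV
fx = pinhole_focal_px(512, 70)
fy = pinhole_focal_px(424, 60)
print(f"fx ~ {fx:.1f} px, fy ~ {fy:.1f} px")  # fx ~ 365.6 px, fy ~ 367.2 px
```

The two axes give nearly the same focal length. This is a quick consistency check that 70° goes with the 512-pixel width and 60° goes with the 424-pixel height.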

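Moreover, the V1 camera is mostly accessed and programmed in C++, whereas the V2 also has open-source Python interfaces for reading the camera, such as Kinect/PyKinect2. With these interfaces, OpenCV, Open3D or deep-learning algorithms can be applied directly. There are currently three main approaches to developing for the Kinect V2 in Python: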

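A. libfreenect2 with OpenNI2: this approach is fairly easy to configure on ROS (see reference blog) but complicated on Windows (see reference blog). Building UsbDk or libusbK, libfreenect2 and related files with Visual Studio depends on many tools. Different problems appear on different computers, and fixing them is tedious.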

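B. Joint MATLAB (for reading) and Python programming: the kin2 toolbox (see open-source project) calls the C++-wrapped Kinect SDK, and images are passed through a real-time communication interface between MATLAB and Python. The drawbacks are high memory usage, large transfer latency and complicated debugging.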

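C. Using the PyKinect2 library: read the camera through the open-source PyKinect2 library. Its data interfaces are limited, mainly RGB and depth images, though infrared frames can also be read, as the code below does. The library was also developed for Python 2, so installing and using it under Python 3 runs into a series of problems. This article lists the corresponding solutions.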

工作环境:

系统:windows10家庭版

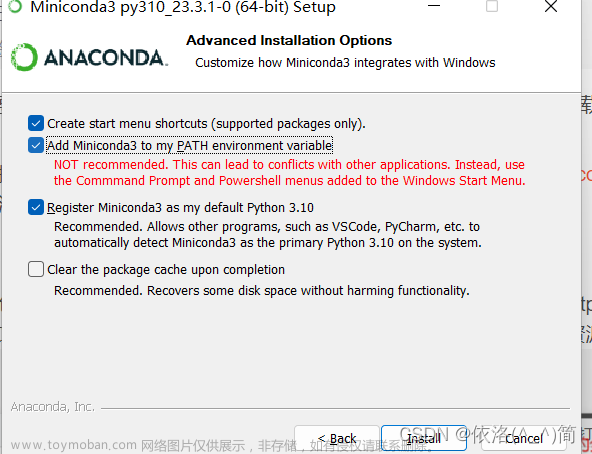

Anaconda版本:4.14.0

Python版本:3.8.12

IDE:Pycharm
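Printing the same details for your own environment makes it easier to compare with the setup above. The bitness line is an extra. It is included on the assumption that a 32-bit versus 64-bit interpreter mismatch is worth ruling out when PyKinect2 fails to import. `environment_summary` is a hypothetical helper name.

```python
import platform
import struct
import sys


def environment_summary():
    """Collect the environment details listed above for the running interpreter."""
    return {
        "os": platform.platform(),
        "python": platform.python_version(),
        # Pointer size in bits: 64 on a 64-bit interpreter, 32 on a 32-bit one
        "bits": struct.calcsize("P") * 8,
        "executable": sys.executable,
    }


if __name__ == "__main__":
    for key, value in environment_summary().items():
        print(f"{key}: {value}")
```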

Installation steps:

1. Download the Kinect for Windows SDK 2.0 and install it. Then open Kinect Studio to check that the installation succeeded (see reference blog).
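The installation can also be checked from Python. The minimal sketch below assumes that the SDK 2.0 installer sets the `KINECTSDK20_DIR` environment variable, which C++ projects commonly use to locate the SDK headers. If your installation does not set it, rely on Kinect Studio instead. `find_kinect_sdk` is a hypothetical helper name.

```python
import os


def find_kinect_sdk(environ=None):
    """Return the Kinect SDK 2.0 directory, or None if it cannot be found.

    Assumption: the SDK installer defines the KINECTSDK20_DIR environment variable.
    """
    environ = os.environ if environ is None else environ
    sdk_dir = environ.get("KINECTSDK20_DIR")
    if sdk_dir and os.path.isdir(sdk_dir):
        return sdk_dir
    return None


if __name__ == "__main__":
    # A terminal opened before the install may not see the new variable yet
    print(find_kinect_sdk() or "Kinect SDK 2.0 not found (reopen the terminal or reinstall)")
```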

2. Install the PyKinect2 library from the Anaconda terminal:

pip install pykinect2 -i https://pypi.tuna.tsinghua.edu.cn/simple

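3. Go to the PyKinect2 GitHub repository and download the latest version of the library. Copy its two module files (typically PyKinectV2.py and PyKinectRuntime.py) over the files of the same name in (Anaconda path)\envs\(env name)\Lib\site-packages\pykinect2.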

This step fixes the import error raised by the pip-installed version of the library.
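Finding the site-packages\pykinect2 folder by hand can be tedious. The helper below prints its location; run it with the same interpreter as the target environment. It uses `importlib.util.find_spec`, which locates a top-level package without executing it, so it works even while `import pykinect2` still fails. `package_dir` is a hypothetical helper name.

```python
import importlib.util
import os


def package_dir(name):
    """Locate an installed top-level package's folder without importing it."""
    spec = importlib.util.find_spec(name)
    if spec is None or not spec.submodule_search_locations:
        return None
    return os.path.abspath(list(spec.submodule_search_locations)[0])


if __name__ == "__main__":
    target = package_dir("pykinect2")
    print(target or "pykinect2 is not installed in this environment")
    if target:
        # List the module files that the GitHub copies are meant to replace
        print(sorted(f for f in os.listdir(target) if f.endswith(".py")))
```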

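4. Next you will encounter another error. It occurs because the comtypes version that PyKinect2 depends on is incompatible with the latest comtypes release.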
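Before reinstalling, you can check which comtypes version the environment currently has. `importlib.metadata` (standard library since Python 3.8) reads the installed version without importing comtypes, which is useful here because importing it is what fails. `installed_version` is a hypothetical helper name. The 1.1.4 value is the pin used in the next command.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(dist_name):
    """Return the installed version string of a distribution, or None if absent."""
    try:
        return version(dist_name)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    v = installed_version("comtypes")
    print(f"comtypes {v}" if v else "comtypes is not installed")
    if v and v != "1.1.4":
        print("This differs from the 1.1.4 pin used in this guide")
```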

Reinstall comtypes at a compatible version:

pip install comtypes==1.1.4 -i https://pypi.tuna.tsinghua.edu.cn/simple

5. Next you will encounter an error caused by this comtypes version being written for Python 2, which is incompatible with the Python 3 environment.

The Python 2 files that comtypes uses must be converted to Python 3 before they can run. First locate the 2to3.py script in the Anaconda installation, under (Anaconda path)\envs\(env name)\Tools\scripts:

Open a command prompt (Win+R, then type cmd) and change to that directory:

Find the path of the comtypes file named in the error message and convert it to Python 3 with 2to3.py, for example:

python 2to3.py -w D:\Anaconda\envs\paddle\lib\site-packages\comtypes\__init__.py

Resolve each similar error the same way, one at a time. This step involves nearly 10 files, so be patient and repeat it. Once all of them are converted, PyKinect2 can be imported normally.
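The per-file commands can be scripted. The sketch below builds the same `python 2to3.py -w <path>` call shown above and runs it with `subprocess`. `convert_with_2to3` is a hypothetical helper name. 2to3 accepts directories as well as files, so passing the whole comtypes folder should convert every module in one run. That this fixes all of the errors at once is an assumption, not something verified here. Back up the folder first, because `-w` overwrites files in place. Also run the script with the target environment's interpreter, since `sys.executable` is reused.

```python
import subprocess
import sys
from pathlib import Path


def convert_with_2to3(scripts_dir, target, run=True):
    """Rewrite target (a .py file or a folder) from Python 2 to Python 3 in place.

    scripts_dir is the folder containing 2to3.py (the env's Tools/scripts).
    Returns the command list; pass run=False to only build it.
    """
    cmd = [sys.executable, str(Path(scripts_dir) / "2to3.py"), "-w", str(target)]
    if run:
        subprocess.run(cmd, check=True)  # raises CalledProcessError on failure
    return cmd


if __name__ == "__main__":
    # Example paths based on the command above; adjust them to your installation
    scripts = r"D:\Anaconda\envs\paddle\Tools\scripts"
    comtypes_dir = r"D:\Anaconda\envs\paddle\lib\site-packages\comtypes"
    # Preview only; remove run=False to actually convert the files
    print(convert_with_2to3(scripts, comtypes_dir, run=False))
```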

6. Write a Python program that reads the camera through PyKinect2. The code below is adapted from the sample code in (open-source blog):

```python
# coding=utf-8
import ctypes
import math
import time

import cv2 as cv
import numpy as np
from pykinect2 import PyKinectRuntime, PyKinectV2
# Imported explicitly: "from ... import *" may not export names that start with "_"
from pykinect2.PyKinectV2 import _DepthSpacePoint


class Kinect(object):

    def __init__(self):
        # Open the color, depth and infrared streams together
        self._kinect = PyKinectRuntime.PyKinectRuntime(
            PyKinectV2.FrameSourceTypes_Color
            | PyKinectV2.FrameSourceTypes_Depth
            | PyKinectV2.FrameSourceTypes_Infrared)
        self.w_color, self.h_color = 1920, 1080
        self.w_depth, self.h_depth = 512, 424
        # Raw (un-mirrored) frames used by the coordinate mapper
        self.depth_ori = None
        self.infrared_frame = None
        self.color_frame = None
        # Output buffer for MapColorFrameToDepthSpace: one DepthSpacePoint per color pixel
        self.csp_type = _DepthSpacePoint * (self.w_color * self.h_color)
        self.csp = ctypes.cast(self.csp_type(), ctypes.POINTER(_DepthSpacePoint))
        # Horizontally mirrored images returned to the caller
        self.color = None
        self.color_draw = None
        self.depth = None
        self.depth_draw = None
        self.infrared = None
        self.first_time = True

    def get_the_last_color(self):
        """Latest raw BGR color frame (keeps the previous one if no new frame arrived)."""
        if self._kinect.has_new_color_frame():
            frame = self._kinect.get_last_color_frame()
            bgra = frame.reshape([self._kinect.color_frame_desc.Height,
                                  self._kinect.color_frame_desc.Width, 4])
            self.color_frame = bgra[:, :, 0:3]
        return self.color_frame

    def get_the_last_depth(self):
        """Latest raw depth frame in millimetres (uint16)."""
        if self._kinect.has_new_depth_frame():
            frame = self._kinect.get_last_depth_frame()
            self.depth_ori = frame.reshape([self._kinect.depth_frame_desc.Height,
                                            self._kinect.depth_frame_desc.Width])
        return self.depth_ori

    def get_the_last_infrared(self):
        """Latest raw infrared frame (uint16)."""
        if self._kinect.has_new_infrared_frame():
            frame = self._kinect.get_last_infrared_frame()
            self.infrared_frame = frame.reshape([self._kinect.infrared_frame_desc.Height,
                                                 self._kinect.infrared_frame_desc.Width])
        return self.infrared_frame

    def map_depth_point_to_color_point(self, depth_point, max_tries=50000):
        """Map [row, col] in the mirrored depth image to [row, col] in the mirrored color image."""
        row = int(depth_point[0])
        x = self.w_depth - 1 - int(depth_point[1])  # undo the horizontal mirroring
        for _ in range(max_tries):
            self.get_the_last_depth()
            self.get_the_last_color()
            if self.depth_ori is None:
                continue
            color_point = self._kinect._mapper.MapDepthPointToColorSpace(
                _DepthSpacePoint(x, row), int(self.depth_ori[row, x]))
            # Pixels without a valid depth map to -inf; retry with a newer frame
            if math.isfinite(color_point.x) and math.isfinite(color_point.y):
                return [int(color_point.y), self.w_color - 1 - int(color_point.x)]
        print('Depth-to-color mapping: invalid point')
        return [0, 0]

    def _map_color_frame_to_depth_space(self):
        """Fill self.csp with the depth-space coordinate of every color pixel."""
        self._kinect._mapper.MapColorFrameToDepthSpace(
            ctypes.c_uint(self.w_depth * self.h_depth), self._kinect._depth_frame_data,
            ctypes.c_uint(self.w_color * self.h_color), self.csp)

    def map_color_points_to_depth_points(self, color_points):
        """Map several mirrored color points, computing the full mapping only once."""
        self.get_the_last_depth()
        self.get_the_last_color()
        if self.depth_ori is None:
            return [[0, 0] for _ in color_points]
        self._map_color_frame_to_depth_space()
        return [self.map_color_point_to_depth_point(p, True) for p in color_points]

    # Map a color pixel to the depth image
    def map_color_point_to_depth_point(self, color_point, if_call_flg=False, max_tries=50000):
        """Map [row, col] in the mirrored color image to [row, col] in the mirrored depth image.

        if_call_flg=True means the caller has already filled self.csp.
        """
        # Mirrored column c corresponds to raw column (w_color - 1 - c)
        idx = int(color_point[0]) * self.w_color + (self.w_color - 1 - int(color_point[1]))
        for _ in range(max_tries):
            self.get_the_last_depth()
            self.get_the_last_color()
            if not if_call_flg:
                if self.depth_ori is None:
                    continue
                self._map_color_frame_to_depth_space()
            p = self.csp[idx]
            if math.isfinite(p.x) and math.isfinite(p.y):
                return [int(p.y), self.w_depth - 1 - int(p.x)]
            if if_call_flg:
                break  # self.csp is not refreshed in this mode, so retrying cannot help
        print('Color-to-depth mapping: invalid point')
        return [0, 0]

    def _update_color(self):
        if not self._kinect.has_new_color_frame():
            return
        # The frame arrives as a flat BGRA array; the 4th channel carries no data
        bgra = self._kinect.get_last_color_frame().reshape(
            [self._kinect.color_frame_desc.Height, self._kinect.color_frame_desc.Width, 4])
        # Drop alpha and mirror horizontally; a contiguous copy lets OpenCV draw on it
        self.color = np.ascontiguousarray(bgra[:, :, 0:3][:, ::-1, :])
        # Separate copy for annotations, so drawing does not alter self.color
        self.color_draw = self.color.copy()

    def _update_depth(self):
        if not self._kinect.has_new_depth_frame():
            return
        h, w = self._kinect.depth_frame_desc.Height, self._kinect.depth_frame_desc.Width
        self.depth_ori = self._kinect.get_last_depth_frame().reshape([h, w])  # uint16, mm
        self.depth = self.depth_ori[:, ::-1]  # mirrored to match self.color
        # 8-bit preview: keep 500-1500 mm and set everything else to 0
        draw = self.depth_ori.reshape([h, w, 1]).astype(np.float64)
        draw[(draw >= 1500) | (draw <= 500)] = 0
        self.depth_draw = np.ascontiguousarray(np.uint8(draw / 1501 * 255)[:, ::-1, :])

    def _update_infrared(self, threshold):
        if not self._kinect.has_new_infrared_frame():
            return
        ir = self._kinect.get_last_infrared_frame().reshape(
            [self._kinect.infrared_frame_desc.Height, self._kinect.infrared_frame_desc.Width])
        # Zero saturated pixels, then scale [0, threshold] to 8 bits for display
        ir[ir > threshold] = 0
        self.infrared = np.ascontiguousarray(np.uint8(ir / threshold * 255)[:, ::-1])

    # Get the latest color, depth and infrared images
    def get_the_data_of_color_depth_infrared_image(self, Infrared_threshold=16000):
        """Return (color, color_draw, depth, depth_draw, infrared), all mirrored horizontally."""
        if self.first_time:
            # Wait until every stream has delivered at least one frame, for up to 5 s
            time_s = time.time()
            while self.color is None or self.depth is None or self.infrared is None:
                if time.time() - time_s > 5:
                    print('No image data received; check that the Kinect V2 is connected')
                    break
                self._update_color()
                self._update_depth()
                self._update_infrared(Infrared_threshold)
                time.sleep(0.001)
            else:
                self.first_time = False
        else:
            self._update_color()
            self._update_depth()
            self._update_infrared(Infrared_threshold)
        return self.color, self.color_draw, self.depth, self.depth_draw, self.infrared

    # Show the video stream(s)
    def Kinect_imshow(self, type_im='rgb'):
        """Show the latest frames; type_im is 'all', 'rgb', 'depth' or 'infrared'."""
        color, _, _, depth_draw, infrared = self.get_the_data_of_color_depth_infrared_image()
        if type_im in ('all', 'rgb') and color is not None:
            cv.imshow('rgb', color)
        if type_im in ('all', 'depth') and depth_draw is not None:
            cv.imshow('depth', depth_draw)
        if type_im in ('all', 'infrared') and infrared is not None:
            cv.imshow('infrared', infrared)
        return cv.waitKey(1)


if __name__ == '__main__':
    a = Kinect()
    try:
        while True:
            color, color_draw, depth, depth_draw, infrared = \
                a.get_the_data_of_color_depth_infrared_image()
            if color is not None:
                cv.imshow('a', color)
            # Press q in the image window to quit
            if cv.waitKey(1) & 0xFF == ord('q'):
                break
    finally:
        a._kinect.close()
        cv.destroyAllWindows()
```
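Two pieces of pixel arithmetic in the class are easy to get wrong. The sketch below shows them as standalone functions that can be tested without a camera:

1. The flat index into the 1920 x 1080 color-to-depth buffer for a point given in the mirrored color image.
2. The 8-bit depth preview that keeps only 500 to 1500 mm.

The function names are hypothetical; the constants follow the class above.

```python
import numpy as np

W_COLOR = 1920


def mirrored_color_index(row, col, width=W_COLOR):
    """Flat buffer index for (row, col) given in the horizontally mirrored image.

    Column col of the mirrored image is column width - 1 - col of the raw frame.
    """
    return row * width + (width - 1 - col)


def depth_to_uint8(depth_mm, near=500, far=1500):
    """Scale a uint16 depth image (mm) to uint8, zeroing pixels outside (near, far)."""
    d = depth_mm.astype(np.float64)
    d[(depth_mm <= near) | (depth_mm >= far)] = 0
    return np.uint8(d / (far + 1) * 255)


if __name__ == "__main__":
    print(mirrored_color_index(0, 0))  # 1919: first row, rightmost raw pixel
    depth = np.array([[400, 500, 1000, 1499, 1500]], dtype=np.uint16)
    print(depth_to_uint8(depth))  # [[  0   0 169 254   0]]
```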

That concludes Kinect Series 1: (Windows setup) reading color and depth images from a Kinect V2 camera with Python3 + PyKinect2.