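I. Background

The original Consul cluster consisted of three server nodes, each running on a purchased ECS instance.

When a Java service registered with Consul, it simply picked one of those nodes.

As the figure above shows, consul-01 had 28 registered services, consul-02 had 94, and consul-03 had 29.

On one occasion the Consul cluster failed: a single Consul node went down and service discovery stopped working altogether.

So the availability of Consul needed to be improved further.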

二、consul集群的高可用方案

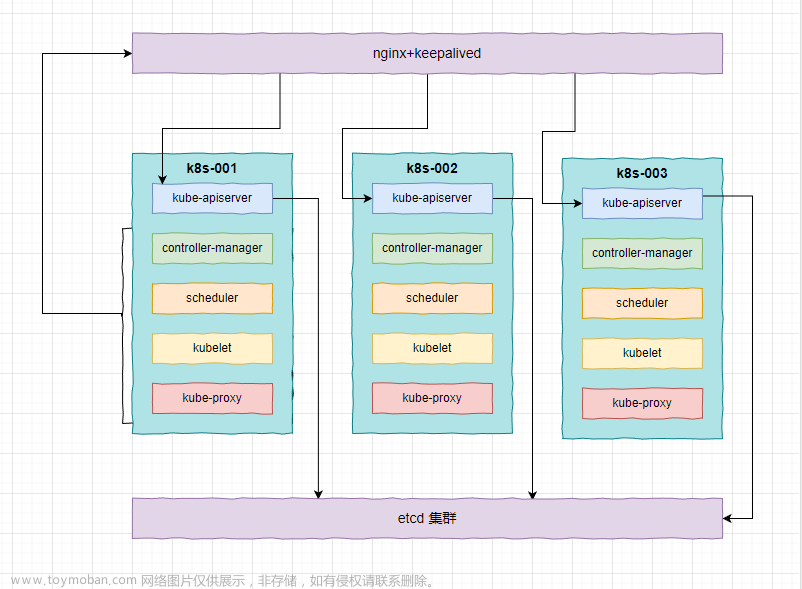

consul server有三个节点,保持不变,仍旧部署在ecs。

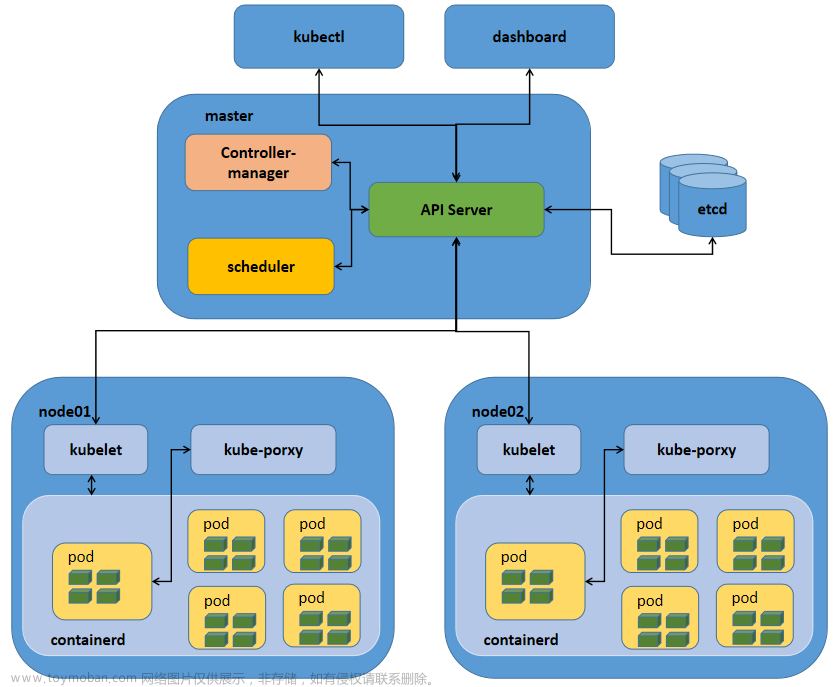

在k8s各个节点都部署一个consul agent,同一个节点上的pod服务依赖于当前节点的consul agent。

我这里的k8s容器共有11个节点,所以会再部署11个consul agent。

III. Deployment summary

1. ConfigMap that mounts the configuration file consul.json

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: consul-client
      namespace: consul
    data:
      consul.json: |-
        {
          "datacenter": "aly-consul",
          "addresses": {
            "http": "0.0.0.0",
            "dns": "0.0.0.0"
          },
          "bind_addr": "{{ GetInterfaceIP \"eth0\" }}",
          "client_addr": "0.0.0.0",
          "data_dir": "/consul/data",
          "rejoin_after_leave": true,
          "retry_join": ["10.16.190.29", "10.16.190.28", "10.16.190.30"],
          "verify_incoming": false,
          "verify_outgoing": false,
          "disable_remote_exec": false,
          "encrypt": "Qy3w6MjoXVOMvOMSkuj43+buObaHLS8p4JONwvH0RUg=",
          "encrypt_verify_incoming": true,
          "encrypt_verify_outgoing": true,
          "acl": {
            "enabled": true,
            "default_policy": "deny",
            "down_policy": "extend-cache",
            "tokens": { "agent": "xxxx-xx-xx-xx-xxxx" }
          }
        }

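A few points deserve extra attention:

- datacenter must match the datacenter (dc) of the Consul servers.

- bind_addr is read from the network interface at startup; it must not be hard-coded, because every node has a different IP.

- acl holds the token used to access the Consul cluster (tokens.agent).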

2、DaemonSet守护进程集

这里采用守护进程来实现,在各个节点只部署一个consul agent。DaemonSet的典型使用场景:除本案例外,还适用于日志和监控等等。你只需要在每个节点仅部署一个filebeat和node-exporter,大大节约了资源。

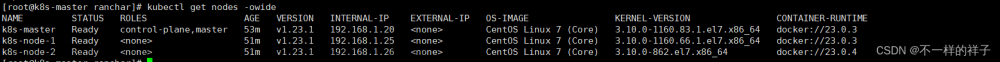

可以看到容器组共11个。

    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: consul-client
      namespace: consul
      labels:
        app: consul
        environment: prod
        component: client
    spec:
      minReadySeconds: 60
      revisionHistoryLimit: 10
      selector:
        matchLabels:
          app: consul
          environment: prod
          component: client
      updateStrategy:
        rollingUpdate:
          maxUnavailable: 1
        type: RollingUpdate
      template:
        metadata:
          namespace: consul
          labels:
            app: consul
            environment: prod
            component: client
        spec:
          containers:
            - env:
                - name: POD_NAMESPACE
                  valueFrom:
                    fieldRef:
                      apiVersion: v1
                      fieldPath: metadata.namespace
                - name: HOST_IP
                  valueFrom:
                    fieldRef:
                      apiVersion: v1
                      fieldPath: status.hostIP
                - name: POD_IP
                  valueFrom:
                    fieldRef:
                      apiVersion: v1
                      fieldPath: status.podIP
              name: consul-client
              image: consul:1.6.2
              imagePullPolicy: IfNotPresent
              command:
                - "consul"
                - "agent"
                - "-config-dir=/consul/config"
              lifecycle:
                postStart:
                  exec:
                    command:
                      - /bin/sh
                      - -c
                      - |
                        # Maximum number of attempts
                        max_attempts=30
                        # Seconds to wait between attempts
                        wait_seconds=2
                        attempt=1
                        while [ $attempt -le $max_attempts ]; do
                          echo "Checking if Consul is ready (attempt: $attempt)..."
                          # Use curl to check whether the agent's HTTP API responds
                          if curl -s http://127.0.0.1:8500/v1/agent/self > /dev/null; then
                            echo "Consul is ready."
                            # Run the reload
                            consul reload
                            exit 0
                          else
                            echo "Consul is not ready yet."
                          fi
                          # Wait before trying again
                          sleep $wait_seconds
                          attempt=$((attempt + 1))
                        done
                        echo "Consul did not become ready in time."
                        exit 1
                preStop:
                  exec:
                    command:
                      - /bin/sh
                      - -c
                      - consul leave
              ports:
                - name: http-api
                  hostPort: 8500
                  containerPort: 8500
                  protocol: TCP
                - name: dns-tcp
                  hostPort: 8600
                  containerPort: 8600
                  protocol: TCP
                - name: dns-udp
                  hostPort: 8600
                  containerPort: 8600
                  protocol: UDP
                - name: server-rpc
                  hostPort: 8300
                  containerPort: 8300
                  protocol: TCP
                - name: serf-lan-tcp
                  hostPort: 8301
                  containerPort: 8301
                  protocol: TCP
                - name: serf-lan-udp
                  hostPort: 8301
                  containerPort: 8301
                  protocol: UDP
                - name: serf-wan-tcp
                  hostPort: 8302
                  containerPort: 8302
                  protocol: TCP
                - name: serf-wan-udp
                  hostPort: 8302
                  containerPort: 8302
                  protocol: UDP
              volumeMounts:
                - name: consul-config
                  mountPath: /consul/config/consul.json
                  subPath: consul.json
                - name: consul-data-dir
                  mountPath: /consul/data
                - name: localtime
                  mountPath: /etc/localtime
              livenessProbe:
                tcpSocket:
                  port: 8500
                initialDelaySeconds: 30
                periodSeconds: 10
                successThreshold: 1
                failureThreshold: 3
                timeoutSeconds: 1
              readinessProbe:
                httpGet:
                  path: /v1/status/leader
                  port: 8500
                initialDelaySeconds: 60
                periodSeconds: 10
                successThreshold: 1
                failureThreshold: 3
                timeoutSeconds: 1
              resources:
                requests:
                  memory: "256Mi"
                  cpu: "200m"
                limits:
                  memory: "1024Mi"
                  cpu: "1000m"
              terminationMessagePath: /dev/termination-log
              terminationMessagePolicy: File
          hostNetwork: true
          dnsPolicy: ClusterFirst
          restartPolicy: Always
          schedulerName: default-scheduler
          securityContext: {}
          terminationGracePeriodSeconds: 30
          volumes:
            - name: consul-config
              configMap:
                name: consul-client
                items:
                  - key: consul.json
                    path: consul.json
            - name: consul-data-dir
              hostPath:
                path: /data/consul/data
                type: DirectoryOrCreate
            - name: localtime
              hostPath:
                path: /etc/localtime
                type: File
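After applying the manifest, it can help to confirm that every schedulable node really runs one ready agent (11 in this cluster). The following is a minimal sketch, assuming `kubectl` has access to the cluster; the helper names `counts_match` and `daemonset_ready` are made up for illustration, while `desiredNumberScheduled` and `numberReady` are fields of the DaemonSet status.

```shell
#!/bin/sh
# Sketch: check that a DaemonSet has one ready pod per node it is scheduled on.

# Pure helper: succeeds only when both counts are present, non-zero and equal.
counts_match() {
  [ -n "$1" ] && [ -n "$2" ] || return 1
  [ "$1" -gt 0 ] 2>/dev/null && [ "$1" -eq "$2" ] 2>/dev/null
}

# Reads both counts from the DaemonSet status (requires cluster access).
# Arguments: DaemonSet name, namespace.
daemonset_ready() {
  desired=$(kubectl get daemonset "$1" -n "$2" \
    -o jsonpath='{.status.desiredNumberScheduled}') || return 1
  ready=$(kubectl get daemonset "$1" -n "$2" \
    -o jsonpath='{.status.numberReady}') || return 1
  echo "desired=$desired ready=$ready"
  counts_match "$desired" "$ready"
}
```

With the names used in this article, the call would be `daemonset_ready consul-client consul`; a non-zero exit status means at least one node has no ready agent yet.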

IV. Pitfalls encountered

1. Error ==> Multiple private IPv4 addresses found. Please configure one with 'bind' and/or 'advertise'.

Solution:

Set "bind_addr": "{{ GetInterfaceIP \"eth0\" }}" in consul.json.
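The likely cause is that the pod runs with `hostNetwork: true` and therefore sees every interface of the node; when more than one of them carries a private address, Consul cannot pick one by itself. Below is a minimal sketch for listing the candidates, assuming the `ip` tool from iproute2 is available on the node. The function name `list_private_ipv4` is made up for illustration, and the filter covers only the RFC 1918 ranges, which may be narrower than what Consul itself treats as private.

```shell
#!/bin/sh
# Sketch: print "<interface> <address>" for every private IPv4 address found
# in the output of `ip -o -4 addr show`, which is read from stdin.
list_private_ipv4() {
  awk '$3 == "inet" {
    split($4, cidr, "/")          # strip the prefix length, e.g. /24
    ip = cidr[1]
    split(ip, o, ".")
    # RFC 1918 ranges only (an assumption, see the text above)
    if (o[1] == 10 ||
        (o[1] == 172 && o[2] >= 16 && o[2] <= 31) ||
        (o[1] == 192 && o[2] == 168))
      print $2, ip
  }'
}
```

On a node it would be run as `ip -o -4 addr show | list_private_ipv4`. If more than one line comes back, `bind_addr` has to name the interface explicitly, as done above with eth0.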

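2. lifecycle: after the pod was created, the postStart command consul reload failed, so the consul pod restarted over and over.

In more detail: the consul agent joined the Consul cluster, but shortly afterwards the container died and the agent left the cluster again.

I tried removing the health checks, but that did not help; the container still died.

So the health checks were not the problem. The postStart hook runs right after the container is created, with no guarantee that the consul agent is already listening, and Kubernetes kills the container when the hook fails.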

    lifecycle:
      postStart:
        exec:
          command:
            - /bin/sh
            - -c
            - consul reload
      preStop:
        exec:
          command:
            - /bin/sh
            - -c
            - consul leave

I later changed the hook to wait for the agent and retry before running consul reload.

    # Maximum number of attempts
    max_attempts=30
    # Seconds to wait between attempts
    wait_seconds=2
    attempt=1
    while [ $attempt -le $max_attempts ]; do
      echo "Checking if Consul is ready (attempt: $attempt)..."
      # Use curl to check whether the agent's HTTP API responds
      if curl -s http://127.0.0.1:8500/v1/agent/self > /dev/null; then
        echo "Consul is ready."
        # Run the reload
        consul reload
        exit 0
      else
        echo "Consul is not ready yet."
      fi
      # Wait before trying again
      sleep $wait_seconds
      attempt=$((attempt + 1))
    done
    echo "Consul did not become ready in time."
    exit 1

3. Ignore the error [ERR] agent: failed to sync remote state: No known Consul servers; it is normal.

Don't let this log line mislead you. All you need is a line like the following further down in the log.

2024/02/21 11:09:42 [INFO] consul: adding server aly-consul-02 (Addr: tcp/10.16.190.29:8300) (DC: aly-consul)
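Scanning a long log by eye for that line is tedious. Here is a minimal sketch, assuming the agent keeps the log format shown above; the function names `joined_server` and `known_servers` are made up for illustration.

```shell
#!/bin/sh
# Sketch: inspect consul agent log text read from stdin.

# Succeeds if at least one "adding server" line is present.
joined_server() {
  grep -q 'consul: adding server'
}

# Prints the name of every server the agent reported adding, one per line.
known_servers() {
  sed -n 's/.*consul: adding server \([^ ]*\) .*/\1/p'
}
```

A possible use is `kubectl logs -n consul <pod-name> | known_servers`, where `<pod-name>` stands for one of the consul-client pods. An empty result after the agent has been up for a while suggests it never reached the servers.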

4. Don't forget to configure acl; otherwise the consul agent cannot join the Consul server cluster.

    "acl": {
      "enabled": true,
      "default_policy": "deny",
      "down_policy": "extend-cache",
      "tokens": {
        "agent": "xxxx-xx-xx-xx-xxxx"
      }
    }

V. Service integration example

Add the following environment variables in deployment.yaml.

    containers:
      - env:
          - name: HOST_IP
            valueFrom:
              fieldRef:
                apiVersion: v1
                fieldPath: status.hostIP
          - name: POD_IP
            valueFrom:
              fieldRef:
                apiVersion: v1
                fieldPath: status.podIP
          - name: CONFIG_SERVICE_ENABLED
            value: 'true'
          - name: CONFIG_SERVICE_PORT
            value: '8500'
          - name: CONFIG_SERVICE_HOST
            value: $(HOST_IP)

The bootstrap.yml of the Spring Boot project is configured as follows:

    spring:
      application:
        name: xxx-service
      cloud:
        consul:
          enabled: ${CONFIG_SERVICE_ENABLED}
          host: ${CONFIG_SERVICE_HOST}
          port: ${CONFIG_SERVICE_PORT}
          discovery:
            prefer-ip-address: true
            enabled: ${CONFIG_SERVICE_ENABLED}
          config:
            format: yaml
            enabled: ${CONFIG_SERVICE_ENABLED}
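Before starting the Java service, the injected variables can be checked from a shell inside the pod. This is a minimal sketch, assuming `curl` exists in the service image; the function names `consul_base_url` and `check_local_agent` are made up for illustration, while `/v1/status/leader` is the same endpoint the readiness probe of the agent uses.

```shell
#!/bin/sh
# Sketch: build the node-local agent URL from the Deployment's env variables.
consul_base_url() {
  if [ -z "${CONFIG_SERVICE_HOST:-}" ]; then
    echo "CONFIG_SERVICE_HOST is not set" >&2
    return 1
  fi
  # Fall back to Consul's default HTTP port when no port is injected.
  printf 'http://%s:%s\n' "$CONFIG_SERVICE_HOST" "${CONFIG_SERVICE_PORT:-8500}"
}

# Asks the local agent for the current Raft leader; fails on any HTTP error
# or when the agent does not answer within 3 seconds.
check_local_agent() {
  base=$(consul_base_url) || return 1
  curl -sf --max-time 3 "$base/v1/status/leader"
}
```

Running `check_local_agent` should print the address of a Consul server if the agent on the node is healthy and connected; a failure points at the node-local agent rather than at the Java service.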

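VI. Summary

Using a Java service that registers with Consul as the example, this article walked through the whole process from deployment to integration.

The Consul address configured here is an IP address, not a domain name.

In other words, if the consul agent on the same node as a service is down, the service fails to register when it starts.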

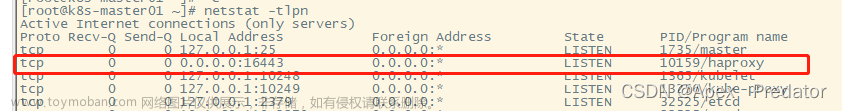

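Some may wonder: could a k8s Service or nginx sit in front of the Consul cluster, the way nginx reverse-proxies backend services?

As shown in the figure below:

I don't recommend doing that, because Consul as a service registry has one basic requirement:

A Java service must deregister from the same Consul agent it registered with.

So the load balancer would have to treat the backend consul agents as stateful rather than stateless.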
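The requirement follows from the agent HTTP API: services are registered and deregistered through endpoints of one specific agent, which keeps them in its own local state. Here is a minimal sketch of both calls, assuming `curl` is available and a token with write permission on the service; the function names and the file `definition.json` are hypothetical.

```shell
#!/bin/sh
# Sketch: register and deregister a service against ONE specific agent.

# Pure helpers that build the agent-local endpoint URLs.
register_url() {
  printf '%s/v1/agent/service/register\n' "$1"
}
deregister_url() {
  printf '%s/v1/agent/service/deregister/%s\n' "$1" "$2"
}

# Arguments: agent base URL, ACL token, path to a JSON service definition.
register_service() {
  curl -sf -X PUT -H "X-Consul-Token: $2" \
    -H 'Content-Type: application/json' \
    --data @"$3" "$(register_url "$1")"
}

# Arguments: agent base URL, ACL token, service ID.
# The base URL must be the one that was used for register_service.
deregister_service() {
  curl -sf -X PUT -H "X-Consul-Token: $2" "$(deregister_url "$1" "$3")"
}
```

Behind a stateless load balancer the deregister request may reach a different agent than the register request did. That agent has no record of the service ID, so the call would typically fail and the stale registration would stay in place.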

That's all for the highly available Consul deployment.

Source: https://www.toymoban.com/news/detail-851211.html
