This article is based on:

[Installing Prometheus on k8s with persistent data (CSDN blog)](https://blog.csdn.net/vic_qxz/article/details/119598466)


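Prerequisite: a K8s cluster with NFS or another storage backend already deployed.

Watch out for the NetworkPolicy resources that kube-prometheus creates, since they can block access. You can delete these policies afterwards; see my earlier document for details.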

Deploying kube-prometheus

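Run the following only once the configuration is finished. Storage and other settings have not been configured yet at this point, so the manifests still need to be modified first.

    $ git clone https://github.com/coreos/kube-prometheus.git # latest version at the time of writing is 0.13.0
    $ kubectl create -f manifests/setup
    $ until kubectl get servicemonitors --all-namespaces ; do date; sleep 1; echo ""; done
    $ kubectl create -f manifests/ # fails if a resource already exists

`kubectl apply -f` does the same thing, but updates a resource that already exists instead of failing.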

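In detail:

1. `kubectl create -f manifests/setup`: creates the Kubernetes resources defined in the manifests/setup directory. These are the prerequisites for everything else. In recent releases the directory holds the `monitoring` namespace and the Prometheus Operator CRDs; older releases also kept the operator's ServiceAccount, ClusterRole and ClusterRoleBinding here.

2. `until kubectl get servicemonitors --all-namespaces ; do date; sleep 1; echo ""; done`: a loop that keeps running `kubectl get servicemonitors --all-namespaces` until the command succeeds. ServiceMonitor is a resource type of the Prometheus Operator that describes how Prometheus should monitor an application. The command only succeeds once the ServiceMonitor CRD has been registered with the API server, so the loop simply waits for the CRDs from the previous step to become available.

3. `kubectl create -f manifests/`: creates the Kubernetes resources defined in the manifests/ directory, which include Prometheus, Alertmanager, Grafana and the other monitoring components.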
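The `until` loop above waits forever if the CRDs never appear. A minimal sketch of the same idea with a timeout follows; `wait_until` is a hypothetical helper name introduced for illustration, not part of kube-prometheus or kubectl.

```shell
# wait_until TIMEOUT_SECONDS COMMAND [ARGS...]
# Re-runs COMMAND about once per second until it succeeds
# or TIMEOUT_SECONDS have elapsed (hypothetical helper).
wait_until() {
  timeout_s="$1"; shift
  start=$(date +%s)
  until "$@" >/dev/null 2>&1; do
    now=$(date +%s)
    if [ $(( now - start )) -ge "$timeout_s" ]; then
      echo "timed out after ${timeout_s}s: $*" >&2
      return 1
    fi
    sleep 1
  done
}

# Example (needs a cluster), waiting at most two minutes:
# wait_until 120 kubectl get servicemonitors --all-namespaces
```

Returning a non-zero status on timeout lets a deployment script stop instead of running `kubectl create -f manifests/` against a cluster where the CRDs are still missing.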

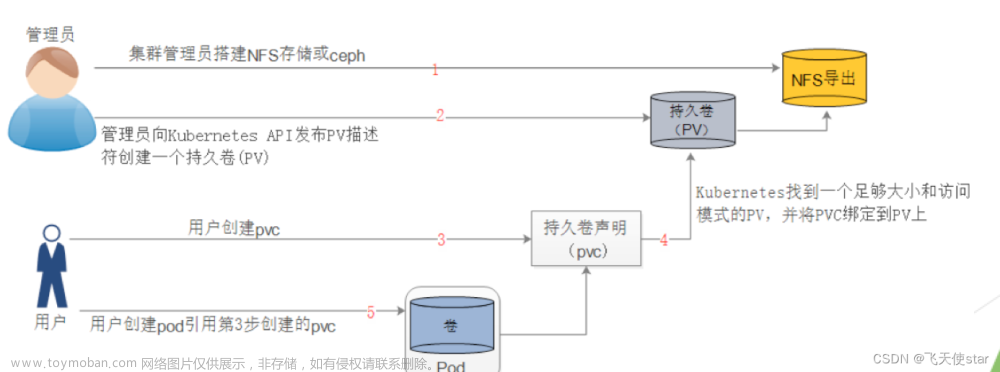

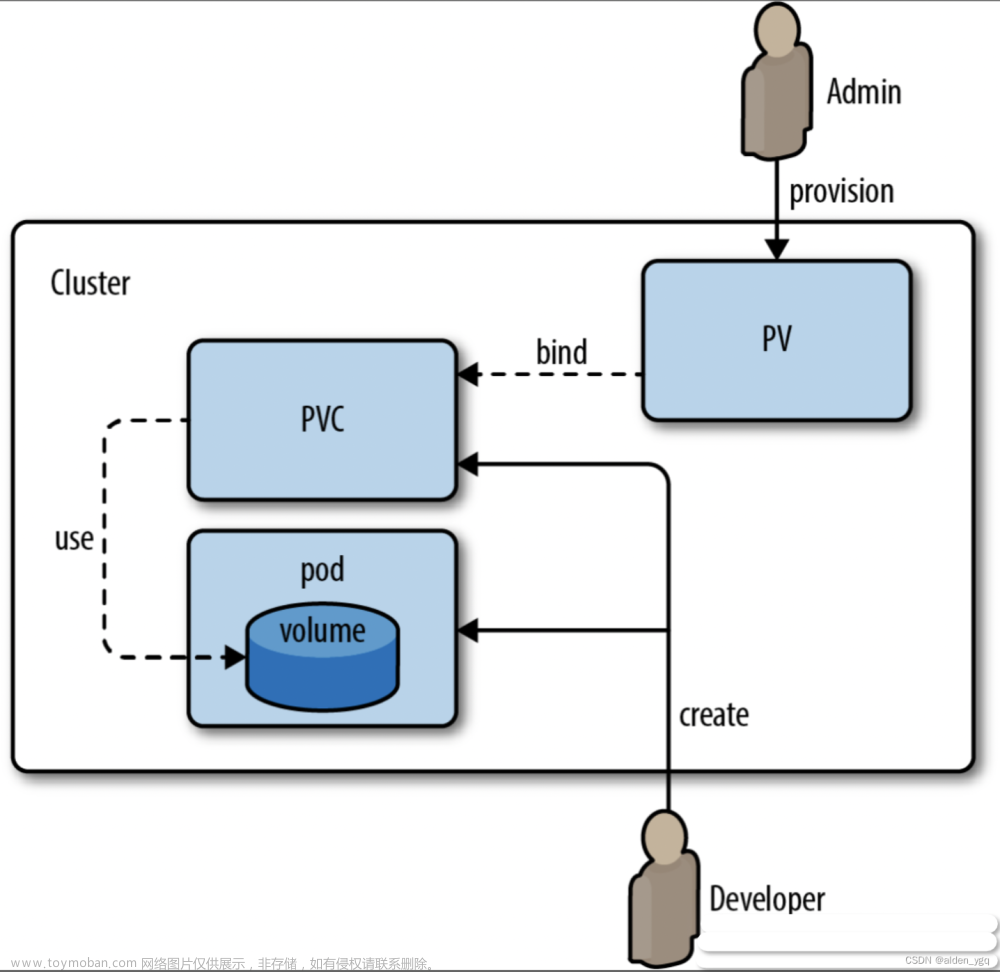

For persistent data I use NFS with dynamically provisioned PVs.

My StorageClass is named nfs-storageclass.

    root@k8s-master01:~/test/prometheus/kube-prometheus-0.13.0# kubectl get sc
    NAME                  PROVISIONER              RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
    managed-nfs-storage   fuseim.pri/ifs           Delete          Immediate           false                  4d20h
    nfs-storageclass      prometheus-nfs-storage   Retain          Immediate           false                  16h

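kube-prometheus components and configuration changes

1. Architecturally, Prometheus consists of four major components: exporters expose monitoring data over an HTTP endpoint, prometheus-server scrapes and stores the data, grafana queries prometheus-server and presents the data in a readable form, and alertmanager processes alerts and sends them out.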

prometheus-operator

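The prometheus-operator service runs as a Deployment and is the core of the whole stack. It watches the custom Prometheus and Alertmanager resources we define and generates the corresponding StatefulSets. In other words, the prometheus and alertmanager services are deployed by the operator.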

Modifying the configuration files

grafana-pvc

Create a storage volume for Grafana, then edit grafana-deployment.yaml and replace the upstream emptyDir with a persistentVolumeClaim.

Step 1: Create the PVC

    $ cd kube-prometheus/manifests/
    $ cat grafana-pvc.yaml
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      # Name of the PersistentVolumeClaim
      name: grafana
      namespace: monitoring
      annotations:
        # Must match metadata.name in nfs-storageClass.yaml
        # (legacy annotation; storageClassName below is the current field)
        volume.beta.kubernetes.io/storage-class: "nfs-storageclass"
    spec:
      # Use the nfs-storageclass storage class
      storageClassName: "nfs-storageclass"
      # Access mode: ReadWriteMany
      accessModes:
        - ReadWriteMany
        #- ReadWriteOnce
      resources:
        # Request 50Gi of storage
        requests:
          storage: 50Gi
    $ kubectl apply -f grafana-pvc.yaml
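If several components need a claim of the same shape, the manifest can be generated rather than copied. The following is a rough sketch under the assumptions of this article (namespace `monitoring`, access mode ReadWriteMany); `render_pvc` is a hypothetical helper name, not a kubectl feature.

```shell
# render_pvc NAME STORAGE_CLASS SIZE
# Prints a PersistentVolumeClaim manifest to stdout (hypothetical helper).
render_pvc() {
  name="$1"; storage_class="$2"; size="$3"
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${name}
  namespace: monitoring
spec:
  storageClassName: "${storage_class}"
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: ${size}
EOF
}

# Print the same claim as grafana-pvc.yaml above.
render_pvc grafana nfs-storageclass 50Gi
```

Piping the output into `kubectl apply -f -` would create the claim directly, without an intermediate file.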

Step 2: Modify the default Grafana deployment manifest

    $ vim grafana-deployment.yaml
    ...
          ## Find grafana-storage and point it at the PVC created above (grafana), then save.
          volumes:
          - name: grafana-storage
            persistentVolumeClaim:
              claimName: grafana
    ...
    $ kubectl apply -f grafana-deployment.yaml

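Persisting prometheus-k8s

prometheus-server scrapes data from each endpoint and stores it locally. It is created through the `Prometheus` custom resource (CRD): once that resource exists, the operator starts a StatefulSet, which is prometheus-server. By default no persistent storage is configured.

Step 1: Modify the configuration file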

    $ cd kube-prometheus/manifests/
    $ vim prometheus-prometheus.yaml
    apiVersion: monitoring.coreos.com/v1
    kind: Prometheus
    metadata:
      labels:
        prometheus: k8s
      name: k8s
      namespace: monitoring
    spec:
      alerting:
        alertmanagers:
        - name: alertmanager-main
          namespace: monitoring
          port: web
      storage: # persistence configuration
        volumeClaimTemplate:
          spec:
            storageClassName: nfs-23 # replace with your own StorageClass, e.g. nfs-storageclass
            accessModes: ["ReadWriteOnce"]
            resources:
              requests:
                storage: 100Gi
      nodeSelector:
        kubernetes.io/os: linux
      podMonitorNamespaceSelector: {}
      podMonitorSelector: {}
      replicas: 2
      resources:
        requests:
          memory: 400Mi
      ruleSelector:
        matchLabels:
          prometheus: k8s
          role: alert-rules
      securityContext:
        fsGroup: 2000
        runAsNonRoot: true
        runAsUser: 1000
      serviceAccountName: prometheus-k8s
      serviceMonitorNamespaceSelector: {}
      serviceMonitorSelector: {}
      version: v2.17.2

Apply the change. This automatically creates two PVs of the requested size, one for each replica (pods prometheus-k8s-0 and prometheus-k8s-1).

$ kubectl apply -f manifests/prometheus-prometheus.yaml
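When checking the result it helps to know which claims to expect. The sketch below lists them, assuming the operator's usual naming scheme `prometheus-<name>-db-prometheus-<name>-<ordinal>`. That scheme is an assumption not stated in this article, so compare against `kubectl get pvc -n monitoring` on your own cluster. `expected_pvcs` is a hypothetical helper name.

```shell
# expected_pvcs CR_NAME REPLICAS
# Prints one expected PVC name per replica (hypothetical helper,
# based on the assumed naming scheme described above).
expected_pvcs() {
  cr_name="$1"; replicas="$2"
  i=0
  while [ "$i" -lt "$replicas" ]; do
    echo "prometheus-${cr_name}-db-prometheus-${cr_name}-${i}"
    i=$(( i + 1 ))
  done
}

expected_pvcs k8s 2
# prints:
# prometheus-k8s-db-prometheus-k8s-0
# prometheus-k8s-db-prometheus-k8s-1
```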

Changing the retention period

The referenced tutorial appends `storage.tsdb.retention.time=180d` to the container args in manifests/setup/prometheus-operator-deployment.yaml. That is a flag of the Prometheus server rather than of prometheus-operator, so it does not belong in the operator's arguments. Set the `retention` field in the spec of the Prometheus resource instead; the operator passes it on to Prometheus.

    $ vim manifests/prometheus-prometheus.yaml
    ....
    spec:
      retention: 180d ## retention period
    ....
    $ kubectl apply -f manifests/prometheus-prometheus.yaml

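Adding an Ingress to reach Grafana and Prometheus

Access will fail at this point because of the NetworkPolicy resources. See my article on the kubernetes-networkpolicies issue for the fix.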

    $ cat ingress.yml
    ---
    # networking.k8s.io/v1beta1 was removed in Kubernetes 1.22, so the v1 API is used here.
    # Server-managed fields from the exported object (generation, managedFields) are omitted.
    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      annotations:
        k8s.eip.work/workload: grafana
        k8s.kuboard.cn/workload: grafana
      labels:
        app: grafana
      name: grafana
      namespace: monitoring
    spec:
      rules:
      - host: k8s-moni.fenghong.tech
        http:
          paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: grafana
                port:
                  name: http
    ---
    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      annotations:
        k8s.kuboard.cn/workload: prometheus-k8s
      labels:
        app: prometheus
        prometheus: k8s
      name: prometheus-k8s
      namespace: monitoring
    spec:
      rules:
      - host: k8s-prom.fenghong.tech
        http:
          paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: prometheus-k8s
                port:
                  name: web

Apply the manifests:

    ## Install the ingress controller
    $ kubectl apply -f https://kuboard.cn/install-script/v1.18.x/nginx-ingress.yaml
    ## Expose the grafana and prometheus services
    $ kubectl apply -f ingress.yml

Web access

Configuring kube-prometheus to monitor additional targets

Add an additional-scrape-configs file, for example:

    $ cat monitor/add.yaml
    - job_name: 'prometheus'
      # metrics_path defaults to '/metrics'
      # scheme defaults to 'http'.
      static_configs:
      - targets: ['192.168.0.23:9100', '192.168.0.21:9101', '192.168.0.61:9100', '192.168.0.62:9100', '192.168.0.63:9100', '192.168.0.64:9100', '192.168.0.89:9100', '192.168.0.11:9100']
    - job_name: 'mysql'
      static_configs:
      - targets: ['192.168.0.21:9104','192.168.0.23:9104']
    - job_name: 'nginx'
      static_configs:
      - targets: ['192.168.0.23:9913']
    - job_name: 'elasticsearch'
      metrics_path: "/_prometheus/metrics"
      static_configs:
      - targets: ['192.168.0.31:9200']

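Create the Secret manifest. I deploy it into the monitoring namespace. Current kubectl versions expect an explicit value for `--dry-run`, so `--dry-run=client` is used here.

    $ kubectl create secret generic additional-scrape-configs --from-file=add.yaml --dry-run=client -o yaml > additional-scrape-configs.yaml
    $ kubectl apply -f additional-scrape-configs.yaml -n monitoring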
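To make it clearer what ends up in additional-scrape-configs.yaml, here is a minimal sketch that builds a roughly equivalent Secret by hand: the file content is base64-encoded and stored under a key, and that key is what `additionalScrapeConfigs.key` refers to later. `render_scrape_secret` is a hypothetical helper name, and the manifest kubectl generates may differ in minor fields.

```shell
# render_scrape_secret FILE KEY
# Prints a Secret manifest whose data entry KEY holds the
# base64-encoded content of FILE (hypothetical helper).
render_scrape_secret() {
  file="$1"; key="$2"
  # Strip line breaks because some base64 implementations wrap long output.
  data=$(base64 < "$file" | tr -d '\n')
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: additional-scrape-configs
  namespace: monitoring
type: Opaque
data:
  ${key}: ${data}
EOF
}

# Demo with a throwaway one-job scrape config.
sample=$(mktemp)
printf '%s\n' "- job_name: 'nginx'" > "$sample"
render_scrape_secret "$sample" add.yaml
rm -f "$sample"
```

Decoding the value under `data` with `base64 -d` should return the original scrape configuration, which is a quick way to confirm that the Secret contains what you expect.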

Add the additionalScrapeConfigs option to prometheus-prometheus.yaml.

    $ cat prometheus-prometheus.yaml
    apiVersion: monitoring.coreos.com/v1
    kind: Prometheus
    metadata:
      labels:
        prometheus: k8s
      name: k8s
      namespace: monitoring
    spec:
      alerting:
        alertmanagers:
        - name: alertmanager-main
          namespace: monitoring
          port: web
      storage: # persistence configuration
        volumeClaimTemplate:
          spec:
            storageClassName: nfs-23 # replace with your own StorageClass, e.g. nfs-storageclass
            accessModes: ["ReadWriteOnce"]
            resources:
              requests:
                storage: 100Gi
      image: quay.io/prometheus/prometheus:v2.17.2
      nodeSelector:
        kubernetes.io/os: linux
      podMonitorNamespaceSelector: {}
      podMonitorSelector: {}
      replicas: 3
      resources:
        requests:
          memory: 400Mi
      ruleSelector:
        matchLabels:
          prometheus: k8s
          role: alert-rules
      securityContext:
        fsGroup: 2000
        runAsNonRoot: true
        runAsUser: 1000
      serviceAccountName: prometheus-k8s
      serviceMonitorNamespaceSelector: {}
      serviceMonitorSelector: {}
      version: v2.17.2
      additionalScrapeConfigs:
        name: additional-scrape-configs
        key: add.yaml

Then apply it:

    $ kubectl apply -f prometheus-prometheus.yaml

Access from other systems

Reference:

[Deploying an Ingress for kube-prometheus to enable HTTPS for Prometheus and Grafana (CSDN blog)](https://blog.csdn.net/Happy_Sunshine_Boy/article/details/107955691)

Prometheus

Path-based routing

Edit the YAML file kube-prometheus-0.5.0/manifests/prometheus-prometheus.yaml.

Below the `image: quay.io/prometheus/prometheus:v2.15.2` parameter, add the following parameter:

    externalUrl: https://master170.k8s:30443/prometheus

    kubectl apply -f prometheus-prometheus.yaml

Configuration: ingress-tls.yaml

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      annotations:
        kubernetes.io/ingress.class: nginx
        nginx.ingress.kubernetes.io/use-regex: "true"
        nginx.ingress.kubernetes.io/enable-cors: "true"
        nginx.ingress.kubernetes.io/rewrite-target: /$2
      name: prometheus-k8s
      namespace: monitoring
    spec:
      rules:
      - host: # your domain name
        http:
          paths:
          - path: /prometheus(/|$)(.*)
            pathType: ImplementationSpecific
            backend:
              service:
                name: prometheus-k8s
                port:
                  number: 9090

When accessing Prometheus, always include the `prometheus` path prefix.

For example:

https://master170.k8s:30443/prometheus/graph
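The path regex `/prometheus(/|$)(.*)` together with `rewrite-target: /$2` strips the prefix before the request reaches the backend. The sketch below imitates that rewrite with `sed`, purely as an illustration of the regex; it is not how ingress-nginx is implemented, and `rewrite_path` is a hypothetical helper name.

```shell
# rewrite_path REQUEST_PATH
# Applies the same capture-group rewrite as the Ingress annotations:
# everything after the /prometheus prefix becomes the new path.
rewrite_path() {
  printf '%s\n' "$1" | sed -E 's#^/prometheus(/|$)(.*)#/\2#'
}

rewrite_path /prometheus/graph   # prints /graph
rewrite_path /prometheus         # prints /
rewrite_path /prometheusx        # no match, prints /prometheusx
```

The `(/|$)` group is what keeps unrelated paths such as `/prometheusx` from matching: the prefix must be followed by a slash or by the end of the path.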

AlertManager

Edit the YAML file manifests/alertmanager-alertmanager.yaml.

Below the `image: quay.io/prometheus/alertmanager:v0.20.0` parameter, add the following parameter:

    externalUrl: https://master170.k8s:30443/alertmanager

Configuration: ingress-tls.yaml

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      annotations:
        kubernetes.io/ingress.class: nginx
        nginx.ingress.kubernetes.io/use-regex: "true"
        nginx.ingress.kubernetes.io/enable-cors: "true"
        nginx.ingress.kubernetes.io/rewrite-target: /$2
      name: prometheus-k8s
      namespace: monitoring
    spec:
      rules:
      - host: # your domain name
        http:
          paths:
          # - path: /prometheus(/|$)(.*)
          #   pathType: ImplementationSpecific
          #   backend:
          #     service:
          #       name: prometheus-k8s
          #       port:
          #         number: 9090
          - path: /alertmanager(/|$)(.*)
            pathType: ImplementationSpecific
            backend:
              service:
                name: alertmanager-main
                port:
                  number: 9093

When accessing Alertmanager, always include the `alertmanager` path prefix:

https://master170.k8s:30443/alertmanager/#/alerts