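Recent Advances in 3D Gaussian Splatting

Tong Wu 1, Yu-Jie Yuan 1, Ling-Xiao Zhang 1, Jie Yang 1, Yan-Pei Cao 2, Ling-Qi Yan 3, and Lin Gao 1

Abstract: The emergence of 3D Gaussian Splatting (3DGS) has greatly accelerated the rendering speed of novel view synthesis. Unlike neural implicit representations such as Neural Radiance Fields (NeRF), 3D Gaussian Splatting models a scene with a set of Gaussian ellipsoids, so that efficient rendering can be achieved by rasterizing the Gaussian ellipsoids into images. Apart from fast rendering, the explicit representation of 3D Gaussian Splatting also facilitates downstream tasks such as dynamic reconstruction, geometry editing, and physical simulation. Considering the rapid change and the growing number of works in this field, we present a literature review of recent 3D Gaussian Splatting methods, which can be roughly classified by functionality into 3D reconstruction, 3D editing, and other downstream applications. For a better understanding of this technique, traditional point-based rendering methods and the rendering formulation of 3D Gaussian Splatting are also introduced. This survey aims to help beginners enter this field quickly and to provide experienced researchers with a comprehensive overview, which can stimulate the future development of the 3D Gaussian Splatting representation.

Keywords: 3D Gaussian Splatting, radiance field, novel view synthesis, editing, generation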

1 Institute of Computing Technology, Chinese Academy of Sciences, Beijing, China, 100190. E-mail: Tong Wu, wutong19s@ict.ac.cn; Yu-Jie Yuan, yuanyujie@ict.ac.cn; Ling-Xiao Zhang, zhanglingxiao@ict.ac.cn; Jie Yang, yangjie01@ict.ac.cn; Lin Gao, gaolin@ict.ac.cn.

2 VAST. Yan-Pei Cao, caoyanpei@gmail.com.

3 Department of Computer Science, University of California. Ling-Qi Yan, lingqi@cs.ucsb.edu.

Manuscript received: 2024-02-28;

1 Introduction

With the development of virtual reality and augmented reality, the demand for realistic 3D content is increasing. Traditional 3D content creation methods include 3D reconstruction from scanners or multi-view images and 3D modeling with professional software. However, traditional 3D reconstruction methods are likely to produce less faithful results due to imperfect capture and noisy camera estimation. 3D modeling methods yield realistic 3D content but require professional user training and interaction, which is time-consuming.

To create realistic 3D content automatically, Neural Radiance Fields (NeRF) [1] model a 3D scene’s geometry and appearance with a density field and a color field, respectively. NeRF greatly improved the quality of novel view synthesis but still suffers from slow training and rendering. While significant efforts [2, 3, 4] have been made to accelerate it and bring it to common devices, it is still hard to find a robust method that can both train a NeRF quickly enough (≤ 1 hour) on a consumer-level GPU and render a 3D scene at an interactive frame rate (∼ 30 FPS) on common devices like cellphones and laptops. To resolve these speed issues, 3D Gaussian Splatting (3DGS) [5] rasterizes a set of Gaussian ellipsoids to approximate the appearance of a 3D scene, which not only achieves comparable novel view synthesis quality but also allows fast convergence (∼ 30 minutes) and real-time rendering (≥ 30 FPS) at 1080p resolution, making low-cost 3D content creation and real-time applications possible.

Figure 1: Structure of the literature review and taxonomy of current 3D Gaussian Splatting methods.

Building on the 3D Gaussian Splatting representation, a number of research works have appeared and more are on the way. To help readers get familiar with 3D Gaussian Splatting quickly, we present a survey that covers both traditional splatting methods and recent neural-based 3DGS methods. Two existing literature reviews [6, 7] also summarize recent works on 3DGS and serve as good references for this field. As shown in Fig. 1, we divide these works into three parts by functionality. We first introduce how 3D Gaussian Splatting enables realistic scene reconstruction in various scenarios (Sec. 2), then present scene editing techniques based on 3D Gaussian Splatting (Sec. 3), and describe how 3D Gaussian Splatting makes downstream applications such as digital humans possible (Sec. 4). Finally, we summarize recent research on 3D Gaussian Splatting at a higher level and discuss the future work remaining in this field (Sec. 5). A timeline of representative works can be found in Fig. 2.

2 Gaussian Splatting for 3D Reconstruction

2.1 Point-based Rendering

Point-based rendering aims to generate realistic images by rendering a set of discrete geometry primitives. Grossman and Dally [8] propose a rendering technique built on a purely point-based representation, where each point influences only one pixel on the screen. Instead of rendering points, Zwicker et al. [9] propose to render splats (ellipsoids), so that each splat can cover multiple pixels and the mutual overlap between splats yields hole-free images more easily than a purely point-based representation. Later splatting methods enhance this approach by introducing a texture filter for anti-aliased rendering [10], improving rendering efficiency [11, 12], and resolving discontinuous shading [13]. For more details about traditional point-based rendering techniques, please refer to [14].

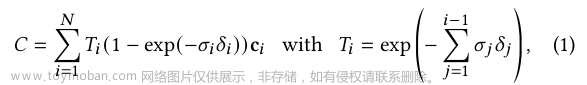

Traditional point-based rendering methods focus more on how to produce high-quality rendered results with a given geometry. With the development of recent implicit representations [15, 16, 17], researchers have started to explore point-based rendering with neural implicit representations, without any given geometry, for the 3D reconstruction task. One representative work is NeRF [1], which models geometry with an implicit density field and predicts the view-dependent color $c_i$ with another appearance field. Point-based rendering combines the colors of all sample points on a camera ray to produce a pixel color $C$ by:

| $C = \sum_{i=1}^{N} c_i \alpha_i T_i$ | (1) |

where $N$ is the number of sample points on a ray, and $c_i$ and $\alpha_i = 1 - \exp(-\sigma_i \delta_i)$ are the view-dependent color and the opacity value of the $i$-th point on the ray. $\sigma_i$ is the $i$-th point’s density value and $\delta_i$ is the distance between adjacent sample points. $T_i = \prod_{j=1}^{i-1} (1 - \alpha_j) = \exp(-\sum_{j=1}^{i-1} \sigma_j \delta_j)$ is the accumulated transmittance. To ensure high-quality rendering, NeRF [1] typically requires sampling 128 points on a single ray, which unavoidably takes longer to train and render.
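
As a minimal sketch of the compositing in Eq. (1), the following Python function blends the samples of one ray from near to far. It assumes the standard NeRF discretization, in which the opacity of sample $i$ is $1 - \exp(-\sigma_i \delta_i)$ with $\delta_i$ the spacing between adjacent samples. The function and argument names are illustrative and are not taken from any particular implementation.

```python
import math


def composite_ray(colors, sigmas, deltas):
    """Blend per-sample colors along one ray into a pixel color.

    colors: list of (r, g, b) tuples, ordered from near to far
    sigmas: per-sample density values
    deltas: spacing between adjacent samples (illustrative name)
    Returns the pixel color and the transmittance left after the last sample.
    """
    pixel = [0.0, 0.0, 0.0]
    transmittance = 1.0  # T_1 = 1: nothing occludes the first sample
    for color, sigma, delta in zip(colors, sigmas, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this sample
        weight = alpha * transmittance          # alpha_i * T_i
        for k in range(3):
            pixel[k] += weight * color[k]
        transmittance *= 1.0 - alpha            # T_{i+1} = T_i * (1 - alpha_i)
    return pixel, transmittance
```

The loop visits every sample on the ray, which is why the cost grows with the number of samples per ray mentioned above.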

To speed up both training and rendering, instead of predicting density values and colors for all sample points with neural networks, 3D Gaussian Splatting [5] abandons the neural network and directly optimizes Gaussian ellipsoids, to which attributes like position $P$, rotation $R$, scale $S$, opacity $\alpha$, and Spherical Harmonic (SH) coefficients representing view-dependent color are attached. The pixel color is determined by the Gaussian ellipsoids projected onto it from a given viewpoint. The projection of 3D Gaussian ellipsoids can be formulated as:

| $\Sigma' = J W \Sigma W^{T} J^{T}$ | (2) |

where $\Sigma = R S S^{T} R^{T}$ and $\Sigma'$ are the covariance matrices of a 3D Gaussian ellipsoid and of its projection onto the 2D image, respectively, from a viewpoint with viewing transformation matrix $W$. $J$ is the Jacobian matrix of the projective transformation. 3DGS shares a similar rendering process with NeRF, but there are two major differences between them:

1. 3DGS models opacity values directly, while NeRF transforms density values into opacity values.

2. 3DGS uses rasterization-based rendering, which does not require sampling points, while NeRF requires dense sampling in 3D space.

Without sampling points and querying a neural network, 3DGS becomes extremely fast and achieves ∼ 30 FPS on a common device, with rendering quality comparable to NeRF.
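
To make Eq. (2) concrete, the sketch below builds the 3D covariance from a rotation and a scale and projects it to a 2×2 image-space covariance. It rests on several assumptions that the text above does not state: $W$ is taken as the 3×3 rotational part of the viewing transformation, $J$ is the 2×3 Jacobian of a pinhole projection with hypothetical focal lengths `fx` and `fy`, and all helper names are illustrative.

```python
def matmul(a, b):
    """Multiply two matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(a):
    """Transpose a matrix given as nested lists."""
    return [list(row) for row in zip(*a)]


def covariance_3d(rotation, scale):
    """Sigma = R S S^T R^T, with S = diag(scale) and R a 3x3 rotation matrix."""
    s = [[scale[i] if i == j else 0.0 for j in range(3)] for i in range(3)]
    rs = matmul(rotation, s)
    return matmul(rs, transpose(rs))  # (R S)(R S)^T = R S S^T R^T


def perspective_jacobian(point_cam, fx, fy):
    """2x3 Jacobian of the pinhole map (fx*x/z, fy*y/z) at a camera-space point."""
    x, y, z = point_cam
    return [[fx / z, 0.0, -fx * x / (z * z)],
            [0.0, fy / z, -fy * y / (z * z)]]


def project_covariance(sigma, view_rot, jac):
    """Sigma' = J W Sigma W^T J^T, returned as a 2x2 matrix."""
    jw = matmul(jac, view_rot)
    return matmul(matmul(jw, sigma), transpose(jw))
```

Using a 2×3 Jacobian yields the 2×2 covariance directly; an implementation that keeps a 3×3 Jacobian would instead discard the third row and column of the result.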

[Figure 2 timeline, Jun 2023 to Mar 2024, grouped into 3D Reconstruction, 3D Editing, and Applications: Gaussian Splatting [5], Dynamic 3D Gaussians [18], Deformable 3D Gaussians [19], DreamGaussian [20], PhysGaussian [21], SuGaR [22], Relightable 3D Gaussian [23], GaussianShader [24], GPS-Gaussian [25], Zou et al. [26], DreamGaussian4D [27], SC-GS [28], VR-GS [29], GaMes [30], Gao et al. [31], GaussianPro [32]]

Figure 2: A brief timeline of representative works with the 3D Gaussian Splatting representation.

2.2 Quality Enhancement

Although 3DGS produces high-quality reconstruction results, there is still room to improve its rendering. Mip-Splatting [33] observes that changing the sampling rate, for example, the focal length, can greatly degrade the quality of rendered images by introducing high-frequency Gaussian-shaped artifacts or strong dilation effects. To eliminate the high-frequency Gaussian-shaped artifacts, Mip-Splatting [33] constrains the frequency of the 3D representation to be below half the maximum sampling frequency determined by the training images. In addition, to avoid the dilation effects, it applies a 2D Mip filter to the projected Gaussian ellipsoids to approximate the box filter, similar to EWA-Splatting [10]. MS3DGS [34] also targets the aliasing problem of the original 3DGS and introduces a multi-scale Gaussian splatting representation: when rendering a scene at a novel resolution level, it selects Gaussians from different scale levels to produce alias-free images. Analytic-Splatting [35] approximates the cumulative distribution function of Gaussians with a logistic function to better model each pixel’s intensity response for anti-aliasing. SA-GS [36] applies an adaptive 2D low-pass filter at test time according to the rendering resolution and camera distance.
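
One common way to realize a 2D low-pass filter on a projected Gaussian is to convolve it with a small isotropic Gaussian and rescale the result so that the splat's integrated energy stays the same. The sketch below assumes this form. The filter variance `s`, its default value, and the function name are illustrative assumptions rather than the exact formulation of any method cited above.

```python
import math


def filtered_splat(cov2d, offset, s=0.1):
    """Evaluate a low-pass-filtered 2D Gaussian at a pixel offset from its center.

    cov2d:  2x2 projected covariance [[a, b], [c, d]]
    offset: (dx, dy) from the projected center, in pixels
    s:      variance of the isotropic filter (assumed value)
    """
    (a, b), (c, d) = cov2d
    det = a * d - b * c
    fa, fd = a + s, d + s                # convolution adds s to the diagonal
    fdet = fa * fd - b * c
    dx, dy = offset
    # Quadratic form x^T (cov2d + s I)^{-1} x, using the closed-form 2x2 inverse.
    quad = (fd * dx * dx - (b + c) * dx * dy + fa * dy * dy) / fdet
    # The integral of a 2D Gaussian scales with sqrt(det), so this factor
    # keeps the integrated energy of the splat unchanged after filtering.
    scale = math.sqrt(max(det, 0.0) / fdet)
    return scale * math.exp(-0.5 * quad)
```

With this normalization, a Gaussian much smaller than a pixel is spread out and dimmed rather than simply enlarged, which is the behavior a dilation-free filter aims for.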

Apart from the aliasing problem, the capability of rendering view-dependent effects also needs to be improved. To produce more faithful view-dependent effects, VDGS [37] uses 3DGS to represent 3D shapes and predicts attributes like view-dependent color and opacity with a NeRF-like neural network instead of the Spherical Harmonic (SH) coefficients of the original 3DGS. Scaffold-GS [38] initializes a voxel grid and attaches learnable features to each voxel point, so that all attributes of Gaussians are determined by interpolated features and lightweight neural networks. Based on Scaffold-GS, Octree-GS [39] introduces a level-of-detail strategy to better capture details. Instead of changing the view-dependent appearance modeling approach, StopThePop [40] points out that 3DGS tends to cheat view-dependent effects by popping 3D Gaussians due to the per-ray depth sorting, which leads to less faithful results when the viewpoint is rotated. To mitigate popping, StopThePop [40] replaces the per-ray depth sorting with tile-based sorting to ensure a consistent sorting order within a local region.

To better guide the growth of 3D Gaussians, GaussianPro [32] introduces a progressive propagation strategy that updates Gaussians by considering the normal consistency between neighboring views and adding plane constraints, as shown in Fig. 3. GeoGaussian [41] densifies Gaussians on their tangent planes and encourages smooth geometric properties between neighboring Gaussians. RadSplat [42] initializes 3DGS with a point cloud derived from a trained neural radiance field and prunes Gaussians with a multi-view importance score. To deal with more complex shading such as specular and anisotropic components, Spec-Gaussian [43] utilizes Anisotropic Spherical Gaussians to approximate the appearance of 3D scenes.

TRIPS [44] attaches a neural feature to each Gaussian and renders pyramid-like image feature planes according to the projected Gaussian size, similar to ADOP [45], to resolve the blur issue of the original 3DGS. Handling the same issue, FreGS [46] applies frequency-domain regularization to the rendered 2D image to encourage the recovery of high-frequency details. GES [47] utilizes the Generalized Normal Distribution (GND) to sharpen scene edges. To resolve the sensitivity of 3DGS to initialization, RAIN-GS [48] initializes Gaussians sparsely with large variance from the SfM point cloud and progressively applies low-pass filtering to avoid 2D Gaussian projections smaller than a pixel. Pixel-GS [49] takes into account the number of pixels that a Gaussian covers from all input viewpoints in the splitting process and scales the gradient according to the distance to the camera to suppress floaters. Bulò et al. [50] also leverage pixel-level error as a densification criterion and revise the opacity setting in the cloning process for a more stable training process.

Quantitative results of different reconstruction methods can be found in Table 1. 3DGS-based methods and NeRF-based methods achieve comparable quality, but 3DGS-based methods render faster.

Figure 3: Overview of GaussianPro [32]. Neighboring views’ normal direction consistency is considered to produce better reconstruction results.

Table 1: Quantitative comparison of novel view synthesis results on the MipNeRF 360 dataset [51] using PSNR, SSIM and LPIPS metrics.

| Methods | PSNR ↑ | SSIM ↑ | LPIPS ↓ |
| --- | --- | --- | --- |
| MipNeRF [52] | 24.04 | 0.616 | 0.441 |
| MipNeRF 360 [51] | 27.57 | 0.793 | 0.234 |
| ZipNeRF [53] | 28.54 | 0.828 | 0.189 |
| 3DGS [5] | 27.21 | 0.815 | 0.214 |
| Mip-Splatting [33] | 27.79 | 0.827 | 0.203 |
| Scaffold-GS [38] | 28.84 | 0.848 | 0.220 |
| VDGS [37] | 27.66 | 0.809 | 0.223 |
| GaussianPro [32] | 27.92 | 0.825 | 0.208 |

2.3 Compression and Regularization

Although 3D Gaussian Splatting achieves real-time rendering, there is room for improvement in terms of lower computational requirements and better point distribution. Some methods change the original representation to reduce computational resources.

Vector quantization, a traditional compression method in signal processing that clusters multi-dimensional data into a finite set of representations, is widely used to compress Gaussian attributes [54, 55, 56, 57, 58]. C3DGS [54] adopts residual vector quantization (R-VQ) [59] to represent geometric attributes, including scaling and rotation. SASCGS [56] utilizes vector clustering to encode color and geometric attributes into two codebooks with a sensitivity-aware K-Means method. As shown in Fig. 4, EAGLES [57] quantizes all attributes, including color, position, opacity, rotation, and scaling, and shows that quantizing opacity leads to fewer floaters or visual artifacts in the novel view synthesis task. Compact3D [55] does not quantize opacity and position, because sharing them results in overlapping Gaussians. LightGaussian [58] prunes Gaussians with smaller importance scores and adopts the octree-based lossless compression of G-PCC [60] for the position attribute, because the subsequent rasterization accuracy is sensitive to position. Based on the same importance score calculation, Mini-Splatting [61] samples Gaussians instead of pruning points to avoid artifacts caused by pruning.

SOGS [62] adopts a different approach from vector quantization. It arranges Gaussian attributes into multiple 2D grids. These grids are sorted, and a smoothness regularization penalizes all pixels whose values differ strongly from their local neighborhood on the 2D grid. HAC [63] adopts the idea of Scaffold-GS [38] to model the scene with a set of anchor points and learnable features on these anchor points. It further introduces an adaptive quantization module to compress the features of anchor points with a multi-resolution hash grid [2]. Jo et al. [64] propose to identify unnecessary Gaussians to compress 3DGS and accelerate computation. Apart from 3D compression, 3D Gaussian Splatting has also been applied to 2D image compression [65], where the 3D Gaussians degenerate to 2D Gaussians.
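
The basic idea of vector quantization can be illustrated with plain k-means: every attribute vector is replaced by the index of its nearest codebook entry, so only the small codebook and one index per Gaussian need to be stored. The sketch below is a generic illustration under that assumption. It is not the residual, sensitivity-aware, or learned variant used by the cited methods, and all names are illustrative.

```python
import random


def nearest(codebook, vec):
    """Index of the codebook entry closest to vec (squared Euclidean distance)."""
    return min(range(len(codebook)),
               key=lambda k: sum((c - v) ** 2 for c, v in zip(codebook[k], vec)))


def build_codebook(vectors, num_codes, iterations=10, seed=0):
    """Plain k-means. Returns (codebook, indices), one index per input vector."""
    rng = random.Random(seed)
    codebook = [list(v) for v in rng.sample(vectors, num_codes)]
    for _ in range(iterations):
        indices = [nearest(codebook, v) for v in vectors]
        for k in range(num_codes):
            members = [v for v, idx in zip(vectors, indices) if idx == k]
            if members:  # keep the old centroid if a cluster is empty
                codebook[k] = [sum(col) / len(members) for col in zip(*members)]
    indices = [nearest(codebook, v) for v in vectors]
    return codebook, indices


def dequantize(codebook, indices):
    """Recover approximate attribute vectors from the codebook and indices."""
    return [codebook[i] for i in indices]
```

Attributes whose small errors are highly visible, such as position, are often excluded from this kind of sharing, which matches the choices described above for Compact3D and LightGaussian.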

In terms of disk data storage, SASCGS [56] compresses the data with the DEFLATE entropy encoding method, which combines the LZ77 algorithm and Huffman coding. SOGS [62] compresses the RGB grid with JPEG XL and stores all other attributes as 32-bit OpenEXR images with zip compression. Quantitative reconstruction results and sizes of 3D scenes after compression are shown in Table 2.
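
As a small illustration of DEFLATE-based storage, the sketch below serializes a list of codebook indices and compresses it with Python's standard `zlib` module, which wraps a DEFLATE stream in a short header and checksum. The 16-bit index layout and the function names are assumptions made for this example and do not describe the file format of any cited method.

```python
import struct
import zlib


def pack_indices(indices):
    """Serialize indices as little-endian unsigned 16-bit integers and compress them."""
    raw = struct.pack(f"<{len(indices)}H", *indices)
    return zlib.compress(raw, level=9)


def unpack_indices(blob):
    """Invert pack_indices: decompress and decode the 16-bit indices."""
    raw = zlib.decompress(blob)
    return list(struct.unpack(f"<{len(raw) // 2}H", raw))
```

Because the compression is lossless, the round trip returns exactly the stored indices, and repetitive index streams shrink considerably.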

Figure 4: Pipeline from EAGLES [57]. Vector Quantization (VQ) is utilized to compress Gaussian attributes.

Table 2: Comparison of different compression methods on the MipNeRF360 [51] dataset. Size is measured in MB.

| Methods | SSIM ↑ | PSNR ↑ | LPIPS ↓ | Size (MB) ↓ |
| --- | --- | --- | --- | --- |
| 3DGS [5] | 0.815 | 27.21 | 0.214 | 750 |
| C3DGS [54] | 0.798 | 27.08 | 0.247 | 48.8 |
| Compact3D [55] | 0.808 | 27.16 | 0.228 | / |
| EAGLES [57] | 0.81 | 27.15 | 0.24 | 68 |
| SOGS [62] | 0.763 | 25.83 | 0.273 | 18.2 |
| SASCGS [56] | 0.801 | 26.98 | 0.238 | 28.80 |
| LightGaussian [58] | 0.857 | 28.45 | 0.210 | 42.48 |

2.4 Dynamic 3D Reconstruction

Like NeRF, 3DGS can also be extended to reconstruct dynamic scenes. The core of dynamic 3DGS lies in how to model the variation of Gaussian attribute values over time. The most straightforward way is to assign different attribute values to each 3D Gaussian at different timesteps. Luiten et al. [18] regard the center and rotation (quaternion) of each 3D Gaussian as variables that change over time, while other attributes remain constant over all timesteps, thus achieving 6-DOF tracking by reconstructing dynamic scenes. However, the frame-by-frame discrete definition lacks continuity, which can cause poor results in long-term tracking. Therefore, physically based constraints are introduced in the form of three regularization losses: short-term local-rigidity and local-rotation similarity losses and a long-term local-isometry loss. However, this method still lacks inter-frame correlation and requires high storage overhead for long sequences.

Therefore, decomposing spatial and temporal information and modeling them with a canonical space and a deformation field, respectively, has become another direction of exploration. The canonical space is a static 3DGS, so the problem becomes how to model the deformation field. One way is to fit it implicitly with an MLP network, similar to the dynamic NeRF [66]. Yang et al. [19] follow this idea and feed the positional-encoded Gaussian position and timestep $t$ to an MLP, which outputs offsets of the position, rotation, and scaling of each 3D Gaussian. However, inaccurate poses may affect rendering quality. This is not significant in the continuous modeling of NeRF, but the discrete 3DGS can amplify the problem, especially in the time interpolation task. They therefore add linearly decaying Gaussian noise to the encoded time vector to improve temporal smoothness without additional computational overhead. Some results are shown in Fig. 5.

4D-GS [67] adopts multi-resolution HexPlane voxels [68] rather than positional encoding to encode the temporal and spatial information of each 3D Gaussian and utilizes different compact MLPs for different attributes. For stable training, it first optimizes the static 3DGS and then optimizes the deformation field represented by an MLP. GauFRe [69] applies the exponential and normalization operations to the scaling and rotation, respectively, after adding the delta values predicted by an MLP, ensuring convenient and reasonable optimization. As dynamic scenes contain large static parts, it randomly initializes the point cloud into dynamic and static point clouds, optimizes them accordingly, and renders them together to decouple the dynamic part from the static part. 3DGStream [70] enables online training of 3DGS for dynamic scene reconstruction by modeling the transformation between frames as a neural transformation cache and adaptively adding 3D Gaussians to handle emerging objects. 4DGaussianSplatting [71] turns 3D Gaussians into 4D Gaussians and slices the 4D Gaussians into 3D Gaussians for each time step. The sliced 3D Gaussians are projected onto the image plane to reconstruct the corresponding frame. Guo et al. [72] and GaussianFlow [73] introduce 2D flow estimation results into the training of dynamic 3D Gaussians, which supports deformation modeling between neighboring frames and enables superior 4D reconstruction and 4D generation results. TOGS [74] constructs an opacity offset table to model the changes in digital subtraction angiography. Zhang et al. [75] leverage a diffusion prior to enhance dynamic scene reconstruction and propose a neural bone transformation module for reconstructing animatable objects from monocular videos.
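
The canonical-space-plus-deformation-field design with annealed time noise can be sketched as follows. The deformation network is represented by any callable `mlp` that maps a feature list to three offset lists, which is a placeholder and not a real network. The frequency counts, the noise scale, and all names are illustrative assumptions.

```python
import math
import random


def positional_encoding(value, num_freqs):
    """Map a scalar to [sin(2^k * pi * v), cos(2^k * pi * v)] for k < num_freqs."""
    out = []
    for k in range(num_freqs):
        out.append(math.sin((2 ** k) * math.pi * value))
        out.append(math.cos((2 ** k) * math.pi * value))
    return out


def annealed_time_encoding(t, step, total_steps, num_freqs=6,
                           noise_scale=0.1, rng=random):
    """Encoded timestep plus Gaussian noise whose std decays linearly to zero."""
    decay = max(0.0, 1.0 - step / total_steps)
    return [e + rng.gauss(0.0, noise_scale * decay)
            for e in positional_encoding(t, num_freqs)]


def deform(position, rotation, scale, t, mlp, step, total_steps):
    """Query the deformation callable and add its offsets to the canonical Gaussian."""
    features = []
    for coord in position:
        features.extend(positional_encoding(coord, 10))
    features.extend(annealed_time_encoding(t, step, total_steps))
    d_pos, d_rot, d_scale = mlp(features)
    add = lambda a, b: [x + y for x, y in zip(a, b)]
    return add(position, d_pos), add(rotation, d_rot), add(scale, d_scale)
```

Once `step` reaches `total_steps`, the noise vanishes and the encoding becomes deterministic, so the smoothing only affects the early part of training.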

Compared to NeRF, 3DGS is an explicit representation, and implicit deformation modeling requires many parameters, which may cause overfitting. Some explicit deformation modeling methods have therefore been proposed, which also ensure fast training. Katsumata et al. [76] use a Fourier series to fit the changes of the Gaussian position, inspired by the fact that the motion of humans and articulated objects is sometimes periodic. The rotation is approximated by a linear function. Other attributes remain unchanged over time. Dynamic optimization thus amounts to optimizing the parameters of the Fourier series and the linear function, and the number of parameters is independent of time. These parametric functions are continuous functions of time, ensuring temporal continuity and thus the robustness of novel view synthesis. In addition to the image losses, a bidirectional optical flow loss is also introduced. Polynomial fitting and Fourier approximation have advantages in modeling smooth motion and violent motion, respectively. Gaussian-Flow [77] therefore combines these two methods in the time and frequency domains to capture the time-dependent residuals of the attributes, named the Dual-Domain Deformation Model (DDDM). The position, rotation, and color are considered to change over time. To prevent optimization problems caused by uniform time division, this work adopts adaptive timestep scaling. Finally, the optimization alternates between static optimization and dynamic optimization and introduces a temporal smoothness loss and a KNN rigid loss.

Li et al. [78] introduce a temporal radial basis function to represent temporal opacity, which can effectively model scene content that emerges or vanishes. A polynomial function is then exploited to model the motion and rotation of 3D Gaussians. They also replace the spherical harmonics with features to represent view- and time-related color. These features consist of three parts: base color, view-related feature, and time-related feature. The latter two are translated into a residual color through an MLP, which is added to the base color to obtain the final color. During optimization, new 3D Gaussians are sampled at under-optimized positions based on training error and coarse depth. The explicit modeling used in the above methods is based on commonly used functions. In contrast, DynMF [79] assumes that each dynamic scene is composed of a finite and fixed number of motion trajectories and argues that a learned basis of the trajectories will be smoother and more expressive. All motion trajectories in the scene can be linearly represented by this learned basis, and a small temporal MLP is used to generate the basis. The position and rotation change over time, and both share the motion coefficients with different motion bases. Regularization, sparsity, and local rigidity terms on the motion coefficients are introduced during optimization.
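
The explicit parameterization with a Fourier series for position and a linear function for rotation can be sketched as below. The normalization of time to one period, the number of Fourier terms, and the renormalization of the quaternion are assumptions of this example, and the names are illustrative.

```python
import math


def fourier_position(t, mean, cos_coeffs, sin_coeffs):
    """Position at time t: mean + sum_k [a_k cos(2 pi k t) + b_k sin(2 pi k t)].

    cos_coeffs and sin_coeffs hold one 3-vector per frequency k = 1, 2, ...
    """
    pos = list(mean)
    for k, (a_k, b_k) in enumerate(zip(cos_coeffs, sin_coeffs), start=1):
        phase = 2.0 * math.pi * k * t
        for axis in range(3):
            pos[axis] += a_k[axis] * math.cos(phase) + b_k[axis] * math.sin(phase)
    return pos


def linear_rotation(t, q0, q_rate):
    """Quaternion that changes linearly in t, renormalized to unit length."""
    q = [a + b * t for a, b in zip(q0, q_rate)]
    norm = math.sqrt(sum(x * x for x in q))
    return [x / norm for x in q]
```

The parameter count depends only on the number of Fourier terms and not on the number of frames, which is the property emphasized in the text above.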

Figure 5: The results of Deformable3DGS [19]. Given a set of monocular multi-view images (a), this method can achieve novel view synthesis (b) and time synthesis (c), and has better rendering quality compared to HyperNeRF [80] (d).

Table 3: Quantitative comparison of novel view synthesis results on the D-NeRF [66] dataset using PSNR, SSIM and LPIPS metrics.

| Methods | PSNR ↑ | SSIM ↑ | LPIPS ↓ |
| --- | --- | --- | --- |
| D-NeRF [66] | 31.69 | 0.975 | 0.057 |
| TiNeuVox [81] | 33.76 | 0.984 | 0.044 |
| Tensor4D [82] | 27.72 | 0.945 | 0.051 |
| K-Planes [83] | 32.32 | 0.973 | 0.038 |
| CoGS [84] | 37.90 | 0.983 | 0.027 |
| GauFRe [69] | 34.80 | 0.985 | 0.028 |
| 4D-GS [67] | 34.01 | 0.989 | 0.025 |
| Yang et al. [19] | 39.51 | 0.990 | 0.012 |
| SC-GS [28] | 43.30 | 0.997 | 0.0078 |

There are also some other directions. 4DGS [85] regards the spacetime of the scene as a whole and transforms 3D Gaussians into 4D Gaussians, that is, it extends the attribute values defined on Gaussians to 4D space. For example, the scaling matrix is diagonal, so adding a scaling factor for the time dimension on the diagonal forms the scaling matrix in 4D space. The 4D extension of the spherical harmonics (SH) can be expressed as the combination of SH with 1D basis functions. SWAGS [86] divides the dynamic sequence into windows based on the amount of motion and trains a separate dynamic 3DGS model in each window, with its own canonical space and deformation field. The deformation field uses a tunable MLP [87], which focuses more on modeling the dynamic part of the scene. Finally, fine-tuning ensures temporal consistency between windows by using the overlapping frame to add constraints. The MLP is fixed and only the canonical representation is optimized during fine-tuning.
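
One standard way to obtain a 3D Gaussian from a 4D spacetime Gaussian at a given time is to condition the 4D distribution on that time. The sketch below applies the textbook conditional-Gaussian formulas and returns an unnormalized temporal weight. It is a generic illustration that may differ from the cited implementations, and the function name and argument layout are assumptions.

```python
import math


def slice_4d_gaussian(mean4, cov4, t):
    """Condition a 4D Gaussian over (x, y, z, time) on time = t.

    mean4: [mx, my, mz, mt]
    cov4:  symmetric 4x4 covariance as nested lists, time in the last row/column
    Returns the 3D mean, the 3x3 covariance, and a temporal weight in (0, 1].
    """
    var_t = cov4[3][3]
    cross = [cov4[i][3] for i in range(3)]  # covariance between space and time
    dt = t - mean4[3]
    mean3 = [mean4[i] + cross[i] * dt / var_t for i in range(3)]
    cov3 = [[cov4[i][j] - cross[i] * cross[j] / var_t for j in range(3)]
            for i in range(3)]
    # Unnormalized marginal over time: equals 1 at the Gaussian's temporal center.
    weight = math.exp(-0.5 * dt * dt / var_t)
    return mean3, cov3, weight
```

The space-time cross terms make the sliced mean move linearly with `t`, while the temporal weight fades the Gaussian in and out around its temporal center.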

These dynamic modeling methods can be further applied in the medical field, such as markerless motion reconstruction for motion analysis of infants and neonates [88], which introduces additional mask and depth supervision, and monocular endoscopic reconstruction [89, 90, 91, 92]. Quantitative reconstruction results of representative NeRF-based and 3DGS-based methods are reported in Table 3. 3DGS-based methods have clear advantages over NeRF-based methods due to their explicit geometry representation, which can model dynamics more easily. The efficient rendering of 3DGS also avoids the dense sampling and querying of neural fields required by NeRF-based methods and makes downstream applications of dynamic reconstruction, such as free-viewpoint video, more feasible.

2.5 3D Reconstruction from Challenging Inputs

While most methods experiment on regular input data with dense viewpoints in relatively small scenes, there are also works that target reconstructing 3D scenes from challenging inputs such as sparse-view input, data without camera parameters, and larger scenes like urban streets. FSGS [93] is the first to explore reconstructing 3D scenes from sparse-view input. It initializes sparse Gaussians from structure-from-motion (SfM) methods and densifies them by unpooling existing Gaussians. To allow faithful geometry reconstruction, an extra pre-trained 2D depth estimation network helps to supervise the rendered depth images. SparseGS [94], CoherentGS [95], and DNGaussian [96] also target 3D reconstruction from sparse-view inputs by introducing depth inputs estimated by a pre-trained 2D network. SparseGS further removes Gaussians with incorrect depth values and utilizes the Score Distillation Sampling (SDS) loss [97] to encourage rendered results from novel viewpoints to be more faithful. GaussianObject [98] instead initializes Gaussians with a visual hull and fine-tunes a pre-trained ControlNet [99] to repair degraded rendered images generated by adding noise to the Gaussians' attributes, which outperforms previous NeRF-based sparse-view reconstruction methods as shown in Fig. 6. Moving a step forward, pixelSplat [100] reconstructs 3D scenes from single-view input without any data priors. It extracts pixel-aligned image features similar to PixelNeRF [101] and predicts attributes for each Gaussian with neural networks. MVSplat [102] brings the cost volume representation into sparse-view reconstruction, where the cost volume is taken as input by the network that predicts the attributes of Gaussians. SplatterImage [103] also works on single-view data but instead utilizes a U-Net [104] to translate the input image into attributes of Gaussians. It can be extended to multi-view inputs by aggregating the Gaussians predicted from different viewpoints via a warping operation.
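Depth maps predicted by a monocular network generally lack metric scale, so the supervision of rendered depth mentioned above is often expressed through a measure that ignores scale and shift. The sketch below shows one such choice, a Pearson-correlation loss between a rendered depth map and an estimated one. The function name and the flattened-list inputs are assumptions for illustration, and the individual methods cited above may use different depth losses.

```python
import math

def pearson_depth_loss(rendered, estimated):
    """Return 1 - Pearson correlation between two flattened depth maps.

    The value is unchanged by a positive rescaling or a constant shift of
    either input, which suits depth estimates without metric scale.
    """
    n = len(rendered)
    mean_r = sum(rendered) / n
    mean_e = sum(estimated) / n
    cov = sum((r - mean_r) * (e - mean_e) for r, e in zip(rendered, estimated))
    var_r = sum((r - mean_r) ** 2 for r in rendered)
    var_e = sum((e - mean_e) ** 2 for e in estimated)
    eps = 1e-12  # avoids division by zero for constant depth maps
    return 1.0 - cov / (math.sqrt(var_r * var_e) + eps)

# Hypothetical depths: the estimate equals the rendering up to scale and shift.
rendered_depth = [1.0, 2.0, 3.0, 4.0]
monocular_depth = [12.0, 14.0, 16.0, 18.0]
loss = pearson_depth_loss(rendered_depth, monocular_depth)
```

The loss is close to zero when the two depth maps agree up to an affine change and approaches two when their ordering is reversed.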

Figure 6:Results by GaussianObject [98]. Compared to previous NeRF-based sparse-view reconstruction methods [105, 106] and recent 3DGS [5], GaussianObject achieves high-quality 3D reconstruction from only 4 views.

For urban scene data, PVG [107] makes each Gaussian's mean and opacity time-dependent functions centered at the Gaussian's life peak (the time of its maximum prominence). DrivingGaussian [108] and HUGS [109] reconstruct dynamic driving data by first incrementally optimizing static 3D Gaussians and then composing them with the 3D Gaussians of dynamic objects. This process is also assisted by the Segment Anything Model [110] and input LiDAR depth data. StreetGaussians [111] models the static background with a static 3DGS and dynamic objects with a dynamic 3DGS, where Gaussians are transformed by tracked vehicle poses and their appearance is approximated with time-dependent Spherical Harmonics (SH) coefficients. SGD [112] incorporates diffusion priors into street scene reconstruction to improve novel view synthesis results, similar to ReconFusion [113]. HGS-Mapping [114] separately models the textureless sky, the ground plane, and other objects for more faithful reconstruction. VastGaussian [115] divides a large scene into multiple regions based on the camera distribution projected onto the ground and learns to reconstruct the scene by iteratively adding more viewpoints into training based on visibility criteria. In addition, it models appearance changes with an optimizable appearance embedding for each view. CityGaussian [116] also models large-scale scenes with a divide-and-conquer strategy and further introduces level-of-detail rendering based on the distance from the camera to a Gaussian. To facilitate comparisons of 3DGS methods on urban scenes, GauU-Scene [117] provides a large-scale dataset covering over 1.5 km².
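The divide-and-conquer idea can be sketched as follows, assuming each camera is reduced to its 2D position on the ground plane. Cameras are cut into columns of near-equal size along one axis and each column is then cut along the other axis. The function name and the equal-count rule are illustrative assumptions; the visibility-based selection of additional viewpoints described above is not covered here.

```python
def partition_cameras(ground_xy, cols, rows):
    """Split camera ground-plane positions into cols x rows regions.

    Cameras are sorted along x and cut into `cols` groups of near-equal size;
    each group is then sorted along y and cut into `rows` cells.
    Returns a list of cells, each a list of camera indices.
    """
    def split(indices, parts):
        size, extra = divmod(len(indices), parts)
        chunks, start = [], 0
        for p in range(parts):
            end = start + size + (1 if p < extra else 0)
            chunks.append(indices[start:end])
            start = end
        return chunks

    by_x = sorted(range(len(ground_xy)), key=lambda i: ground_xy[i][0])
    cells = []
    for column in split(by_x, cols):
        by_y = sorted(column, key=lambda i: ground_xy[i][1])
        cells.extend(split(by_y, rows))
    return cells

# Hypothetical camera positions clustered around four street corners.
cameras = [(0.0, 0.0), (0.1, 5.0), (4.0, 0.2), (4.2, 5.1),
           (0.2, 0.3), (0.3, 5.2), (4.1, 0.1), (4.3, 5.3)]
cells = partition_cameras(cameras, cols=2, rows=2)
```

Balancing the number of cameras per cell, rather than the area per cell, keeps the training load of each region roughly comparable when the capture density is uneven.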

Apart from the works mentioned above, other methods focus on special input data, including images without camera parameters [118, 119, 120, 121], blurry inputs [122, 123, 124, 125], unconstrained images [126, 127], mirror-like inputs [128, 129], CT scans [130, 131], panoramic images [132], and satellite images [133].

3 Gaussian Splatting for 3D Editing

3DGS allows for efficient training and high-quality real-time rendering using rasterization-based point-based rendering techniques. Editing in 3DGS has been investigated in a number of fields. We have summarized the editing on 3DGS into three categories: geometry editing, appearance editing, and physical simulation.

3.1 Geometry Editing

On the geometry side, GaussianEditor [134] controls the 3DGS using text prompts and semantic information from its proposed Gaussian semantic tracing, which enables 3D inpainting, object removal, and object composition. Gaussian Grouping [135] simultaneously reconstructs and segments open-world 3D objects under the supervision of 2D mask predictions from SAM and 3D spatial consistency constraints, which further enables diverse editing applications including 3D object removal, inpainting, and composition with high-quality visual effects and time efficiency. Furthermore, Point'n Move [136] combines interactive scene object manipulation with exposed region inpainting. Thanks to the explicit representation of 3DGS, a dual-stage self-prompting mask propagation process is proposed to transfer the given 2D prompt points to 3D mask segmentation, resulting in a user-friendly editing experience with high-quality effects. Feng et al. [137] propose a new Gaussian splitting algorithm to avoid inhomogeneous 3D Gaussian reconstruction and make the boundaries of 3D scenes sharper after the removal operation. Although the above methods realize editing on 3DGS, they are still limited to simple editing operations (removal, rotation, and translation) on 3D objects. SuGaR [22] extracts explicit meshes from the 3DGS representation by regularizing Gaussians over surfaces. Further, it relies on manual adjustment of Gaussian parameters based on deformed meshes to realize the desired geometry editing but struggles with large-scale deformation. SC-GS [28] learns a set of sparse control points for 3D scene dynamics but faces challenges with intense movements and detailed surface deformation. GaMeS [30] introduces a new GS-based model that combines a conventional mesh with vanilla GS. It takes the explicit mesh as input and parameterizes Gaussian components using the mesh vertices, so Gaussians can be modified in real time by altering mesh components during inference.
However, it cannot handle significant deformations or changes, especially deformations on large faces, since it cannot change the mesh topology during training. Although the above methods can perform some simple rigid transformations and non-rigid deformations, they still face challenges in editing effectiveness and large-scale deformation. As shown in Fig. 7, Gao et al. [31] also adapt mesh-based deformation to 3DGS by harnessing the priors of the explicit representation (surface properties such as the normals of the mesh, and the gradients generated by explicit deformation methods) and learning face splitting to optimize the parameters and number of Gaussians, which provides adequate topological information to 3DGS and improves the quality of both the reconstruction and the geometry editing results. GaussianFrosting [138] shares a similar idea with Gao et al. [31] by constructing a base mesh but further develops a frosting layer that allows Gaussians to move within a small range near the mesh surface.

Figure 7:Pipeline of Gao et al. [31]. It allows large-scale geometry editing by binding 3D Gaussians onto the mesh.

3.2 Appearance Editing

On the appearance side, GaussianEditor [139] proposes to first modify 2D images according to language input with a diffusion model [140], restricted to the masked region generated by a recent 2D segmentation model [110], and then update the attributes of Gaussians, similar to the previous NeRF editing work Instruct-NeRF2NeRF [141]. Another independent research work also named GaussianEditor [134] operates similarly but further introduces Hierarchical Gaussian Splatting (HGS) to allow 3D editing like object inpainting. GSEdit [142] takes a textured mesh or pre-trained 3DGS as input and utilizes Instruct-Pix2Pix [143] and the SDS loss to update the input mesh or 3DGS. To alleviate the inconsistency issue, GaussCtrl [144] introduces the depth map as the conditional input of ControlNet [99] to encourage geometry consistency. Wang et al. [145] also aim to solve this inconsistency issue by introducing multi-view cross-attention maps. Texture-GS [146] proposes to disentangle the geometry and appearance of 3DGS and learns a UV mapping network for points near the underlying surface, thus enabling manipulations including texture painting and texture swapping. 3DGM [147] also represents a 3D scene with a proxy mesh with fixed UV mapping, where Gaussians are stored on the texture map. This disentangled representation also allows animation and texture editing. Apart from local texture editing, there are works [148, 149, 150] focusing on stylizing 3DGS with a reference style image.

To allow more tractable control over texture and lighting, researchers have started to disentangle the two to enable independent editing. As shown in Fig. 8, GS-IR [151] and RelightableGaussian [23] model texture and lighting separately. Additional material parameters are defined on each Gaussian to represent texture, and lighting is approximated by a learnable environment map. GIR [152] and GaussianShader [24] share the same disentanglement paradigm by binding material parameters onto 3D Gaussians, but to deal with more challenging reflective scenes, they add normal orientation constraints to Gaussians similar to Ref-NeRF [153]. After texture and lighting are disentangled, these methods can modify one without influencing the other.

Figure 8:By decomposing material and lighting, GS-IR [151] enables appearance editing including relighting and material manipulation.

3.3 Physical Simulation

For physically-based 3DGS editing, as shown in Fig. 9, PhysGaussian [21] employs discrete particle clouds from 3DGS for physically-based dynamics and photo-realistic rendering through continuum deformation [154] of Gaussian kernels. Gaussian Splashing [155] combines 3DGS and position-based dynamics (PBD) [156] to manage rendering, view synthesis, and solid/fluid dynamics cohesively. Similar to GaussianShader [24], a normal is attached to each Gaussian kernel to align its orientation with the surface normal and improve PBD simulation, which also allows physically-based rendering to enhance dynamic surface reflections on fluids. VR-GS [29] is a physical dynamics-aware interactive Gaussian Splatting system for VR, tackling the difficulty of editing high-fidelity virtual content in real time. VR-GS utilizes 3DGS to close the quality gap between generated and manually crafted 3D content, and its physically-based dynamics enhance immersion and offer precise interaction and manipulation controllability. Spring-Gaus [157] applies the spring-mass model to the modeling of dynamic 3DGS and learns physical properties like mass and velocity from input video, which are editable for real-world simulation. Feature Splatting [158] further incorporates semantic priors from pre-trained networks and makes object-level simulation possible.
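To make the spring-mass idea concrete, the sketch below advances a chain of point masses connected by Hookean springs with one semi-implicit Euler step. It is a generic textbook integrator written in one dimension for brevity. The function name and all parameter values are assumptions, and in Spring-Gaus [157] the physical properties are learned from video rather than fixed by hand as they are here.

```python
def spring_mass_step(positions, velocities, springs, mass, stiffness, dt):
    """Advance a 1D chain of point masses by one semi-implicit Euler step.

    springs: list of (i, j, rest_length) tuples, with particle j assumed to
             lie at a larger coordinate than particle i.
    """
    forces = [0.0] * len(positions)
    for i, j, rest in springs:
        stretch = (positions[j] - positions[i]) - rest
        f = stiffness * stretch      # Hooke's law
        forces[i] += f               # a stretched spring pulls i toward j
        forces[j] -= f               # and pulls j back toward i
    # Update velocities first, then positions with the new velocities.
    new_v = [v + dt * f / mass for v, f in zip(velocities, forces)]
    new_x = [x + dt * v for x, v in zip(positions, new_v)]
    return new_x, new_v

# Hypothetical setup: two particles joined by a spring stretched by 0.5.
x, v = spring_mass_step([0.0, 1.5], [0.0, 0.0], [(0, 1, 1.0)],
                        mass=1.0, stiffness=2.0, dt=0.1)
```

Because every parameter of the update (mass, stiffness, rest length) enters through differentiable arithmetic, such a step can in principle be placed inside an optimization loop that fits the parameters to observed motion.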

Figure 9: Pipeline of PhysGaussian [21]. Treating 3D Gaussians as a continuum, PhysGaussian [21] produces realistic physical simulation results.

4 Applications of Gaussian Splatting

4.1 Segmentation and Understanding

Open-world 3D scene understanding is an essential challenge for robotics, autonomous driving, and VR/AR environments. With the remarkable progress in 2D scene understanding brought by SAM [110] and its variants, existing methods have tried to integrate semantic features, such as CLIP [159]/DINO [160] into NeRF, to deal with 3D segmentation, understanding, and editing.

NeRF-based methods are computationally intensive because of their implicit and continuous representation. Recent methods try to integrate 2D scene understanding methods with 3D Gaussians to produce a real-time and easy-to-edit 3D scene representation. Most methods utilize pre-trained 2D segmentation methods like SAM [110] to produce semantic masks of the input multi-view images [136, 135, 161, 162, 163, 164, 165, 166], or extract dense per-pixel language features such as CLIP [159]/DINO [160] features [167, 168, 169].

LEGaussians [167] adds an uncertainty value attribute and semantic feature vector attribute for each Gaussian. It then renders a semantic map with uncertainties from a given viewpoint, to compare with the quantized CLIP and DINO dense features of the ground truth image. To achieve the 2D mask consistency across views, Gaussian Grouping [135] employs DEVA to propagate and associate masks from different viewpoints. It adds Identity Encoding attributes to 3D Gaussians and renders the identity feature map to compare with the extracted 2D masks.
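Rendering a semantic or identity feature map follows the same front-to-back alpha compositing used for color, with a feature vector in place of RGB. The sketch below composites the feature vectors of the Gaussians that cover a single pixel. The function name and the inputs are illustrative assumptions, and depth sorting and the 2D footprint evaluation that produces the per-pixel opacities are taken as given.

```python
def composite_features(features, alphas):
    """Front-to-back alpha compositing of per-Gaussian feature vectors.

    features: feature vectors of the Gaussians covering one pixel,
              sorted from nearest to farthest.
    alphas:   their opacities at this pixel, each in [0, 1].
    """
    dim = len(features[0])
    pixel = [0.0] * dim
    transmittance = 1.0  # fraction of light not yet absorbed
    for feat, alpha in zip(features, alphas):
        weight = alpha * transmittance
        for d in range(dim):
            pixel[d] += weight * feat[d]
        transmittance *= 1.0 - alpha
    return pixel

# Hypothetical pixel covered by two Gaussians with one-hot identity features.
pixel_feature = composite_features([[1.0, 0.0], [0.0, 1.0]], [0.5, 0.5])
```

The rendered per-pixel vector can then be compared with a 2D mask or a 2D feature map, so the supervision reaches the per-Gaussian attributes through the compositing weights.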

Figure 10:Geometry reconstruction results by SuGaR [22].

4.2 Geometry Reconstruction and SLAM

Geometry reconstruction and SLAM are important subtasks in 3D reconstruction.

Geometry reconstruction

In the context of NeRF, a series of works [170, 171, 172, 173] have successfully reconstructed high-quality geometry from multi-view images. However, due to the discrete nature of 3DGS, only a few works have stepped into this field. SuGaR [22] is the pioneering work that builds 3D surfaces from multi-view images with the 3DGS representation. It introduces a simple but effective self-regularization loss that constrains the distance between the camera and the closest Gaussian to be as close as possible to the corresponding pixel's depth value in the rendered depth map, which encourages the alignment between 3DGS and the true 3D surface. Another work, NeuSG [174], incorporates the previous NeRF-based surface reconstruction method NeuS [170] into the 3DGS representation to transfer the surface property to 3DGS. More specifically, it encourages the Gaussians' signed distance values to be zero and the normal directions given by 3DGS and by NeuS to be as consistent as possible. 3DGSR [175] and GSDF [176] also encourage consistency between the SDF and 3DGS to enhance the geometry reconstruction quality. DN-Splatter [177] utilizes depth and normal priors captured from common devices or predicted by general-purpose networks to enhance the reconstruction quality of 3DGS. Wolf et al. [178] first train a 3DGS to render stereo-calibrated novel views and apply stereo depth estimation to the rendered views. The estimated dense depth maps are fused via a Truncated Signed Distance Function (TSDF) to form a triangular mesh. 2D-GS [179] replaces 3D Gaussians with 2D Gaussians for more accurate ray-splat intersection and employs a low-pass filter to avoid degenerate line projections.
Though attempts have been made in the field of geometry reconstruction, due to the discrete nature of 3DGS, current methods achieve comparable or worse results compared to implicit representation-based methods with continuous field assumption where the surface can be easily determined.

SLAM

There are also 3DGS methods that target simultaneously localizing the cameras and reconstructing the 3D scene. GS-SLAM [180] proposes an adaptive 3D Gaussian expanding strategy that adds new 3D Gaussians during training and deletes unreliable ones based on captured depths and rendered opacity values. To avoid duplicate densification, SplaTAM [181] uses view-independent colors for Gaussians and creates a densification mask to determine whether a pixel in a new frame needs densification by considering the current Gaussians and the captured depth of the new frame. To stabilize localization and mapping, GaussianSplattingSLAM [182] and Gaussian-SLAM [183] add an extra regularization loss on the scales of Gaussians to encourage isotropic Gaussians. For easier initialization, LIV-GaussMap [184] initializes Gaussians with a LiDAR point cloud and builds an optimizable size-adaptive voxel grid for the global map. SGS-SLAM [185], NEDS-SLAM [186], and SemGauss-SLAM [187] further consider the Gaussians' semantic information in the simultaneous localization and mapping process by distilling 2D semantic information, which can be obtained using 2D segmentation methods or provided by the dataset. Deng et al. [188] avoid redundant Gaussian splitting based on a sliding window mask and use vector quantization to further encourage a compact 3DGS. CG-SLAM [189] introduces an uncertainty map into the training process based on the rendered depth and greatly improves the reconstruction quality. Based on the map reconstructed by SLAM-based methods, robotics tasks like relocalization [190], navigation [191, 192, 193], 6D pose estimation [194], multi-sensor calibration [195, 196], and manipulation [197, 198] can be performed efficiently. We report quantitative results by different SLAM methods on the reconstruction task in Table 4.
The explicit geometry representation provided by 3DGS enables flexible reprojection to alleviate the misalignment between different viewpoints, thus leading to better reconstruction compared to NeRF-based methods. The real-time rendering of 3DGS also makes neural SLAM methods more applicable, as training in previous NeRF-based methods requires more hardware and time.
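A scale regularizer that encourages isotropic Gaussians can be written as a penalty on how far each axis scale deviates from the mean scale of the same Gaussian. The sketch below uses an L1 deviation averaged over Gaussians. The function name and this exact form are assumptions for illustration, and the losses in the cited SLAM systems may be defined differently.

```python
def isotropic_scale_loss(scales):
    """Mean L1 deviation of each Gaussian's axis scales from their mean.

    scales: list of per-Gaussian scale triples (sx, sy, sz).
    The loss is zero when every Gaussian is a sphere.
    """
    total = 0.0
    for s in scales:
        mean = sum(s) / len(s)
        total += sum(abs(v - mean) for v in s)
    return total / len(scales)

# Hypothetical scales: a sphere incurs no penalty, a needle a large one.
loss_round = isotropic_scale_loss([[1.0, 1.0, 1.0]])
loss_needle = isotropic_scale_loss([[3.0, 0.0, 0.0]])
```

Penalizing elongated Gaussians in this way discourages needle-like primitives that fit the current view but look wrong from unobserved directions, which matters when each region is seen from only a few frames.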

Table 4: Quantitative comparison of novel view synthesis results by different SLAM methods on the Replica [199] dataset using PSNR, SSIM and LPIPS metrics.

| Methods | PSNR ↑ | SSIM ↑ | LPIPS ↓ |
| --- | --- | --- | --- |
| NICE-SLAM [200] | 24.42 | 0.81 | 0.23 |
| Vox-Fusion [201] | 24.41 | 0.80 | 0.24 |
| Co-SLAM [202] | 30.24 | 0.94 | 0.25 |
| GS-SLAM [180] | 31.56 | 0.97 | 0.094 |
| SplaTAM [181] | 34.11 | 0.97 | 0.10 |
| GaussianSplattingSLAM [182] | 37.50 | 0.96 | 0.07 |
| Gaussian-SLAM [183] | 38.90 | 0.99 | 0.07 |
| SGS-SLAM [185] | 34.15 | 0.97 | 0.096 |
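For readers unfamiliar with the first metric in Table 4, PSNR is a logarithmic function of the mean squared error between a rendered image and its reference. The sketch below computes it for flattened images with pixel values in [0, 1]. The function name and the value range are assumptions, and evaluation protocols such as resolution and frame selection differ between papers.

```python
import math

def psnr(rendered, reference, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two flattened images."""
    mse = sum((a - b) ** 2 for a, b in zip(rendered, reference)) / len(rendered)
    if mse == 0.0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)

# Hypothetical images that differ by 0.1 at every pixel.
value = psnr([0.5, 0.5, 0.5, 0.5], [0.6, 0.6, 0.6, 0.6])
```

Since the scale is logarithmic, a uniform error of 0.1 on a unit range gives about 20 dB, and each further 10 dB corresponds to a tenfold reduction in mean squared error.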

4.3 Digital Human

Learning virtual humans with implicit representation has been explored in various ways, especially for the NeRF and SDF representations, which exhibit high-quality results from multi-view images but suffer from heavy computational costs. Thanks to the high efficiency of 3DGS, research works have flourished and pushed 3DGS into digital human creation.

Human body

In full-body modeling, works aim to reconstruct dynamic humans from multi-view videos. D3GA [203] first creates animatable human avatars using drivable 3D Gaussians and tetrahedral cages, which achieves promising geometry and appearance modeling. To capture more dynamic details, SplatArmor [204] leverages two different MLPs to predict large motions built upon SMPL and the canonical space, and models pose-dependent effects with the proposed SE(3) fields, enabling more detailed results. HuGS [205] creates a coarse-to-fine deformation module using linear blend skinning and local learning-based refinement for constructing and animating virtual human avatars based on 3DGS. It achieves state-of-the-art human neural rendering performance at 20 FPS. Similarly, HUGS [206] utilizes the tri-plane representation [207] to factorize the canonical space and can reconstruct the person and scene from a monocular video (50 to 100 frames) within 30 minutes. Since 3DGS learns a huge number of Gaussian ellipsoids, HiFi4G [208] combines 3DGS with the non-rigid tracking offered by its dual-graph mechanism for high-fidelity rendering, which successfully preserves spatial-temporal consistency in a more compact manner. To achieve higher rendering speeds at high resolution on consumer-level devices, GPS-Gaussian [25] introduces Gaussian parameter maps on the sparse source views to regress the Gaussian parameters jointly with a depth estimation module, without any fine-tuning or optimization. Other than that, GART [209] extends from humans to more articulated models (e.g., animals) based on the 3DGS representation.
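Linear blend skinning, which several of the avatar methods above build on, moves a point by a weighted sum of per-bone rigid transforms. The sketch below applies it to a single 3D point such as a Gaussian center. The function name and the two-bone example are assumptions for illustration, and the update of a Gaussian's rotation and covariance under the blended transform is left out.

```python
def blend_skinning(point, weights, rotations, translations):
    """Linear blend skinning of one 3D point (e.g. a Gaussian center).

    Bone k contributes weights[k] * (rotations[k] @ point + translations[k]);
    the skinning weights are assumed to sum to 1.
    """
    out = [0.0, 0.0, 0.0]
    for w, rot, trans in zip(weights, rotations, translations):
        for r in range(3):
            moved = sum(rot[r][c] * point[c] for c in range(3)) + trans[r]
            out[r] += w * moved
    return out

# Hypothetical bones: one stays fixed, one turns 90 degrees about z and lifts.
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
quarter_turn_z = [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
posed = blend_skinning([1.0, 0.0, 0.0], [0.5, 0.5],
                       [identity, quarter_turn_z],
                       [[0.0, 0.0, 0.0], [0.0, 0.0, 2.0]])
```

Blending transforms linearly is cheap but only approximates articulated motion, which is one reason the methods above add learned refinements on top of the skinned result.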

To make full use of the information from multi-view images, Animatable Gaussians [210] incorporates 3DGS and 2D CNNs for more accurate human appearances and realistic garment dynamics using a template-guided parameterization and pose projection mechanism. Gaussian Shell Maps [211] (GSMs) combines CNN-based generators with 3DGS to recreate virtual humans with sophisticated details such as clothing and accessories. ASH [212] projects the 3D Gaussian learning into a 2D texture space using mesh UV parameterization to capture the appearance, enabling real-time and high-quality animated humans. Furthermore, to reconstruct rich details on humans such as clothing, 3DGS-Avatar [213] introduces a shallow MLP instead of SH to model the color of 3D Gaussians and regularizes deformation with geometry priors, providing photorealistic rendering with pose-dependent cloth deformation and generalizing effectively to novel poses.

Figure 11:The results of GPS-Gaussian [25], MonoGaussianAvatar [214], and MANUS [215]. They explore the 3DGS-based approaches on the whole body, head, and hand modeling, respectively.

For dynamic digital human modeling based on monocular video, GaussianBody [216] further leverages physically-based priors to regularize the Gaussians in the canonical space and avoid artifacts in dynamic cloth. GauHuman [217] re-designs the prune/split/clone operations of the original 3DGS to achieve efficient optimization and incorporates pose refinement and weight field modules for learning fine details. It achieves minute-level training and real-time rendering (166 FPS). GaussianAvatar [218] incorporates an optimizable tensor with a dynamic appearance network to capture dynamics better, allowing dynamic avatar reconstruction and realistic novel animation in real time. Human101 [219] further pushes the speed of high-fidelity dynamic human creation to 100 seconds using a fixed-perspective camera. Similar to [31], SplattingAvatar [220] and GoMAvatar [221] embed Gaussians onto a canonical human body mesh. The position of a Gaussian is determined by a barycentric point and a displacement along the normal direction. To resolve the unbalanced aggregation of Gaussians caused by densification and splitting operations, GVA [222] proposes a surface-guided Gaussian re-initialization strategy to make the trained Gaussians better fit the input monocular video. HAHA [223] also attaches Gaussians onto the surface of a mesh but combines the rendered results of a textured human body mesh and of Gaussians to reduce the number of Gaussians.
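The mesh embedding described above, a barycentric point plus a displacement along the normal, can be sketched as follows. The flat face normal used here is a simplifying assumption (an interpolated vertex normal is another common choice), and the function name and inputs are hypothetical rather than taken from the cited implementations.

```python
import math

def gaussian_position(v0, v1, v2, bary, offset):
    """Place a Gaussian center on a triangle plus a normal displacement.

    bary:   barycentric coordinates (b0, b1, b2), assumed to sum to 1.
    offset: signed distance along the unit face normal.
    """
    e1 = [v1[i] - v0[i] for i in range(3)]
    e2 = [v2[i] - v0[i] for i in range(3)]
    # Face normal from the cross product of two edges, then normalized.
    n = [e1[1] * e2[2] - e1[2] * e2[1],
         e1[2] * e2[0] - e1[0] * e2[2],
         e1[0] * e2[1] - e1[1] * e2[0]]
    length = math.sqrt(sum(c * c for c in n))
    n = [c / length for c in n]
    return [bary[0] * v0[i] + bary[1] * v1[i] + bary[2] * v2[i] + offset * n[i]
            for i in range(3)]

# Hypothetical triangle in the z = 0 plane.
center = gaussian_position([0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0],
                           bary=(0.25, 0.25, 0.5), offset=0.1)
```

Because the position is expressed relative to the triangle, deforming the mesh vertices moves the Gaussian with the surface without re-optimizing its barycentric coordinates or offset.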

Head

For human head modeling with 3DGS, MonoGaussianAvatar [214] first applies 3DGS to dynamic head reconstruction using canonical space modeling and deformation prediction. Further, PSAvatar [224] introduces the explicit FLAME face model [225] to initialize Gaussians, which can capture high-fidelity facial geometry and even complicated volumetric objects (e.g., glasses). The tri-plane representation and motion fields are used in GaussianHead [226] to simulate geometrically changing heads in continuous movements and render rich textures, including skin and hair. For easier control of head expressions, GaussianAvatars [227] introduces geometric priors (the FLAME parametric face model [225]) into 3DGS, binding the Gaussians onto the explicit mesh and optimizing the parameters of the Gaussian ellipsoids. Rig3DGS [228] employs a learnable deformation to provide stability and generalization to novel expressions, head poses, and viewing directions to achieve controllable portraits on portable devices. In another way, HeadGaS [229] extends 3DGS with a base of latent features that are weighted by the expression vector from 3DMMs [230], which achieves real-time animatable head reconstruction. FlashAvatar [231] further embeds a uniform 3D Gaussian field in a parametric face model and learns additional spatial offsets to capture facial details, successfully pushing the rendering speed to 300 FPS. To synthesize high-resolution results, Gaussian Head Avatar [232] adopts a super-resolution network to achieve high-fidelity head avatar learning. To synthesize a high-quality avatar from few-view input, SplatFace [233] proposes to first initialize Gaussians on a template mesh and then jointly optimize the Gaussians and the mesh with a splat-to-mesh distance loss. GauMesh [234] proposes a hybrid representation containing both tracked textured meshes and canonical 3D Gaussians, together with a learnable deformation field, to represent a dynamic human head.
Apart from these, some works extend the 3DGS into text-based head generation [235], DeepFake [236], and relighting [237].

Hair and hands

Other parts of the human body, such as hair and hands, have also been explored. 3D-PSHR [238] combines hand geometry priors (MANO) with 3DGS and is the first to realize real-time hand reconstruction. MANUS [215] further explores the interaction between hands and objects using 3DGS. In addition, GaussianHair [239] first combines the Marschner Hair Model [240] with UE4’s real-time hair rendering to create the Gaussian Hair Scattering Model. It captures complex hair geometry and appearance for fast rasterization and volumetric rendering, enabling applications including editing and relighting.

4.4 3D/4D Generation

Cross-modal image generation has achieved stunning results with diffusion models [140]. However, due to the lack of 3D data, it is difficult to directly train a large-scale 3D generation model. The pioneering work DreamFusion [97] exploits a pre-trained 2D diffusion model and proposes the score distillation sampling (SDS) loss, which distills 2D generative priors into 3D without requiring 3D data for training, achieving text-to-3D generation. However, the NeRF representation brings heavy rendering overhead: optimization takes several hours per case and the rendering resolution is low, which leads to poor-quality results. Although some improved methods extract a mesh representation from the trained NeRF and fine-tune it to improve quality [241], this further increases the optimization time. The 3DGS representation can render high-resolution images at high frame rates with a small memory footprint, so it replaces NeRF as the 3D representation in some recent 3D/4D generation methods.
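To make the SDS idea concrete, the following is a minimal sketch of a one-sample SDS gradient with respect to a rendered image, following the commonly stated form w(t)(ε̂ − ε), which is then propagated to the 3D parameters through the differentiable renderer. The names `sds_gradient`, `predict_noise`, `alpha_bar_t`, and `weight` are hypothetical; `predict_noise` stands in for a frozen, text-conditioned diffusion model and is an assumption of this sketch, not an API of any particular library.

```python
import numpy as np

def sds_gradient(x, predict_noise, t, alpha_bar_t, weight, rng):
    """One-sample SDS gradient estimate with respect to the rendered image x.

    predict_noise(x_t, t): hypothetical frozen noise predictor (the text
    condition is assumed to be folded into this callable).
    alpha_bar_t: cumulative noise-schedule coefficient at timestep t.
    weight: timestep weighting w(t).
    """
    # Sample Gaussian noise and diffuse the rendering to timestep t.
    eps = rng.standard_normal(x.shape)
    x_t = np.sqrt(alpha_bar_t) * x + np.sqrt(1.0 - alpha_bar_t) * eps
    # The residual between predicted and injected noise is used directly
    # as the gradient on x; the denoiser's own Jacobian is skipped.
    eps_hat = predict_noise(x_t, t)
    return weight * (eps_hat - eps)
```

In a full pipeline this gradient would be backpropagated through the renderer to the NeRF or 3DGS parameters; when the predicted noise matches the injected noise exactly, the gradient vanishes and the rendering is left unchanged.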

3D generation

DreamGaussian [20] replaces the MipNeRF [52] representation in the DreamFusion [97] framework with 3DGS and optimizes the 3D Gaussians with the SDS loss. The splitting process of 3DGS is well suited to the optimization progress under generative settings, so the efficiency advantages of 3DGS carry over to SDS-based text-to-3D generation. To improve the final quality, this work follows the idea of Magic3D [241]: it extracts a mesh from the generated 3DGS and refines the texture details by optimizing UV textures with a pixel-wise Mean Squared Error (MSE) loss. In addition to 2D SDS, GSGEN [242] introduces a 3D SDS loss based on Point-E [243], a text-to-point-cloud diffusion model, to mitigate the multi-face (Janus) problem. It adopts Point-E to initialize the point cloud as the initial geometry for optimization and also refines the appearance with only the 2D image prior. GaussianDreamer [244] also combines the priors of 2D and 3D diffusion models. It utilizes Shap-E [245] to generate the initial point cloud and optimizes 3DGS using 2D SDS. Because the generated initial point cloud is relatively sparse, noisy point growth and color perturbation are further proposed to densify it. However, even if the 3D SDS loss is introduced, the Janus problem may persist during optimization because views are sampled one at a time. Some methods [246, 247] fine-tune the 2D diffusion model [140] to generate multi-view images at once, thereby achieving multi-view supervision during SDS optimization. Alternatively, the multi-view SDS proposed by BoostDream [248] directly creates a large 2×2 image by stitching the rendered images from 4 sampled views and calculates the gradients under the condition of the multi-view normal map. This is a plug-and-play method that first converts a 3D asset into a differentiable representation (NeRF, 3DGS, or DMTet [249]) through rendering supervision, and then optimizes it to improve the quality of the 3D asset.
Some methods improve the SDS loss itself. LucidDreamer [250] proposes Interval Score Matching (ISM), which replaces DDPM in SDS with DDIM inversion and introduces supervision from interval steps of the diffusion process to avoid the high error of one-step reconstruction. Some generation results are shown in Fig. 12. GaussianDiffusion [251] proposes to incorporate structured noise from multiple viewpoints to alleviate the Janus problem, and variational 3DGS to mitigate floaters for better generation results. Yang et al. [252] point out that the differences between the diffusion prior and the training process of the diffusion model impair the quality of 3D generation, so they propose iterative optimization of the 3D model and the diffusion prior. Specifically, two additional sets of learnable parameters are introduced in the classifier-free guidance formula: a learnable unconditional embedding, and additional parameters added to the network, such as LoRA [253] parameters. These methods are not limited to 3DGS, and other originally NeRF-based methods that aim to improve the SDS loss, including VSD [254] and CSD [255], can also be used for 3DGS generation. GaussianCube [256] instead trains a 3D diffusion model based on a GaussianCube representation that is converted from a constant number of Gaussians with voxelization via optimal transport. GVGEN [257] also works in 3D space but is based on a 3D Gaussian volume representation.
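The 2×2 stitching step used by the multi-view SDS described above amounts to tiling four renderings into a single image before it is passed to the diffusion model. A minimal sketch follows; the function name `stitch_2x2` and the row-major placement of the views are assumptions for illustration, not details confirmed by the cited work.

```python
import numpy as np

def stitch_2x2(views):
    """Tile four H x W x C renderings into one 2H x 2W x C image.

    Assumed layout (row-major): views[0] top-left, views[1] top-right,
    views[2] bottom-left, views[3] bottom-right.
    """
    if len(views) != 4:
        raise ValueError("expected exactly four views")
    top = np.concatenate([views[0], views[1]], axis=1)
    bottom = np.concatenate([views[2], views[3]], axis=1)
    return np.concatenate([top, bottom], axis=0)
```

Supervising the stitched image in one pass exposes the diffusion prior to all four viewpoints jointly, which is the intuition behind using it against view-inconsistent (Janus) artifacts.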

Figure 12: The text-to-3D generation results of LucidDreamer [250]. It distills generative priors from pre-trained 2D diffusion models with the proposed Interval Score Matching (ISM) objective to achieve 3D generation from a text prompt.

As a special category, human body generation can introduce a model prior, such as SMPL [258], to assist generation. GSMs [211] builds multi-layer shells from the SMPL template and binds 3D Gaussians to the shells. By utilizing the differentiable rendering of 3DGS and the generative adversarial network of StyleGAN2 [259], animatable 3D humans are efficiently generated. GAvatar [260] adopts a primitive-based representation [261] attached to SMPL-X [262] and attaches 3D Gaussians to the local coordinate system of each primitive. The attribute values of the 3D Gaussians are predicted by an implicit network, and the opacity is converted to a signed distance field through a NeuS [170]-like method, which provides geometry constraints and allows detailed textured meshes to be extracted. The generation is text-based and mainly guided by the SDS loss. HumanGaussian [263] initializes 3D Gaussians by randomly sampling points on the surface of the SMPL-X [262] template. It extends Stable Diffusion [140] to generate RGB and depth simultaneously and constructs a dual-branch SDS as the optimization guidance. It also combines the classifier score provided by the null text prompt and the negative score provided by the negative prompt to construct negative prompt guidance, which addresses the over-saturation issue.
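Initializing Gaussians by randomly sampling points on a template surface, as described above, can be sketched with standard area-weighted triangle sampling. The function name `sample_points_on_mesh` is hypothetical, and whether the cited method samples uniformly by area is an assumption; the sketch only shows one common way to draw surface points from a triangle mesh such as a body template.

```python
import numpy as np

def sample_points_on_mesh(vertices, faces, n_points, rng):
    """Draw points uniformly (by area) on the surface of a triangle mesh."""
    vertices = np.asarray(vertices, dtype=float)
    faces = np.asarray(faces, dtype=int)
    tri = vertices[faces]  # shape (F, 3, 3): three corners per face
    # Triangle areas, used as sampling probabilities for the faces.
    areas = 0.5 * np.linalg.norm(
        np.cross(tri[:, 1] - tri[:, 0], tri[:, 2] - tri[:, 0]), axis=1
    )
    face_idx = rng.choice(len(faces), size=n_points, p=areas / areas.sum())
    # Square-root trick for uniform barycentric coordinates in a triangle.
    su = np.sqrt(rng.random(n_points))
    v = rng.random(n_points)
    w0, w1, w2 = 1.0 - su, su * (1.0 - v), su * v
    t = tri[face_idx]
    return w0[:, None] * t[:, 0] + w1[:, None] * t[:, 1] + w2[:, None] * t[:, 2]
```

The sampled points would serve as initial Gaussian centers; the remaining attributes (scale, rotation, opacity, color) still need their own initialization.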

Figure 13: The text-to-4D generation results of AYG [264]. Different dynamic 4D sequences are shown, with dotted lines representing the dynamics of the deformation field.

The above methods focus on the generation of an individual object, while scene generation requires consideration of the interactions and relationships between different objects. CG3D [265] takes as input a text prompt that the user has manually decomposed into a scene graph; the textual scene graph is interpreted as a probabilistic graphical model in which each directed edge has an object node as its tail and an interaction node as its head. Scene generation then becomes ancestral sampling: the objects are generated first, followed by their interactions. The optimization is divided into two stages, with gravity and normal contact forces introduced in the second stage. LucidDreamer [266] and Text2Immersion [267] both start from a reference image (user-specified or text-generated) and extend outward to achieve 3D scene generation. The former utilizes Stable Diffusion (SD) [140] for image inpainting to generate unseen regions on the sampled views and incorporates monocular depth estimation and alignment to establish a 3D point cloud from these views. Finally, the point cloud is used as the initial value, and a 3DGS is trained using the projected images as the ground truth to achieve 3D scene generation. The latter follows a similar idea, but adds a step that removes outliers from the point cloud and splits the 3DGS optimization into two stages: coarse 3DGS training and refinement. To explore 3D scene generation, GALA3D [268] leverages both the object-level text-to-3D model MVDream [246] to generate realistic objects and a scene-level diffusion model to compose them. DreamScene [269] proposes multi-timestep sampling for multi-stage scene-level generation, synthesizing the surrounding environment, ground, and objects to avoid complicated object composition. Instead of modeling each object separately, RealmDreamer [270] utilizes the diffusion priors from inpainting and depth estimation models to generate different viewpoints of a scene iteratively. DreamScene360 [271] instead generates a 360-degree panoramic image and converts it into a 3D scene with depth estimation.
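Several of the scene-generation pipelines above lift images to a 3D point cloud using estimated depth before training 3DGS. A minimal sketch of that lifting step for a pinhole camera is given below. The function name `unproject_depth` and the intrinsics `fx`, `fy`, `cx`, `cy` are assumptions for illustration; the cited methods additionally align depth across views, and a panoramic input would need an equirectangular rather than a pinhole model.

```python
import numpy as np

def unproject_depth(depth, fx, fy, cx, cy):
    """Lift an H x W depth map to an (H*W) x 3 point cloud in camera space.

    Assumes a pinhole camera with focal lengths (fx, fy) and principal
    point (cx, cy), and depth measured along the camera z-axis.
    """
    h, w = depth.shape
    # u indexes columns (x direction), v indexes rows (y direction).
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return np.stack([x, y, depth], axis=-1).reshape(-1, 3)
```

The resulting points, transformed to world coordinates with the camera pose, can act as the initial Gaussian centers, with pixel colors as initial appearance.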
