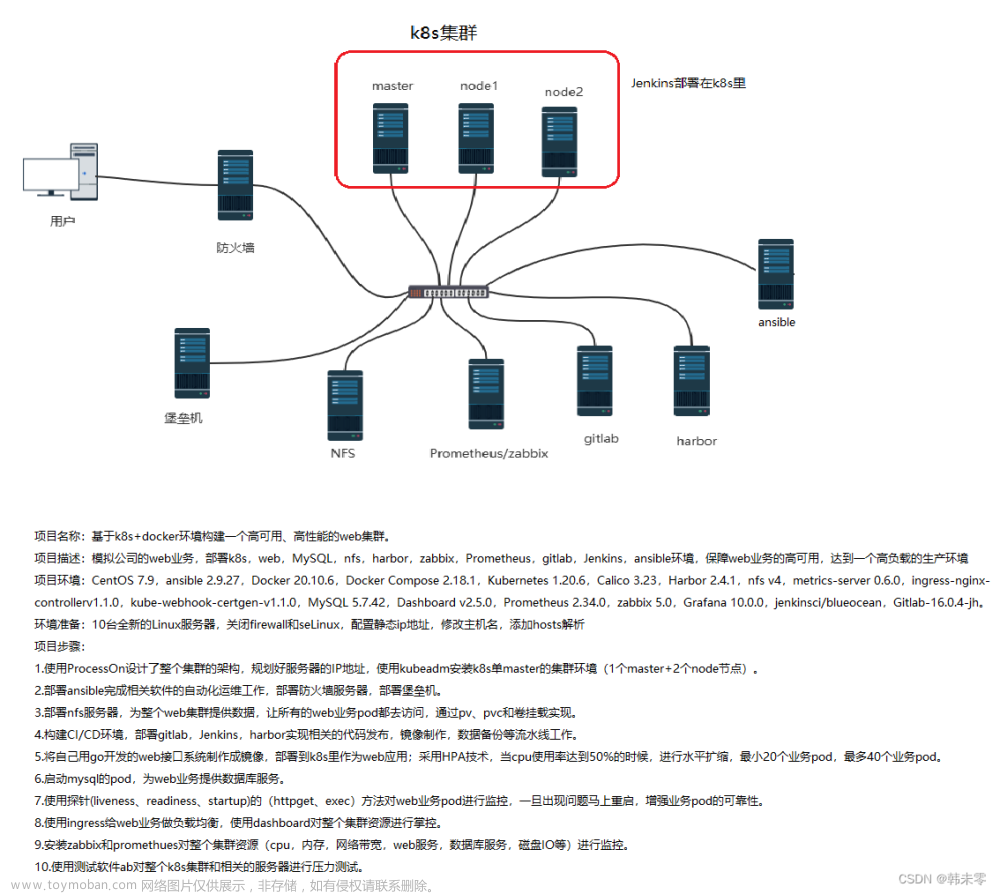

Host planning

- Master nodes need at least 2 CPU cores; otherwise cluster initialization fails.

| Hostname | IP address | Role | Operating system | Hardware |
|---|---|---|---|---|
| ansible | 10.62.158.200 | Ansible (sync tool) node | CentOS 7 | 2 cores / 4 GB RAM |
| master01 | 10.62.158.201 | Master node 01 | CentOS 7 | 2 cores / 4 GB RAM |
| master02 | 10.62.158.202 | Master node 02 | CentOS 7 | 2 cores / 4 GB RAM |
| master03 | 10.62.158.203 | Master node 03 | CentOS 7 | 2 cores / 4 GB RAM |
| node01 | 10.62.158.204 | Worker node 01 | CentOS 7 | 1 core / 2 GB RAM |
| node02 | 10.62.158.205 | Worker node 02 | CentOS 7 | 1 core / 2 GB RAM |
| k8s-ha01 | 10.62.158.206 | Primary proxy node | CentOS 7 | 1 core / 2 GB RAM |
| k8s-ha02 | 10.62.158.207 | Backup proxy node | CentOS 7 | 1 core / 2 GB RAM |

Set the hostname of every node according to the cluster plan

# Node 200

[root@localhost ~]# hostnamectl set-hostname ansible

[root@localhost ~]# exit

logout

Connection closed by foreign host.

Disconnected from remote host(test machine - 200) at 08:29:30.

# Node 201

[root@localhost ~]# hostnamectl set-hostname master01

[root@localhost ~]# exit

logout

Connection closed by foreign host.

Disconnected from remote host(test machine - 201) at 08:47:57.

# Node 202

[root@localhost ~]# hostnamectl set-hostname master02

[root@localhost ~]# exit

logout

Connection closed by foreign host.

Disconnected from remote host(test machine - 202) at 08:48:14.

# Node 203

[root@localhost ~]# hostnamectl set-hostname master03

[root@localhost ~]# exit

logout

Connection closed by foreign host.

Disconnected from remote host(test machine - 203) at 08:48:30.

# Node 204

[root@localhost ~]# hostnamectl set-hostname node01

[root@localhost ~]# exit

logout

Connection closed by foreign host.

Disconnected from remote host(test machine - 204) at 08:48:46.

# Node 205

[root@localhost ~]# hostnamectl set-hostname node02

[root@localhost ~]# exit

logout

Connection closed by foreign host.

Disconnected from remote host(test machine - 205) at 08:49:02.

# Node 206

[root@balance01 ~]# hostnamectl set-hostname k8s-ha01

[root@balance01 ~]# exit

logout

Connection closed by foreign host.

Disconnected from remote host(test machine - 206) at 10:30:29.

# Node 207

[root@balance02 ~]# hostnamectl set-hostname k8s-ha02

[root@balance02 ~]# exit

logout

Connection closed by foreign host.

Disconnected from remote host(test machine - 207) at 10:30:40.
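The eight sessions above repeat one pattern: log in, run hostnamectl, log out. As a rough sketch, the same plan can be written as a loop. The helper name print_hostname_cmds is hypothetical, and the sketch assumes root SSH access to every node; it only prints the commands so they can be reviewed before anything is executed.

```shell
#!/bin/sh
# Hypothetical helper: print (but do not run) one hostnamectl command per planned node.
# Each entry is "<last IP octet>:<hostname>", taken from the host planning table.
print_hostname_cmds() {
  for entry in 200:ansible 201:master01 202:master02 203:master03 \
               204:node01 205:node02 206:k8s-ha01 207:k8s-ha02; do
    octet="${entry%%:*}"   # text before the colon
    name="${entry#*:}"     # text after the colon
    echo "ssh root@10.62.158.${octet} hostnamectl set-hostname ${name}"
  done
}

print_hostname_cmds
```

Once the printed lines look right, they could be piped into sh to run them; each SSH connection would still ask for the node's password at this stage, because passwordless login has not been set up yet.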

Install the Ansible batch operations tool on node 200

- Upload the Ansible offline archive, ansible.tar.gz

[root@localhost ~]# ls

anaconda-ks.cfg ansible.tar.gz sysconfigure.sh

- Extract the offline archive

[root@localhost ~]# tar -xf ansible.tar.gz

- Enter the directory that holds the Ansible offline packages

[root@localhost ~]# cd ansible

- Install Ansible offline

[root@localhost ~]# yum install ./*.rpm -y

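Define the managed hosts: 201-205

Delete all existing content in the file first (in vim, ggdG; dG alone only deletes from the cursor line to the end of the file)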

[root@localhost ~]# vim /etc/ansible/hosts

[k8s]

10.62.158.201

10.62.158.202

10.62.158.203

10.62.158.204

10.62.158.205

Use this command to list the hosts in an Ansible group. k8s is the group name; later steps use it to manage every host in the group at once

[root@localhost ~]# ansible k8s --list-host

hosts (5):

10.62.158.201

10.62.158.202

10.62.158.203

10.62.158.204

10.62.158.205

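Set up passwordless login so that Ansible can operate on the managed hosts without entering a password

- Generate an SSH key pair on the ansible host; press Enter at every prompt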

[root@ansible ~]# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:SF5KG4VdxuecNmFcGNeQqbw5vJoHwJXbkFqfxBx6nSo root@ansible

The key's randomart image is:

+---[RSA 2048]----+

| o.oo*o=+= |

| ....B.Xoo..|

| +..+.%.=o |

| + *+ ..%. |

| = S.Eo.+ |

| ..= |

| . o |

| .o |

| oo |

+----[SHA256]-----+

- Copy the public key to the managed hosts; answer yes and enter each host's login password when prompted

[root@ansible ~]# for ip in 10.62.158.{201..205}

> do

> ssh-copy-id $ip

> done

- Test that a managed host can be reached; exit returns to the ansible host

[root@ansible ~]# ssh 10.62.158.201

Last login: Wed Apr 17 08:31:07 2024 from 10.62.158.1

[root@master01 ~]# exit

logout

Connection to 10.62.158.201 closed.

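The following preparation steps must be carried out on all nodes

Configure local name resolution between the cluster nodes; the nodes must be able to resolve hostnames when the cluster is initialized

Edit the hosts file and add the IP-to-hostname mappings of the cluster nodes

[root@ansible ~]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4 # leave unchanged

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6 # leave unchanged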

10.62.158.201 master01

10.62.158.202 master02

10.62.158.203 master03

10.62.158.204 node01

10.62.158.205 node02

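Copy the file to the other machines in the k8s group

# k8s -- name of the managed host group

# -m -- module to invoke

# copy -- the copy module

# -a -- module arguments

# src -- file path on the current host

# dest -- file path on the target hosts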

[root@ansible ~]# ansible k8s -m copy -a 'src=/etc/hosts dest=/etc'
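After the copy it can be worth confirming that a node's hosts file really contains all five mappings. The following is a minimal sketch, not part of the original procedure; check_hosts_file is a hypothetical helper name, and the IP/hostname pairs are the ones from the planning table.

```shell
#!/bin/sh
# Hypothetical check: succeed only if the given hosts file maps every
# cluster hostname to its planned IP address.
check_hosts_file() {
  hosts_file="$1"
  status=0
  for pair in 10.62.158.201:master01 10.62.158.202:master02 10.62.158.203:master03 \
              10.62.158.204:node01 10.62.158.205:node02; do
    ip="${pair%%:*}"
    name="${pair#*:}"
    # A line matches when field 1 is the IP and any later field is the hostname.
    if ! awk -v ip="$ip" -v name="$name" \
         '$1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
          END { exit !found }' "$hosts_file"; then
      echo "missing entry: $ip $name"
      status=1
    fi
  done
  return "$status"
}

# Demo on a throwaway file that lacks the node02 entry.
demo="$(mktemp)"
printf '%s\n' '10.62.158.201 master01' '10.62.158.202 master02' \
              '10.62.158.203 master03' '10.62.158.204 node01' > "$demo"
check_hosts_file "$demo" || echo "hosts file is incomplete"
rm -f "$demo"
```

On a real node the call would simply be check_hosts_file /etc/hosts.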

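Enable bridge netfilter

A bridge is a virtual network device in Linux that acts as a virtual switch. It provides network connectivity for the containers in the cluster, so containers can reach other containers and external networks through it

- Add the configuration file

# net.bridge.bridge-nf-call-ip6tables = 1 - pass IPv6 packets on the bridge through ip6tables

# net.bridge.bridge-nf-call-iptables = 1 - pass IPv4 packets on the bridge through iptables

# net.ipv4.ip_forward = 1 - enable IPv4 forwarding so that containers in the cluster can communicate with external networks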

[root@ansible ~]# vim k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

- Copy the configuration file to the other machines in the k8s group

[root@ansible ~]# ansible k8s -m copy -a 'src=k8s.conf dest=/etc/sysctl.d/'

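Because bridge filtering is enabled, the br_netfilter module must be loaded so that packets forwarded by the bridge are processed by the iptables firewall

- modprobe - loads a kernel module

- br_netfilter - lets packets on bridge devices be processed by the iptables firewall

# k8s -- name of the managed host group

# -m -- module to invoke

# shell -- the module that runs shell commands

# -a -- module arguments

# modprobe br_netfilter && lsmod | grep br_netfilter -- the command to run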

[root@ansible ~]# ansible k8s -m shell -a 'modprobe br_netfilter && lsmod | grep br_netfilter'

Load the kernel parameters from k8s.conf so that the settings above take effect

[root@ansible ~]# ansible k8s -m shell -a 'sysctl -p /etc/sysctl.d/k8s.conf'
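To confirm on a node that the three parameters actually took effect, the live values can be read back from /proc/sys, where each sysctl key appears as a file path with the dots replaced by slashes. This is a small sketch under that assumption; sysctl_path is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: translate a sysctl key into its /proc/sys path
# (dots in the key become slashes).
sysctl_path() {
  echo "/proc/sys/$(echo "$1" | tr '.' '/')"
}

# Report the live value of each key set in k8s.conf, if the kernel exposes it.
for key in net.bridge.bridge-nf-call-ip6tables \
           net.bridge.bridge-nf-call-iptables \
           net.ipv4.ip_forward; do
  path="$(sysctl_path "$key")"
  if [ -r "$path" ]; then
    echo "$key = $(cat "$path")"
  else
    echo "$key: not available on this host"
  fi
done
```

The two net.bridge keys typically exist only while br_netfilter is loaded, so a "not available" line for them usually means the modprobe step has not been run on that host.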

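Configure IPVS

In k8s, a Service has two proxy modes: one based on iptables and one based on IPVS. Compared with iptables, IPVS offers more flexible load-balancing algorithms and includes health checking. To use the IPVS proxy mode, the IPVS kernel modules must be loaded manually.

ipset and ipvsadm are two packages related to network management and load balancing. In the k8s proxy mode, IPVS provides several load-balancing algorithms, such as round robin, least connection, and weighted least connection

- Install the packages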

[root@ansible ~]# ansible k8s -m shell -a 'yum install ipset ipvsadm -y'

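- Write the IPVS modules that need to be loaded into a script file

# ip_vs - the IPVS core module

# ip_vs_rr - round-robin scheduling

# ip_vs_wrr - weighted round-robin scheduling

# ip_vs_sh - source-hashing scheduling

# nf_conntrack - connection tracking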

[root@ansible ~]# vim ipvs.modules

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack

- Copy the script to the other machines in the k8s group

[root@ansible ~]# ansible k8s -m copy -a 'src=ipvs.modules dest=/etc/sysconfig/modules'

- Make the script executable

[root@ansible ~]# ansible k8s -m shell -a 'chmod +x /etc/sysconfig/modules/ipvs.modules'

- Run the script

[root@ansible ~]# ansible k8s -m shell -a '/etc/sysconfig/modules/ipvs.modules'

- On a managed host, check that the IPVS modules are loaded

[root@master01 ~]# lsmod |grep ip_vs

ip_vs_sh 12688 0

ip_vs_wrr 12697 0

ip_vs_rr 12600 0

ip_vs 145497 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack 133095 1 ip_vs

libcrc32c 12644 3 xfs,ip_vs,nf_conntrack
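Reading the lsmod output by eye works for one host; for several hosts a small filter can list whichever of the five modules are missing. This is only a sketch: missing_ipvs_modules is a hypothetical helper that reads lsmod output on standard input, and the module list is the one from ipvs.modules.

```shell
#!/bin/sh
# Hypothetical helper: read lsmod output on stdin and print each required
# IPVS-related module that is NOT loaded. No output means all are present.
missing_ipvs_modules() {
  loaded="$(awk '{ print $1 }')"   # column 1 of lsmod output is the module name
  for mod in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack; do
    printf '%s\n' "$loaded" | grep -qxF "$mod" || echo "$mod"
  done
}

# Demo with two rows of sample lsmod output; the three scheduler modules are reported.
printf '%s\n' 'ip_vs 145497 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr' 'nf_conntrack 133095 1 ip_vs' \
  | missing_ipvs_modules
```

On a node the usage would be lsmod | missing_ipvs_modules. Only the first column is compared, so a module that merely appears in another module's "used by" list is not counted as loaded.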

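Disable the swap partition

For kubelet to work properly, k8s requires swap to be disabled; otherwise cluster initialization fails

- Disable swap temporarily (this step is required)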

[root@ansible ~]# ansible k8s -m shell -a 'swapoff -a'

- On a managed host, check that swap is off

[root@master01 ~]# free -h

total used free shared buff/cache available

Mem: 3.8G 119M 3.3G 11M 478M 3.5G

Swap: 0B 0B 0B

- Disable swap permanently

[root@ansible ~]# ansible k8s -m shell -a "sed -ri 's/.*swap.*/#&/' /etc/fstab"
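The sed expression above prefixes every line that contains the word swap with a # character. The sketch below applies the same substitution to sample text on standard input instead of editing /etc/fstab, so its effect can be inspected safely. The function names and the sample fstab lines are illustrative assumptions; the second function is a stricter variant, not what the original command does.

```shell
#!/bin/sh
# Same substitution as the command above, but filtering stdin
# rather than editing /etc/fstab in place (-E is the portable spelling of -r).
comment_swap_lines() {
  sed -E 's/.*swap.*/#&/'
}

# Hypothetical stricter variant: only touches lines that are not already
# commented and that have "swap" as a whitespace-delimited field.
comment_swap_once() {
  sed '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/'
}

# Sample fstab content (made up for illustration).
printf '%s\n' \
  '/dev/mapper/centos-root / xfs defaults 0 0' \
  '/dev/mapper/centos-swap swap swap defaults 0 0' | comment_swap_lines
```

Running the original expression a second time adds another # to lines that are already commented, which is harmless but untidy; the stricter variant leaves such lines alone.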

Install Docker (offline)

Upload the offline archive, docker-20.10.tar.gz, to the ansible host

[root@ansible ~]# ls

anaconda-ks.cfg ansible ansible.tar.gz docker-20.10.tar.gz ipvs.modules k8s.conf ssh-copy-id sysconfigure.sh

Copy the archive to the other machines in the k8s group

[root@ansible ~]# ansible k8s -m copy -a 'src=docker-20.10.tar.gz dest=/root'

Extract the docker archive

[root@ansible ~]# ansible k8s -m shell -a 'tar -xf docker-20.10.tar.gz'

Install docker on all hosts in the group

[root@ansible ~]# ansible k8s -m shell -a 'cd docker && yum install ./*.rpm -y'

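Set Docker's cgroup driver to systemd. Cgroups (control groups) limit the resources a process may use, such as CPU and memory

- Create the docker configuration file daemon.json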

[root@ansible ~]# vim daemon.json

{

"exec-opts": ["native.cgroupdriver=systemd"]

}

- Copy the configuration file to the managed hosts

[root@ansible ~]# ansible k8s -m copy -a 'src=daemon.json dest=/etc/docker/'

Start docker and enable it at boot

[root@ansible ~]# ansible k8s -m shell -a 'systemctl start docker && systemctl enable docker'

On a managed host, check that docker started successfully

[root@master01 etc]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

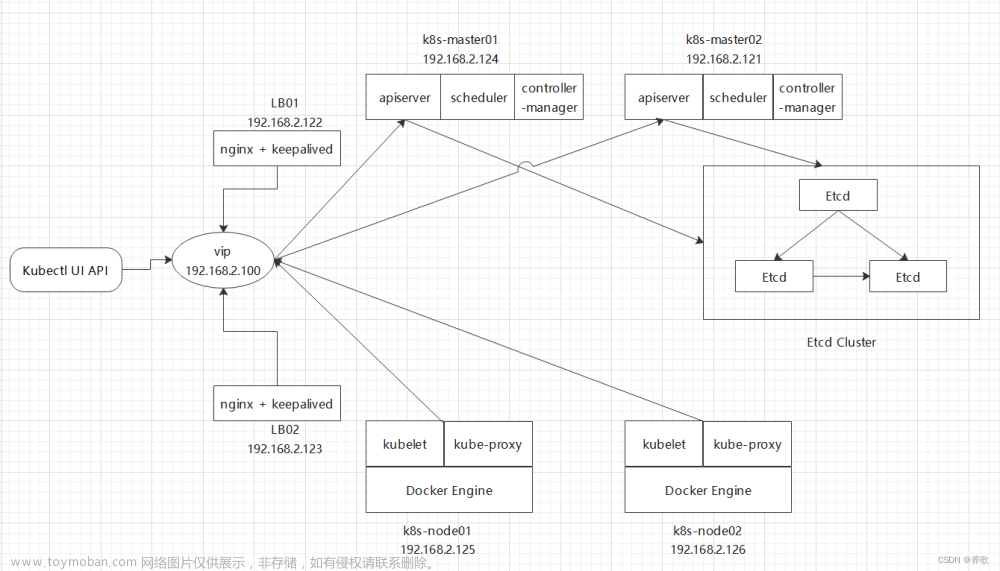

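High-availability proxy setup. Note: perform these steps only on the two proxy nodes

Install the load-balancing and high-availability software

- k8s-ha01 node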

[root@k8s-ha01 ~]# yum install haproxy keepalived -y

- k8s-ha02 node

[root@k8s-ha02 ~]# yum install haproxy keepalived -y

Edit the haproxy configuration file

- k8s-ha01 node

[root@k8s-ha01 keepalived]# vim /etc/haproxy/haproxy.cfg

global

maxconn 2000

ulimit-n 16384

log 127.0.0.1 local0 err

stats timeout 30s

defaults

log global

mode http

option httplog

timeout connect 5000

timeout client 50000

timeout server 50000

timeout http-request 15s

timeout http-keep-alive 15s

frontend monitor-in

bind *:33305

mode http

option httplog

monitor-uri /monitor

frontend k8s-master

bind 0.0.0.0:6443

bind 127.0.0.1:6443

mode tcp

option tcplog

tcp-request inspect-delay 5s

default_backend k8s-master

backend k8s-master

mode tcp

option tcplog

option tcp-check

balance roundrobin

default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

server master01 10.62.158.201:6443 check

server master02 10.62.158.202:6443 check

server master03 10.62.158.203:6443 check

- k8s-ha02 node

[root@k8s-ha02 keepalived]# vim /etc/haproxy/haproxy.cfg

global

maxconn 2000

ulimit-n 16384

log 127.0.0.1 local0 err

stats timeout 30s

defaults

log global

mode http

option httplog

timeout connect 5000

timeout client 50000

timeout server 50000

timeout http-request 15s

timeout http-keep-alive 15s

frontend monitor-in

bind *:33305

mode http

option httplog

monitor-uri /monitor

frontend k8s-master

bind 0.0.0.0:6443

bind 127.0.0.1:6443

mode tcp

option tcplog

tcp-request inspect-delay 5s

default_backend k8s-master

backend k8s-master

mode tcp

option tcplog

option tcp-check

balance roundrobin

default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

server master01 10.62.158.201:6443 check

server master02 10.62.158.202:6443 check

server master03 10.62.158.203:6443 check

Edit the keepalived configuration file; the virtual IP address is 10.62.158.211

- k8s-ha01 node, configured as the primary proxy node

[root@k8s-ha01 keepalived]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

script_user root

enable_script_security

}

vrrp_script chk_nginx {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state MASTER

interface ens32

virtual_router_id 51

priority 101

advert_int 2

authentication {

auth_type PASS

auth_pass abc123

}

virtual_ipaddress {

10.62.158.211/24

}

track_script {

chk_nginx

}

}

- k8s-ha02 node, configured as the backup proxy node

[root@k8s-ha02 keepalived]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

script_user root

enable_script_security

}

vrrp_script chk_nginx {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state BACKUP

interface ens32

virtual_router_id 51

priority 99

advert_int 2

authentication {

auth_type PASS

auth_pass abc123

}

virtual_ipaddress {

10.62.158.211/24

}

track_script {

chk_nginx

}

}

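Create the check_apiserver.sh script in the /etc/keepalived/ directory on both proxy hosts

- k8s-ha01 node

[root@k8s-ha01 keepalived]# vim /etc/keepalived/check_apiserver.sh

#!/bin/bash

# Count running haproxy processes (the ps header line is filtered out)

count=`ps -C haproxy | grep -v PID | wc -l`

# If haproxy is not running, stop keepalived so the virtual IP moves to the other proxy node

if [ "$count" -eq 0 ];then

systemctl stop keepalived

fi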

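- k8s-ha02 node

[root@k8s-ha02 keepalived]# vim /etc/keepalived/check_apiserver.sh

#!/bin/bash

# Count running haproxy processes (the ps header line is filtered out)

count=`ps -C haproxy | grep -v PID | wc -l`

# If haproxy is not running, stop keepalived so the virtual IP moves to the other proxy node

if [ "$count" -eq 0 ];then

systemctl stop keepalived

fi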

Make the script executable

- k8s-ha01 node

[root@k8s-ha01 keepalived]# chmod +x /etc/keepalived/check_apiserver.sh

- k8s-ha02 node

[root@k8s-ha02 keepalived]# chmod +x /etc/keepalived/check_apiserver.sh

Start the haproxy and keepalived services on both hosts and enable them at boot

- k8s-ha01 node

[root@k8s-ha01 keepalived]# systemctl start haproxy keepalived

[root@k8s-ha01 keepalived]# systemctl enable haproxy keepalived

- k8s-ha02 node

[root@k8s-ha02 keepalived]# systemctl start haproxy keepalived

[root@k8s-ha02 keepalived]# systemctl enable haproxy keepalived

On the primary proxy node k8s-ha01, check that the keepalived virtual IP address 10.62.158.211 is present

[root@k8s-ha01 keepalived]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:85:63:23 brd ff:ff:ff:ff:ff:ff

inet 10.62.158.206/24 brd 10.62.158.255 scope global noprefixroute ens32

valid_lft forever preferred_lft forever

inet 10.62.158.211/24 scope global secondary ens32

valid_lft forever preferred_lft forever

inet6 fe80::3edc:10af:7a81:ebaf/64 scope link noprefixroute

valid_lft forever preferred_lft forever

Deploy the cluster with kubeadm

Create the k8s repository file

[root@ansible ~]# vim kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

Copy the repository file to the managed hosts

[root@ansible ~]# ansible k8s -m copy -a 'src=kubernetes.repo dest=/etc/yum.repos.d'

On a managed host, list the kubernetes versions available in the repository

[root@master01 yum.repos.d]# yum list --showduplicates kubeadm

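Install the cluster software; this walkthrough installs k8s version 1.23.0

- kubeadm: initializes the cluster, configures the components it needs, and generates the corresponding certificates and tokens;

- kubelet: communicates with the master nodes and creates, updates, and deletes Pods according to their scheduling decisions, while maintaining the state of the containers on the node;

- kubectl: a command-line tool for managing the k8s cluster;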

[root@ansible ~]# ansible k8s -m shell -a 'yum install -y kubeadm-1.23.0-0 kubelet-1.23.0-0 kubectl-1.23.0-0'

Configure kubelet to use the systemd cgroup driver. Cgroups limit the resources a process may use, such as CPU and memory

- Write the kubelet configuration file

[root@ansible ~]# vim kubelet

KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"

- Copy the configuration file to the managed hosts

[root@ansible ~]# ansible k8s -m copy -a 'src=kubelet dest=/etc/sysconfig/'

Only enable kubelet at boot; it starts automatically once the cluster has been initialized

[root@ansible ~]# ansible k8s -m shell -a 'systemctl enable kubelet'

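Initialize the cluster. The ansible host (200) is no longer needed; the remaining steps are performed on the individual nodes

- Shut down the ansible host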

[root@ansible ~]# poweroff

Connection closed by foreign host.

Disconnected from remote host(测试机 - 200) at 12:25:49.

Type `help' to learn how to use Xshell prompt.

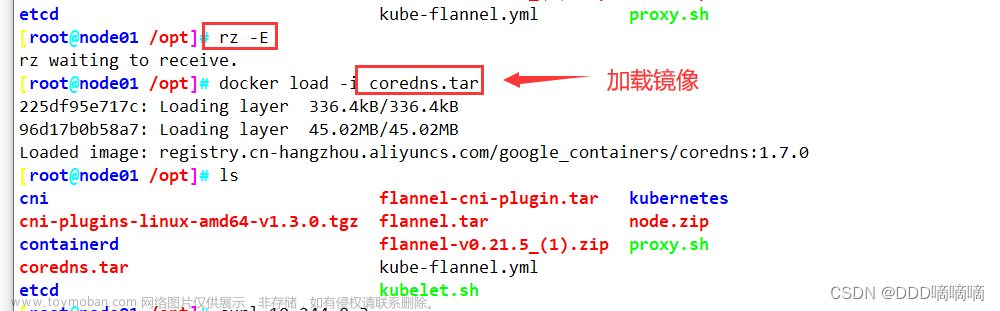

- List the images the cluster needs

[root@master01 ~]# kubeadm config images list

I0417 12:26:34.006491 22221 version.go:255] remote version is much newer: v1.29.4; falling back to: stable-1.23

k8s.gcr.io/kube-apiserver:v1.23.17

k8s.gcr.io/kube-controller-manager:v1.23.17

k8s.gcr.io/kube-scheduler:v1.23.17

k8s.gcr.io/kube-proxy:v1.23.17

k8s.gcr.io/pause:3.6

k8s.gcr.io/etcd:3.5.1-0

k8s.gcr.io/coredns/coredns:v1.8.6

- Print a default cluster initialization configuration file

[root@master01 ~]# kubeadm config print init-defaults > kubeadm-config.yml

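- Edit the cluster initialization configuration file

[root@master01 ~]# vim kubeadm-config.yml

# advertiseAddress: set to the current host's IP address; this is the address of the node that initializes the cluster

# name: set to the current node's name; this is the name of the node that initializes the cluster

# imageRepository: set to the Alibaba Cloud registry; otherwise the images cannot be downloaded

localAPIEndpoint:
  advertiseAddress: 10.62.158.201
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock
  imagePullPolicy: IfNotPresent
  name: master01
  taints: null
apiServer:
  certSANs: # added manually
  - 10.62.158.211 # trusted IP address listed in the certificate, i.e. the load balancer's VIP
clusterName: kubernetes
controlPlaneEndpoint: 10.62.158.211:6443 # load balancer address; Kubernetes uses it when generating the join token for master nodes
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers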

- Initialize the k8s cluster from the configuration file and wait for it to finish

[root@master01 ~]# kubeadm init --config kubeadm-config.yml --upload-certs

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

# Commands to run once the cluster has initialized successfully

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join 10.62.158.211:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:34fbd3e4c06be63e1ee7a289d041bc51402394cbe19843dccc06210e33e8eac5 \

--control-plane --certificate-key f94264d8953a7e52f5dde60463a931573ffe3777e506e406d3a4c3c487e6bbc8

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.62.158.211:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:34fbd3e4c06be63e1ee7a289d041bc51402394cbe19843dccc06210e33e8eac5

- Set up the master01 master node

[root@master01 ~]# mkdir -p $HOME/.kube

[root@master01 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master01 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

- Check that the master01 master node has been set up (the .kube directory should exist)

[root@master01 ~]# l.

. .. .ansible .bash_history .bash_logout .bash_profile .bashrc .cshrc .kube .pki .ssh .tcshrc .viminfo

Join the other master nodes to the cluster

- master02 node. If the join fails, run the join command again

[root@master02 ~]# kubeadm join 10.62.158.211:6443 --token abcdef.0123456789abcdef \

> --discovery-token-ca-cert-hash sha256:34fbd3e4c06be63e1ee7a289d041bc51402394cbe19843dccc06210e33e8eac5 \

> --control-plane --certificate-key f94264d8953a7e52f5dde60463a931573ffe3777e506e406d3a4c3c487e6bbc8

# Message shown after a master node has joined the cluster successfully

To start administering your cluster from this node, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.

- Set up the master02 master node

[root@master02 ~]# mkdir -p $HOME/.kube

[root@master02 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master02 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

- Check that the master02 master node has been set up (the .kube directory should exist)

[root@master02 ~]# l.

. .. .ansible .bash_history .bash_logout .bash_profile .bashrc .cshrc .kube .pki .ssh .tcshrc

- master03 node. If the join fails, run the join command again

[root@master03 ~]# kubeadm join 10.62.158.211:6443 --token abcdef.0123456789abcdef \

> --discovery-token-ca-cert-hash sha256:34fbd3e4c06be63e1ee7a289d041bc51402394cbe19843dccc06210e33e8eac5 \

> --control-plane --certificate-key f94264d8953a7e52f5dde60463a931573ffe3777e506e406d3a4c3c487e6bbc8

# Message shown after a master node has joined the cluster successfully

To start administering your cluster from this node, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.

- Set up the master03 master node

[root@master03 ~]# mkdir -p $HOME/.kube

[root@master03 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master03 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

- Check that the master03 master node has been set up (the .kube directory should exist)

[root@master03 ~]# l.

. .. .ansible .bash_history .bash_logout .bash_profile .bashrc .cshrc .kube .pki .ssh .tcshrc

Join the worker nodes to the cluster

- node01 node. If the join fails, run the join command again

[root@node01 ~]# kubeadm join 10.62.158.211:6443 --token abcdef.0123456789abcdef \

> --discovery-token-ca-cert-hash sha256:34fbd3e4c06be63e1ee7a289d041bc51402394cbe19843dccc06210e33e8eac5

# Message shown after a worker node has joined the cluster successfully

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

- node02 node. If the join fails, run the join command again

[root@node02 ~]# kubeadm join 10.62.158.211:6443 --token abcdef.0123456789abcdef \

> --discovery-token-ca-cert-hash sha256:34fbd3e4c06be63e1ee7a289d041bc51402394cbe19843dccc06210e33e8eac5

# Message shown after a worker node has joined the cluster successfully

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

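Check the cluster status from any master node. NotReady means the nodes are not ready yet; a pod network must be added before the cluster can be used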

[root@master01 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master01 NotReady control-plane,master 14m v1.23.0

master02 NotReady control-plane,master 9m21s v1.23.0

master03 NotReady control-plane,master 6m35s v1.23.0

node01 NotReady <none> 9m36s v1.23.0

node02 NotReady <none> 9m31s v1.23.0

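Add the Calico network

Calico and Flannel are two popular k8s network plugins. Both provide networking for the Pods in a cluster, but they differ in important ways in how they are implemented and what they offer.

- Install the Calico network from any one master node. Download the Calico manifest; this file can be used with k8s versions 1.18 through 1.28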

[root@master01 ~]# wget https://raw.githubusercontent.com/projectcalico/calico/v3.24.1/manifests/calico.yaml

--2024-04-17 12:57:14-- https://raw.githubusercontent.com/projectcalico/calico/v3.24.1/manifests/calico.yaml

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.109.133, 185.199.111.133, 185.199.110.133, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.109.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 234906 (229K) [text/plain]

Saving to: 'calico.yaml'

100%[=======================================================================================================================================================================>] 234,906 4.81KB/s in 47s

2024-04-17 12:58:05 (4.84 KB/s) - 'calico.yaml' saved [234906/234906]

- Create the Calico network from the manifest

[root@master01 ~]# kubectl apply -f calico.yaml

poddisruptionbudget.policy/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

serviceaccount/calico-node created

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

deployment.apps/calico-kube-controllers created

- Check the status of the cluster components and wait until they have all started

[root@master01 ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-66966888c4-pjwfx 0/1 ContainerCreating 0 60s

calico-node-k8kcd 0/1 Init:0/3 0 60s

calico-node-qm2tp 0/1 Init:0/3 0 60s

calico-node-sqtkw 0/1 Init:0/3 0 60s

calico-node-w9sj9 0/1 Init:2/3 0 60s

calico-node-x4p9f 0/1 Init:0/3 0 60s

coredns-65c54cc984-659mw 0/1 ContainerCreating 0 21m

coredns-65c54cc984-8j49n 0/1 ContainerCreating 0 21m

etcd-master01 1/1 Running 0 21m

etcd-master02 1/1 Running 0 16m

etcd-master03 1/1 Running 0 13m

kube-apiserver-master01 1/1 Running 0 21m

kube-apiserver-master02 1/1 Running 0 16m

kube-apiserver-master03 1/1 Running 0 13m

kube-controller-manager-master01 1/1 Running 1 (15m ago) 21m

kube-controller-manager-master02 1/1 Running 0 16m

kube-controller-manager-master03 1/1 Running 0 13m

kube-proxy-bnkdg 1/1 Running 0 21m

kube-proxy-kccxk 1/1 Running 0 13m

kube-proxy-n6svq 1/1 Running 0 16m

kube-proxy-qgwgj 1/1 Running 0 16m

kube-proxy-qzp7w 1/1 Running 0 16m

kube-scheduler-master01 1/1 Running 1 (15m ago) 21m

kube-scheduler-master02 1/1 Running 0 16m

kube-scheduler-master03 1/1 Running 0 13m

- Once all Calico pods are Running, the cluster deployment is complete

[root@master01 ~]# kubectl get pod -n kube-system |grep calico

- Check the node status; when every node is Ready, the cluster setup is complete

[root@master01 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master01 Ready control-plane,master 23m v1.23.0

master02 Ready control-plane,master 18m v1.23.0

master03 Ready control-plane,master 15m v1.23.0

node01 Ready <none> 18m v1.23.0

node02 Ready <none> 18m v1.23.0
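Instead of rerunning kubectl get node by hand until everything is Ready, the output can be counted with a short filter. This is a sketch only: count_not_ready is a hypothetical helper that reads the command's table output on standard input and assumes STATUS is the second column, as in the listing above.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get node` output on stdin and print
# how many nodes have a STATUS other than exactly "Ready".
count_not_ready() {
  # NR > 1 skips the header row; column 2 is STATUS.
  awk 'NR > 1 && $2 != "Ready" { n++ } END { print n + 0 }'
}

# Demo on a shortened sample of the output format shown above.
printf '%s\n' \
  'NAME STATUS ROLES AGE VERSION' \
  'master01 Ready control-plane,master 23m v1.23.0' \
  'node01 NotReady <none> 18m v1.23.0' | count_not_ready
```

On a master node this could drive a wait loop such as: until [ "$(kubectl get node | count_not_ready)" -eq 0 ]; do sleep 5; done. A combined status like Ready,SchedulingDisabled is counted as not ready by this simple comparison.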

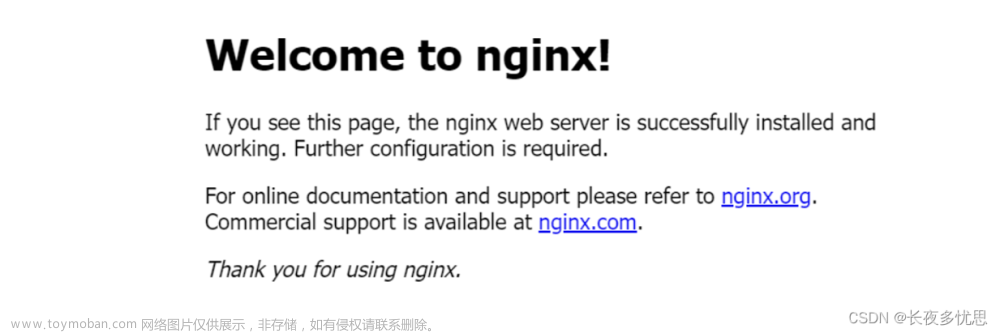

With the cluster deployed, write an nginx manifest and deploy an nginx application

- Create the nginx manifest

[root@master01 ~]# vim nginx.yml

apiVersion: v1
kind: Pod
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  containers:
  - name: nginx
    image: nginx:1.20.2
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
spec:
  type: NodePort
  selector:
    app: nginx
  ports:
  - port: 80
    targetPort: 80
    nodePort: 30000

- Apply the manifest to create the nginx application

[root@master01 ~]# kubectl apply -f nginx.yml

pod/nginx created

service/nginx-svc created

- List the services and their ports in k8s

[root@master01 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 27m

nginx-svc NodePort 10.111.52.22 <none> 80:30000/TCP 26s

- Access the nginx service through any node in the cluster. Done!

http://10.62.158.201:30000/

http://10.62.158.202:30000/

http://10.62.158.203:30000/

http://10.62.158.204:30000/

http://10.62.158.205:30000/
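Because a NodePort service listens on the same port on every node, the five URLs differ only in the node address. As a small sketch, they can be generated from the port number; nodeport_urls is a hypothetical helper, and the node addresses are the ones from the planning table.

```shell
#!/bin/sh
# Hypothetical helper: print the NodePort URL on every cluster node
# (masters 201-203 and workers 204-205) for the given port.
nodeport_urls() {
  port="$1"
  for octet in 201 202 203 204 205; do
    echo "http://10.62.158.${octet}:${port}/"
  done
}

nodeport_urls 30000
```

Each printed URL can then be opened in a browser or passed to a tool such as curl to confirm that nginx answers on that node.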
