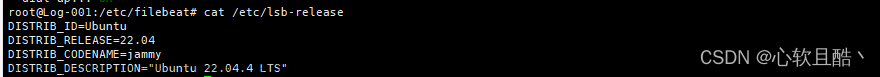

I. System version: Ubuntu 24.04

II. Deployment environment:

| Node name | IP | Components and versions | Config file paths | CPU | Memory | Storage |
| --- | --- | --- | --- | --- | --- | --- |
| Log-001 | 10.10.100.1 | zookeeper:3.4.13, kafka:2.8.1, elasticsearch:7.7.0, logstash:7.7.0, kibana:7.7.0 | zookeeper: /data/zookeeper, kafka: /data/kafka, elasticsearch: /data/es, logstash: /data/logstash, kibana: /data/kibana | 2*1c/16cores | 62 GB | 50 GB system disk, 800 GB data disk |
| Log-002 | 10.10.100.2 | zookeeper:3.4.13, kafka:2.8.1, elasticsearch:7.7.0, logstash:7.7.0, kibana:7.7.0 | zookeeper: /data/zookeeper, kafka: /data/kafka, elasticsearch: /data/es, logstash: /data/logstash, kibana: /data/kibana | 2*1c/16cores | 62 GB | 50 GB system disk, 800 GB data disk |
| Log-003 | 10.10.100.3 | zookeeper:3.4.13, kafka:2.8.1, elasticsearch:7.7.0, logstash:7.7.0, kibana:7.7.0 | zookeeper: /data/zookeeper, kafka: /data/kafka, elasticsearch: /data/es, logstash: /data/logstash, kibana: /data/kibana | 2*1c/16cores | 62 GB | 50 GB system disk, 800 GB data disk |

III. Deployment steps:

(1) Install docker and docker-compose

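    # Docker Engine from the Ubuntu archive (the package is named docker.io)
    apt-get install -y docker.io
    # Standalone docker-compose 1.29.2 binary
    wget https://github.com/docker/compose/releases/download/1.29.2/docker-compose-Linux-x86_64
    mv docker-compose-Linux-x86_64 /usr/bin/docker-compose
    chmod +x /usr/bin/docker-compose

(2) Pull the required images in advance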
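The pulls and retags in this step can also be kept in a single list so that all three nodes end up with identical local image names. A minimal sketch, assuming a POSIX shell; `image_plan` is a hypothetical helper that only prints the `docker` commands (a dry run) so they can be reviewed before being executed. The ZooKeeper self-tag is left out because tagging an image with its own name changes nothing.

```shell
# Hypothetical helper: print the docker pull/tag commands for this deployment.
# Each entry is "SOURCE_IMAGE" or "SOURCE_IMAGE=LOCAL_TAG"; plain entries are
# only pulled, entries with "=" are pulled and then retagged.
image_plan() {
    for entry in \
        "zookeeper:3.4.13" \
        "wurstmeister/kafka:latest=kafka:2.12-2.5.0" \
        "elasticsearch:7.7.0" \
        "daocloud.io/library/kibana:7.7.0=kibana:7.7.0" \
        "daocloud.io/library/logstash:7.7.0=logstash:7.7.0"
    do
        src=${entry%%=*}                 # text before "=": the image to pull
        echo "docker pull $src"
        case $entry in
            *=*) echo "docker tag $src ${entry#*=}" ;;   # retag to the local name
        esac
    done
}
```

Run `image_plan` to review the commands, then `image_plan | sh` to execute them.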

    docker pull zookeeper:3.4.13
    docker pull wurstmeister/kafka
    docker pull elasticsearch:7.7.0
    docker pull daocloud.io/library/kibana:7.7.0
    docker pull daocloud.io/library/logstash:7.7.0
    # Give the images the short local names used in docker-compose.yml
    docker tag wurstmeister/kafka:latest kafka:2.12-2.5.0
    docker tag docker.io/zookeeper:3.4.13 docker.io/zookeeper:3.4.13   # redundant: tags the image with its existing name
    docker tag daocloud.io/library/kibana:7.7.0 kibana:7.7.0
    docker tag daocloud.io/library/logstash:7.7.0 logstash:7.7.0

(3) Prepare the directories for the application config files
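This step creates the host directories that the compose file later bind-mounts, including /data/kibana/conf, where the Kibana volume expects kibana.yml. A minimal sketch, assuming a POSIX shell, that creates the same layout under an optional root directory; the `prepare_dirs` name and the root argument are hypothetical, added only so the layout can be tried in a scratch directory first.

```shell
# Hypothetical helper: create the host directories used by the bind mounts.
# With no argument the paths are the article's absolute /data/... paths.
prepare_dirs() {
    root=${1:-}
    root=${root%/}                       # drop a trailing slash, if any
    for d in \
        data/zookeeper \
        data/kafka \
        data/logstash/conf \
        data/es/conf \
        data/es/data \
        data/kibana/conf
    do
        mkdir -p "$root/$d" || return 1
    done
    # Elasticsearch runs as a non-root user inside its container, so the data
    # directory must be writable by that user (the article uses mode 777).
    chmod 777 "$root/data/es/data"
}
```

Calling `prepare_dirs` with no argument reproduces the commands in this step.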

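    mkdir -p /data/zookeeper
    mkdir -p /data/kafka
    mkdir -p /data/logstash/conf
    mkdir -p /data/es/conf
    mkdir -p /data/es/data
    chmod 777 /data/es/data
    # kibana.yml is mounted from /data/kibana/conf, so create that subdirectory too
    mkdir -p /data/kibana/conf

(4) Edit the configuration file of each component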
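In the Elasticsearch file below only `node.name` and `network.publish_host` change from node to node, and the host needs `vm.max_map_count` of at least 262144, Elasticsearch's documented minimum. A minimal sketch, assuming node N is named `masterN` and has the IP `10.10.100.N` as in the environment table; `es_node_settings` and `check_max_map_count` are hypothetical helper names.

```shell
# Hypothetical helper: print the two per-node lines of elasticsearch.yml.
# Assumes node N is named "masterN" with IP 10.10.100.N (see the table).
es_node_settings() {
    n=$1
    case $n in
        1|2|3) ;;
        *) echo "node index must be 1, 2 or 3" >&2; return 1 ;;
    esac
    echo "node.name: master$n"
    echo "network.publish_host: 10.10.100.$n"
}

# Hypothetical helper: succeed only if the given vm.max_map_count value
# meets Elasticsearch's minimum of 262144.
check_max_map_count() {
    [ "$1" -ge 262144 ]
}
```

On a node, `check_max_map_count "$(sysctl -n vm.max_map_count)" || echo "raise vm.max_map_count"` tells you whether the sysctl change below is still needed.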

    ## Elasticsearch config file
    ~]# cat /data/es/conf/elasticsearch.yml
    cluster.name: es-cluster
    network.host: 0.0.0.0
    node.name: master1                     ## change node.name on each node, e.g. master2, master3
    http.cors.enabled: true
    http.cors.allow-origin: "*"            ## avoids cross-origin (CORS) issues
    node.master: true
    node.data: true
    network.publish_host: 10.10.100.1      ## change to the local IP address on each node
    discovery.zen.minimum_master_nodes: 1  ## ignored by Elasticsearch 7.x, which manages the voting quorum itself
    discovery.zen.ping.unicast.hosts: ["10.10.100.1","10.10.100.2","10.10.100.3"]
    cluster.initial_master_nodes: ["10.10.100.1","10.10.100.2","10.10.100.3"]

    ## Elasticsearch reports errors during startup unless the following is done first
    ~]# vim /etc/sysctl.conf
    vm.max_map_count=655350
    ~]# sysctl -p
    ~]# cat /etc/security/limits.conf
    * - nofile 100000
    * - fsize unlimited
    * - nproc 100000
    ## unlimited nproc for *

    ## Logstash config files
    ~]# cat /data/logstash/conf/logstash.conf
    input {
      kafka {
        topics => ["system-log"]   ## must match the topic configured upstream (Filebeat)
        bootstrap_servers => "10.10.100.1:9092,10.10.100.2:9092,10.10.100.3:9092"
        codec => "json"            ## Filebeat publishes JSON-encoded events to Kafka
      }
    }
    filter {
      grok {
        match => { "message" => "%{SYSLOGTIMESTAMP:timestamp} %{IP:ip} %{DATA:syslog_program} %{GREEDYDATA:message}" }
        overwrite => ["message"]
      }
      date {
        ## the field name must match the grok capture above ("timestamp")
        match => [ "timestamp", "MMM d HH:mm:ss", "MMM dd HH:mm:ss" ]
      }
    }
    output {
      elasticsearch {
        hosts => ["10.10.100.1:9200","10.10.100.2:9200","10.10.100.3:9200"]
        index => "system-log-%{+YYYY.MM.dd}"
      }
      stdout { codec => rubydebug }
    }
    ~]# cat /data/logstash/conf/logstash.yml
    http.host: "0.0.0.0"
    xpack.monitoring.elasticsearch.hosts: [ "http://10.10.100.1:9200","http://10.10.100.2:9200","http://10.10.100.3:9200" ]

    ## Kibana config file
    ~]# cat /data/kibana/conf/kibana.yml
    #
    # ** THIS IS AN AUTO-GENERATED FILE **
    #

    # Default Kibana configuration for docker target
    server.name: kibana
    server.host: "0.0.0.0"
    elasticsearch.hosts: [ "http://10.10.100.1:9200","http://10.10.100.2:9200","http://10.10.100.3:9200" ]
    monitoring.ui.container.elasticsearch.enabled: true

(5) All components are deployed and orchestrated with docker-compose. The docker-compose.yml file lives in /root/elk_docker_compose/.
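The compose file that follows is written for node 1; on the other nodes the ZooKeeper id, the Kafka broker id and the addresses Kafka binds to and advertises have to change. A minimal sketch, assuming node N uses id N and IP 10.10.100.N and that each of these keys sits on its own line as `KEY: value`; `localize_compose` is a hypothetical helper that prints an adjusted copy rather than editing the file in place.

```shell
# Hypothetical helper: print a copy of the node-1 docker-compose.yml with the
# per-node values rewritten for node N (1-3). Assumes id N and IP 10.10.100.N.
localize_compose() {
    n=$1
    file=$2
    case $n in
        1|2|3) ;;
        *) echo "node index must be 1, 2 or 3" >&2; return 1 ;;
    esac
    # \1 keeps each key with its original indentation; only the value changes.
    sed \
        -e "s/^\([[:space:]]*ZOO_MY_ID:\).*/\1 $n/" \
        -e "s/^\([[:space:]]*KAFKA_BROKER_ID:\).*/\1 $n/" \
        -e "s/^\([[:space:]]*KAFKA_HOST_NAME:\).*/\1 10.10.100.$n/" \
        -e "s/^\([[:space:]]*KAFKA_ADVERTISED_HOST_NAME:\).*/\1 10.10.100.$n/" \
        "$file"
}
```

For example, `localize_compose 2 docker-compose.yml > docker-compose.node2.yml` produces the file for Log-002, which can then be reviewed and copied to that node.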

    ~]# mkdir -p /root/elk_docker_compose
    ~]# cat /root/elk_docker_compose/docker-compose.yml
    version: '2.1'
    services:
      elasticsearch:
        image: elasticsearch:7.7.0
        container_name: elasticsearch
        environment:
          ES_JAVA_OPTS: -Xms1g -Xmx1g
        network_mode: host
        volumes:
          - /data/es/conf/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml
          - /data/es/data:/usr/share/elasticsearch/data
        logging:
          driver: json-file
      kibana:
        image: kibana:7.7.0
        container_name: kibana
        depends_on:
          - elasticsearch
        volumes:
          - /data/kibana/conf/kibana.yml:/usr/share/kibana/config/kibana.yml
        logging:
          driver: json-file
        ports:
          - 5601:5601
      logstash:
        image: logstash:7.7.0
        container_name: logstash
        volumes:
          - /data/logstash/conf/logstash.conf:/usr/share/logstash/pipeline/logstash.conf
          - /data/logstash/conf/logstash.yml:/usr/share/logstash/config/logstash.yml
        depends_on:
          - elasticsearch
        logging:
          driver: json-file
        ports:
          - 4560:4560
      zookeeper:
        image: zookeeper:3.4.13
        container_name: zookeeper
        environment:
          ZOO_PORT: 2181
          ZOO_DATA_DIR: /data/zookeeper/data
          ZOO_DATA_LOG_DIR: /data/zookeeper/logs
          ZOO_MY_ID: 1                              # change per node: 2, 3
          ZOO_SERVERS: "server.1=10.10.100.1:2888:3888 server.2=10.10.100.2:2888:3888 server.3=10.10.100.3:2888:3888"
        volumes:
          - /data/zookeeper:/data/zookeeper
        network_mode: host
        logging:
          driver: json-file
      kafka:
        image: kafka:2.12-2.5.0
        container_name: kafka
        depends_on:
          - zookeeper
        environment:
          KAFKA_BROKER_ID: 1                        # change per node: 2, 3
          KAFKA_PORT: 9092
          KAFKA_HEAP_OPTS: "-Xms1g -Xmx1g"
          KAFKA_HOST_NAME: 10.10.100.1              # this node's IP
          KAFKA_ADVERTISED_HOST_NAME: 10.10.100.1   # this node's IP
          KAFKA_LOG_DIRS: /data/kafka
          KAFKA_ZOOKEEPER_CONNECT: 10.10.100.1:2181,10.10.100.2:2181,10.10.100.3:2181
        network_mode: host
        volumes:
          - /data:/data
        logging:
          driver: json-file

(6) Start the services

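    # Deploy (on each of the three nodes, first adjust the config files and docker-compose.yml for that node)
    ~]# cd /root/elk_docker_compose
    ~]# docker-compose up -d
    # Stop the running containers
    ~]# docker-compose stop
    # Start a single container
    ~]# docker-compose up -d kafka

(7) Verify the status of each cluster component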
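For the Elasticsearch check below, the `_cat/nodes?v` table can also be verified by a script: every node should be listed and exactly one row should carry `*` in the `master` column. A minimal sketch, assuming the column order of the sample output below (where `master` is the second-to-last column); `check_cat_nodes` is a hypothetical helper that reads the table on stdin.

```shell
# Hypothetical helper: read `_cat/nodes?v` output on stdin and succeed only if
# it lists EXPECTED nodes with exactly one elected master ("*").
# Assumes "master" is the second-to-last column, as in the sample output.
check_cat_nodes() {
    expected=$1
    awk -v want="$expected" '
        NR == 1 { next }                       # skip the header row
        NF > 0  { nodes++; if ($(NF-1) == "*") masters++ }
        END {
            printf "nodes=%d masters=%d\n", nodes, masters
            exit !(nodes == want + 0 && masters == 1)
        }'
}
```

Usage: `curl -s '10.10.100.1:9200/_cat/nodes?v' | check_cat_nodes 3` prints the counts and exits non-zero if a node is missing or no single master has been elected.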

(1) Verify ZooKeeper:

    ]# docker exec -it zookeeper bash
    bash-4.4# zkServer.sh status
    ZooKeeper JMX enabled by default
    Using config: /conf/zoo.cfg
    Mode: follower

(2) Verify Kafka:

    ]# docker exec -it kafka bash
    bash-4.4# kafka-topics.sh --list --zookeeper 10.10.100.1:2181
    __consumer_offsets
    system-log

(3) Verify Elasticsearch:

    ]# curl '10.10.100.1:9200/_cat/nodes?v'
    ip          heap.percent ram.percent cpu load_1m load_5m load_15m node.role master name
    10.10.100.1           57          81   0    0.37    0.15     0.09 dilmrt    *      master2
    10.10.100.2           34          83   0    0.11    0.10     0.06 dilmrt    -      master1
    10.10.100.3           24          81   0    0.03    0.06     0.06 dilmrt    -      master3

(4) Verify Kibana: open http://10.10.100.1:5601 in a browser.
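The high availability of this layout rests on majority voting: the three ZooKeeper servers (one leader, the others followers, as the `Mode: follower` line shows) and the three master-eligible Elasticsearch nodes each need a strict majority, floor(n/2)+1 voters, to keep working. A minimal sketch of that arithmetic; `quorum_size` and `tolerated_failures` are hypothetical names.

```shell
# Hypothetical helpers: majority-quorum arithmetic for an ensemble of n voters.
quorum_size() {
    echo $(( $1 / 2 + 1 ))              # smallest strict majority
}

tolerated_failures() {
    echo $(( $1 - ($1 / 2 + 1) ))       # voters that may fail while a majority remains
}

# Compare the 3-node setup with neighbouring ensemble sizes.
for n in 1 2 3 4 5; do
    echo "voters=$n quorum=$(quorum_size "$n") tolerated=$(tolerated_failures "$n")"
done
```

The table shows why three nodes is the smallest useful ensemble: it survives one failure, a fourth node raises the quorum to 3 without tolerating any extra failure, and surviving two failures takes five nodes.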

IV. Log collection

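(1) Using nginx logs as an example, install the Filebeat log shipper. The filebeat package is not in Ubuntu's default repositories; it comes from Elastic's APT repository, which has to be added first.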

    apt-get install filebeat

(2) Configure Filebeat to write data to Kafka
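The same three brokers appear in two formats in this setup: Filebeat's `hosts` is a YAML list of `host:port` strings, while the Logstash kafka input's `bootstrap_servers` option takes a single comma-separated string. A minimal sketch that derives both from one list of IPs, assuming every broker listens on port 9092 as in the compose file; `broker_yaml_list` and `broker_csv` are hypothetical names.

```shell
# Hypothetical helpers: render broker addresses from a list of IPs.
# Assumes every broker listens on port 9092, as in docker-compose.yml.

# List form for Filebeat's output.kafka.hosts
broker_yaml_list() {
    out=""
    for ip in "$@"; do
        out="${out:+$out, }\"$ip:9092\""    # add ", " before every entry but the first
    done
    echo "[$out]"
}

# Comma-separated form for the Logstash kafka input's bootstrap_servers
broker_csv() {
    out=""
    for ip in "$@"; do
        out="${out:+$out,}$ip:9092"
    done
    echo "$out"
}
```

Generating both strings from one list keeps filebeat.yml and logstash.conf pointing at the same brokers if a node is added or renumbered.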

Enable the Nginx module:

    sudo filebeat modules enable nginx

Configure the Nginx module: edit /etc/filebeat/modules.d/nginx.yml and make sure the log paths are correct, for example:

    - module: nginx
      access:
        enabled: true
        var.paths: ["/var/log/nginx/access.log*"]
      error:
        enabled: true
        var.paths: ["/var/log/nginx/error.log*"]

Set the output to Kafka: configure the Kafka output in filebeat.yml. Filebeat accepts only one output, so comment out the default output.elasticsearch section.

    output.kafka:
      # Kafka broker addresses
      hosts: ["10.10.100.1:9092", "10.10.100.2:9092", "10.10.100.3:9092"]
      topic: "system-log"
      partition.round_robin:
        reachable_only: false
      required_acks: 1
      compression: gzip
      max_message_bytes: 1000000

Restart Filebeat:

    sudo systemctl restart filebeat

(3) Validate the configuration: use Filebeat's config test command to check that the configuration file is valid:

    filebeat test config

(4) Connection test: check that Filebeat can connect to Kafka:

    filebeat test output
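The two checks above can be chained so that a failed config test stops before the output test is attempted. A minimal sketch; `verify_filebeat` and the `FILEBEAT_BIN` override are hypothetical, and the override exists only so the function can be tried without Filebeat installed.

```shell
# Hypothetical wrapper: run Filebeat's config test, then its output test.
# FILEBEAT_BIN defaults to "filebeat"; both checks must pass.
verify_filebeat() {
    fb=${FILEBEAT_BIN:-filebeat}
    if ! "$fb" test config; then
        echo "filebeat config test failed" >&2
        return 1
    fi
    if ! "$fb" test output; then
        echo "filebeat cannot reach the configured output (Kafka)" >&2
        return 1
    fi
    echo "filebeat config and output OK"
}
```

Running `verify_filebeat` after every edit of filebeat.yml, before restarting the service, catches both syntax errors and unreachable brokers.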

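(5) Log in to the Kibana console and check that the nginx logs are being collected: create an index pattern matching system-log-* (the index name set in logstash.conf) and browse it in Discover.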
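Logstash writes one index per day through `index => "system-log-%{+YYYY.MM.dd}"`, and that date is taken from the event's `@timestamp` in UTC, so events logged around local midnight may land in the neighbouring day's index. A minimal sketch that builds the expected index name for a given UTC date, handy for checking `_cat/indices` before searching in Kibana; `daily_index` is a hypothetical helper.

```shell
# Hypothetical helper: expected daily index name for PREFIX and a UTC date
# given as YYYY-MM-DD (mirrors "system-log-%{+YYYY.MM.dd}" in logstash.conf).
daily_index() {
    prefix=$1
    day=$2
    case $day in
        [0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9]) ;;
        *) echo "date must be YYYY-MM-DD" >&2; return 1 ;;
    esac
    echo "$prefix-$(echo "$day" | sed 's/-/./g')"
}
```

For today's index: `daily_index system-log "$(date -u +%Y-%m-%d)"`, then compare with the output of `curl -s '10.10.100.1:9200/_cat/indices/system-log-*?v'`.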

Source: https://www.toymoban.com/news/detail-860282.html

This concludes the walkthrough of deploying a highly available ELK + Kafka logging system with docker-compose on three Ubuntu 24.04 servers and onboarding nginx logs.