II. Enterprise Keepalived High-Availability Projects in Practice

1. Introduction to Keepalived and VRRP

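What is keepalived

keepalived is a service used in cluster management to keep a cluster highly available and to prevent single points of failure.

How keepalived works

keepalived is built on VRRP, the Virtual Router Redundancy Protocol. VRRP can be seen as a protocol for making routers highly available: N routers that provide the same function form a router group with one master and several backups. The master holds a VIP that serves the outside world (the other machines on the router's LAN use this VIP as their default route) and sends multicast advertisements. When the backups stop receiving VRRP packets, they conclude that the master is down, and a new master is elected among the backups according to VRRP priority. This keeps the router highly available.

keepalived has three main modules: core, check, and vrrp. The core module is the heart of keepalived; it starts and maintains the main process and loads and parses the global configuration file. The check module performs health checks and covers the common check methods. The vrrp module implements the VRRP protocol.

==============================================

Split brain

A keepalived BACKUP host switches to master when it stops receiving advertisements from the MASTER host. If the fault lies in the communication link between them, so that neither node receives the other's multicast advertisements while both are in fact working normally, both nodes become master and both bind the virtual IP, with unpredictable consequences. This is split brain.

Mitigations:

1. Add more detection channels, such as a redundant heartbeat link (two NICs used for health monitoring) or pinging the peer, to reduce the chance of split brain. (This treats the symptom rather than the cause; it only improves the odds of detecting the problem.)

2. Set up an arbitration mechanism. If neither side can be trusted, rely on a third party, for example a shared-disk lock or pinging the gateway. (Each approach needs its own analysis.)

3. Fencing ("shoot the other node in the head"): stop the master, then check the firewalls and the network connectivity between the machines.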
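The election rule and the failure-detection timing described above can be sketched in shell. The timing follows the VRRPv2 specification (RFC 3768): Skew_Time = (256 - priority) / 256 seconds, and Master_Down_Interval = 3 * advert_int + Skew_Time. The function names and the name:priority input format are assumptions made for illustration; they are not part of keepalived.

```shell
#!/bin/bash
# Sketch only: function names and the "name:priority" format are hypothetical.

# Seconds a backup waits without advertisements before declaring the master down.
# $1 = advert_int in seconds, $2 = this node's priority
master_down_interval() {
    awk -v adv="$1" -v prio="$2" \
        'BEGIN { printf "%.3f\n", 3 * adv + (256 - prio) / 256 }'
}

# Pick the node with the highest priority from "name:priority" arguments.
# Real VRRP breaks ties by the higher IP address; this sketch keeps the first seen.
elect_master() {
    printf '%s\n' "$@" |
        awk -F: 'NR == 1 || $2 + 0 > best { best = $2 + 0; name = $1 } END { print name }'
}

master_down_interval 1 50                      # timer on a priority-50 backup
elect_master proxy-master:100 proxy-slave:50   # prints the winning node name
```

A lower priority gives a longer skew time, so among several backups the one with the highest priority times out first and takes over.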

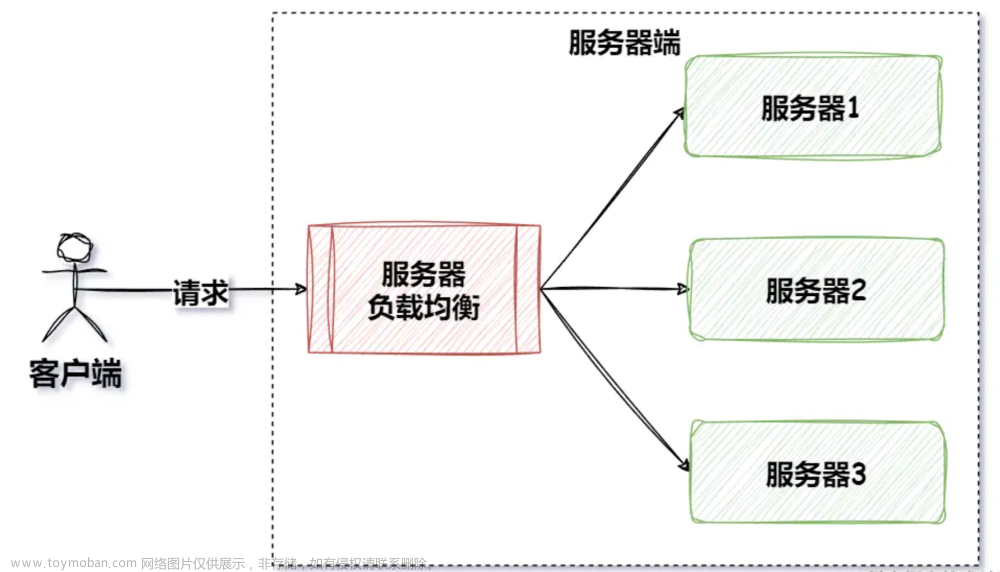
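The gateway-based arbitration mentioned in the split-brain discussion could look roughly like the sketch below. The gateway address, the PING_CMD override, and the function names are assumptions; the idea is only that a node which cannot reach the gateway is probably the isolated one and should not hold the VIP.

```shell
#!/bin/bash
# Sketch only: GATEWAY, PING_CMD, can_reach and arbitrate are hypothetical names.
GATEWAY=${GATEWAY:-172.16.147.2}   # assumed gateway address; adjust to your network

# Return 0 if the arbiter address $1 answers, 1 otherwise.
# PING_CMD may be overridden (for example in tests).
can_reach() {
    ${PING_CMD:-ping -c 2 -W 1} "$1" >/dev/null 2>&1
}

# Report the decision on stdout and through the exit status.
arbitrate() {
    if can_reach "$GATEWAY"; then
        echo "gateway reachable: node may keep or take the VIP"
        return 0
    fi
    echo "gateway unreachable: node is probably isolated and should give up the VIP"
    return 1
}

# A wrapper script would end with:  arbitrate
```

A script ending in a call to arbitrate could be referenced from a vrrp_script block, since keepalived treats a non-zero exit status of a tracked script as a failure.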

2. Layer-7 Load Balancing with Nginx + keepalived

Nginx implements load balancing through the upstream module.

Load-balancing algorithms supported by upstream

Host inventory:

| Hostname | IP | OS | Role |
|---|---|---|---|
| Proxy-master | 172.16.147.155 | CentOS 7.5 | Primary load balancer |
| Proxy-slave | 172.16.147.156 | CentOS 7.5 | Backup load balancer |
| Real-server1 | 172.16.147.153 | CentOS 7.5 | web1 |
| Real-server2 | 172.16.147.154 | CentOS 7.5 | web2 |
| VIP for proxy | 172.16.147.100 | | |

Install and configure nginx

On all machines, stop the firewall and disable SELinux:

[root@proxy-master ~]# systemctl stop firewalld # stop the firewall

[root@proxy-master ~]# sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config # disable SELinux permanently; takes effect after a reboot (edit the real file, because sed -i on the /etc/sysconfig/selinux symlink replaces the link instead of the target)

[root@proxy-master ~]# setenforce 0 # switch SELinux to permissive mode for the current boot

Install nginx on all four machines:

[root@proxy-master ~]# cd /etc/yum.repos.d/

[root@proxy-master yum.repos.d]# vim nginx.repo

[nginx-stable]

name=nginx stable repo

baseurl=http://nginx.org/packages/centos/$releasever/$basearch/

gpgcheck=0

enabled=1

[root@proxy-master yum.repos.d]# yum install yum-utils -y

[root@proxy-master yum.repos.d]# yum install nginx -y

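I. Implementation steps

1. Choose two nginx servers to act as proxy servers.

2. Install keepalived on both proxy servers to provide high availability and the VIP.

3. Configure nginx load balancing.

# The configuration is identical on both machines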

[root@proxy-master ~]# vim /etc/nginx/nginx.conf

user nginx;

worker_processes auto;

error_log /var/log/nginx/error.log;

pid /run/nginx.pid;

include /usr/share/nginx/modules/*.conf;

events {

worker_connections 1024;

}

http {

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

sendfile on;

tcp_nopush on;

tcp_nodelay on;

keepalive_timeout 65;

types_hash_max_size 2048;

include /etc/nginx/mime.types;

default_type application/octet-stream;

include /etc/nginx/conf.d/*.conf;

upstream backend {

server 172.16.147.154:80 weight=1 max_fails=3 fail_timeout=20s;

server 172.16.147.153:80 weight=1 max_fails=3 fail_timeout=20s;

}

server {

listen 80;

server_name localhost;

location / {

proxy_pass http://backend;

proxy_set_header Host $host:$proxy_port;

proxy_set_header X-Forwarded-For $remote_addr;

}

}

}
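To see the upstream block distributing requests, one option is to request the proxy repeatedly and count the distinct response bodies. This is a minimal sketch, assuming each real server returns a single-word page that identifies it (for example web1 and web2); FETCH_CMD and tally_backends are hypothetical names.

```shell
#!/bin/bash
# Sketch only: FETCH_CMD and tally_backends are hypothetical names.

# Request URL $1 a total of $2 times and count identical response bodies.
# Prints "<body> <count>" for each distinct body.
tally_backends() {
    url=$1
    n=$2
    i=0
    while [ "$i" -lt "$n" ]; do
        ${FETCH_CMD:-curl -s} "$url"   # FETCH_CMD may be overridden for testing
        i=$((i + 1))
    done | sort | uniq -c | awk '{ print $2, $1 }'
}

# Example, run from any client against one of the proxies:
#   tally_backends http://172.16.147.155/ 10
```

With both servers at weight=1, as in the configuration above, the two counts should come out roughly equal.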

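Keepalived for director HA

Note: both the master and the backup director must be able to schedule traffic normally.

1. Install the software on the master and backup directors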

[root@proxy-master ~]# yum install -y keepalived

[root@proxy-slave ~]# yum install -y keepalived

[root@proxy-master ~]# cp /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.bak

[root@proxy-master ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id directory1 # use directory2 on the backup

}

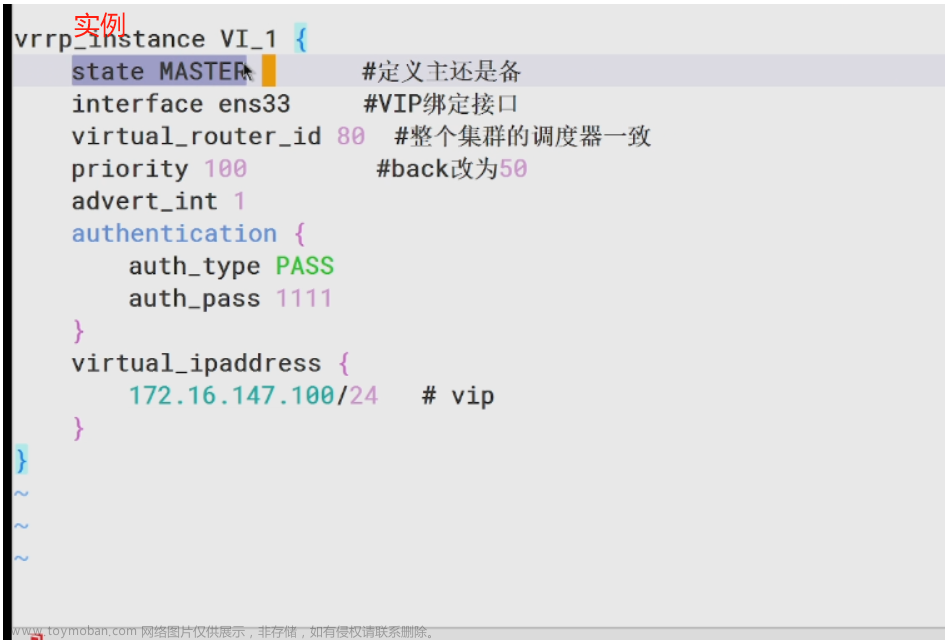

vrrp_instance VI_1 {

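state MASTER # role of this node: MASTER or BACKUP

interface ens33 # interface the VIP binds to

virtual_router_id 80 # must be identical on all directors in the cluster

priority 100 # use 50 on the backup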

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

172.16.147.100/24 # vip

}

}

[root@proxy-slave ~]# cp /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.bak

[root@proxy-slave ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id directory2

}

vrrp_instance VI_1 {

state BACKUP # this node is the backup

interface ens33

nopreempt # non-preemptive mode; only valid when state is BACKUP

virtual_router_id 80

priority 50 # lower than the master's 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

172.16.147.100/24

}

}
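The two files above differ only in router_id, state, priority, and nopreempt. A small generator makes that explicit. This is an illustrative sketch: render_vrrp_conf is a hypothetical helper, not a keepalived tool, and the values are the ones used in this section.

```shell
#!/bin/bash
# Sketch only: render_vrrp_conf is a hypothetical helper.

# $1 = role (master|backup); prints a keepalived.conf for that role on stdout.
render_vrrp_conf() {
    case $1 in
        master) router_id=directory1; state=MASTER; priority=100; extra="" ;;
        backup) router_id=directory2; state=BACKUP; priority=50; extra=nopreempt ;;
        *) echo "usage: render_vrrp_conf master|backup" >&2; return 1 ;;
    esac
    printf '! Configuration File for keepalived\n'
    printf 'global_defs {\n    router_id %s\n}\n' "$router_id"
    printf 'vrrp_instance VI_1 {\n'
    printf '    state %s\n    interface ens33\n' "$state"
    if [ -n "$extra" ]; then printf '    %s\n' "$extra"; fi
    printf '    virtual_router_id 80\n    priority %s\n    advert_int 1\n' "$priority"
    printf '    authentication {\n        auth_type PASS\n        auth_pass 1111\n    }\n'
    printf '    virtual_ipaddress {\n        172.16.147.100/24\n    }\n}\n'
}

# Example: render_vrrp_conf backup > /etc/keepalived/keepalived.conf
```

Generating both files from one template keeps virtual_router_id, the authentication block, and the VIP identical on both nodes, which VRRP requires.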

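3. Start Keepalived (on both master and backup)

[root@proxy-master ~]# systemctl start keepalived

[root@proxy-master ~]# systemctl enable keepalived

[root@proxy-slave ~]# systemctl start keepalived

[root@proxy-slave ~]# systemctl enable keepalived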

[root@proxy-master ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet 172.16.147.100/32 scope global lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:ec:8a:fe brd ff:ff:ff:ff:ff:ff

inet 172.16.147.155/24 brd 172.16.147.255 scope global noprefixroute dynamic ens33

valid_lft 1115sec preferred_lft 1115sec

inet 172.16.147.101/24 scope global secondary ens33

valid_lft forever preferred_lft forever
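Rather than reading the ip a output by eye, a node can test whether it currently holds a given address. A minimal sketch, assuming the address is passed without its prefix length; has_vip is a hypothetical helper name.

```shell
#!/bin/bash
# Sketch only: has_vip is a hypothetical helper.

# Read `ip -4 addr show` output on stdin; succeed if address $1 is configured.
has_vip() {
    pattern=$(printf '%s' "$1" | sed 's/\./\\./g')   # escape dots for the regex
    grep -Eq "inet ${pattern}/"
}

# Example on a director:
#   ip -4 addr show dev ens33 | has_vip 172.16.147.100 && echo "this node holds the VIP"
```

Running this on both directors during a failover test shows which node owns the VIP at each moment; if both report success at the same time, that is the split-brain situation described earlier.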

At this point:

keepalived handles heartbeat (node) failures,

but it cannot handle a failure of the Nginx service itself.

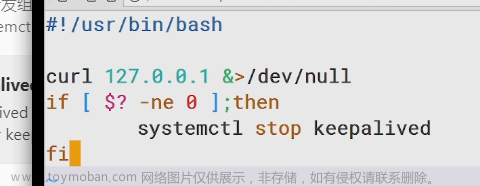

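4. Extension: Nginx health check on the directors (optional); configure it on both machines

Idea:

Have keepalived run an external script at a fixed interval. When Nginx has failed, the script stops keepalived on the local machine.

(1) The script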

[root@proxy-master ~]# vim /etc/keepalived/check_nginx_status.sh

#!/bin/bash

/usr/bin/curl -I http://localhost &>/dev/null

if [ $? -ne 0 ];then

# /etc/init.d/keepalived stop

systemctl stop keepalived

fi

[root@proxy-master ~]# chmod a+x /etc/keepalived/check_nginx_status.sh
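The script above stops keepalived after a single failed request. A gentler variant retries a few times and only reports the result through its exit status, leaving the reaction to keepalived. This is a sketch under assumptions: PROBE_CMD and nginx_ok are hypothetical names, and the retry count is arbitrary.

```shell
#!/bin/bash
# Sketch only: PROBE_CMD and nginx_ok are hypothetical names.

# Succeed if an HTTP request to $1 works within $2 attempts, one second apart.
nginx_ok() {
    url=$1
    tries=$2
    while [ "$tries" -gt 0 ]; do
        # PROBE_CMD may be overridden (for example in tests)
        if ${PROBE_CMD:-curl -fsS -o /dev/null --max-time 2} "$url" >/dev/null 2>&1; then
            return 0
        fi
        tries=$((tries - 1))
        if [ "$tries" -gt 0 ]; then sleep 1; fi
    done
    return 1
}

# As a keepalived check script, the last line would be:
#   nginx_ok http://localhost 3
# so that the script's exit status carries the result.
```

keepalived treats a non-zero exit status from a tracked script as a failure, and the vrrp_script block also accepts fall, rise, and weight options to tune how many failures are tolerated and how the priority changes, so stopping the daemon from inside the script is not the only option.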

(2) Use the script in keepalived

! Configuration File for keepalived

global_defs {

router_id director1

}

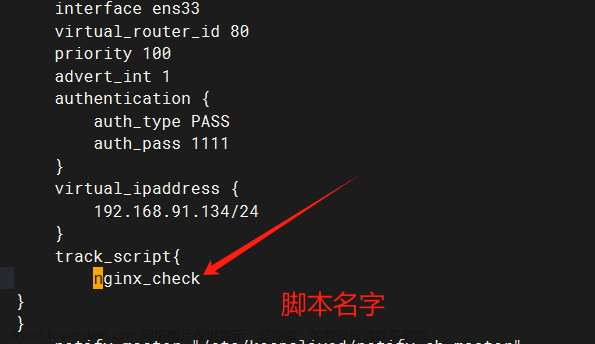

vrrp_script check_nginx {

script "/etc/keepalived/check_nginx_status.sh"

interval 5

}

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 80

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

172.16.147.100/24

}

track_script {

check_nginx

}

}

Note: nginx must be started before keepalived.

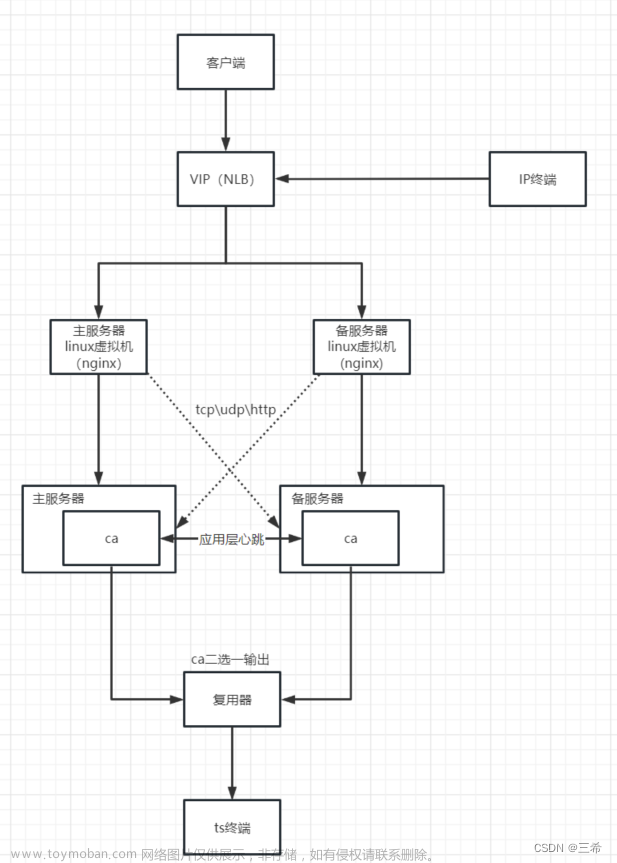

3. LVS_Director + KeepAlived

| Hostname | IP | OS | Role |
|---|---|---|---|
| client | 172.16.147.1 | mac | Client |
| lvs-keepalived-master | 172.16.147.154 | CentOS 7.5 | Director |
| lvs-keepalived-slave | 172.16.147.155 | CentOS 7.5 | Backup director |
| test-nginx1 | 172.16.147.153 | CentOS 7.5 | web1 |
| test-nginx2 | 172.16.147.156 | CentOS 7.5 | web2 |
| vip | 172.16.147.101 | | |


What KeepAlived does in this project:

1. Manages the IPVS routing table (including health checks on the real servers)

2. Provides HA for the director

http://www.keepalived.org

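Use absolute paths for any external script or command that keepalived runs.

Implementation steps:

1. Install the software on the master and backup directors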

[root@lvs-keepalived-master ~]# yum -y install ipvsadm keepalived

[root@lvs-keepalived-slave ~]# yum -y install ipvsadm keepalived

2. Keepalived

lvs-master

[root@lvs-keepalived-master ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id lvs-keepalived-master # use lvs-keepalived-slave on the backup

}

vrrp_instance VI_1 {

state MASTER

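interface ens33 # interface the VIP binds to

virtual_router_id 80 # VRID; identical on master and backup of the same cluster

priority 100 # priority of this node; use 50 on the backup

advert_int 1 # VRRP advertisement interval; default 1s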

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

172.16.147.101/24 # several VIPs may be listed

}

}

virtual_server 172.16.147.101 80 { # LVS configuration

delay_loop 3

lb_algo rr # LVS scheduling algorithm

lb_kind DR # LVS forwarding mode (direct routing)

nat_mask 255.255.255.0

protocol TCP # transport protocol of the virtual service

real_server 172.16.147.153 80 {

weight 1

inhibit_on_failure # when this node fails, set its weight to 0 instead of removing it from IPVS

TCP_CHECK { # health check

connect_port 80 # port to check

connect_timeout 3 # connection timeout, in seconds

}

}

real_server 172.16.147.156 80 {

weight 1

inhibit_on_failure

TCP_CHECK {

connect_timeout 3

connect_port 80

}

}

}

[root@lvs-keepalived-slave ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id lvs-keepalived-slave

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

nopreempt # non-preemptive mode

virtual_router_id 80

priority 50

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

172.16.147.101/24

}

}

virtual_server 172.16.147.101 80 {

delay_loop 3

lb_algo rr

lb_kind DR

nat_mask 255.255.255.0

protocol TCP

real_server 172.16.147.153 80 {

weight 1

inhibit_on_failure

TCP_CHECK {

connect_port 80

connect_timeout 3

}

}

real_server 172.16.147.156 80 {

weight 1

inhibit_on_failure

TCP_CHECK {

connect_timeout 3

connect_port 80

}

}

}

3. Start KeepAlived (on both master and backup)

[root@lvs-keepalived-master ~]# systemctl start keepalived

[root@lvs-keepalived-master ~]# systemctl enable keepalived

[root@lvs-keepalived-master ~]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.16.147.101:80 rr persistent 20

-> 172.16.147.153:80 Route 1 0 0

-> 172.16.147.156:80 Route 0 0 0
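For comparison, the virtual_server block above corresponds roughly to the ipvsadm rules that one would otherwise add by hand. The sketch below only prints the commands; print_ipvs_rules is a hypothetical helper, and the flags shown are the standard ipvsadm ones (-A add service, -t TCP service, -s scheduler, -a add real server, -r real server, -g direct routing, -w weight).

```shell
#!/bin/bash
# Sketch only: print_ipvs_rules is a hypothetical helper; it prints commands
# and does not change the IPVS table.

# $1 = VIP:port, remaining arguments = real servers as IP:port
print_ipvs_rules() {
    vip=$1
    shift
    echo "ipvsadm -A -t $vip -s rr"                # virtual service, round robin
    for rs in "$@"; do
        echo "ipvsadm -a -t $vip -r $rs -g -w 1"   # real server, DR mode, weight 1
    done
}

print_ipvs_rules 172.16.147.101:80 172.16.147.153:80 172.16.147.156:80
```

With keepalived managing IPVS these commands are not run manually; keepalived additionally removes or zero-weights real servers whose health check fails.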

4. Configure all real servers (nginx1, nginx2)

Set up the web servers and test every real server.

[root@test-nginx1 ~]# yum install -y nginx

[root@test-nginx2 ~]# yum install -y nginx

[root@test-nginx1 ~]# echo "ip addr add dev lo 172.16.147.101/32" >> /etc/rc.local

[root@test-nginx1 ~]# echo "net.ipv4.conf.all.arp_ignore = 1" >> /etc/sysctl.conf

[root@test-nginx1 ~]# echo "net.ipv4.conf.all.arp_announce = 2" >> /etc/sysctl.conf

[root@test-nginx1 ~]# sysctl -p

[root@test-nginx1 ~]# echo "web1..." >> /usr/share/nginx/html/index.html

[root@test-nginx1 ~]# systemctl start nginx

[root@test-nginx1 ~]# chmod +x /etc/rc.local
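Because a real server that still answers ARP for the VIP will break DR mode, it can help to verify the two kernel settings after sysctl -p. A minimal sketch; rs_arp_ok is a hypothetical helper that reads lines in the key = value format printed by sysctl.

```shell
#!/bin/bash
# Sketch only: rs_arp_ok is a hypothetical helper.

# Read "key = value" lines on stdin and succeed only if both ARP settings
# used above for LVS-DR are present:
#   arp_ignore = 1    answer ARP only for addresses on the receiving interface
#   arp_announce = 2  use the best matching local address as the ARP source
rs_arp_ok() {
    awk -F' *= *' '
        $1 == "net.ipv4.conf.all.arp_ignore" && $2 == 1 { ignore = 1 }
        $1 == "net.ipv4.conf.all.arp_announce" && $2 == 2 { announce = 1 }
        END { exit !(ignore && announce) }'
}

# Example on a real server:
#   sysctl net.ipv4.conf.all.arp_ignore net.ipv4.conf.all.arp_announce | rs_arp_ok \
#       && echo "ARP settings OK"
```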

Testing the LB cluster

All directors and real servers working normally

Failure and recovery of the master director

MySQL+Keepalived

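Automatic failover with Keepalived + MySQL

Project environment:

VIP 192.168.246.100

mysql1 192.168.246.162 keepalived-master

mysql2 192.168.246.163 keepalived-slave

I. MySQL master-master replication (no shared storage; data is kept on local storage)

II. Install keepalived

III. keepalived master and backup configuration files

IV. MySQL status check script /etc/keepalived/keepalived_check_mysql.sh

V. Testing and diagnosis

Implementation steps:

I. MySQL master-master replication

II. Install keepalived (on both machines)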

[root@mysql-keepalived-master ~]# yum -y install keepalived

[root@mysql-keepalived-slave ~]# yum -y install keepalived

III. keepalived master and backup configuration files

Configuration on the master, 192.168.246.162

[root@mysql-keepalived-master ~]# cp /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.bak

[root@mysql-keepalived-master ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id master

}

vrrp_script check_run {

script "/etc/keepalived/keepalived_check_mysql.sh"

interval 5

}

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 89

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.246.100/24

}

track_script {

check_run

}

}

Configuration on the slave, 192.168.246.163

[root@mysql-keepalived-slave ~]# cp /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.bak

[root@mysql-keepalived-slave ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id backup

}

vrrp_script check_run {

script "/etc/keepalived/keepalived_check_mysql.sh"

interval 5

}

vrrp_instance VI_1 {

state BACKUP

nopreempt

interface ens33

virtual_router_id 89

priority 50

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.246.100/24

}

track_script {

check_run

}

}

IV. MySQL status check script /etc/keepalived/keepalived_check_mysql.sh (the same script on both MySQL hosts)

Version 1: simple usage:

[root@mysql-keepalived-master ~]# vim /etc/keepalived/keepalived_check_mysql.sh

#!/bin/bash

/usr/bin/mysql -uroot -p'QianFeng@2019!' -e "show status" &>/dev/null

if [ $? -ne 0 ] ;then

# service keepalived stop

systemctl stop keepalived

fi

[root@mysql-keepalived-master ~]# chmod +x /etc/keepalived/keepalived_check_mysql.sh
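The script above puts the root password on the command line, where it is visible in the process list. One alternative is sketched below, assuming a credentials file at /etc/keepalived/mysql-check.cnf (a hypothetical path) that contains a [client] section with user and password. MYSQL_PING_CMD and mysql_alive are likewise hypothetical names.

```shell
#!/bin/bash
# Sketch only: MYSQL_PING_CMD, mysql_alive and the .cnf path are assumptions.
# Assumed file /etc/keepalived/mysql-check.cnf, readable by root only:
#   [client]
#   user=...
#   password=...

# Succeed if the local MySQL server answers a ping.
# MYSQL_PING_CMD may be overridden (for example in tests).
mysql_alive() {
    ${MYSQL_PING_CMD:-mysqladmin --defaults-extra-file=/etc/keepalived/mysql-check.cnf ping} \
        >/dev/null 2>&1
}

# As a keepalived check script, the last line would be:
#   mysql_alive
```

Note that mysqladmin ping reports whether the server is running; it returns 0 even when access is denied, so it checks liveness, not that a particular account can log in.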

==========================================================================

Start keepalived on both sides

Method 1:

[root@mysql-keepalived-master ~]# systemctl start keepalived

[root@mysql-keepalived-master ~]# systemctl enable keepalived

Method 2:

# /etc/init.d/keepalived start

# /etc/init.d/keepalived start

# chkconfig --add keepalived

# chkconfig keepalived on

Note: any machine can act as the client. When testing, remember to check whether the MySQL user is allowed to log in remotely.

Supplement

Configuring LVS with keepalived

! Configuration File for keepalived

global_defs {

router_id lvs-master

}

vrrp_instance VI_1 {

state MASTER

nopreempt # note: keepalived only honors nopreempt when state is BACKUP

interface em1

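# mcast_src_ip: source address for multicast advertisements; defaults to the primary IP of the bound interface

mcast_src_ip 10.3.131.50

# unicast_src_ip: if the upstream switch of the two nodes blocks multicast, VRRP advertisements must be sent by unicast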

# unicast_src_ip xx.xx.xx.xx

# unicast_peer {

# xx.xx.xx.xx

# }

virtual_router_id 80

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

10.3.131.221

}

# notify_master: script run when this node becomes the master

notify_master "/etc/keepalived/mail.sh master"

# notify_backup: script run when this node becomes a backup

notify_backup "/etc/keepalived/mail.sh backup"

# notify_fault: script run when this node enters the fault state

notify_fault "/etc/keepalived/mail.sh fault"

}

virtual_server 10.3.131.221 80 {

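# health-check polling interval, in seconds

delay_loop 6

# scheduling algorithm: round robin

lb_algo rr

# LVS forwarding mode

lb_kind DR

nat_mask 255.255.255.0

# session persistence timeout, in seconds

persistence_timeout 20

# protocol used by the virtual service

protocol TCP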

sorry_server 2.2.2.2 80

real_server 10.3.131.30 80 {

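# weight

weight 1

# when the health check fails, set the weight to 0 instead of removing the server from IPVS

inhibit_on_failure

# script to run once the server is detected as up

notify_up /etc/keepalived/start.sh start

# script to run once the server is detected as down

notify_down /etc/keepalived/start.sh shutdown

# check by URL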

HTTP_GET {

url {

path /index.html

digest 481bf8243931326614960bdc17f99b00

}

# port to check

connect_port 80

# connection timeout, in seconds

connect_timeout 3

# number of retries

nb_get_retry 3

# delay before each retry, in seconds

delay_before_retry 2

}

}

}

Check methods:

HTTP_GET: URL check

TCP_CHECK: port check
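The digest value in an HTTP_GET url block is an MD5 hash of the page that the real server returns; keepalived ships a genhash utility for producing it. The same value can presumably be approximated with standard tools, as in this sketch, which assumes the digest covers exactly the response body. page_digest is a hypothetical helper name.

```shell
#!/bin/bash
# Sketch only: page_digest is a hypothetical helper.

# Read a page body on stdin and print its MD5 hex digest.
page_digest() {
    md5sum | awk '{ print $1 }'
}

# Example against one of the real servers from the configuration above:
#   curl -s http://10.3.131.30/index.html | page_digest
```

Whenever the page content changes, the digest in keepalived.conf has to be regenerated, otherwise the health check marks the server as failed.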

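Node configuration

keepalived's built-in notification component is not very friendly, so a custom mail notification is used here.

1. Mail alerts from a shell script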

# yum install -y mailx

# vim /etc/mail.rc

set from=newrain_wang@163.com

set smtp=smtp.163.com

set smtp-auth-user=newrain_wang@163.com

set smtp-auth-password=XXXXXXXXXXXXXX

set smtp-auth=login

set ssl-verify=ignore

# The script

#!/bin/bash

to_email='1161733918@qq.com'

ipaddress=`ip -4 a show dev ens33 | awk '/brd/{print $2}'`

notify() {

mailsubject="${ipaddress} to be $1, VIP moved"

mailbody="$(date +'%F %T'): VRRP transition, $(hostname) switched to $1"

echo "$mailbody" | mail -s "$mailsubject" "$to_email"

}

case $1 in

master)

notify master

;;

backup)

notify backup

;;

fault)

notify fault

;;

*)

echo "Usage: $(basename $0) {master|backup|fault}"

exit 1

;;

esac

2. Configuration files

# master configuration

! Configuration File for keepalived

global_defs {

router_id directory1

}

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 80

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.96.100/24

}

notify_master "/etc/keepalived/script/sendmail.sh master"

notify_backup "/etc/keepalived/script/sendmail.sh backup"

notify_fault "/etc/keepalived/script/sendmail.sh fault"

}

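# Explanation:

# notify_master: script run when this node becomes the master

# notify_backup: script run when this node becomes a backup

# notify_fault: script run when this node enters the fault state

# backup configuration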

! Configuration File for keepalived

global_defs {

router_id directory2

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

nopreempt

virtual_router_id 80

priority 50

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.96.100/24

}

notify_master "/etc/keepalived/notify.sh master"

notify_backup "/etc/keepalived/notify.sh backup"

notify_fault "/etc/keepalived/notify.sh fault"

}

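My own summary:

Two load balancers

master backup

When the master goes down, the backup takes over

The master sends multicast advertisements

If the virtual machine runs version 7.7, systemctl kill keepalived is needed

Instance, interval, authentication

After configuration, the master holds the VIP and the backup does not; this is normal, because the master has not gone down

Write a small script that ties keepalived and nginx together; do this on the master

Put it below the global section of the configuration file; its interval should be slightly longer than the master's multicast interval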

vrrp_script check {

script "/etc/keepalived/script/nginx_check.sh"

interval 2

}

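Test: make sure nginx is running, otherwise the IP will not fail over

Test result:

The format must be strictly correct; no restart is needed after writing the script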

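Configuring LVS with keepalived

master error real-server1 real-server2

Configure keepalived on the master machine

and add an IP address or a network interface

The other three machines need the VIP configured on lo:

ip a a 192.168.91.134/32 dev lo

Keep the real servers silent (no ARP replies for the VIP) so that the director is the one that receives the traffic

On the other three machines:

[root@real-server1 ~]# ip addr add dev lo 172.16.147.200/32 # bind the VIP on the lo interface

[root@real-server1 ~]# echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore # ignore ARP requests for addresses not on the receiving interface

[root@real-server1 ~]# echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce # use the best matching local address as the ARP source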

Also install and start nginx on each of them and write the three test pages (one per machine).

Then test.
